Voice-activated systems, interactive voice response (IVR) technology, and sophisticated chatbots are becoming more and more common, which makes rigorous testing crucial to a seamless user experience. This guide to Voice and Conversational Interface Testing is for anyone working in technology, development, or quality assurance, especially those whose users will interact with voice-enabled systems.

What Readers Shouldn’t Miss

📌 Security Considerations in Voice and Conversational Interface Testing

📌 Techniques used in testing Natural Language Understanding (NLU) in Conversational Interfaces

📌 Best Practices for Testing Multi-turn Dialogues in Conversational Interfaces

📌 Basic Components of Voice and Conversational Interface Testing

📌 Key Challenges in Testing Voice and Conversational Interfaces

📌 Why is it Crucial to Test Voice and Conversational Interfaces

📌 Evaluating Conversational and Voice Interfaces for Quality

What is Voice and Conversational Interface Testing?

Voice and conversational interface testing is a key part of ensuring that voice-enabled technologies, such as chatbots, voice-activated systems, and interactive voice response (IVR) systems, work correctly and offer a good user experience. To ensure smooth interactions between user and interface, this testing process assesses a number of factors, including voice quality, intelligibility, and overall performance.

Why is testing important for Voice and Conversational Interfaces

In order to guarantee the efficiency and dependability of voice and conversational interfaces, testing is essential. Testing is necessary to provide users with seamless and secure voice experiences, from confirming the accuracy of voice biometrics to validating the functionality of Interactive Voice Response (IVR) systems.

Interactive Voice Response Testing (IVR):

- Ensures smooth functionality of automated phone systems.

- Validates prompt accuracy and response handling.

Voice Biometrics Testing:

- Verifies the accuracy and security of voice-based authentication.

- Checks for false acceptance and rejection rates.
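
As a rough illustration of the last check, the false acceptance rate (FAR) and false rejection rate (FRR) can be computed from a set of scored verification attempts. This is a minimal sketch, not the API of any real biometric engine: the `Attempt` record, the similarity scores, and the 0.6 threshold are all made-up assumptions.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    score: float   # similarity score from a (hypothetical) voice-biometric engine
    genuine: bool  # True if the speaker really is the enrolled user


def far_frr(attempts, threshold):
    """Return (false acceptance rate, false rejection rate) at a threshold."""
    impostors = [a for a in attempts if not a.genuine]
    genuines = [a for a in attempts if a.genuine]
    # An impostor scoring at or above the threshold is wrongly accepted.
    false_accepts = sum(a.score >= threshold for a in impostors)
    # A genuine user scoring below the threshold is wrongly rejected.
    false_rejects = sum(a.score < threshold for a in genuines)
    far = false_accepts / len(impostors) if impostors else 0.0
    frr = false_rejects / len(genuines) if genuines else 0.0
    return far, frr


# Illustrative data only: four genuine attempts and four impostor attempts.
attempts = [
    Attempt(0.91, True), Attempt(0.78, True), Attempt(0.55, True), Attempt(0.88, True),
    Attempt(0.30, False), Attempt(0.62, False), Attempt(0.10, False), Attempt(0.45, False),
]

far, frr = far_frr(attempts, threshold=0.6)
print(f"FAR={far:.2f} FRR={frr:.2f}")  # prints "FAR=0.25 FRR=0.25"
```

Raising the threshold generally trades a lower FAR for a higher FRR, so it is worth tracking both numbers across several thresholds rather than at a single value.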

Testing Voice Quality:

- Ensures clear and natural voice output for user interactions.

- Validates sound clarity and intelligibility.

Voice Testing for Tech Products:

- Verifies compatibility and performance across different devices.

- Ensures consistency in voice recognition and response times.

Testing is crucial for Voice and Conversational Interfaces because it:

- Validates the functionality of Interactive Voice Response (IVR) systems.

- Verifies the accuracy and security of voice biometrics.

- Ensures clear and natural voice output quality.

- Validates compatibility and performance across various devices.

Key challenges in testing Voice and Conversational Interfaces

Since voice-based conversational interactions take place over a wide range of devices and environments, testing voice and conversational interfaces is far from simple. Among the challenges are:

Voice Over Testing:

- Maintaining a constant speech quality across various settings and devices.

- Checking for problems with compatibility, delay, and audio distortion.

Voice Quality Testing:

- Evaluating the vocal output for intelligibility, naturalness, and clarity.

- Addressing accuracy of speech recognition, echo, and background noise.

Testing Interactive Voice Response (IVR):

- Verifying accurate prompts and system responses.

- Managing multi-step interactions, error recovery, and intricate dialog flows.

Variability in Voice Quality:

- Maintaining a natural and clear voice over a range of platforms, devices, and network environments.

Compatibility and Integration:

- Testing voice functions on a range of browsers, hardware combinations, and operating systems to guarantee smooth operation and integration.

Speech Recognition Accuracy:

- Assessing how well the system can understand and react to a range of speech patterns, human language, and accents.

Environmental Factors:

- Taking into account ambient sounds, echoes, and other factors that may have an impact on speech recognition and user experience.
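
One way to make environmental testing repeatable is to mix recorded noise into clean test utterances at a controlled signal-to-noise ratio (SNR) before feeding them to the recognizer. The sketch below is an assumption about how that could be done, not a method the article prescribes; the sample lists stand in for real audio, and `mix_at_snr` is a hypothetical helper name.

```python
import math
import random


def rms(samples):
    """Root-mean-square level of a list of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_at_snr(speech, noise, snr_db):
    """Scale the noise so the speech-to-noise ratio equals snr_db, then add it."""
    if len(speech) != len(noise):
        raise ValueError("speech and noise must have the same length")
    # SNR(dB) = 20 * log10(rms_speech / rms_noise), solved for the noise gain.
    gain = rms(speech) / (rms(noise) * 10 ** (snr_db / 20))
    return [s + gain * n for s, n in zip(speech, noise)]


# Stand-in signals: a 440 Hz tone as "speech" and uniform noise as "traffic".
random.seed(0)
speech = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
noise = [random.uniform(-1, 1) for _ in range(1600)]

# Build progressively noisier versions of the same utterance.
noisy_versions = {snr: mix_at_snr(speech, noise, snr) for snr in (20, 10, 0)}
```

Running the same utterances through the recognizer at each SNR level shows how quickly accuracy degrades as the environment gets louder.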

Basic Components of Voice and Conversational Interface Testing

Comprehensive testing is necessary to ensure a smooth conversational flow. Below is a summary of the main points to concentrate on:

Speech Recognition (ASR):

- Accuracy: Does the technology accurately comprehend spoken words and commands?

- Wake Word Detection: Does it prevent false positives from background noise by only activating when the desired wake word (such as "Alexa" or "Hey Google") is heard?

- Background Noise: Is the system able to comprehend speech in noisy settings (such as those with music or traffic)?

- Accents and Dialects: Can the system understand a variety of accents, dialects, and speaking styles?
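
A common way to put a number on the accuracy questions above is word error rate (WER): the word-level edit distance between what was said and what the recognizer returned, divided by the number of words actually spoken. The article does not name a metric, so treat WER as an assumption here; the transcripts in the usage lines are invented.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# One dropped word and one substituted word out of five reference words.
print(word_error_rate("set an alarm for seven", "set alarm for eleven"))  # 0.4
```

Computing WER separately per accent group or noise condition makes it easier to see where recognition quality drops, instead of hiding it in one overall average.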

Natural Language Understanding (NLU):

- Intent Recognition: Is the system able to recognize the user's intended activity (such as playing music or setting an alarm)?

- Entity Extraction: Is it possible for the system to recognize important information from the user's request, such as the alarm's precise time or the music's genre?

- Dialogue Flow: Does the discussion proceed organically, with suitable questions and answers?

Speech Synthesis (TTS):

- Clarity & Naturalness: Does the speech produced by the system sound natural and clear?

- Pronunciation: Is the system appropriately pronouncing names and words?

- Emotional Tone: Is it possible for the system to use voice inflection to convey various emotions?

Usability:

- Task Completion: Is the task the user has requested—like setting an alarm or playing music—successfully completed by the system?

- Error Handling: Does the system handle mistakes or miscommunications with grace?

- Recoveries: Is it simple for the user to fix errors or reword requests?

Testing Voice-Activated Systems and Chatbots

Chatbots and voice-activated devices are quickly multiplying. Technologies like voice commands for ordering takeout and conversational interfaces for customer service are revolutionizing our interactions with the digital world. Modern interfaces often act as virtual personal assistants, offering tailored responses and services.

Testing the happy path ensures the interface functions optimally under ideal conditions:

- Recognizing User Intent: Voice interactions are more free-form than online interfaces, which have distinctly labeled buttons and choices. Testing makes sure the system understands spoken instructions and phrases accurately and captures the user's intent. Can you picture requesting the "weather forecast" and receiving a carrot cake recipe in return? 🎤

- Understanding the Nuances of Speech: Speech recognition is never free of errors. Testing finds out how well the system responds to diverse speech styles, accents, and background noise. Misunderstandings shouldn't result from mumbling! 🗣️

- Creating Natural Conversations: A chatbot that seems awkward to converse with can turn people off significantly. Natural language understanding (NLU) is tested to make sure the system can comprehend user intent and respond in a way that is engaging and seems natural. 💬

- Testing the Voice's Functionality: Chatbots and voice-activated devices are frequently entry points for finishing complex tasks. Testing makes sure the system can comprehend the user's request and carry it out properly. Providing helpful responses enhances user satisfaction and trust in the interface. 🛠️

- Beyond Precision: Advanced systems are not immune to misinterpretation. Testing makes sure the system handles errors graciously and gives users clear cues to help them rephrase their requests or fix problems. A good system should maintain user control over the interaction by facilitating easy recovery. 🔄
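
The graceful-recovery behaviour in the last point can be pinned down as a small policy that tests can exercise directly. The sketch below is illustrative only: `NluResult`, the 0.6 confidence threshold, the limit of two reprompts, and the reply wording are all assumptions rather than values from any particular platform.

```python
from dataclasses import dataclass


@dataclass
class NluResult:
    intent: str
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical NLU engine


CONFIDENCE_THRESHOLD = 0.6  # assumed cut-off for trusting the recognized intent
MAX_REPROMPTS = 2           # assumed number of retries before escalating


def respond(result: NluResult, failed_attempts: int) -> str:
    """Act on confident results, reprompt on doubtful ones, escalate if stuck."""
    if result.confidence >= CONFIDENCE_THRESHOLD:
        return f"OK, handling '{result.intent}'."
    if failed_attempts < MAX_REPROMPTS:
        return "Sorry, I didn't catch that. Could you rephrase?"
    return "I'm still having trouble. Let me connect you to a person."


# A low-confidence result on the first attempt should trigger a reprompt.
print(respond(NluResult("get_weather", 0.3), failed_attempts=0))
```

Tests can then assert that a doubtful result never triggers an action, and that the user is offered a way out after repeated failures.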

Types of Conversational Interfaces

Conversational user interfaces are revolutionizing how we interact with technology. Imagine a world where you can order dinner, check the weather, or book a flight simply by talking to your phone or computer. As technology advances, we're seeing more conversation-driven systems shaping digital interactions. The main types are:

- Chatbots: You can hold polite, human-like conversations with these, much as you would with an assistant. Chatbots are everywhere, whether they're assisting you with customer service questions, placing a pizza order, or just having a conversation about the weather!

- Voice-Activated Interfaces: While visual interfaces are common, the trend is shifting towards more intuitive voice user interfaces. Magic occurs when you speak and they listen! With just your voice, these devices can play music, answer queries, operate your smart home, and much more. Unlike traditional graphical interfaces with buttons and menus, voice interfaces rely on natural language processing to understand and respond to user commands. Examples: Amazon Echo, Amazon Alexa, Google Assistant.

- Interactive Voice Response (IVR) Systems: An IVR system is what you reach when you phone a business and a robotic voice prompts you to press buttons or speak commands. While voice interfaces lack body language, they compensate through tone and expression in speech.

- Virtual assistants: An advanced form of voice-activated systems and chatbots. They can send messages, handle your calendar, complete activities, and even predict your requirements based on previous exchanges. It's similar to carrying a personal helper!

- AI-powered messaging platforms: To improve user experiences, chatbots driven by conversational AI are being added to platforms such as Facebook Messenger, WhatsApp, and Slack, as well as to various mobile applications.

Use specific tools for Voice and Conversational Interfaces

Let's look at a few helpful resources that make creating and testing voice and conversational interfaces easier:

With this tool, you may use natural language processing to create chatbots and conversational interfaces. It works well for fostering thoughtful and participatory dialogues.

⏺Amazon Lex:

Do you want to provide your mobile apps the ability to talk via text and voice? Engaging interactions are powered by speech recognition and natural language comprehension provided by Amazon Lex, a part of AWS.

This framework provides a full range of tools and services for creating conversational agents if you're interested in creating bots for different platforms such as Teams, Skype, and others.

Watson Assistant uses AI and machine learning to improve user interactions and offer insightful data, from building virtual assistants to assessing consumer sentiment.

A well-crafted interface, designed by a skilled conversation designer, can significantly enhance user experiences across many communication channels.

This solution facilitates the management of intricate dialog flows and guarantees seamless user interactions with conversational interfaces.

Common testing methodologies used for Voice and Conversational Interfaces

Testing is like giving voice and conversational interfaces a comprehensive check-up to make sure they're in excellent condition! Now let's explore some popular testing techniques that help make sure these interfaces function flawlessly:

- Functional testing: This technique verifies that all of your interface's buttons, voice commands, and features operate as they should. It's similar to double-checking that your voice assistant sets your alarms appropriately or that your chatbot answers when you say "Hello".

- Usability Testing: Nobody enjoys wrestling with a confusing interface, and usability testing helps avert that! It assesses how simple and intuitive your interface is, and how easily people can engage with it without annoyance. Usability tests can involve observing users as they interact with the system.

- Performance testing: Picture your voice recognition taking an eternity to work or your chatbot suddenly slowing down. Performance testing ensures that your voice user interfaces are snappy and quick, easily managing numerous users and tasks.

- Security testing: Everyone wants their data and chats to remain private and safe. Verify through security testing whether your voice user interfaces are protected against unwanted access, user data theft, and general system failure.

- Compatibility testing: Given the wide variety of platforms and devices available today, you need to ensure that your interface functions properly across a variety of devices, browsers, and operating systems. No matter how or where a person accesses your interface, compatibility testing guarantees that they receive a consistent experience.
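
For the performance testing point above, a simple starting place is to time each request and report a high percentile rather than only the average, since a few very slow responses are what users notice. This is a minimal sketch: `handler` stands in for whatever function or client call sends an utterance to your system, and the fake handler below is purely a placeholder.

```python
import statistics
import time


def measure_latencies(handler, utterances):
    """Call handler once per utterance and return each latency in milliseconds."""
    latencies_ms = []
    for utterance in utterances:
        start = time.perf_counter()
        handler(utterance)
        latencies_ms.append((time.perf_counter() - start) * 1000)
    return latencies_ms


def p95(latencies_ms):
    """95th percentile latency; needs at least two measurements."""
    # quantiles(..., n=20) returns 19 cut points; the last is the 95th percentile.
    return statistics.quantiles(latencies_ms, n=20)[-1]


def fake_handler(utterance):
    """Placeholder for the real system under test (an assumption for this sketch)."""
    return utterance.upper()


latencies = measure_latencies(fake_handler, ["play music", "set an alarm"] * 50)
print(f"median={statistics.median(latencies):.3f} ms, p95={p95(latencies):.3f} ms")
```

In a real test run, the same measurement would be repeated while many simulated users talk to the system at once, and the percentile compared against an agreed response-time budget.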

Best practices for testing multi-turn dialogues in Conversational Interfaces

Similar to having a seamless chat with a friend, navigating multi-turn dialogues in conversational interfaces calls for the appropriate strategy and a solid grasp of best practices. The following informal yet useful advice can be used to assess multi-turn dialogues:

- Event-Driven Evaluation: Create test cases that mimic how users might interact with your interface by considering real-world scenarios. This guarantees that your interface reacts correctly to various user intents and paths and helps identify any problems.

- Testing for Edge Cases: Don't merely follow the easy routes. Examine edge scenarios, such as when users deviate from the plan or give unexpected input. Check how well your UI handles mistakes and miscommunications, and whether it falls back gracefully.

- Context Retention: When context is maintained, conversations flow easily. Test situations in which users change the subject or revisit earlier interactions. Make sure your interface retains pertinent data throughout sessions so that users may have a consistent and tailored experience.

- Error Handling: Murphy's Law states that anything that can possibly go wrong will. Test situations where errors occur, such as incorrect inputs, system malfunctions, or network outages. Make sure your interface asks for clarification when necessary, displays error messages clearly, and recovers from mistakes without interfering with the flow of the discussion.

- Identification of User Intent: Your interface should be nearly a mind reader. Check how well it interprets user intents across a series of turns. Verify whether it asks users for information that is missing, validates their actions, and predicts their needs based on context cues.

- User Feedback Integration: Make sure your testing procedure includes loops for user feedback. Gather feedback from actual users or beta testers to identify pain points and areas for improvement, and to check that multi-turn interactions meet user expectations. Use these suggestions to improve your interface iteratively.

- Performance and Scalability: Make sure your interface keeps up its best speed and scalability as discussions get longer or more complicated. In order to provide a dependable and consistent experience, test how it manages large numbers of concurrent users, protracted talks, and system resource utilization.
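
Several of the practices above, context retention in particular, can be checked with a scripted conversation: a list of user turns paired with the reply expected at each step. The sketch below assumes a toy `ToyDialog` class as a stand-in for the real system under test; its slot names, cities, and replies are invented for illustration.

```python
class ToyDialog:
    """Hypothetical stand-in for the system under test; keeps slots across turns."""

    def __init__(self):
        self.slots = {}

    def turn(self, text):
        words = text.lower().replace("?", "").split()
        for city in ("paris", "tokyo"):
            if city in words:
                self.slots["city"] = city.title()
        if "tomorrow" in words:
            self.slots["day"] = "tomorrow"
        elif "today" in words:
            self.slots["day"] = "today"
        if "city" not in self.slots:
            return "Which city?"  # ask for the missing information
        day = self.slots.get("day", "today")
        return f"Forecast for {self.slots['city']} {day}."


def run_script(dialog, script):
    """Play each user turn and collect (turn number, user, expected, actual) mismatches."""
    failures = []
    for turn_no, (user, expected) in enumerate(script, start=1):
        actual = dialog.turn(user)
        if actual != expected:
            failures.append((turn_no, user, expected, actual))
    return failures


# Later turns only make sense if earlier context (city, day) is retained.
script = [
    ("What's the weather?", "Which city?"),
    ("Paris", "Forecast for Paris today."),
    ("And tomorrow?", "Forecast for Paris tomorrow."),
    ("What about Tokyo?", "Forecast for Tokyo tomorrow."),
]

print(run_script(ToyDialog(), script))  # prints [] when every turn matches
```

Keeping scripts as plain data makes it easy to add edge cases, such as topic switches or corrections, without touching the test runner.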

Techniques used in testing Natural Language Understanding (NLU) in Conversational Interfaces

Testing Natural Language Understanding (NLU) in conversational applications is like teaching your interface to comprehend and react to users' speech like a pro. Here are some neat methods to become an expert at NLU testing:

- Example Utterance Examination: Throw a range of utterances at your interface, from straightforward commands to intricate sentences. Check how well it understands various user intents and provides accurate responses.

- Test sentences such as "Set a reminder for 2 p.m." or "What's the weather today?"

- Entity Recognition Testing: Verify whether your interface can recognize and extract particular items or information from user inputs. It's similar to requesting your interface to highlight significant information during a conversation.

- Test the identification of entities for names, dates, locations, or product categories.

- Contextual Understanding: Evaluate how well your UI preserves conversational context during various turns. It's similar to making sure your UI retains previous remarks and carries on a productive dialogue.

- Test situations in which users interrupt a discussion to ask further questions, switch subjects, or revisit earlier interactions.

With the use of these strategies, you can make sure that your conversational interface provides a smooth and organic user experience by understanding what users are saying and responding appropriately and contextually.
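
Utterance and entity checks like these lend themselves to a table of test cases: each row holds an utterance, the intent it should map to, and the entities that should be extracted. The sketch below uses a keyword-and-regex `toy_nlu` function purely as a placeholder for a real NLU service; the intent names and entity format are assumptions.

```python
import re

# Matches times such as "2 p.m." or "7am" (a deliberately narrow toy pattern).
TIME_RE = re.compile(r"\b(\d{1,2})\s*([ap])\.?m\b")
INTENT_KEYWORDS = [
    ("remind", "set_reminder"),
    ("alarm", "set_alarm"),
    ("weather", "get_weather"),
]


def toy_nlu(utterance):
    """Placeholder NLU: returns (intent, entities) for an utterance."""
    text = utterance.lower()
    intent = next((name for kw, name in INTENT_KEYWORDS if kw in text), "fallback")
    entities = {}
    match = TIME_RE.search(text)
    if match:
        entities["time"] = f"{match.group(1)} {match.group(2)}m"
    return intent, entities


# (utterance, expected intent, expected entities)
CASES = [
    ("Set a reminder for 2 p.m.", "set_reminder", {"time": "2 pm"}),
    ("What's the weather today?", "get_weather", {}),
    ("Wake me with an alarm at 7am", "set_alarm", {"time": "7 am"}),
    ("Tell me a joke", "fallback", {}),
]


def evaluate(nlu, cases):
    """Return (accuracy, failures) for an NLU callable over labeled cases."""
    failures = []
    for utterance, intent, entities in cases:
        actual = nlu(utterance)
        if actual != (intent, entities):
            failures.append((utterance, actual, (intent, entities)))
    return 1 - len(failures) / len(cases), failures


accuracy, failures = evaluate(toy_nlu, CASES)
print(accuracy, failures)  # prints "1.0 []" for this toy setup
```

Swapping `toy_nlu` for a call to the real system lets the same table track accuracy over time as training data and models change.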

What are the security considerations in Voice and Conversational Interface Testing

Security in voice and conversational interface testing is like locking the front door: it's critical to keeping everything safe. Unlike traditional text-based interfaces, voice interfaces are susceptible to eavesdropping, since anything spoken aloud can be overheard by people or devices nearby.

Here are key practices for ensuring security in voice and conversational interfaces:

1. Data Privacy: Encrypt sensitive data and adhere to privacy laws like CCPA and GDPR.

2. Authentication: Use safe techniques like multi-factor authentication and biometrics for user verification.

3. Secure Communication: Employ HTTPS protocols and encrypt voice/text communications to prevent eavesdropping.

4. Access Control: Set permissions to limit access to authorized functions and data.

5. Vulnerability Testing: Conduct regular tests and audits to identify and fix vulnerabilities promptly.

You're not only checking functionality when you take these security factors into account for voice and conversational user interfaces; you're also preserving user confidence and guaranteeing a dependable and safe experience.
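
Some of these practices can be turned into automated checks. As one small example for the secure communication point, the sketch below flags any configured service endpoint that does not use an encrypted scheme. The endpoint names and URLs are invented, and treating `wss` (secure WebSocket) as acceptable alongside `https` is an assumption for setups that stream audio.

```python
from urllib.parse import urlparse

ALLOWED_SCHEMES = {"https", "wss"}  # wss is an assumption for audio streaming


def insecure_endpoints(endpoints):
    """Return the names of endpoints whose URL scheme is not encrypted."""
    return [
        name
        for name, url in endpoints.items()
        if urlparse(url).scheme not in ALLOWED_SCHEMES
    ]


# Hypothetical configuration for a voice assistant backend.
endpoints = {
    "nlu": "https://nlu.example.com/parse",
    "audio_stream": "wss://audio.example.com/stream",
    "legacy_tts": "http://tts.example.com/speak",
}

print(insecure_endpoints(endpoints))  # prints ['legacy_tts']
```

A check like this only covers configuration; it does not replace the regular vulnerability tests and audits listed above.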

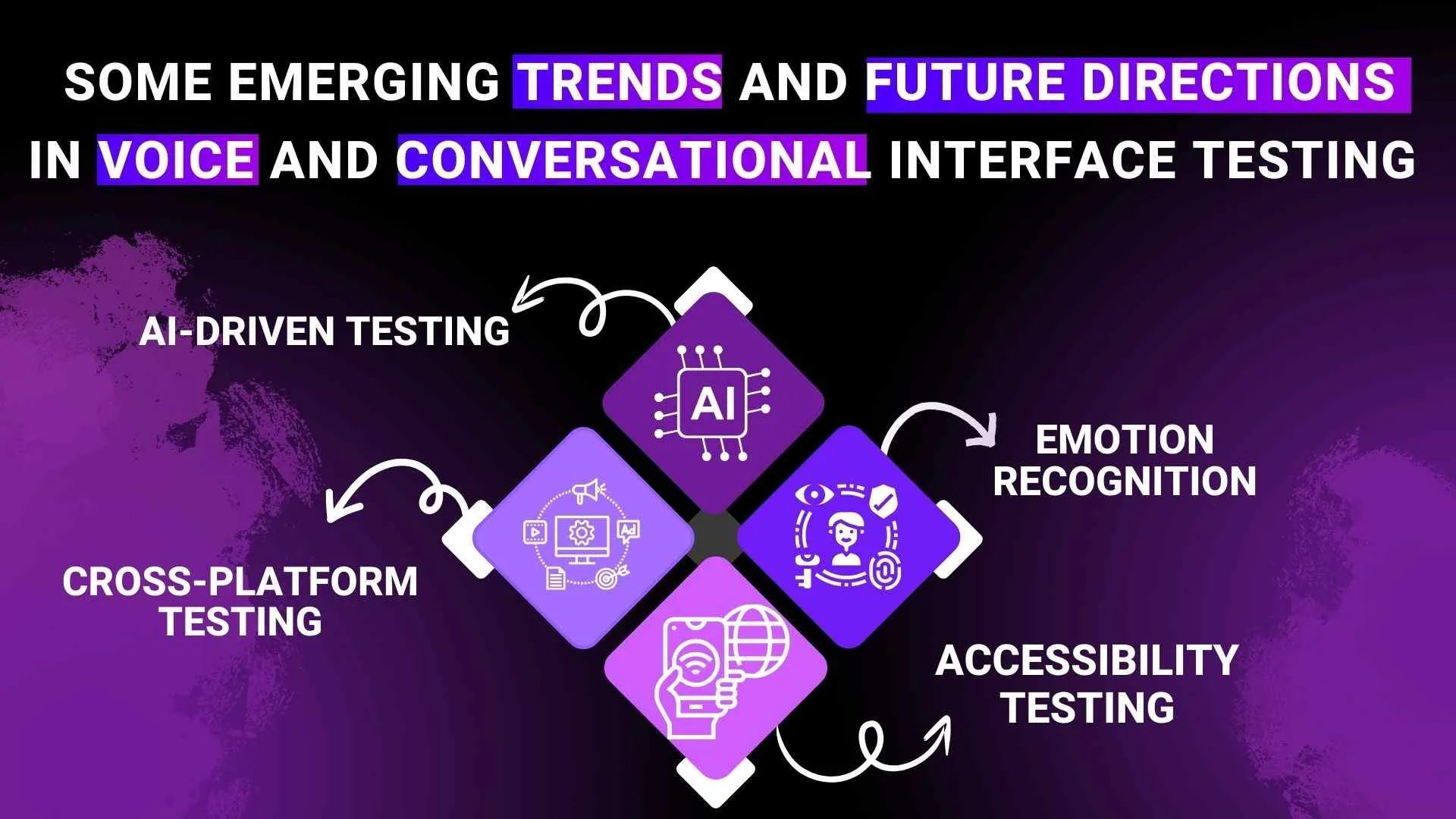

Some emerging trends and future directions in Voice and Conversational Interface Testing

Testing voice and conversational interfaces is developing quickly, opening up new and intriguing possibilities for trends and future directions:

- AI-Driven Testing: As AI-powered testing tools get more intelligent, test case creation is automated, and test coverage for voice interfaces is improved.

- Emotion Recognition: In order to provide more individualized and sympathetic experiences, testing is going beyond words to recognize and assess emotional signs in voice exchanges.

- Cross-Platform Testing: The increasing popularity of smart devices has made it more crucial than ever to test across a range of platforms and gadgets in order to guarantee reliability and compatibility.

- Accessibility Testing: With an emphasis on inclusion, testing is being expanded to cover accessibility features for users with a range of needs, guaranteeing that voice interfaces can be beneficial to all.

Wrapping it up!

As we wrap up this comprehensive exploration of Voice and Conversational Interface Testing, it's clear that a robust testing strategy is vital for ensuring smooth interactions in today's world of AI-driven technologies like Google Home and chatbots. By using available frameworks, testing NLP capabilities, and evaluating chatbot usability and response actions, we can enhance human-computer interaction and reduce average chat times.

As language models evolve and basic conversations become more natural, embracing a chatbot framework that considers chatbot windows and user intents will shape the future of intuitive and engaging conversational experiences. Here's to meaningful interactions and seamless voice interfaces ahead!

People also asked

👉How can we test if the voice interface understands user intent accurately?

We can test if the voice interface understands user intent accurately by analyzing its responses to varied user inputs and confirming that it consistently provides the expected actions or information based on those inputs.

👉How can we ensure the voice interface is accessible for users with disabilities?

We can ensure the voice interface is accessible for users with disabilities by incorporating features such as voice commands, speech recognition, and compatibility with screen readers for visually impaired users.

👉How does evaluating the quality of conversational interfaces impact ethics?

Evaluating the quality of conversational interfaces impacts ethics by ensuring fair and inclusive experiences for all users, avoiding biases in language processing, and respecting user privacy and data protection, thus promoting ethical standards in technology development and usage.

👉What are Voice Interfaces and Chatbots?

Voice interfaces allow users to interact with devices using spoken commands, while chatbots are AI-powered programs that simulate conversation with users, typically through text-based interfaces. Skilled chatbot testers play a crucial role in refining conversational experiences.

👉Which methods can be used to performance-test voice assistants in busy environments?

To performance-test voice assistants in busy environments, methods such as load testing with simulated user interactions, real-world stress testing in crowded settings, and evaluating response times under varying levels of noise and activity can be employed.
