Do you feel that automation completely replaces human expertise in software testing and test automation?🤔 If so, you are probably missing one important point: while automation tools can carry out repetitive tasks and process large volumes with fantastic speed and accuracy, these tools can't fully replace the indispensable role of human insight.

Designing an intelligent test strategy💡, interpreting complicated results, and handling unique or unexpected issues all require expert human involvement. An automated system🛠️ can run predefined tests, but it lacks the subtle understanding and creativity that an experienced tester uses to interpret what happens.

This blog explores why the balance of automation and human expertise is vital in software testing. It emphasizes how human creativity in strategy design, result interpretation, and troubleshooting complex issues plays an indispensable role in the success of testing initiatives.

An Overview of Manual vs. Automated Testing

Software testing has two sides: manual testing and automated testing. In manual testing, a human tester executes test cases one by one. Automated testing uses tools and scripts to run repetitive or high-volume tests quickly and accurately, which ensures consistency and efficiency.

Both are essential testing techniques🛠️. The choice between them depends, among many factors, on project complexity, the objectives of testing, and whether human judgment or speed and accuracy matters more. In today's tech scene, sticking to proven testing protocols is crucial, especially with continuous delivery and continuous testing.

When is Manual Testing the Right Choice✅?

Manual testing is best suited to work that depends on human intuition, creativity, and on-the-spot judgment. It's ideal for exploratory testing, where a tester drives the software with no predefined test cases to find unexpected bugs or usability issues.

UI testing also requires human input in a big way because only human testers can verify the look-and-feel aspect to make sure it aligns with the expectations of the end-users.

Manual testing🙎 is preferred for short-term projects or projects that require ad-hoc testing, where writing and maintaining automated scripts would be counterproductive. Besides, when testing involves subjective feedback, as with usability or user experience, manual testing gives testers insights that automation cannot.

When flexibility and adaptation are needed, as in early-stage development or complex test scenarios, manual testing is the natural choice.

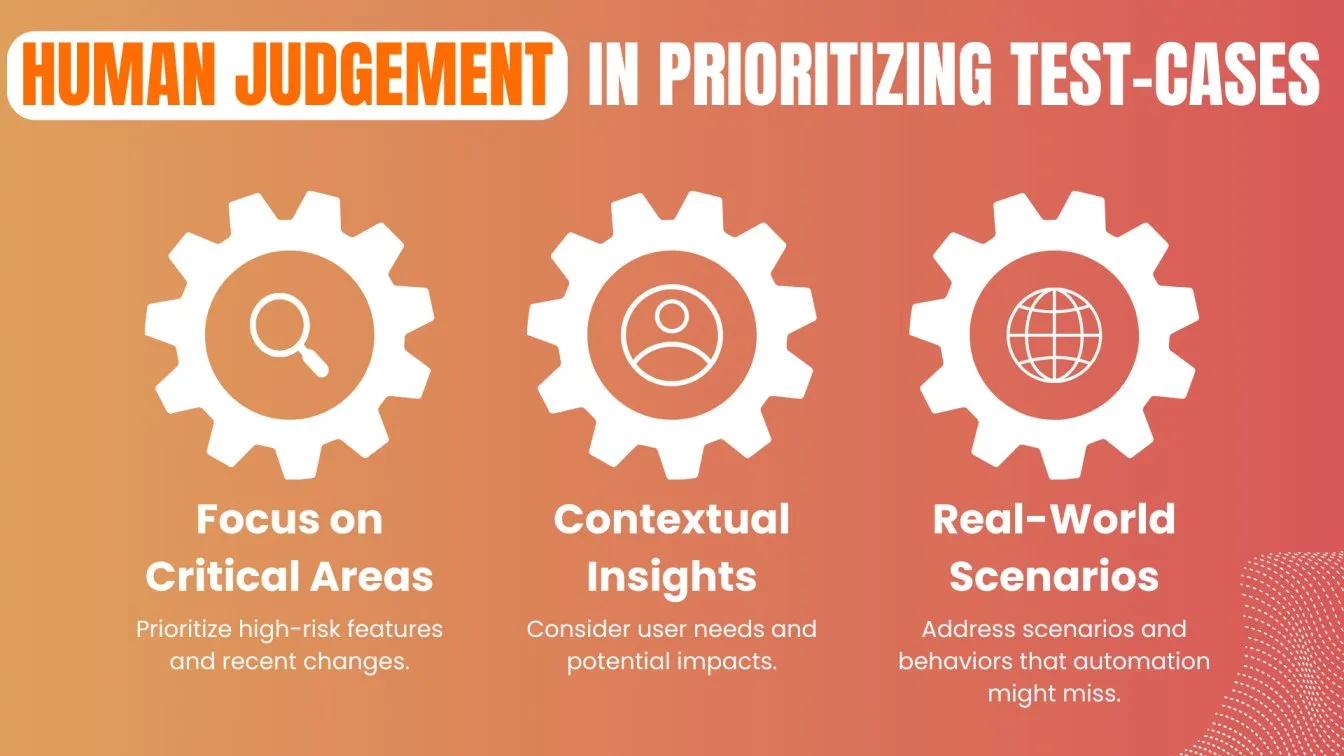

The Role of Human Judgment in Prioritizing Test Cases

In software testing, ⚙️human judgment is important for prioritizing test cases✅ appropriately. While automation tools are good at executing predefined tests, they do not possess the intuition present in experienced testers.

For example, human software testers👨💻 focus on high-risk areas, such as new features or critical components, to ensure that major issues are caught early. They also consider factors such as recent changes and possible impact on users when prioritizing test efforts.

Human experience👨💼 plays an important role in balancing test coverage with practical constraints. Testers assess the most critical aspects of an application against realistic scenarios and user behaviors, which traditional test automation tools may not consider. It is this subtlety that makes software testing effective and meaningful.
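To make the idea concrete, here is a minimal sketch of risk-based prioritization in Python. The `CandidateTest` fields, the 1 to 5 scales, and the weights in `priority_score` are assumptions chosen for illustration, not a standard formula. The ratings themselves are exactly the kind of judgment a tester supplies.

```python
from dataclasses import dataclass


@dataclass
class CandidateTest:
    name: str
    risk: int               # 1 (low) to 5 (high), rated by a tester
    recently_changed: bool  # did the covered code change recently?
    user_impact: int        # 1 (low) to 5 (high), rated by a tester


def priority_score(tc: CandidateTest) -> int:
    # Hypothetical weighting: risk counts double, recent changes add a fixed bonus.
    return 2 * tc.risk + tc.user_impact + (3 if tc.recently_changed else 0)


def prioritize(cases):
    # Highest score first; ties keep their input order because sorting is stable.
    return sorted(cases, key=priority_score, reverse=True)


cases = [
    CandidateTest("profile page layout", risk=1, recently_changed=False, user_impact=2),
    CandidateTest("checkout payment", risk=5, recently_changed=True, user_impact=5),
    CandidateTest("password reset", risk=4, recently_changed=False, user_impact=4),
]
ordered = [tc.name for tc in prioritize(cases)]
print(ordered)
```

With these sample ratings, the recently changed, high-risk checkout test runs first and the low-risk layout check runs last.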

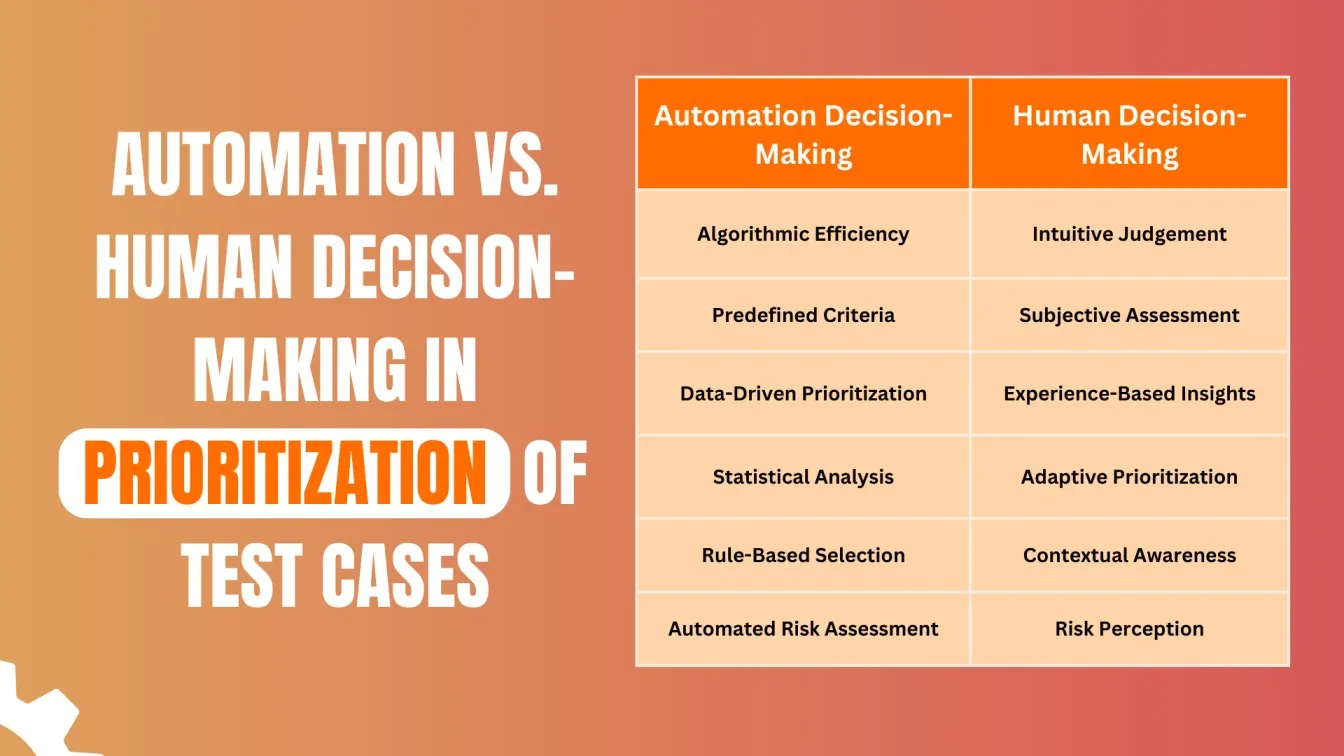

Automation vs. Human Decision-Making in Test Case Prioritization

In modern software development, test case prioritization is crucial for efficient and effective testing. While automation offers significant advantages in speed and efficiency, human decision-making remains essential for keeping testing efforts aligned with overall project goals and objectives.

Seamless Integration with the Development Process:

One of the key benefits of AI-driven test case prioritization is its ability to seamlessly integrate with the development process. This integration can streamline the testing cycle and reduce the risk of defects slipping through the cracks.

Human Expertise for Strategic Decision-Making:

While AI can provide valuable insights and recommendations for test case prioritization, human expertise remains essential for making strategic decisions. This ensures that testing efforts are aligned with the overall goals of the project.

The Benefits of a Hybrid Approach:

AI-powered tools can be used to identify potential high-priority test cases, while human testers can review and refine the list based on their expertise and knowledge of the project. This approach can help organizations achieve a balance between efficiency and effectiveness in their testing efforts.
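As a rough illustration of this hybrid workflow, the sketch below takes a ranking suggested by a tool and applies a tester's decisions on top of it. The function name `apply_human_review` and its `pinned` and `dropped` parameters are hypothetical. The tool ranking is assumed to be a plain list of test names, most important first.

```python
def apply_human_review(tool_ranking, pinned=(), dropped=()):
    """Refine a tool-suggested ranking with a tester's decisions.

    tool_ranking: test names, most important first (assumed tool output).
    pinned: names the tester wants run first, in the tester's own order.
    dropped: names the tester judges irrelevant for this release.
    """
    dropped = set(dropped)
    pinned = [name for name in pinned if name not in dropped]
    rest = [n for n in tool_ranking if n not in dropped and n not in pinned]
    return pinned + rest


tool_ranking = ["search filters", "login", "export to CSV", "checkout"]
final = apply_human_review(
    tool_ranking, pinned=["checkout"], dropped=["export to CSV"]
)
print(final)
```

The tool does the bulk ordering, while the tester keeps the final say. A pinned name does not have to appear in the tool's list, so a tester can also add a test the tool missed.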

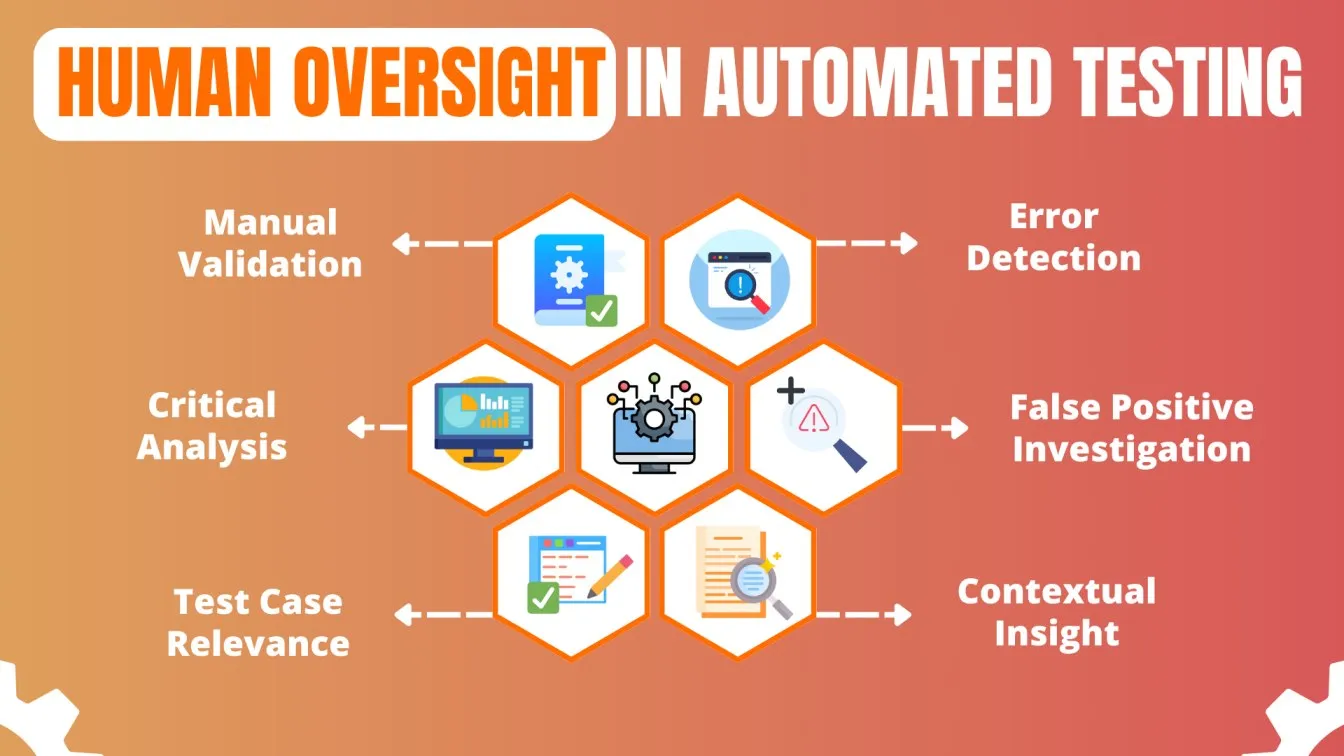

Human Oversight in Automated Testing 🕵️♂️

Even though automated testing speeds up testing and makes it uniform, human oversight is important for maintaining the quality and relevance of those tests. Automation takes care of repetitive tasks, identifies code errors, and validates functional requirements, but it cannot be as flexible and intuitive as a human tester.

Human oversight ensures that the testing remains up to date with the changing business goals, user expectations, and the realities of the real world. Human expertise also comes into the picture when test cases are designed. Only a tester who has deep knowledge of the software functionality and user behavior can be effective at test scripting.

While machines may run the scripts later on, human beings are required to ensure that the test cases reflect the true needs and priorities of the users and the business. Likewise, results from automation tools require interpretation using human judgment. Automation can flag an error or inconsistency, but when the issues are ambiguous or nuanced, human testers step in to interpret the results and draw conclusions.

Analyzing Automation Failures and Edge Cases That Require Human Intervention

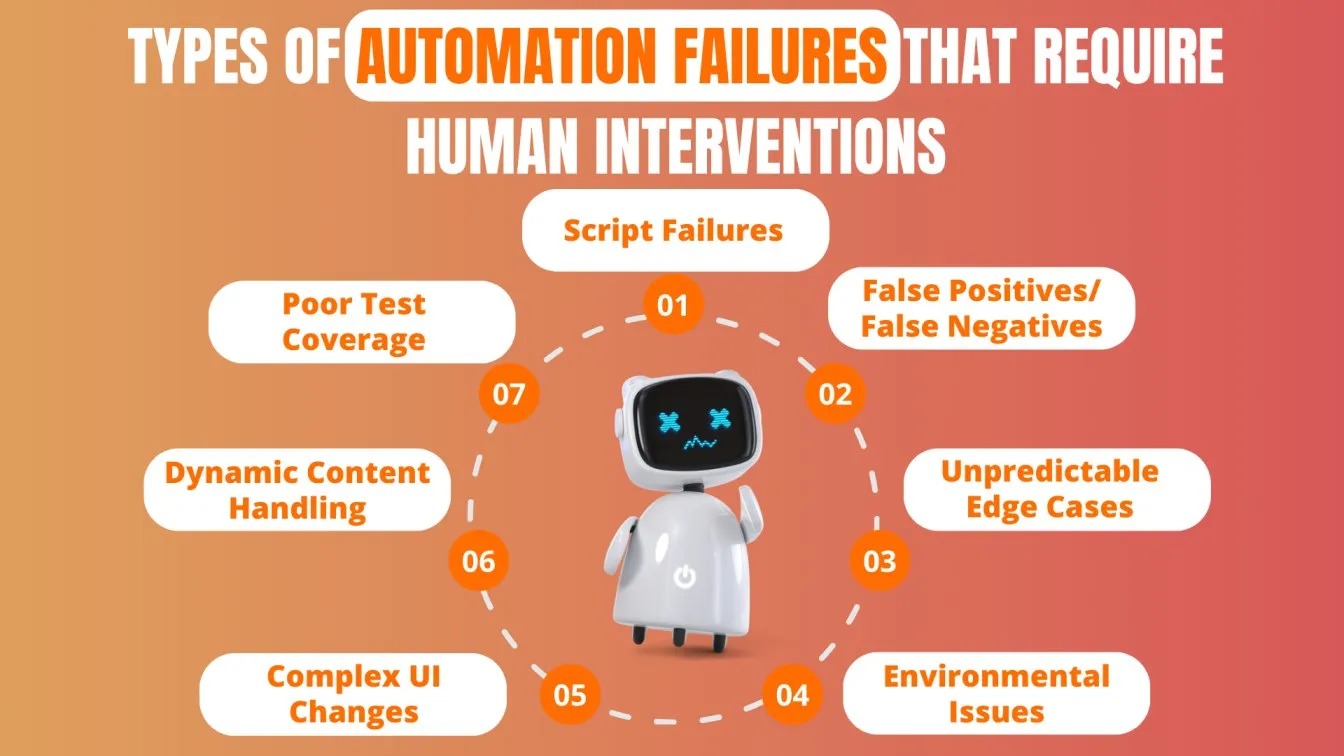

Automation has revolutionized software testing by boosting efficiency and reducing manual effort, but it has limitations. It cannot always reproduce the realistic environments and varied needs of every type of user. Because automated scripts are built on specifically defined rules, they are prone to failure in complex scenarios, with unexpected inputs, or in unique edge cases that were not covered during development.

Such failures often have environmental causes, such as changes in the testing framework or problems integrating with third-party systems. When scripts break or produce false positives, human testers play an important role in diagnosing the problem, making the necessary adjustments, and keeping the testing process reliable.

- Types of Automation Failures that Require Human Intervention:

  - Script Failures:

    - Changes in an application's code or structure may break its test scripts.

    - Renaming or relocating UI elements can disrupt script execution.

  - False Positives/False Negatives:

    - Automated tests can flag bugs where there are none (false positives) or miss problems that do exist (false negatives).

    - Any discrepancy between expected and actual results has to be investigated manually.

  - Unpredictable Edge Cases:

    - Automation tools struggle with conditions that are abnormal or uncommon.

    - Rule-based systems may let unexpected inputs pass even when they are wrong or misleading.

  - Environmental Issues:

    - Network disruptions, configuration mismatches, and the like can cause scripts to fail.

    - Automation tools might not predict or adjust to such environmental changes.

    - Human intervention is needed to diagnose and fix the problem so that tests execute correctly.

  - Complex UI Changes:

    - Major UI changes, such as redesigns or the addition of new elements, break automated tests.

    - Automation depends on specific UI elements, which may have changed or no longer exist.

  - Dynamic Content Handling:

    - Automation falters on content that is updated very regularly, such as news feeds.

    - Manual intervention may be required to fix scripts for such modules or to test dynamic features by hand.

  - Poor Test Coverage:

    - Automated tests may lack coverage in some areas, especially new features and workflows.

    - Unique situations or user behaviors may be missing from the predefined test set.
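One common way to separate genuine failures from false positives and environmental noise is to rerun a test several times and compare the outcomes. The sketch below assumes each test's rerun history is available as a list of booleans. The `classify_runs` name and the three labels are hypothetical.

```python
def classify_runs(outcomes):
    """Classify repeated runs of one test; True means the run passed."""
    if not outcomes:
        raise ValueError("need at least one run")
    if all(outcomes):
        return "pass"
    if not any(outcomes):
        # Consistent failure: a real defect or a broken script.
        return "fail"
    # Mixed results: a flaky test or an unstable environment.
    return "needs-human-review"


history = {
    "test_login": [True, True, True],
    "test_checkout": [False, False, False],
    "test_news_feed": [True, False, True],
}
report = {name: classify_runs(runs) for name, runs in history.items()}
print(report)
```

The script only sorts results into buckets. Deciding whether a consistent failure is a product bug or an outdated script, and why a flaky test is flaky, still falls to a tester.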

- Types of Edge Cases that Require Human Intervention:

  - Extreme Data Conditions:

    - Input Values: Inputs that are much larger than normal, much smaller than normal, or simply unexpected are a common area of failure. Human testers exercise these values to ensure the system behaves correctly.

  - Peculiar User Scenarios:

    - Unusual User Flows: Users might interact with your product in ways nobody anticipated, and these flows need to be tested manually.

  - Integration with External Systems:

    - Third-Party Services: Edge cases such as timeouts or unavailable external APIs and services require human intervention to handle and to validate the responses.
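For extreme data conditions, testers often start from boundary value analysis: probing inputs at and just outside the edges of the valid range. Here is a minimal sketch, assuming an integer input with an inclusive valid range. The `is_valid_quantity` rule (order quantity between 1 and 100) is a made-up example of the system under test.

```python
def boundary_values(minimum, maximum):
    """Return integer inputs at and around the edges of an inclusive range."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = [
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    ]
    # dict.fromkeys drops duplicates (tiny ranges) while keeping the order.
    return list(dict.fromkeys(candidates))


def is_valid_quantity(value, minimum=1, maximum=100):
    # Hypothetical rule under test: order quantity must be within 1..100.
    return minimum <= value <= maximum


results = {v: is_valid_quantity(v) for v in boundary_values(1, 100)}
print(results)
```

A generator like this covers the predictable edges. The tester's contribution is knowing which other values are "just plain unexpected" for this particular product.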

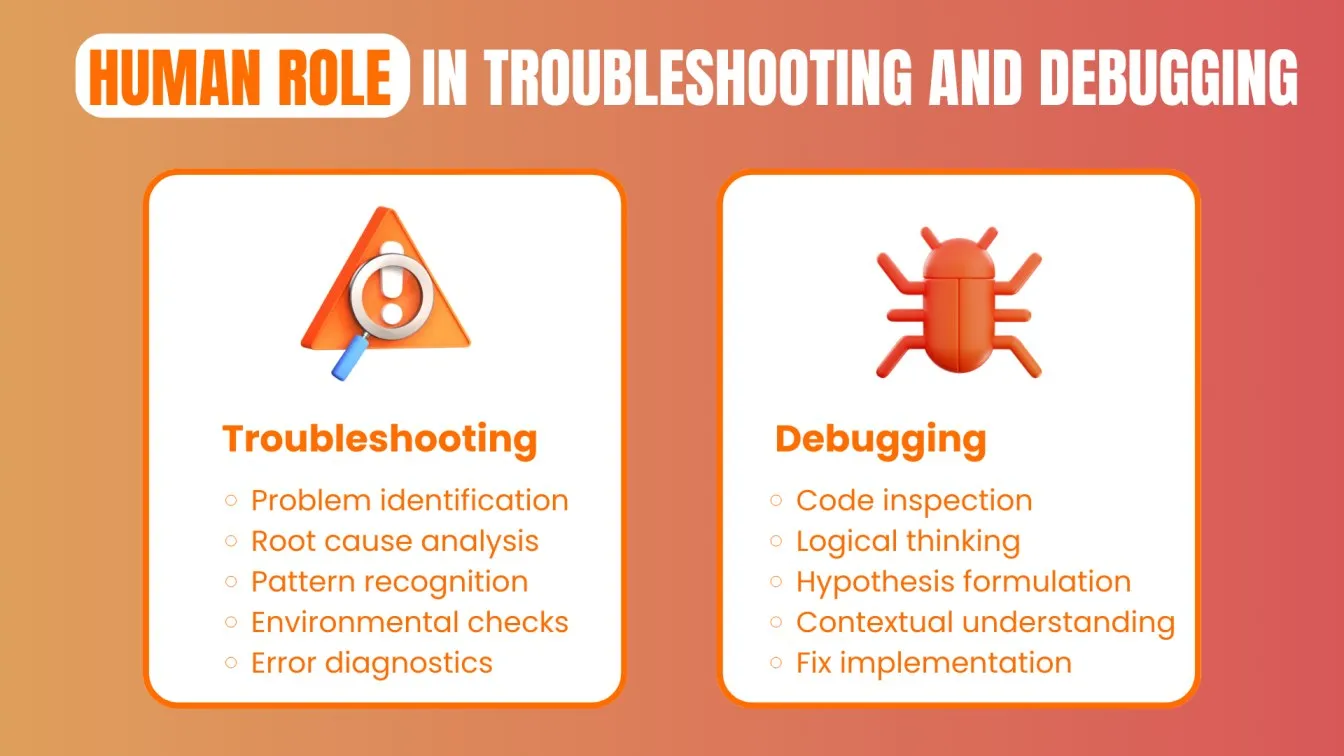

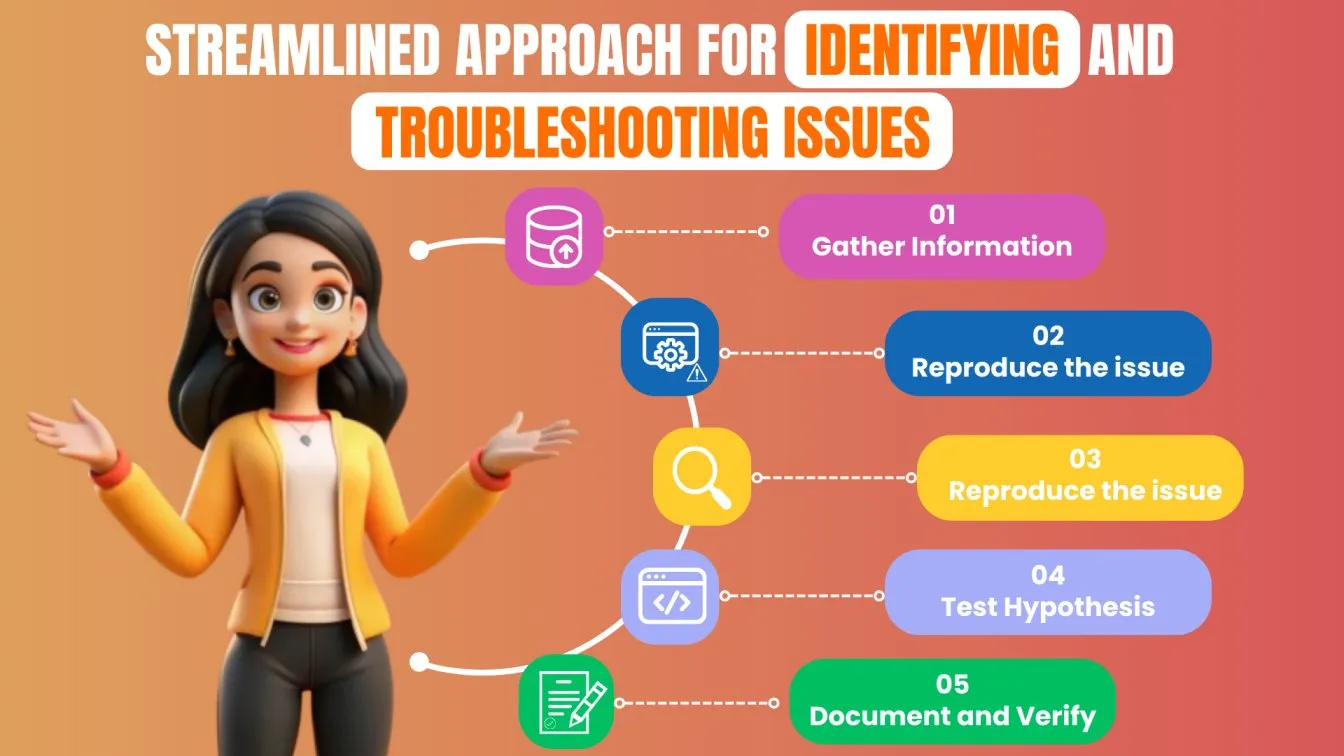

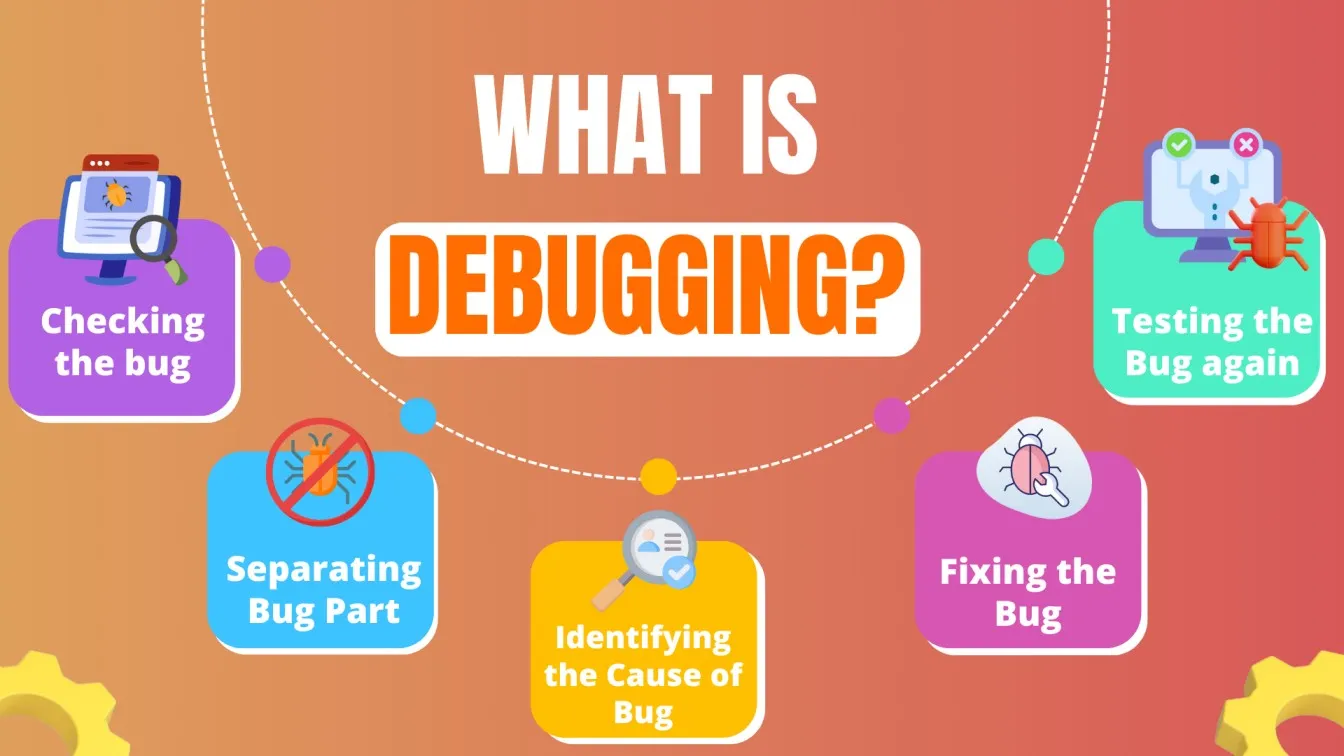

The Human Role in Troubleshooting and Debugging

While automated test tools are great at detecting issues, most of them fail at diagnosing the root cause of a complex problem. For instance, an automated test may be able to flag an error condition.

However, it lacks the finesse to read through error logs and trace where the issue originated. Critical thinking and contextual knowledge, which human testers possess, are among the most essential skills for effective troubleshooting.

- Human Role in Troubleshooting:

  - Log Analysis: Testers study and interpret error logs manually to find problems that automated tools may not explain explicitly.

  - Context Knowledge: Testers apply their knowledge of the application's functionality and user scenarios to diagnose problems in specific contexts.

  - Diagnostics of Complex Issues: Humans handle complex issues beyond the reach of automation, which requires an understanding of complex interactions and system behavior.

  - Cross-Functional Coordination: Testers sit in the middle of communication with developers and other stakeholders, because problems and information gathering frequently cut across more than one system or component.

- Human Role in Debugging:

  - Code Review: Humans manually inspect the source code for logical gaps or inconsistencies that automated testing may not identify.

  - Root Cause Identification: Testers who know the application and its architecture trace failures back to their underlying causes.

  - Problem Reproduction: Humans reproduce problems under different circumstances to understand and isolate them further.

  - Solution Implementation: Developers use their coding skills to fix the problems and verify that the proposed solutions resolve the issues without adding new bugs.
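Log analysis stays a human task, but a small script can shrink the pile a tester has to read. The sketch below groups error lines that differ only in numbers, so repeated failures show up as one entry with a count. It assumes error lines contain the literal marker `ERROR`. The function names and the sample log lines are invented for illustration.

```python
import re
from collections import Counter


def error_signature(line):
    """Reduce an error line to a signature by masking numbers and hex ids."""
    return re.sub(r"0x[0-9a-fA-F]+|\d+", "<n>", line).strip()


def summarize_errors(log_lines):
    # Assumes error lines contain the literal marker "ERROR" (hypothetical format).
    signatures = [error_signature(line) for line in log_lines if "ERROR" in line]
    return Counter(signatures).most_common()


log_lines = [
    "INFO request 101 ok",
    "ERROR timeout after 3000 ms calling payment-service",
    "ERROR timeout after 3021 ms calling payment-service",
    "ERROR user 42 not found",
]
summary = summarize_errors(log_lines)
for signature, count in summary:
    print(count, signature)
```

The summary shows what happens most often. Working out why it happens, and whether the timeout or the missing user is the real root cause, is the part that needs the tester's context.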

The Importance of Human Expertise in Software Testing

While automation makes software testing efficient, human involvement is still crucial to the process. Automated tools do the repetitive tasks; however, they mostly lack the intuition and context that comprehensive testing requires.

Human testers help design test cases and find complex edge cases. They interpret results in the context of an application's functionality and user experience. By troubleshooting, adapting to changes, and keeping tests relevant, they help ensure the software meets the high quality standard that a good user experience requires.

- Key Aspects of Human Expertise in Software Testing:

  - Complex Problem-Solving

    - Root cause analysis: Testers investigate and resolve complex issues that an automated tool would overlook.

    - Strategic adaptation: Testers adapt their testing methods in response to unforeseen problems.

  - Quality Assurance Beyond Automation

    - Comprehensive Review: Humans ensure that the software meets quality standards and user expectations.

    - Adaptation to Changes: Testers modify testing approaches in response to new features or to improve code quality.

  - Creativity in Test Case Generation

    - Innovative Scenarios: Testers create unique test cases to explore diverse user interactions and edge cases.

    - Flexible Strategies: Human testers develop and adjust testing strategies to accommodate evolving requirements.

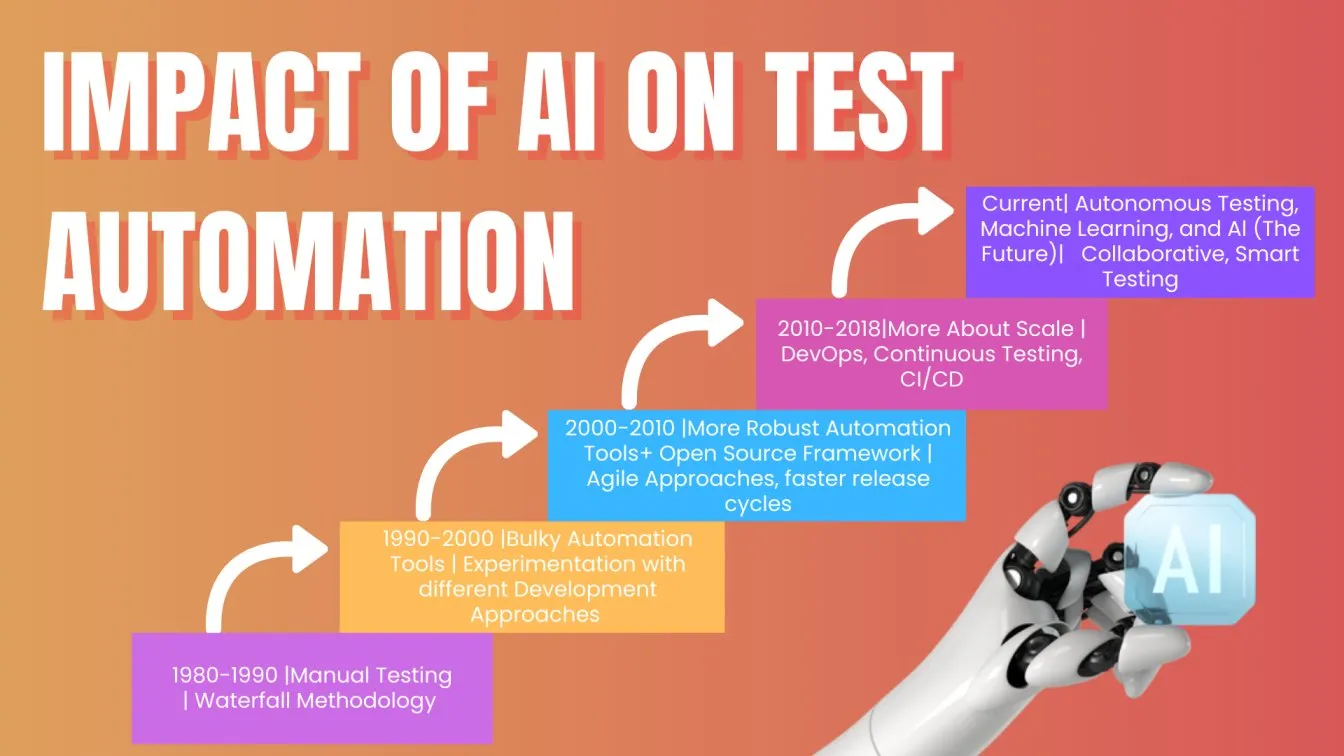

The Impact of AI on Test Automation

Artificial intelligence is reshaping the test automation landscape and helping organizations improve their testing processes and software quality. Bringing AI and ML techniques into testing enables automated, optimized testing methods that go well beyond current practice. AI-powered tools use generative AI-based approaches to automate test procedures, thereby enhancing their efficiency and precision.

These AI-based features include automatic test case generation, defect prediction, and test data synthesis, which enable much wider test coverage. Machine learning algorithms can provide better estimates of which areas should be prioritized for testing, so redundant testing can be reduced while still maintaining adequate coverage. This not only increases accuracy but also changes how organizations test, letting them reach high standards of software quality more economically.

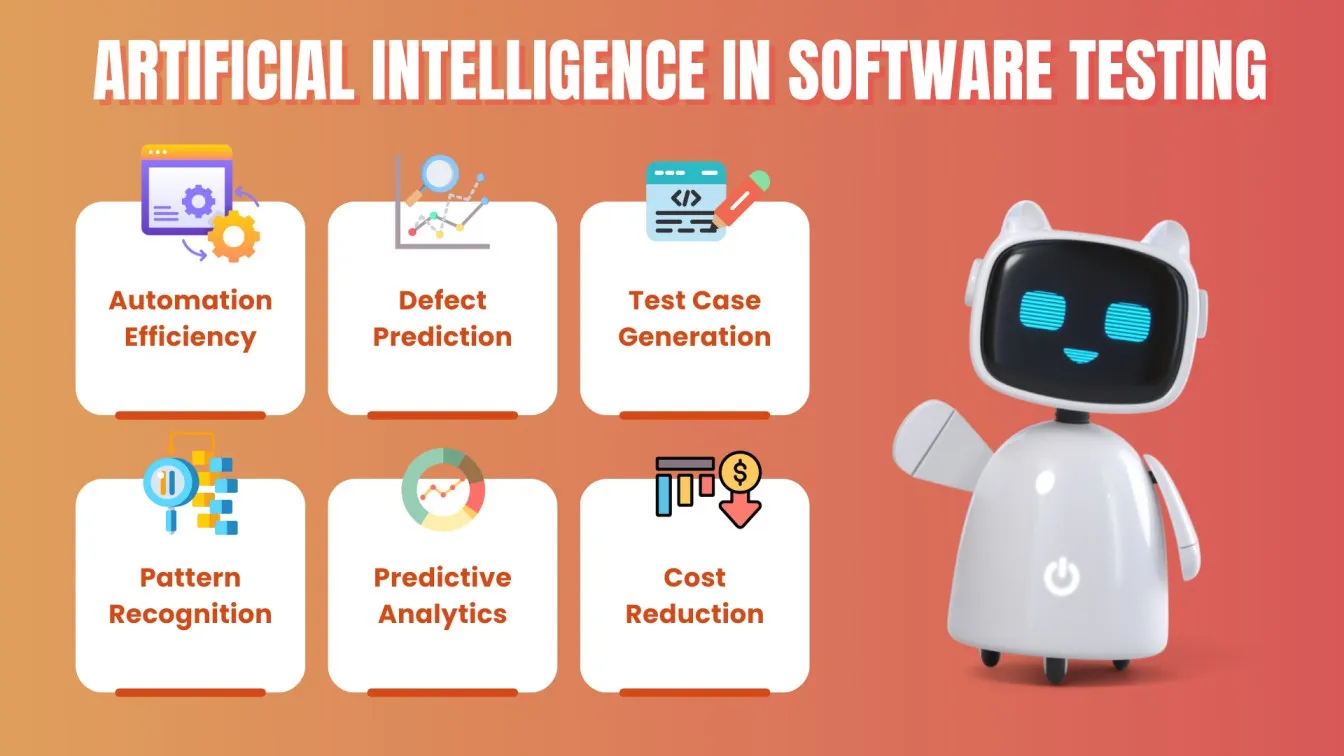

AI in Software Testing

Artificial intelligence (AI) has emerged as a powerful force in software testing. With AI-driven automation, organizations can significantly enhance their testing processes and improve overall software quality. AI-driven testing methods and AI-driven tools encompass a wide range of techniques, from AI-based test automation tools to AI-assisted tester roles.

Automation testing frameworks powered by AI can automate repetitive tasks, such as test data generation and test execution. Additionally, AI-driven testing tools can analyze vast amounts of test data to identify patterns and anomalies, enabling proactive defect detection.

While AI-powered testing can be effective for many testing scenarios, human expertise remains essential for identifying and addressing edge cases that are difficult to anticipate or replicate through automated testing. By incorporating both automation and human judgment, organizations can improve the overall quality and reliability of their software products.

Quick Recap🌟

Automation has changed the whole landscape of software testing. It handles the repetitive tasks that were earlier done by humans. However, it can never take away the most important aspect: human expertise. Automated tools undoubtedly surpass human abilities in speed and accuracy, but making sense of complex results and designing intelligent test strategies requires human reasoning and real-time insight. Because manual testing, exploratory testing, and subjective evaluations rely on intuition, no automated process can replace the human mind there.

Human judgment prioritizes test cases according to risk and user need, and human oversight ensures that automated tests align with business goals and adapt to real-world scenarios. Human involvement is also essential in code review before the code is executed. It helps detect defects early in the process, improves code quality, and supports maintainability. Thus, combining the efficiency of automation with human insight leads to more robust and reliable software testing results.

People also asked

👉 What is the software testing process?

Software testing is a systematic process of evaluating a software product to ensure it meets specified requirements and functions as intended.

👉 What is the main benefit of test automation?

The main benefit of test automation is the increased efficiency and speed in executing repetitive and large-scale tests, which helps in faster feedback and providing high-quality software.

👉 What is the difference between software testing and automation testing?

Software testing encompasses both manual and automated methods for validating software functionality, while automation testing specifically refers to using tools and scripts to perform these tests automatically.

👉 What does a QA automation tester do?

A QA automation tester designs, develops, and executes automated test scripts to verify software functionality and performance, and often maintains automation testing frameworks.

👉 Is coding required for automation testing?

Yes, coding is typically required for automation testing as it involves writing scripts and developing test cases using programming languages and tools to automate test execution.
