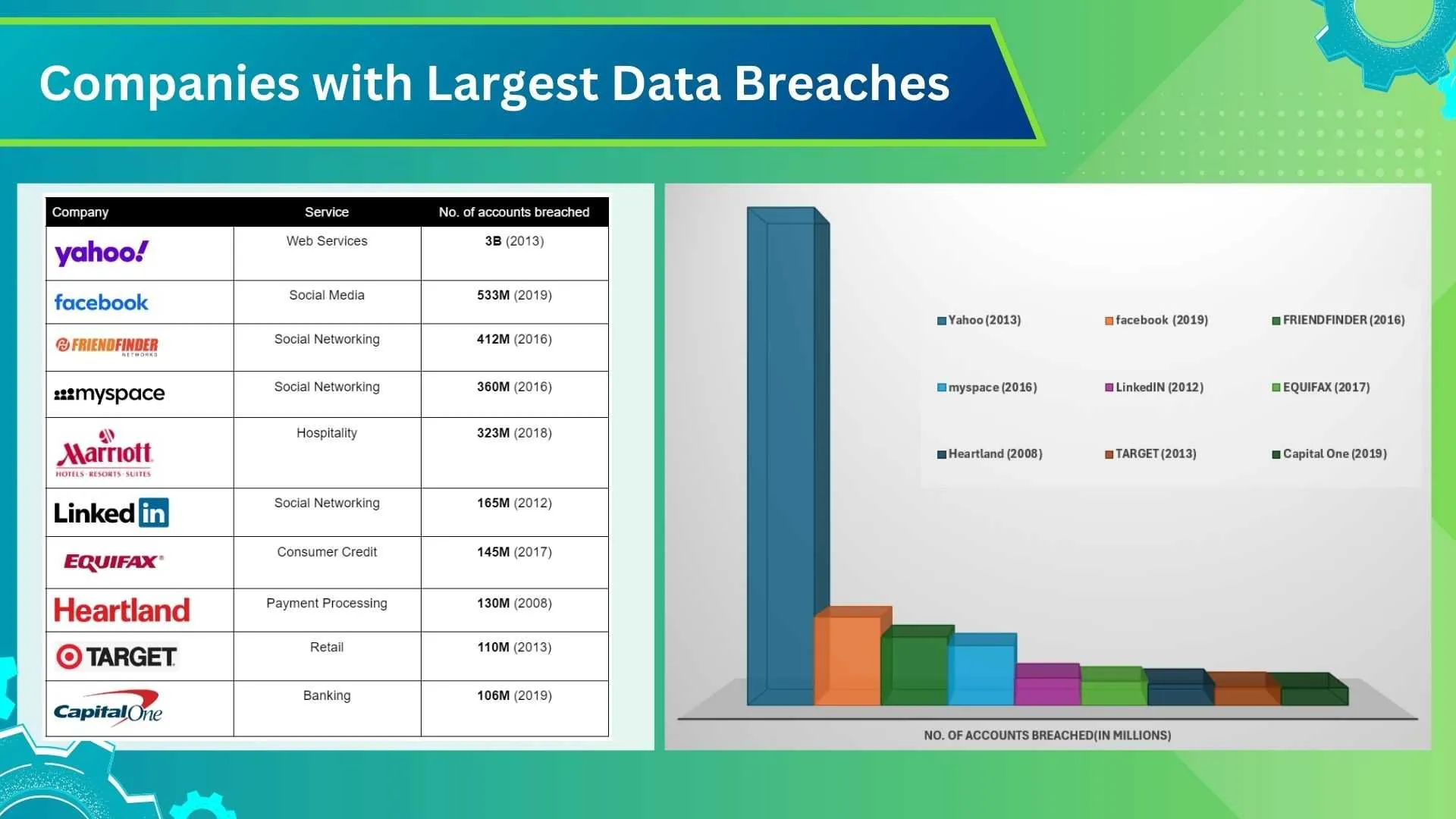

In today's digital age 📱, software has become an integral part of our lives, powering everything from social media platforms to financial transactions 💰 and healthcare systems 🏥. As our reliance on software grows, so does the importance of ensuring its quality, reliability and security. The 2018 Facebook data breach serves as a stark reminder of the consequences of inadequate software testing and underlines how critical it is to safeguard user data and protect against security breaches.

However, this is just one of many scenarios where ineffective testing can have significant consequences. To help you reduce risk and protect the integrity of your product 🎯, here is a brief overview of 10 software testing mistakes you should be aware of in 2024:

▶️ Lack of regular updates and open channels between stakeholders.

▶️ Ambiguity in project objectives and testing scope.

▶️ Unclear Pass/Fail criteria.

▶️ Conducting testing at an unsuitable stage.

▶️ Neglecting aspects like performance, security and usability testing (Non-Functional Testing).

▶️ Relying on a narrow range of test data sets.

▶️ Failing to incorporate exploratory testing techniques.

▶️ Inadequate documentation and tracking of reported bugs.

▶️ Neglecting the system's testability, which leads to time-consuming testing processes.

▶️ Failing to keep previous test results.

What are errors in Software Testing?

Software errors are flaws or faults that cause a system to produce incorrect or unexpected results. They can stem from various sources, such as coding mistakes, human error or miscommunication between development and testing teams. Identifying and correcting these errors is crucial to ensure the software functions as intended.

These errors have widespread effects, including degraded performance, security vulnerabilities and poor user experiences 👎. Performance errors can cause slow response times, affecting user satisfaction and productivity. Security errors can expose sensitive data to breaches, leading to legal and financial repercussions. Usability errors can produce a confusing interface that hinders user interaction.

Addressing these errors through systematic testing and proper use of software testing tools is essential to mitigate risks and ensure the software meets its intended requirements.

Effective testing techniques help uncover these errors early in the development cycle 🛞, which saves teams significant time and resources. The result is higher quality and reliability and, ultimately, a better end product.

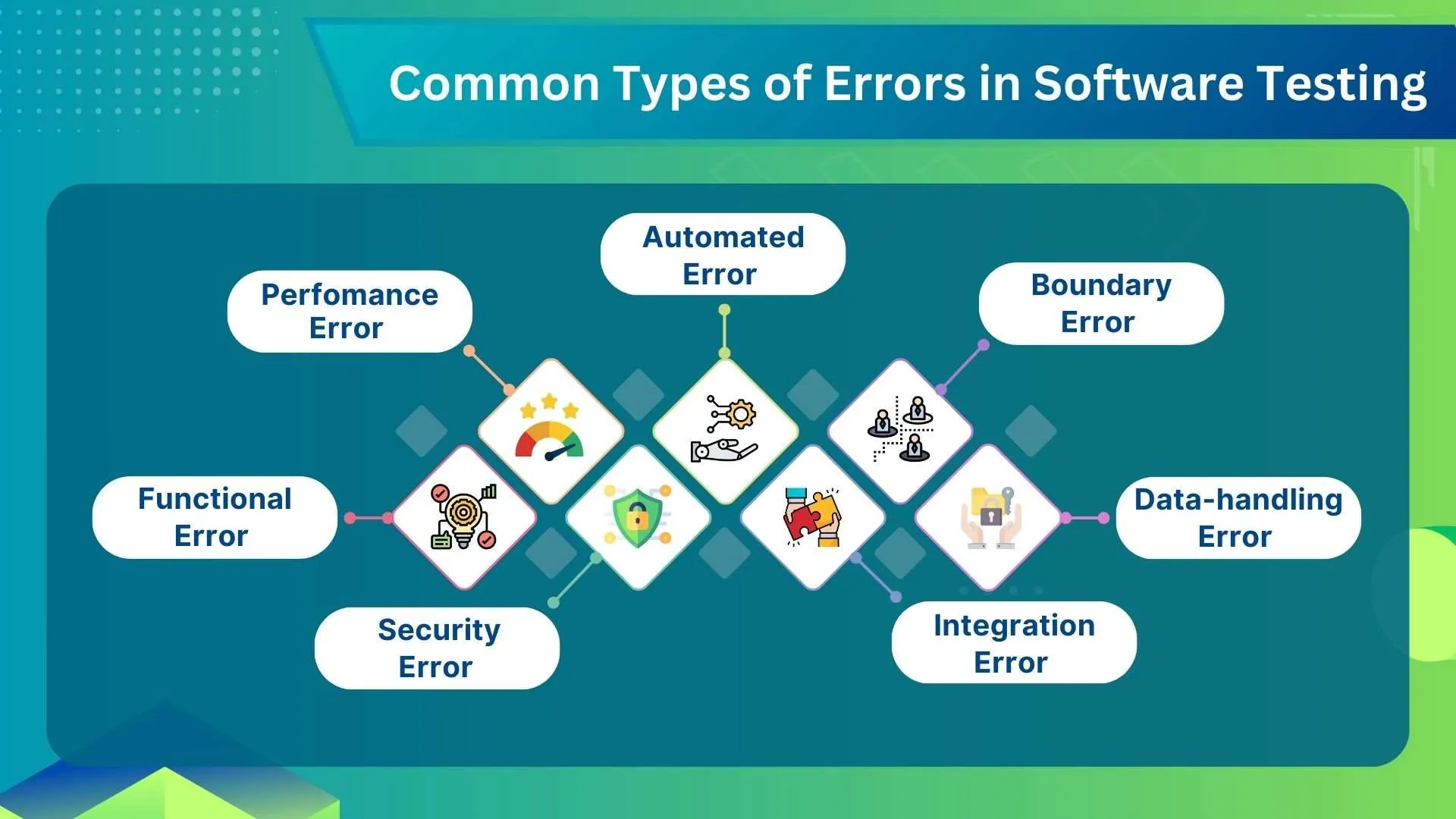

Common Types of Errors in Software Testing

Software testers commonly run into the following types of errors:

🏓 Functional Errors: The most basic type of error, where the software does not perform its intended functions.

🏓Performance Errors: Issues related to speed, responsiveness and stability.

🏓Security Errors: Vulnerabilities that expose the software to threats and attacks.

🏓Usability Errors: Problems that affect the user experience and ease of use.

🏓Compatibility Errors: Failures when the software does not work correctly across different environments or devices.

🏓Automated Testing Errors: Mistakes arising from improper use of automation tools or poorly designed automated tests.

🏓Integration Errors: Issues that arise when different modules or components of the software fail to work together properly.

🏓Regression Errors: New bugs that appear in previously working functionality after code changes or software updates.

🏓Configuration Errors: Mistakes related to incorrect setup or configuration of the software environment.

🏓Data Handling Errors: Problems with how the software processes, stores or retrieves data.

🏓Boundary Errors: Failures that occur when the software does not handle edge cases or limits properly.

🏓Logic Errors: Flaws in the software's algorithms or decision-making processes that lead to incorrect outcomes.
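To make boundary errors concrete, here is a minimal Python sketch of boundary value analysis. The rule (an order quantity between 1 and 100, inclusive) and the function names are hypothetical. The deliberately buggy variant uses `<` instead of `<=`, the classic off-by-one mistake, and only the test exactly on the upper limit catches it.

```python
# Hypothetical rule (assumption for illustration): an order quantity is valid
# when it lies between 1 and 100, inclusive.
MIN_QTY, MAX_QTY = 1, 100


def is_valid_quantity(qty: int) -> bool:
    """Correct implementation: both limits are inclusive."""
    return MIN_QTY <= qty <= MAX_QTY


def is_valid_quantity_buggy(qty: int) -> bool:
    """Deliberately buggy variant: '<' instead of '<=' rejects the upper limit."""
    return MIN_QTY <= qty < MAX_QTY


# Boundary value analysis: test just below, on, and just above each limit.
BOUNDARY_CASES = {
    MIN_QTY - 1: False,
    MIN_QTY: True,
    MIN_QTY + 1: True,
    MAX_QTY - 1: True,
    MAX_QTY: True,
    MAX_QTY + 1: False,
}


def boundary_failures(check) -> list[int]:
    """Return the boundary inputs for which `check` gives the wrong answer."""
    return [value for value, expected in BOUNDARY_CASES.items()
            if check(value) != expected]


print(boundary_failures(is_valid_quantity))        # []
print(boundary_failures(is_valid_quantity_buggy))  # [100]
```

Testing values just below, on and just above each limit is cheap, and it targets exactly the inputs where off-by-one mistakes tend to hide.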

The true cost of ignoring Software Testing

In the previous section, we discussed common errors in software testing. Failing to address these software testing mistakes in the early stages of development can lead to the following problems:

💲Increased Repair Costs: Issues found late in the development cycle or after release are more expensive to fix. The costs include not only the direct expense of debugging and patching but also the potential downtime and lost revenue.

📉 Revenue Loss: Poor quality software can lead to customer dissatisfaction and attrition, directly impacting sales and revenue. Negative reviews and poor user experiences can deter potential customers.

Customer Trust 👍: Releasing buggy or insecure software can erode customer trust. Once lost, rebuilding a damaged reputation is challenging and time-consuming.

🗽Brand Image: Frequent failures or issues with software can lead to a negative perception of the brand, affecting not just the specific product but the entire company.

Complexity 🧩 of Late Fixes: Problems identified late in the development process are often more complex and intertwined with other system components, making them harder and costlier to resolve.

Resource 💰 Allocation: Fixing late-stage errors diverts resources from new development projects, causing delays and increased costs for future initiatives.

Talking about costs is of little use until testers understand what causes these errors. The most common mistakes are covered in the following sections.

1. Not communicating proactively 📞

Proactive communication is crucial in software testing, because misunderstandings and missed requirements can lead to critical errors. Regular updates between developers, testers and stakeholders keep everyone aligned throughout the development process.

⭐ Effective communication 📧 makes it easier to detect issues early, enabling timely interventions and preventing costly rework. A culture of transparency fosters collaboration and innovation, and collaborative tools 🧰 and platforms improve the flow of information so that potential software vulnerabilities are identified and addressed promptly. This streamlines the overall process and improves product quality.

2. Lack of clarity regarding goals and scope

Clearly defined goals and objectives are a fundamental prerequisite for testing any software. Without that clarity, teams risk spending effort on inconsequential aspects while overlooking critical functionality, which undermines the effectiveness of testing. Ambiguous goals increase this risk and can drive up testing costs through misdirected effort and lower-quality software delivery 🚚.

⭐ Establishing clear objectives from the project's inception helps teams plan and run their testing cycles smoothly. This clarity enables efficient resource allocation, ensuring that people, time and budget are used judiciously, and ultimately leads to high-quality products that meet the product owner's expectations.

3. Unclear Pass/Fail criteria

Unclear pass ✔️ or fail ❌ criteria in software testing can lead to confusion and inconsistency among team members. It's crucial to explicitly define these criteria to prevent subjective interpretations and ensure objective evaluation of software features. Clear criteria help testers determine whether a feature meets required standards, promoting consistency in assessment.

⭐ This clarity fosters a shared understanding of what success looks like and keeps stakeholders' expectations aligned. Well-defined criteria also make regression testing more accurate, because changes are evaluated against established benchmarks to detect new errors. With clear criteria, teams can improve the reliability of their testing processes, streamline decision-making and ultimately deliver higher-quality software.
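One way to keep pass/fail criteria objective is to write them down as measurable thresholds that a script can check. The sketch below assumes hypothetical metric names and threshold values; substitute the criteria your stakeholders actually agreed on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One measurable pass/fail rule, e.g. 'p95 latency must be <= 300 ms'."""
    metric: str
    threshold: float
    higher_is_better: bool = False

    def passes(self, value: float) -> bool:
        # Compare the measured value against the threshold in the right direction.
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


# Hypothetical criteria for a checkout feature (assumed values, not recommendations).
CRITERIA = [
    Criterion("p95_latency_ms", 300),
    Criterion("error_rate_pct", 1.0),
    Criterion("tests_passed_pct", 100.0, higher_is_better=True),
]


def evaluate(measured: dict[str, float]) -> dict[str, bool]:
    """Return a verdict per criterion; a missing metric counts as a failure."""
    return {
        c.metric: c.metric in measured and c.passes(measured[c.metric])
        for c in CRITERIA
    }


results = evaluate({"p95_latency_ms": 280, "error_rate_pct": 1.4, "tests_passed_pct": 100.0})
print(results)  # {'p95_latency_ms': True, 'error_rate_pct': False, 'tests_passed_pct': True}
print("PASS" if all(results.values()) else "FAIL")  # FAIL
```

Treating a missing metric as a failure is a deliberate choice: it stops a run from passing simply because a measurement was never collected.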

4. Testing at an unsuitable stage of development

Testing at the wrong stage of development can result in inefficient resource allocation and overlooked defects. If testing is delayed until the end of the development process, defects that could have been caught early may go unnoticed, leading to more complex and costly fixes later on.

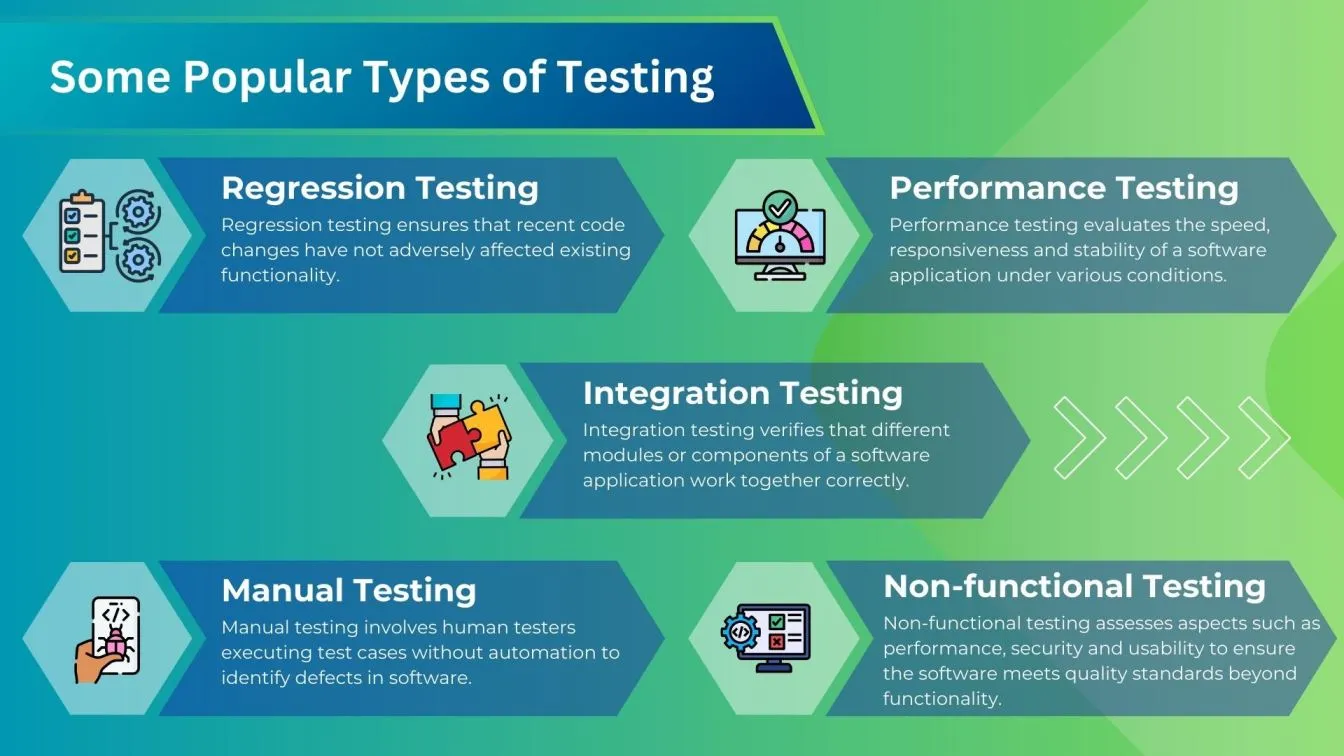

Utilizing different testing types at suitable stages ensures comprehensive coverage. For instance, conducting unit testing early on allows for the detection of individual component flaws (unit-level bugs 🐞), while system testing and user acceptance testing are better suited for later stages when the software is more complete.

⭐ This approach helps manage costs by addressing issues early, before they escalate into larger problems in later stages of development. The same principle applies to other testing types, such as performance testing 🚀, integration testing and manual testing 👷: each is most effective when scheduled at the stage it suits best.
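As an illustration of catching unit-level bugs early, here is a small unit test written with Python's built-in `unittest` module. The `apply_discount` function and its rules are hypothetical; the point is that tests like these can run on every commit, long before system or acceptance testing.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce price by a percentage."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)
        self.assertAlmostEqual(apply_discount(19.99, 10), 17.99)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(50.0, 0), 50.0)

    def test_invalid_inputs_are_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)
        with self.assertRaises(ValueError):
            apply_discount(-1.0, 10)


# Run the suite programmatically; in a project you would usually run `python -m unittest`.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```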

5. Ignoring non-functional testing

Non-functional testing includes performance, security and usability testing. It helps ensure that the software meets user expectations and operates effectively under diverse conditions. Neglecting non-functional testing can result in user dissatisfaction and leave the software vulnerable to security breaches.

⭐ Incorporating non-functional testing into the overall testing strategy is crucial for delivering a robust product. Investing in appropriate testing tools tailored for non-functional testing can preempt costly issues and enhance user satisfaction by identifying and addressing potential concerns early in the development process.
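A non-functional requirement such as response time can also be turned into an automated check. The sketch below times a hypothetical `search_catalog` function with `time.perf_counter` and compares the median against an assumed 50 ms budget. It is a micro-benchmark, not a substitute for load testing with a dedicated tool, but it can flag obvious performance regressions early.

```python
import statistics
import time


def measure_latency_ms(func, *args, runs: int = 20) -> float:
    """Call func(*args) `runs` times and return the median wall-clock time in ms."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        func(*args)
        timings.append((time.perf_counter() - start) * 1000)
    return statistics.median(timings)


# Hypothetical operation under test: look up products by name prefix in a catalog.
CATALOG = [f"product-{i:05d}" for i in range(10_000)]


def search_catalog(prefix: str) -> list[str]:
    return [name for name in CATALOG if name.startswith(prefix)]


LATENCY_BUDGET_MS = 50.0  # assumed budget; derive yours from the actual requirements

median_ms = measure_latency_ms(search_catalog, "product-09")
print(f"median latency: {median_ms:.2f} ms (budget {LATENCY_BUDGET_MS} ms)")
print("PASS" if median_ms <= LATENCY_BUDGET_MS else "FAIL")
```

Using the median of several runs rather than a single measurement makes the check less sensitive to one-off noise on the machine it runs on.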

6. Limited test data variety

Employing a narrow ⛰️ range of test data can result in incomplete testing and overlooked bugs. Testing with diverse data sets is essential to simulate various user scenarios and reveal hidden issues, particularly edge cases that may not be apparent with uniform data.

A broad spectrum of test data makes test results more precise. This diversity matters for both manual and automated software testing, because it validates the software's ability to handle real-world usage scenarios.

⭐ By using a wide range of test data, software testers can accurately assess the software's behavior under diverse conditions, improving its overall quality and reliability. Several tools can help generate diverse and comprehensive test data. Mockaroo and Datagen let testers create large volumes of realistic test data quickly. Apache JMeter can feed varied input data into performance tests, for example from CSV files. SQL Data Generator by Redgate can populate databases with test data, ensuring that varied data sets are used when testing database-driven applications.
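Alongside dedicated generators, a few lines of code can mix hand-picked edge cases with seeded random values. This sketch uses only Python's standard library and does not reflect the API of any of the tools named in this section; the "name" field and its 255-character limit are assumptions for illustration.

```python
import random
import string

# Hand-picked edge cases that uniform "happy path" data tends to miss.
EDGE_CASE_NAMES = [
    "",                                # empty input
    " ",                               # whitespace only
    "a",                               # minimum length
    "x" * 255,                         # long input (255 assumed to be the field limit)
    "O'Brien",                         # apostrophe, a common source of escaping bugs
    "José Ñúñez",                      # accented Latin letters
    "李小龍",                           # non-Latin script
    "Robert'); DROP TABLE users;--",   # injection-style string
]


def random_names(count: int, seed: int = 42) -> list[str]:
    """Generate reproducible random names of varying length and character mix."""
    rng = random.Random(seed)  # a fixed seed makes failing cases reproducible
    alphabet = string.ascii_letters + string.digits + " -_.'"
    return ["".join(rng.choices(alphabet, k=rng.randint(1, 40))) for _ in range(count)]


def build_test_data(random_count: int = 20) -> list[str]:
    """Combine the edge cases with random values into one test data set."""
    return EDGE_CASE_NAMES + random_names(random_count)


data = build_test_data()
print(len(data))  # 28
```

Seeding the random generator means that any failure found with random data can be reproduced exactly on the next run.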

7. Ignoring exploratory testing

🗺️ Exploratory testing allows testers to actively investigate the software, using their experience, intuition and creativity to uncover defects and potential issues that scripted test cases may not anticipate. Without this dynamic exploration, critical defects and usability issues can go unnoticed and test coverage stays incomplete, increasing the risk of a poor user experience, reduced user satisfaction and failures in production.

⭐ Incorporating exploratory testing into the testing process enables testers to uncover unexpected defects, explore various user scenarios and identify usability issues proactively, thereby enhancing the overall quality and reliability of the software product.

8. Improper bug reporting and tracking

Effective 📍tracking and reporting of software bugs are essential for a successful testing process. Incomplete or unclear bug reports can lead to miscommunication and delays in fixing issues. Proper documentation 📓 ensures that developers understand the problem and can address it efficiently.

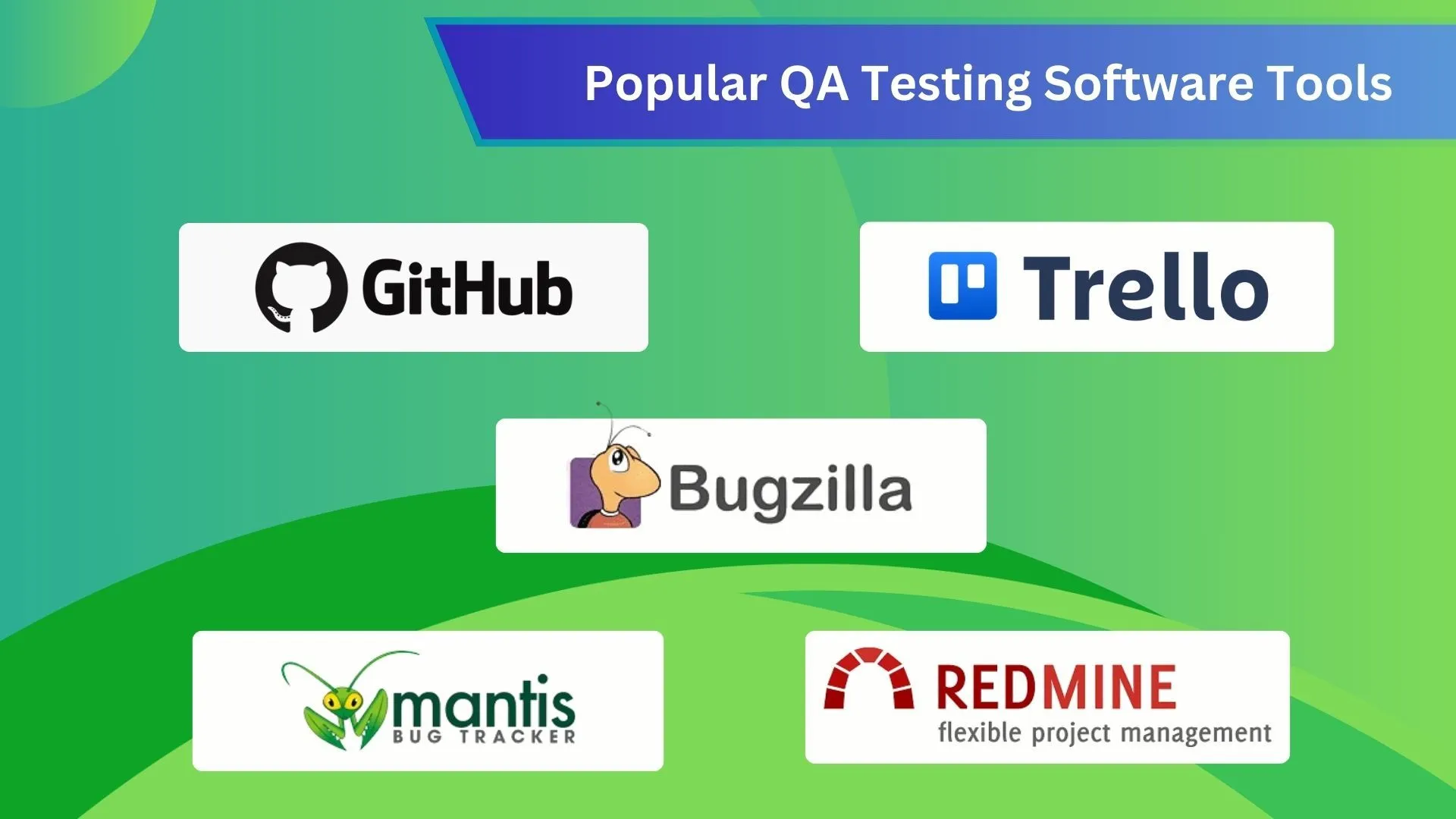

⭐ Dedicated bug tracking tools help in streamlining the entire process. These tools provide a centralized platform for reporting, tracking and resolving bugs, ensuring that nothing is left unchecked.
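A bug report is only useful if a developer can act on it. The sketch below models a report as a Python dataclass and lists what is missing before it is filed. The field names and severity levels are hypothetical and do not correspond to the schema of any particular bug tracker.

```python
from dataclasses import dataclass, field, fields

SEVERITIES = ("low", "medium", "high", "critical")  # assumed severity scale


@dataclass
class BugReport:
    """Hypothetical bug report structure; field names are illustrative only."""
    title: str
    steps_to_reproduce: list[str]
    expected_result: str
    actual_result: str
    environment: str  # e.g. OS, browser and build number
    severity: str = "medium"
    attachments: list[str] = field(default_factory=list)  # optional screenshots or logs

    def problems(self) -> list[str]:
        """List the reasons this report is not yet actionable for a developer."""
        issues = [f"missing {f.name}" for f in fields(self)
                  if f.name != "attachments" and not getattr(self, f.name)]
        if self.severity not in SEVERITIES:
            issues.append(f"unknown severity {self.severity!r}")
        return issues


report = BugReport(
    title="Checkout button does nothing on second click",
    steps_to_reproduce=["Add an item to the cart", "Click Checkout twice"],
    expected_result="Payment page opens",
    actual_result="",
    environment="Chrome on Windows 11, build 4.2.0",
    severity="urgent",
)
print(report.problems())  # ['missing actual_result', "unknown severity 'urgent'"]
```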

Popular bug tracking tools that can aid your software testing include:

Jira: Widely used for issue and project tracking, offering robust features for bug tracking and agile project management.

Bugzilla: An open-source bug tracking system known for its powerful search capabilities and extensive customization options.

Trello: A visual project management tool that can be used for bug tracking with its card-based system and integration with other tools.

Redmine: An open-source project management and issue tracking tool that supports multiple projects and flexible role-based access control.

MantisBT: A free and open-source bug tracking system that is user-friendly and easy to set up.

GitHub Issues: Integrated with GitHub repositories, this tool provides a simple and effective way to track bugs and feature requests.

9. Not improving the system's testability

Improving the testability of a system means designing it so that its behavior is easy to control and observe during testing. Practices such as modular design and comprehensive logging, which tracks the system's behavior during test runs, help achieve this. Without a focus on testability, testing becomes more complex and time-consuming, as testers struggle to navigate intricate systems and interfaces.

⭐ Good testability keeps software testing costs down by minimizing the need for extensive rework and debugging during later stages of the software development process.
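A common way to improve testability is to inject dependencies that tests need to control, such as the system clock, and to log key decisions. The `SessionManager` class below is a hypothetical example: because it receives its clock as a parameter, a test can check session expiry instantly instead of waiting 15 minutes.

```python
import logging
from typing import Callable

logger = logging.getLogger("session")


class SessionManager:
    """Hypothetical component that expires user sessions after a timeout."""

    def __init__(self, timeout_s: float, clock: Callable[[], float]):
        # The clock is injected instead of calling time.time() directly,
        # so tests can control "now" without sleeping.
        self.timeout_s = timeout_s
        self.clock = clock
        self.started: dict[str, float] = {}

    def start(self, user: str) -> None:
        self.started[user] = self.clock()
        logger.info("session started for %s", user)  # logging makes behavior observable

    def is_active(self, user: str) -> bool:
        started = self.started.get(user)
        active = started is not None and self.clock() - started < self.timeout_s
        logger.debug("is_active(%s) -> %s", user, active)
        return active


class FakeClock:
    """Test double: returns whatever time the test sets."""

    def __init__(self) -> None:
        self.now = 0.0

    def __call__(self) -> float:
        return self.now


clock = FakeClock()
sessions = SessionManager(timeout_s=900, clock=clock)
sessions.start("alice")
clock.now = 899
print(sessions.is_active("alice"))  # True
clock.now = 900
print(sessions.is_active("alice"))  # False
```

In production the same class could be created with `time.monotonic` as its clock, so the code under test is unchanged; only the dependency differs.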

10. Overlooking the importance of keeping previous test results

❎ Failing to maintain previous test results can pose significant challenges in tracking the software's evolution and identifying persistent issues. Without access to historical data, testers may struggle to assess the software's stability over time and may overlook recurring patterns during regression testing. This oversight can result in redundant testing efforts and missed opportunities to address underlying issues effectively.

⭐ Maintaining a comprehensive archive of past test results plays a vital role in facilitating informed decision-making and streamlining testing processes. By referencing historical data, teams can prioritize testing efforts more effectively, focusing on areas that have historically posed challenges or exhibited vulnerabilities. Overall, overlooking the importance of maintaining previous test results undermines the efficiency of the testing strategy and compromises the quality and reliability of the software product.
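The sketch below shows one lightweight way to keep previous test results: append each run to a JSON file, then compare the latest run with the previous one and look for tests that fail repeatedly. The file name, run IDs and test names are hypothetical; many CI systems and test management tools offer richer built-in history.

```python
import json
import tempfile
from pathlib import Path


def load_history(archive: Path) -> list[dict]:
    """Read all archived runs, or an empty list if no archive exists yet."""
    return json.loads(archive.read_text()) if archive.exists() else []


def save_run(archive: Path, run_id: str, results: dict[str, str]) -> None:
    """Append one run's results ({test_name: 'pass' or 'fail'}) to the archive."""
    history = load_history(archive)
    history.append({"run_id": run_id, "results": results})
    archive.write_text(json.dumps(history, indent=2))


def compare_runs(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Classify tests by how their status changed between two runs."""
    return {
        "new_failures": sorted(t for t, s in current.items() if s == "fail" and previous.get(t) == "pass"),
        "fixed": sorted(t for t, s in current.items() if s == "pass" and previous.get(t) == "fail"),
        "still_failing": sorted(t for t, s in current.items() if s == "fail" and previous.get(t) == "fail"),
    }


def recurring_failures(history: list[dict], min_failures: int = 2) -> list[str]:
    """Tests that failed in at least `min_failures` archived runs."""
    counts: dict[str, int] = {}
    for run in history:
        for test, status in run["results"].items():
            if status == "fail":
                counts[test] = counts.get(test, 0) + 1
    return sorted(t for t, n in counts.items() if n >= min_failures)


with tempfile.TemporaryDirectory() as tmp:
    archive = Path(tmp) / "test_history.json"
    save_run(archive, "build-101", {"login": "pass", "checkout": "fail", "search": "pass"})
    save_run(archive, "build-102", {"login": "fail", "checkout": "pass", "search": "pass"})
    save_run(archive, "build-103", {"login": "fail", "checkout": "fail", "search": "pass"})
    history = load_history(archive)

print(compare_runs(history[-2]["results"], history[-1]["results"]))
# {'new_failures': ['checkout'], 'fixed': [], 'still_failing': ['login']}
print(recurring_failures(history))  # ['checkout', 'login']
```

Here `checkout` passed in the previous run but failed again, which is exactly the kind of recurring pattern that is easy to miss without an archive.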

Failing to learn from mistakes

Learning about all of these mistakes is of no use if you keep repeating them. By analyzing what went wrong before, you can refine your testing strategies and avoid making the same errors again. Taking the time to learn from past experiences will save you time and money in the long run.

Learning from past mistakes is a fundamental aspect of QA testing. Effective QA teams implement a feedback loop in which lessons learned are documented and shared to improve the overall testing process. This approach fosters a culture of continuous improvement and helps deliver better software.

Wrapping up!

Software testing is crucial for ensuring the quality, reliability and security of software in today's digital world. By addressing common mistakes such as poor communication, unclear pass/fail criteria and ignoring non-functional testing, organizations can improve their testing and reduce risk. Focusing testing efforts based on risk analysis, analyzing requirements thoroughly and using automation tools can make testing more efficient and effective.

Best practices include documenting test processes and using tools like Jira for tracking defects and Selenium for automated testing. These tools help organize testing activities, improve accuracy, and ensure defects are fixed efficiently. Good documentation also helps keep testing consistent and provides a guide for future tests.

By taking a proactive approach to software testing and following these best practices, organizations can improve product quality, make development smoother, and deliver software that meets user expectations. This not only leads to happier users but also gives a competitive advantage in the market.

People also ask

👉 What are some good practices for writing clear pass/fail criteria?

Good practices for writing clear pass/fail criteria involve defining specific, measurable criteria that align with project objectives and stakeholder expectations. Utilizing descriptive language, providing examples and ensuring clarity on expected outcomes can enhance understanding and consistency in evaluation.

👉 What strategies can we use to prioritize testing efforts?

Conducting impact analysis, collaborating with stakeholders to identify priorities and leveraging test automation for repetitive tasks can optimize resource allocation and focus testing efforts on areas with the highest potential impact.
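Impact analysis is often combined with a simple risk score so that the riskiest areas are tested first. This sketch uses the common heuristic risk = impact × likelihood on 1 to 5 scales; the features and scores are made-up examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    impact: int      # 1 (minor) to 5 (severe) if this feature fails in production
    likelihood: int  # 1 (rarely breaks) to 5 (changed often or historically buggy)

    @property
    def risk(self) -> int:
        # Risk-based testing heuristic: risk = impact x likelihood.
        return self.impact * self.likelihood


def prioritize(features: list[Feature]) -> list[Feature]:
    """Order features so the highest-risk areas come first (ties broken by impact)."""
    return sorted(features, key=lambda f: (f.risk, f.impact), reverse=True)


# Hypothetical backlog with made-up scores.
backlog = [
    Feature("payment processing", impact=5, likelihood=3),
    Feature("profile avatar upload", impact=2, likelihood=4),
    Feature("login", impact=5, likelihood=2),
    Feature("search filters", impact=3, likelihood=4),
]

for f in prioritize(backlog):
    print(f"{f.risk:>2}  {f.name}")
```

With these example scores, payment processing (15) is tested first, followed by search filters (12), login (10) and profile avatar upload (8).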

👉 What are some effective ways to test edge cases and corner scenarios?

Effective ways to test edge cases and corner scenarios include thorough requirement analysis, exploring boundary conditions and leveraging exploratory testing techniques. Creating test scenarios that cover both typical and extreme conditions, applying equivalence partitioning and conducting stress testing also help.

👉 What role does documentation play in avoiding software testing errors?

Comprehensive test plans, detailed test cases and thorough defect reports facilitate communication, promote consistency and help teams identify and resolve issues efficiently.

👉 Are there any tools available to assist us in avoiding these mistakes?

Yes. Jira provides a robust platform for capturing and managing issues. Selenium automates web browser interactions for fast, repeatable test execution, and TestRail offers test case management, test execution tracking and reporting.
