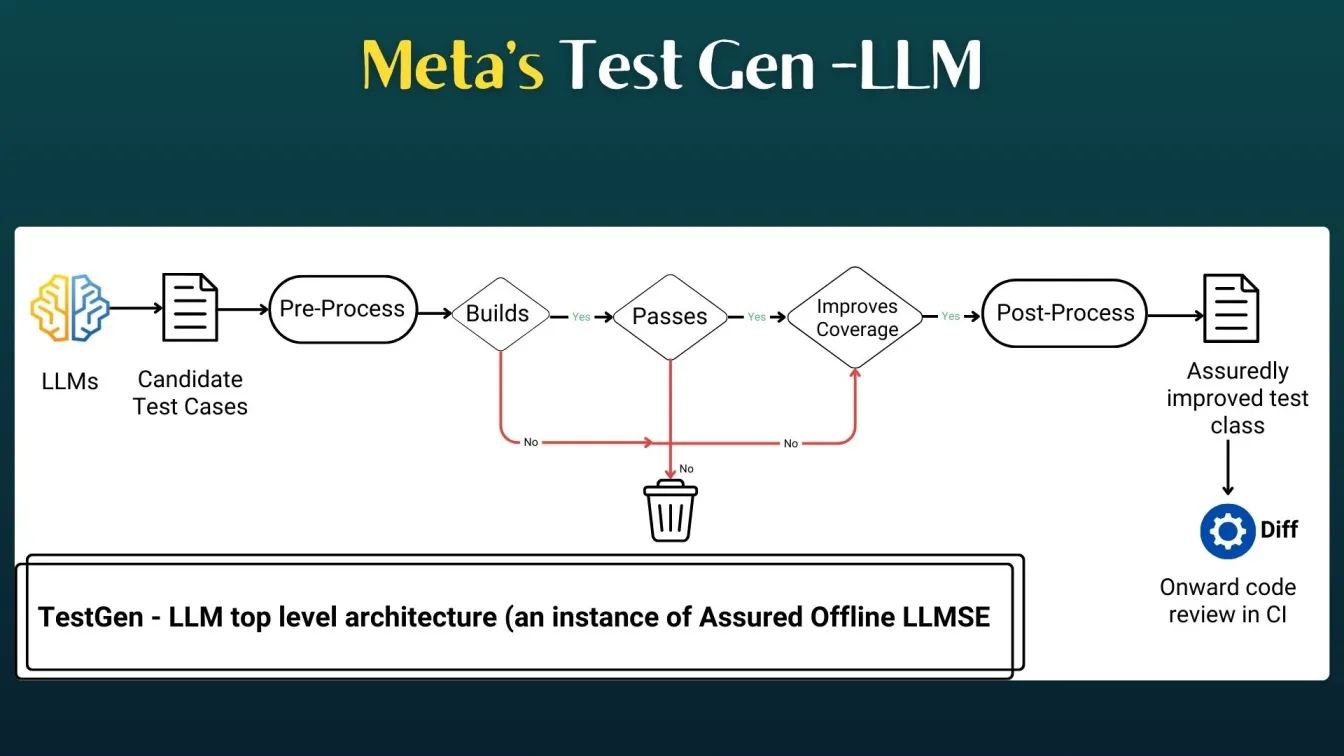

Leveraging the potential of LLMs (Large Language Models) has become a game-changer in software testing. LLM-based testing offers an AI-driven approach to generating unit tests with greater speed and, when the output is validated, greater thoroughness. Unlike traditional manual test writing, which is time-consuming and prone to human error, AI-powered test generation can propose test inputs and help broaden test coverage. Tools like Meta's TestGen-LLM show how developers can automate the creation of unit tests and fold it into their existing testing workflows.
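Meta's published description of TestGen-LLM says a generated test is kept only if it builds, passes, passes reliably across repeated runs, and increases coverage. The Python sketch below models that filter under stated assumptions: `Candidate`, its fields, and the `run` callable are hypothetical stand-ins for a real build and test harness, not TestGen-LLM's API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Candidate:
    """A hypothetical LLM-generated test plus facts gathered about it."""
    name: str
    builds: bool                  # did the test compile/build?
    covered_lines: frozenset[int]  # lines this test executes


def accept(
    candidate: Candidate,
    run: Callable[[str], bool],
    baseline_coverage: set[int],
    repeats: int = 5,
) -> bool:
    """Keep a candidate only if it builds, passes every repeated run, and adds coverage."""
    if not candidate.builds:
        return False  # discard tests that do not compile
    # Running several times weeds out flaky tests that pass only intermittently.
    if not all(run(candidate.name) for _ in range(repeats)):
        return False
    # Require at least one line the existing suite does not already cover.
    return bool(candidate.covered_lines - baseline_coverage)
```

In a real pipeline, `run` would invoke your test runner and `covered_lines` would come from a coverage report; the repeat count is an adjustable assumption.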

Mutation testing and flaky tests often pose significant challenges in maintaining a reliable codebase. In mutation-guided, LLM-based test generation, potential faults are described in natural language, injected into the code as mutants, and the LLM is asked to write tests that catch them, which helps uncover edge cases the existing suite misses. Generated tests must still compile and pass against the unmodified (ground truth) code, so candidates that produce compiler errors or fail are discarded. Line-level code coverage reports then show exactly which lines of the actual code the new tests exercise, turning raw test runs into actionable insights and improving the overall reliability of the application.
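Mutation testing measures whether a suite notices small, deliberate faults. The sketch below illustrates the idea with a hand-written mutant of a hypothetical `is_adult` function: a check "kills" the mutant when it passes on the original code but fails on the mutated one. In mutation-guided generation, the mutants that survive are what you would describe to the LLM when asking for new tests.

```python
from typing import Callable

Fn = Callable[[int], bool]
Check = Callable[[Fn], bool]


def is_adult(age: int) -> bool:
    """Original (hypothetical) code under test."""
    return age >= 18


def is_adult_mutant(age: int) -> bool:
    """Mutant: the boundary operator >= has been changed to >."""
    return age > 18


def check_typical(fn: Fn) -> bool:
    return fn(30) is True and fn(5) is False


def check_boundary(fn: Fn) -> bool:
    return fn(18) is True  # exercises the exact boundary


def killed_by(checks: list[Check], original: Fn, mutant: Fn) -> list[str]:
    """Names of checks that pass on the original but fail on the mutant."""
    return [c.__name__ for c in checks if c(original) and not c(mutant)]
```

Here only `check_boundary` kills the mutant, so a suite containing just `check_typical` would let the off-by-one fault survive. Tools such as mutmut (Python) or PIT (Java) generate mutants automatically instead of by hand.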

Moreover, LLM-powered test generation is not limited to simple test scenarios. It extends to generating JUnit test methods, powering code assistants, and supporting automated program repair. Effective post-processing of the model's output (extracting the code, checking that it compiles, and discarding failures) turns raw responses to basic prompts into refined test cases tailored to specific requirements. Custom prompts let developers further tailor the generated tests to project-specific needs. Together, these techniques improve software quality and accelerate the development cycle.

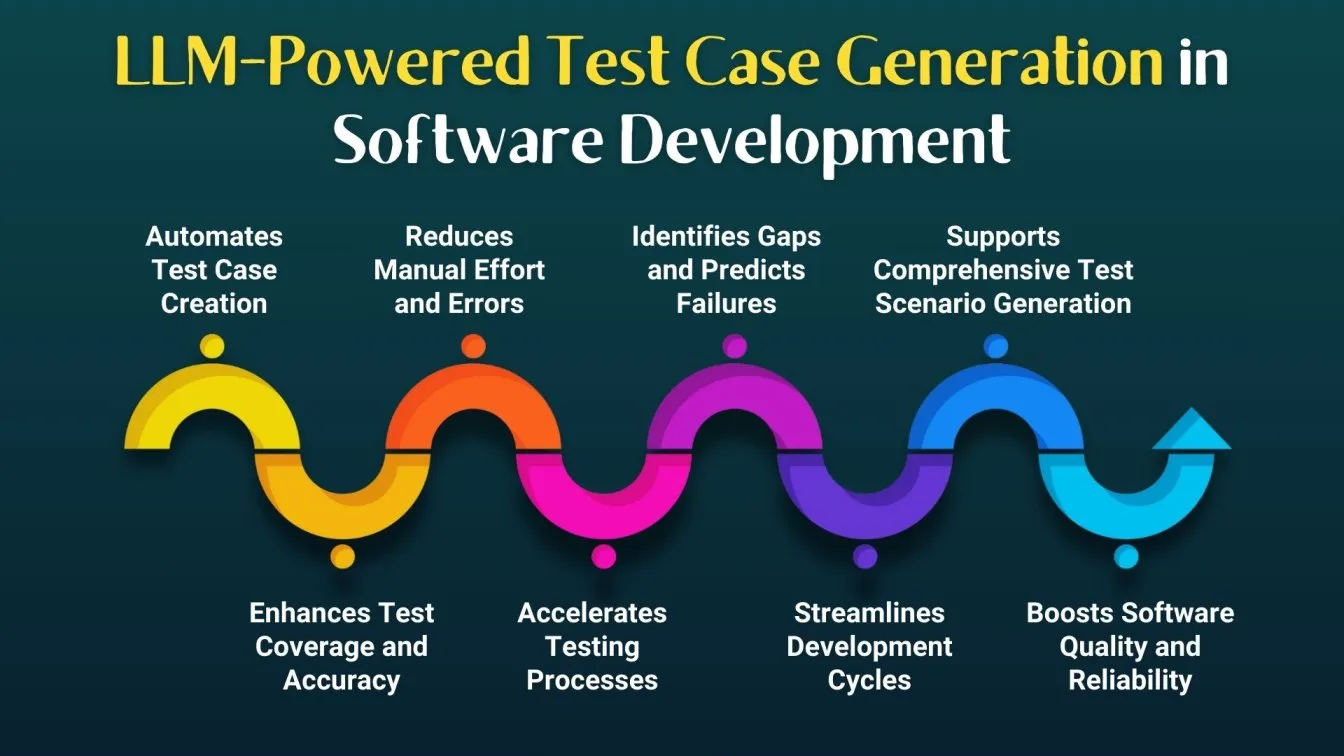

Introduction to LLM-Powered Test Case Generation

Maintaining high software quality requires efficient and reliable testing processes. Traditional test case generation methods often involve manual efforts that are time-consuming and prone to human error. Large Language Models (LLMs) are transforming this landscape by automating test case generation, improving accuracy, and accelerating the testing process.

Key Advantages of LLMs in Test Case Generation:

- Automated Test Creation – Generates test cases based on requirements and user stories.

- Enhanced Coverage – Identifies edge cases and boundary conditions.

- Test Automation Support – Suggests scripts for automation tools.

- Predictive Analysis – Identifies potential failure points and recommends improvements.

- Efficiency Boost – Reduces manual effort and speeds up the development cycle.

By integrating LLMs into testing workflows, teams can optimize their testing strategies, minimize risks, and ensure high-quality software releases. This blog explores how LLM-powered test case generation works, its benefits, implementation strategies, and best practices. Let’s dive in! 🚀

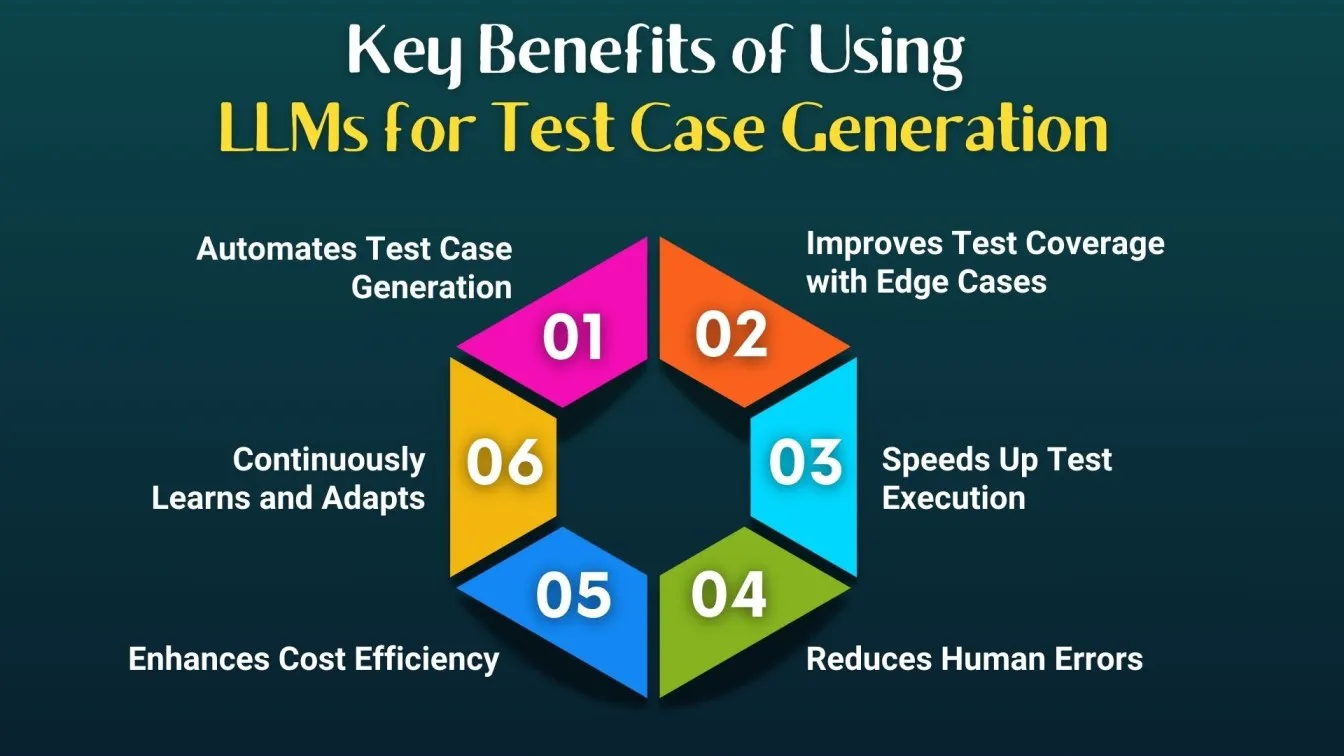

Why Use LLMs for Test Case Generation?

Incorporating Large Language Models (LLMs) into test case generation offers numerous advantages that enhance the quality and efficiency of software testing. By automating key aspects of the testing process, LLMs reduce manual effort, minimize errors, and improve coverage. Key benefits of using LLMs for test case generation include:

- Automation: Generate test cases automatically from requirements, user stories, or code.

- Improved Coverage: Identify edge cases and complex scenarios often missed manually.

- Faster Execution: Speed up test generation and execution using AI-powered automation.

- Error Reduction: Minimize human error in test case design and scripting.

- Cost Efficiency: Reduce resource-intensive testing phases.

- Continuous Learning: Improve test accuracy over time by feeding previous test results back into prompts or fine-tuning data.

By integrating LLMs into their software testing workflow, teams can accelerate development cycles while maintaining high quality standards. These models can analyze large volumes of code, requirements, and test history, point to likely failure areas, and suggest improvements, leading to more resilient software. Embracing LLM-powered test case generation empowers teams to build reliable applications faster, optimize resource utilization, and stay competitive in today’s agile development environment.

How LLMs Enhance Test Coverage and Efficiency

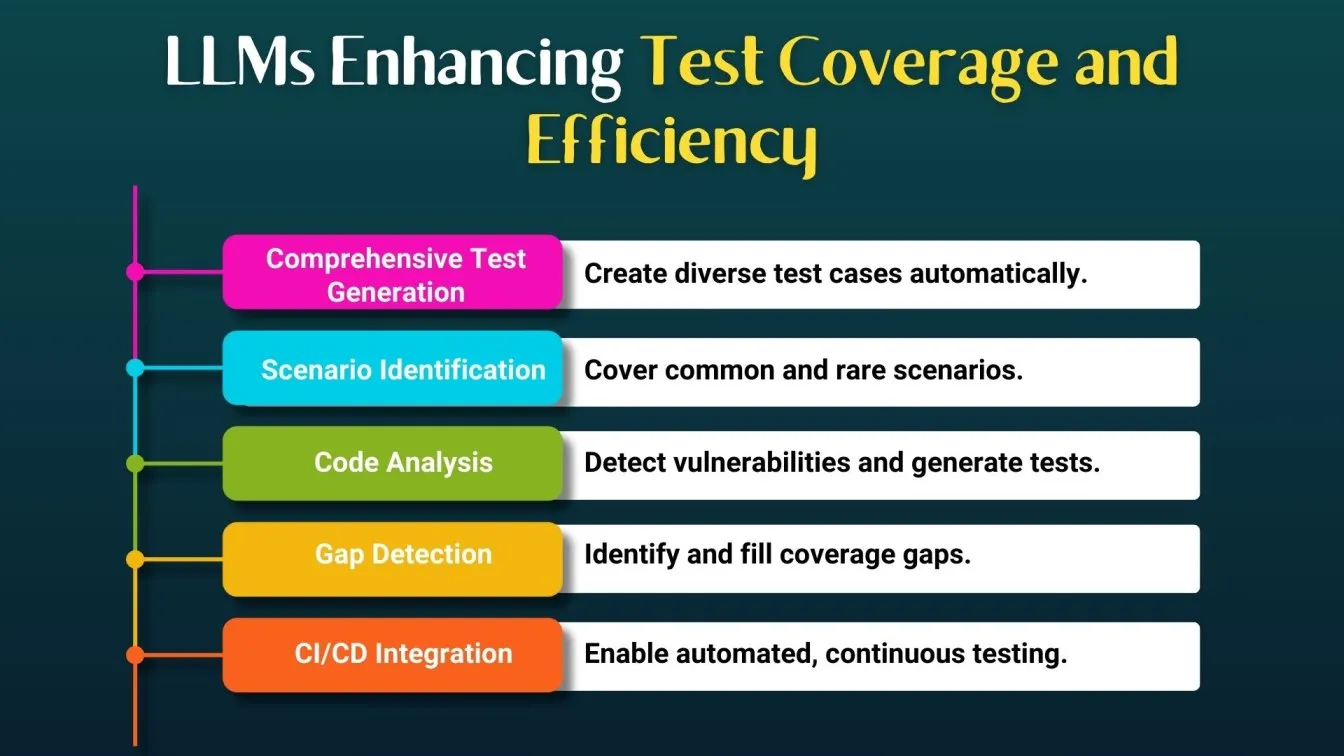

Large Language Models (LLMs) significantly improve test coverage and efficiency by automating the creation of diverse, accurate test cases. Traditional testing methods often miss edge scenarios, but LLMs can analyze application data, code, and historical test results to generate broader test scenarios. This widens coverage and accelerates the testing process, reducing manual effort and minimizing errors. Key ways LLMs enhance test coverage and efficiency include:

- Comprehensive Test Generation: Automatically generate functional, edge, and boundary test cases.

- Scenario Identification: Create realistic test scenarios covering both common and rare use cases.

- Automated Code Analysis: Analyze source code to detect vulnerabilities and generate appropriate test cases.

- Faster Test Creation: Accelerate the test generation process, reducing manual intervention.

- Gap Detection: Identify test coverage gaps and recommend additional test scenarios.

- Smart Prioritization: Prioritize critical test cases using application risk and historical data.

- Continuous Improvement: Refine test cases based on past test results for higher accuracy.

- Reduced Redundancy: Minimize duplicate or irrelevant test cases.

- Enhanced Test Data Generation: Produce realistic test data for more effective testing.

- Seamless Integration: Integrate LLM-powered tools into CI/CD pipelines for automated testing.

By incorporating LLMs into your testing workflow, you can ship faster with fewer bugs. Enhanced test coverage and efficient test generation help development teams maintain high software quality while reducing testing time and costs.
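Gap detection becomes concrete when you feed the model the lines your current tests never execute. The sketch below assumes you already have the set of executable line numbers and the set of covered ones (for example, exported from a coverage tool); `uncovered_ranges` and `gap_prompt` are hypothetical helpers.

```python
def uncovered_ranges(executable: set[int], covered: set[int]) -> list[tuple[int, int]]:
    """Group uncovered executable lines into (start, end) runs of consecutive numbers."""
    missing = sorted(executable - covered)
    ranges: list[tuple[int, int]] = []
    for line in missing:
        if ranges and line == ranges[-1][1] + 1:
            ranges[-1] = (ranges[-1][0], line)  # extend the current run
        else:
            ranges.append((line, line))  # start a new run
    return ranges


def gap_prompt(source: str, executable: set[int], covered: set[int]) -> str:
    """Build a prompt asking for tests that exercise only the uncovered lines."""
    lines = source.splitlines()
    parts = []
    for start, end in uncovered_ranges(executable, covered):
        snippet = "\n".join(lines[start - 1:end])  # line numbers are 1-based
        parts.append(f"Lines {start}-{end}:\n{snippet}")
    return "Write unit tests that execute these uncovered lines:\n\n" + "\n\n".join(parts)
```

Sending only the uncovered snippets keeps the prompt short and focuses the model on the gaps rather than on code that is already tested.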

Types of Test Cases LLMs Can Generate

Large Language Models (LLMs) are capable of generating a variety of test cases, supporting comprehensive software testing. By analyzing application requirements, user stories, and past test data, LLMs can automatically create relevant test cases. This helps cover a broad range of software functionality, including both common and complex scenarios.

Below is a table outlining the types of test cases LLMs can generate and their purposes:

By leveraging LLMs for automated test generation, teams can significantly reduce manual efforts, improve coverage, and ensure faster releases with higher software quality.

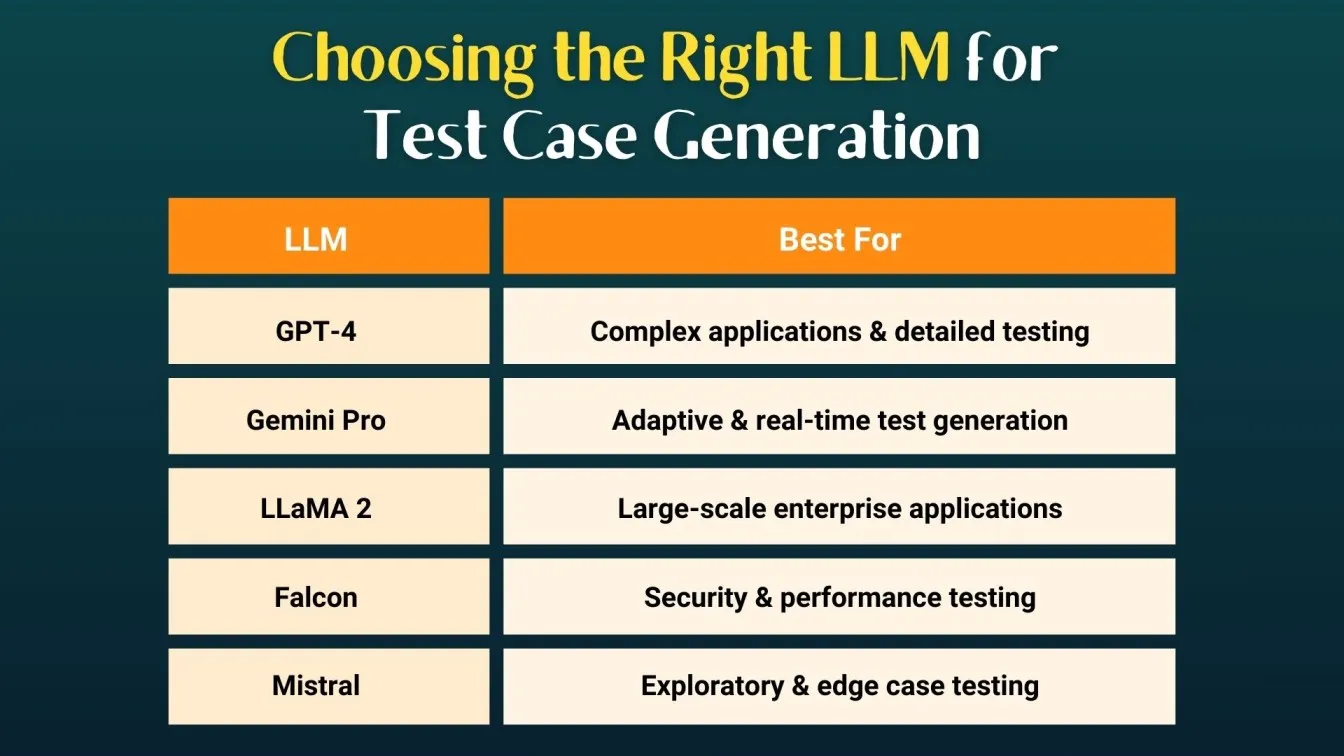

Choosing the Right LLM for Test Case Generation

When selecting a Large Language Model (LLM) for test case generation, understanding each model's strengths and ideal use cases can help you make an informed decision. LLMs, including those used to build AI applications and agents, are regularly assessed with rigorous benchmarks and detailed evaluation metrics, which offer a useful starting point for comparison.

Here are some of the most popular LLMs available and how they can benefit your testing process.

GPT-4

Best for: Complex applications and detailed testing.

GPT-4 is an advanced LLM capable of generating highly detailed and context-aware test cases. Its superior natural language understanding and reasoning abilities make it ideal for complex applications that require nuanced test scenarios. Additionally, it supports multi-modal capabilities, making it versatile across various testing needs.

Gemini Pro

Best for: Adaptive and real-time test generation.

Gemini Pro offers exceptional adaptability, making it suitable for dynamic applications requiring frequent updates. It handles large-scale data effectively and can be fine-tuned for specific testing tasks. Its real-time reasoning ability ensures quick adjustments based on live feedback.

LLaMA 2

Best for: Large-scale enterprise applications.

LLaMA 2 is a cost-effective model with openly available weights (released under Meta's community license) that supports extensive customization. It is ideal for companies needing domain-specific test case generation. Its scalability and flexibility make it a great choice for enterprise-level applications.

Falcon

Best for: Security and performance testing.

Falcon is noted for its contextual understanding and is often described as producing relatively few hallucinations. It can be particularly effective for generating test cases that probe system vulnerabilities, making it a strong choice for security and performance testing.

Mistral

Best for: Exploratory and edge case testing.

Mistral is designed for fast, efficient processing and test generation. Its low memory footprint and speed make it well suited to exploratory testing, especially when hunting for unexpected bugs or edge cases.

Each LLM has its own strengths, so selecting the right one depends on your application's complexity, testing scope, and budget. Carefully evaluating these factors will help you maximize your test coverage and improve software quality through automated test case generation.

Step-by-Step Guide to Implement LLM-Powered Test Case Generation in 2025

Implementing LLM-powered test case generation can significantly enhance your software testing process by automating test creation, improving coverage, and reducing manual effort. Follow this comprehensive step-by-step guide to seamlessly integrate LLMs into your testing pipeline.

STEP 1: Define Your Objectives

Start by clearly defining your goals for using LLMs. Identify whether you aim to generate functional, performance, regression, or security test cases. Understanding your specific needs will guide the model selection and implementation process.

STEP 2: Choose the Right LLM

Select an LLM that aligns with your project’s requirements. Options like GPT-4, Gemini Pro, LLaMA 2, Falcon, or Mistral are excellent choices depending on your budget, scalability, and desired output. Evaluate their performance using sample data before making a final decision.

STEP 3: Prepare Your Data

- Collect application data such as API documentation, user stories, and functional requirements.

- Ensure data quality by removing inconsistencies and duplicates.

- Format the data in a readable structure using JSON, CSV, or plain text.

- Consider anonymizing sensitive information to ensure privacy compliance.
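The data-preparation steps above might look like this in Python: deduplicate user stories, mask e-mail addresses as a simple form of anonymization, and serialize the result as JSON. The record fields (`id`, `story`) and the e-mail regex are illustrative assumptions; real anonymization usually needs more than one pattern.

```python
import json
import re

# Simple e-mail pattern; real PII scrubbing needs broader rules.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def prepare_stories(stories: list[str]) -> str:
    """Deduplicate, anonymize, and serialize user stories as a JSON array."""
    seen: set[str] = set()
    records = []
    for story in stories:
        text = " ".join(story.split())  # normalize whitespace
        if not text or text.lower() in seen:
            continue  # drop empty entries and case-insensitive duplicates
        seen.add(text.lower())
        records.append({
            "id": f"US-{len(records) + 1}",
            "story": EMAIL.sub("<EMAIL>", text),
        })
    return json.dumps(records, indent=2)
```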

STEP 4: Set Up Your Environment

- Install the required libraries, such as Hugging Face transformers or LangChain.

- Ensure compatibility with your current tech stack.

- Set up API keys for external LLMs like GPT-4 or Gemini Pro.

- Create a secure environment to manage your data and models.
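One way to keep API keys out of source code is to read them from environment variables and fail fast when they are missing. The variable names below (`LLM_PROVIDER`, `OPENAI_API_KEY`, `GEMINI_API_KEY`) and the `LLMConfig` class are assumptions for illustration; use whatever names your provider's SDK expects.

```python
import os
from dataclasses import dataclass
from typing import Mapping

# Hypothetical mapping from provider name to the environment variable holding its key.
KEY_VARS = {"openai": "OPENAI_API_KEY", "gemini": "GEMINI_API_KEY"}


@dataclass(frozen=True)
class LLMConfig:
    provider: str
    api_key: str

    def __repr__(self) -> str:
        # Mask the secret so it never appears in logs or tracebacks.
        return f"LLMConfig(provider={self.provider!r}, api_key='***')"


def load_config(env: Mapping[str, str] = os.environ) -> LLMConfig:
    """Read provider and key from the environment, failing fast if either is unusable."""
    provider = env.get("LLM_PROVIDER", "openai").lower()
    if provider not in KEY_VARS:
        raise ValueError(f"Unsupported LLM_PROVIDER: {provider}")
    key = env.get(KEY_VARS[provider])
    if not key:
        raise RuntimeError(f"Set {KEY_VARS[provider]} before running test generation")
    return LLMConfig(provider, key)
```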

STEP 5: Fine-Tune the LLM

- If required, fine-tune the LLM using domain-specific data.

- Apply transfer learning to train the model on application-specific terminology.

- Regularly validate the model's accuracy using benchmark datasets.

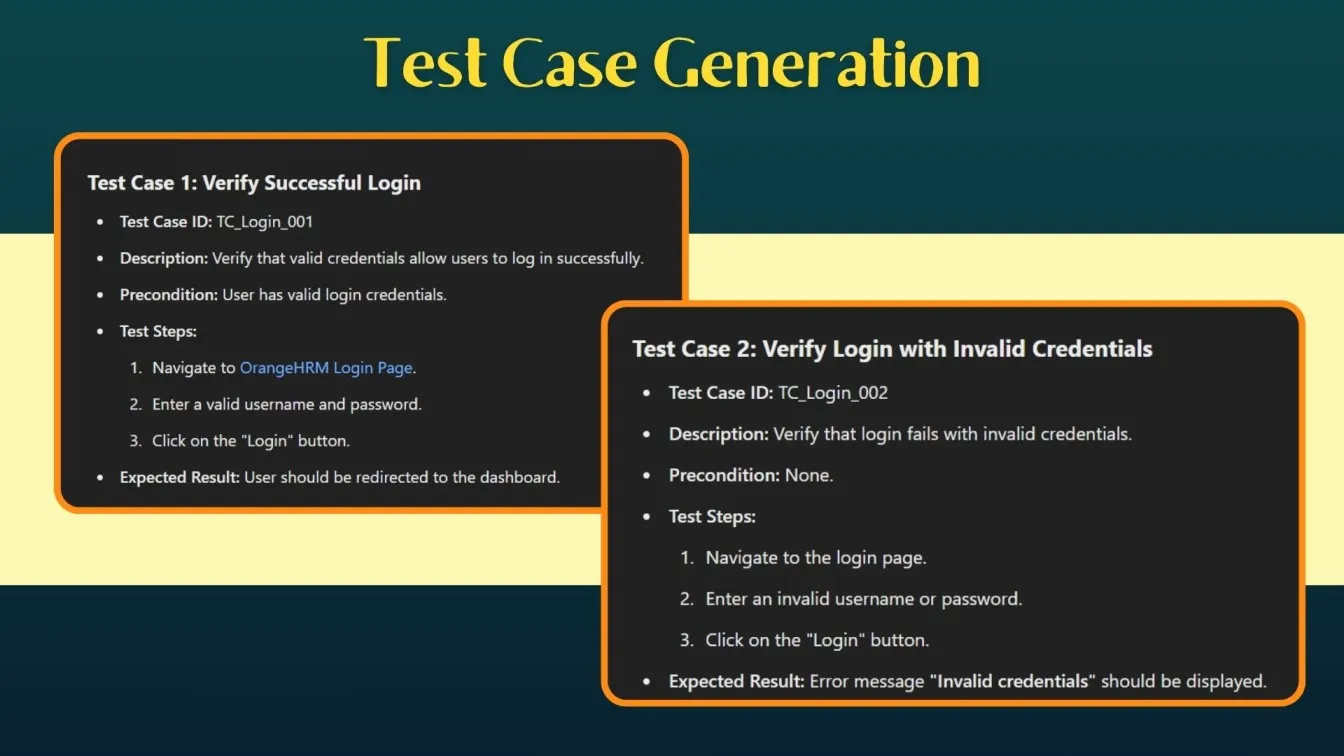

STEP 6: Generate Test Cases

- Provide clear prompts that include context and expected outcomes.

- Use LLMs to generate a variety of test scenarios such as positive, negative, edge, and boundary cases.

- Implement prompt engineering techniques to refine test case generation.
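A prompt template keeps the context, expected outcomes, and requested scenario types consistent across runs. The template wording and the `build_prompt` helper below are illustrative assumptions, not a prescribed format.

```python
SCENARIOS = ("positive", "negative", "edge", "boundary")

TEMPLATE = """You are a QA engineer writing pytest tests.

Context:
{context}

Expected behavior:
{expected}

Write one test for each scenario type: {scenarios}.
Name each test test_<scenario>_<short_description> and return only Python code."""


def build_prompt(
    context: str, expected: list[str], scenarios: tuple[str, ...] = SCENARIOS
) -> str:
    """Fill the template with feature context and a bulleted list of expected outcomes."""
    bullets = "\n".join(f"- {item}" for item in expected)
    return TEMPLATE.format(
        context=context.strip(), expected=bullets, scenarios=", ".join(scenarios)
    )
```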

STEP 7: Validate and Optimize

- Review the generated test cases for relevance and accuracy.

- Collaborate with QA engineers and developers for feedback.

- Continuously improve prompts and fine-tuning for better results.

- Implement automated validation pipelines to detect anomalies.

STEP 8: Integrate with Your Test Management System

- Connect the LLM output to tools like Jira, TestRail, or Zephyr.

- Automate test execution using frameworks and tools like Selenium, Playwright, or Postman.

- Monitor and analyze test results for insights and improvements.
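Many test management tools, TestRail among them, can import test cases from CSV with a column-mapping step. The sketch writes generated cases to CSV with Python's `csv` module; the column names (`Title`, `Preconditions`, `Steps`, `Expected Result`) are assumptions, so map them to your tool's fields during import.

```python
import csv
import io

FIELDS = ["Title", "Preconditions", "Steps", "Expected Result"]


def to_csv(cases: list[dict[str, str]]) -> str:
    """Serialize generated test cases to CSV text, one row per case."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for case in cases:
        # Missing fields become empty strings; unknown keys are dropped.
        writer.writerow({field: case.get(field, "") for field in FIELDS})
    return buffer.getvalue()
```

The `csv` module quotes fields that contain commas or line breaks, so multi-line steps survive the round trip.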

STEP 9: Monitor and Maintain

- Set up monitoring to track the model's performance.

- Implement regular model updates and retraining.

- Use feedback loops to improve test case quality.

- Ensure compliance with data privacy regulations.
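Monitoring can start with something as simple as tracking what fraction of generated tests survive review and flagging a drop. The `AcceptanceMonitor` class, window size, and threshold below are hypothetical choices for illustration.

```python
from collections import deque


class AcceptanceMonitor:
    """Track the share of generated tests accepted over a sliding window."""

    def __init__(self, window: int = 50, threshold: float = 0.6) -> None:
        self.results: deque[bool] = deque(maxlen=window)  # oldest results drop off
        self.threshold = threshold

    def record(self, accepted: bool) -> None:
        self.results.append(accepted)

    @property
    def rate(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 1.0

    def needs_attention(self) -> bool:
        """True once the window is full and the acceptance rate falls below the threshold."""
        return len(self.results) == self.results.maxlen and self.rate < self.threshold
```

A falling rate after a model or prompt change is a cue to revisit the prompts or schedule retraining.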

STEP 10: Measure Success

- Track key metrics like test coverage, defect detection rate, and execution time.

- Compare manual vs. LLM-generated test case effectiveness.

- Gather feedback from stakeholders to evaluate satisfaction.
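These metrics are simple ratios. Using one common definition and invented numbers: if a suite exposes 18 of the 24 defects eventually found in a release, its defect detection rate is 18 / 24 = 75%. The sketch computes such ratios for manual and LLM-generated suites side by side; the `SuiteStats` fields are assumptions about what your tooling records.

```python
from dataclasses import dataclass


@dataclass
class SuiteStats:
    name: str
    defects_found: int     # defects this suite exposed
    total_defects: int     # all defects known for the release
    covered_lines: int
    executable_lines: int
    runtime_s: float

    @property
    def detection_rate(self) -> float:
        return self.defects_found / self.total_defects if self.total_defects else 0.0

    @property
    def coverage(self) -> float:
        return self.covered_lines / self.executable_lines if self.executable_lines else 0.0


def compare(*suites: SuiteStats) -> str:
    """Render a small plain-text comparison table."""
    rows = [f"{'suite':<10}{'detect':>8}{'cover':>8}{'time(s)':>9}"]
    for s in suites:
        rows.append(f"{s.name:<10}{s.detection_rate:>8.0%}{s.coverage:>8.0%}{s.runtime_s:>9.1f}")
    return "\n".join(rows)
```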

By following this step-by-step guide, you can effectively implement LLM-powered test case generation in 2025. Automating test case creation will enhance productivity, improve software quality, and accelerate your development lifecycle. Start small, monitor results, and gradually scale up for long-term success. Embrace the power of LLMs and transform your software testing strategy today!

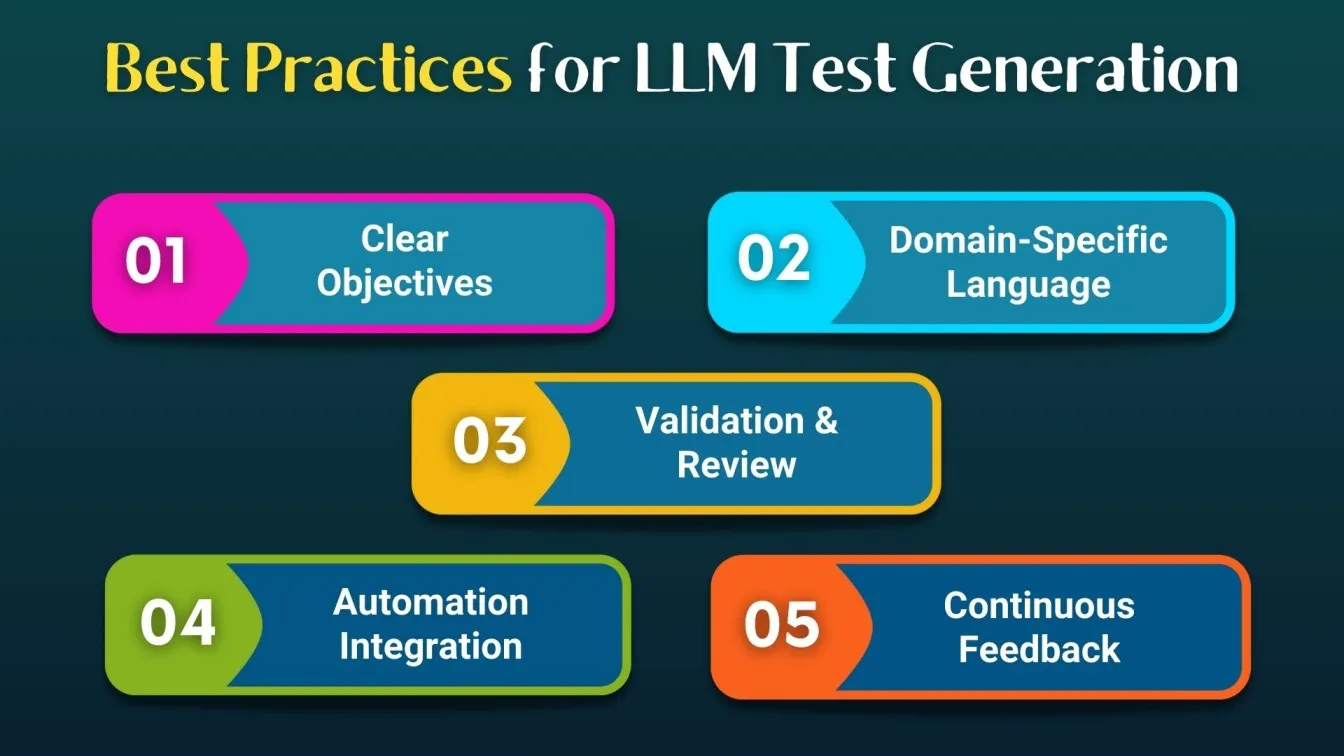

Best Practices for Effective LLM Test Generation

To generate effective test cases using Large Language Models (LLMs), follow these practices:

- Clear Context and Objectives: Provide detailed prompts with specific objectives to generate relevant test cases.

- Domain-Specific Language: Use industry-relevant terminology and scenarios for accurate results.

- Systematic Test Design: Apply techniques like boundary value analysis and equivalence partitioning for comprehensive coverage.

- Prompt Chaining: Use iterative prompts to refine and improve test case quality.

- Validation and Review: Manually review generated tests and consult domain experts for accuracy.

- Fine-Tuning: Customize LLMs to reduce bias and enhance domain-specific understanding.

- Continuous Feedback: Establish a feedback loop for model improvement based on real-world results.

- Compliance and Standards: Ensure adherence to relevant testing standards and guidelines.

- Automation Integration: Incorporate LLMs into CI/CD pipelines for dynamic test generation and execution.

- Performance Monitoring: Track accuracy, diversity, and success rates to optimize test generation.

Following these practices ensures reliable, efficient, and high-quality LLM-driven test generation.
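Boundary value analysis and equivalence partitioning are easy to make concrete, and the resulting values can be handed to the LLM as required inputs. A minimal sketch for an integer field with an inclusive valid range; the 18 to 65 range in the example is invented.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Classic boundary values for an inclusive range: each bound and its neighbors."""
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


def equivalence_classes(low: int, high: int) -> dict[str, int]:
    """One representative value per partition: below, inside, and above the range."""
    return {"below": low - 10, "valid": (low + high) // 2, "above": high + 10}
```

For an age field valid from 18 to 65, `boundary_values(18, 65)` yields `[17, 18, 19, 64, 65, 66]`; asking the model for one test per value steers it toward the edges instead of leaving them to chance.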

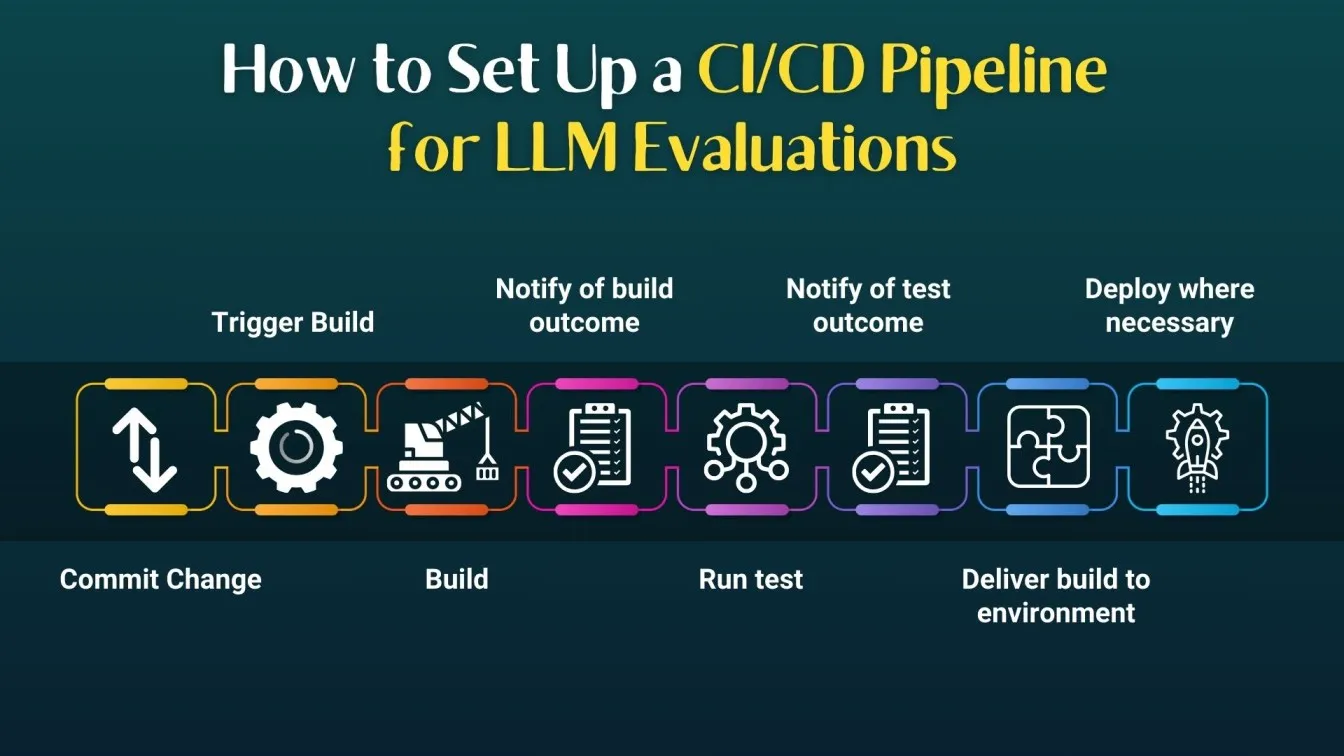

Integrating LLMs into Your CI/CD Pipeline

Integrating Large Language Models (LLMs) into your CI/CD pipeline can enhance automated testing and streamline software delivery. Begin by incorporating LLMs for test case generation, ensuring dynamic and comprehensive coverage across various scenarios. Automate prompt-based test creation and validation by connecting LLM APIs to your testing framework. Use feedback loops from test results to refine the LLM’s performance. Implement LLMs for intelligent code reviews, detecting anomalies, and suggesting improvements. Additionally, leverage LLMs for generating detailed reports, analyzing logs, and identifying root causes of failures. For optimal results, ensure model outputs are monitored for accuracy and consistency. Establish checkpoints for manual verification in critical stages to prevent false positives. By embedding LLMs into the CI/CD workflow, teams can accelerate release cycles, enhance test quality, and achieve faster issue resolution, driving overall software development efficiency.
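The manual-verification checkpoint can be expressed as a small gating rule in the pipeline: generated tests that exercise critical code go to human review, while others can merge automatically once they pass. The glob patterns and the `route` function are hypothetical; adapt them to your repository layout.

```python
from fnmatch import fnmatch

# Hypothetical glob patterns for code where a human must approve generated tests.
CRITICAL = ["src/payments/*", "src/auth/*"]


def route(target_files: list[str], tests_passed: bool) -> str:
    """Decide what the pipeline does with a batch of LLM-generated tests."""
    if not tests_passed:
        return "reject"  # never merge generated tests that fail
    if any(fnmatch(path, pattern) for path in target_files for pattern in CRITICAL):
        return "manual-review"  # critical stage: require a human checkpoint
    return "auto-merge"
```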

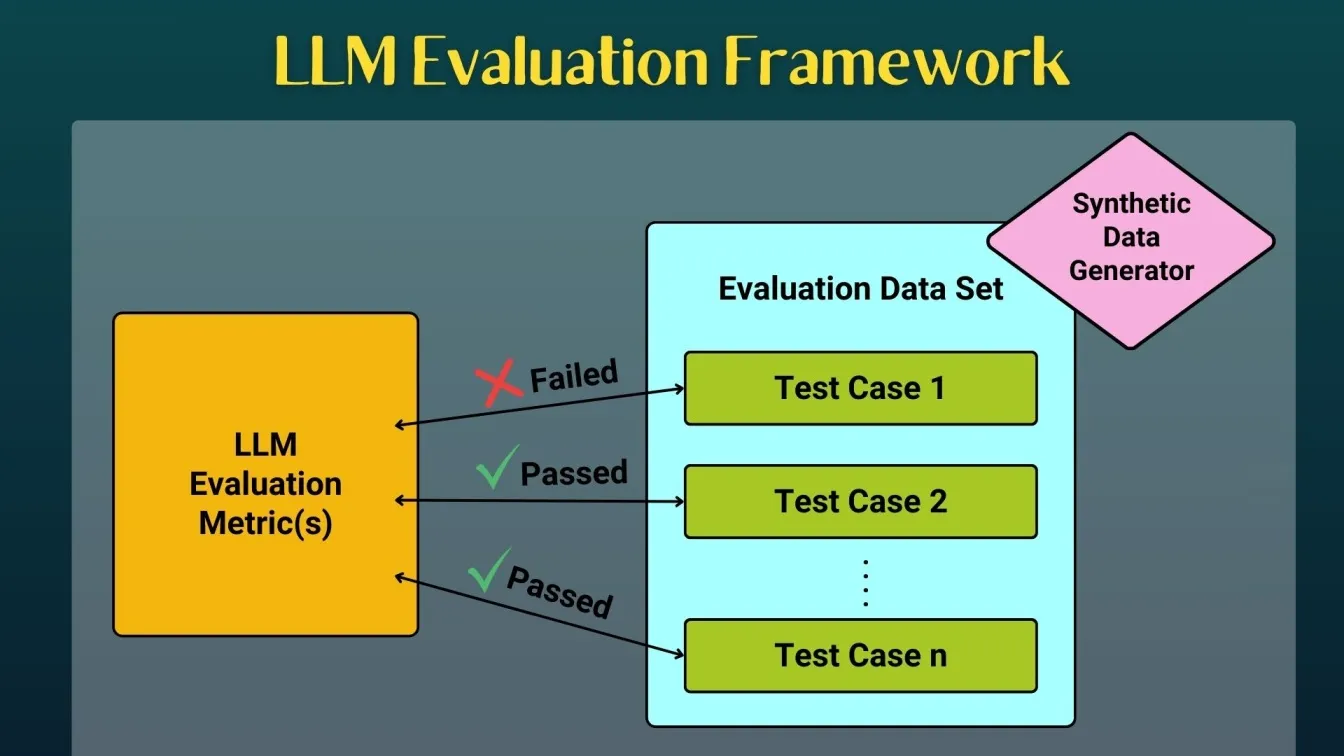

Evaluating the Quality of AI-Generated Test Cases

Ensuring the accuracy, relevance, and coverage of AI-generated test cases is crucial for effective software testing. Evaluation involves both automated and manual validation to improve test quality and identify gaps.

Key Evaluation Metrics:

- Accuracy: Measures how well test cases align with requirements.

- Coverage: Evaluates the extent of application functionality tested.

- Relevance: Assesses whether test cases reflect real-world scenarios.

- Uniqueness: Ensures test cases are diverse and not redundant.

Evaluation Techniques:

- Use automated validation tools to compare test outputs against expected results.

- Implement integration-level test case generation to validate system interactions.

- Leverage unit test generators to check code-level correctness.

- Utilize AI-driven code assistants for test breakdown and optimization.
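Two of the metrics above, uniqueness and coverage of requirements, can be approximated cheaply. The sketch flags near-duplicate test descriptions with `difflib.SequenceMatcher` and measures how many requirement IDs are referenced by at least one test. The 0.9 similarity threshold and the `REQ-n` ID convention are assumptions; the pairwise comparison is quadratic, so it suits modest suites.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations

REQ_ID = re.compile(r"REQ-\d+")


def near_duplicates(tests: list[str], threshold: float = 0.9) -> list[tuple[int, int]]:
    """Index pairs of test descriptions whose text similarity meets the threshold."""
    return [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(tests), 2)
        if SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold
    ]


def requirement_coverage(tests: list[str], requirements: set[str]) -> float:
    """Fraction of requirement IDs mentioned by at least one test."""
    referenced = {rid for t in tests for rid in REQ_ID.findall(t)}
    return len(referenced & requirements) / len(requirements) if requirements else 0.0
```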

By integrating LLM-based testing, teams can automate test case creation, analyze multiple programming languages, detect vulnerabilities, and enhance software quality efficiently.

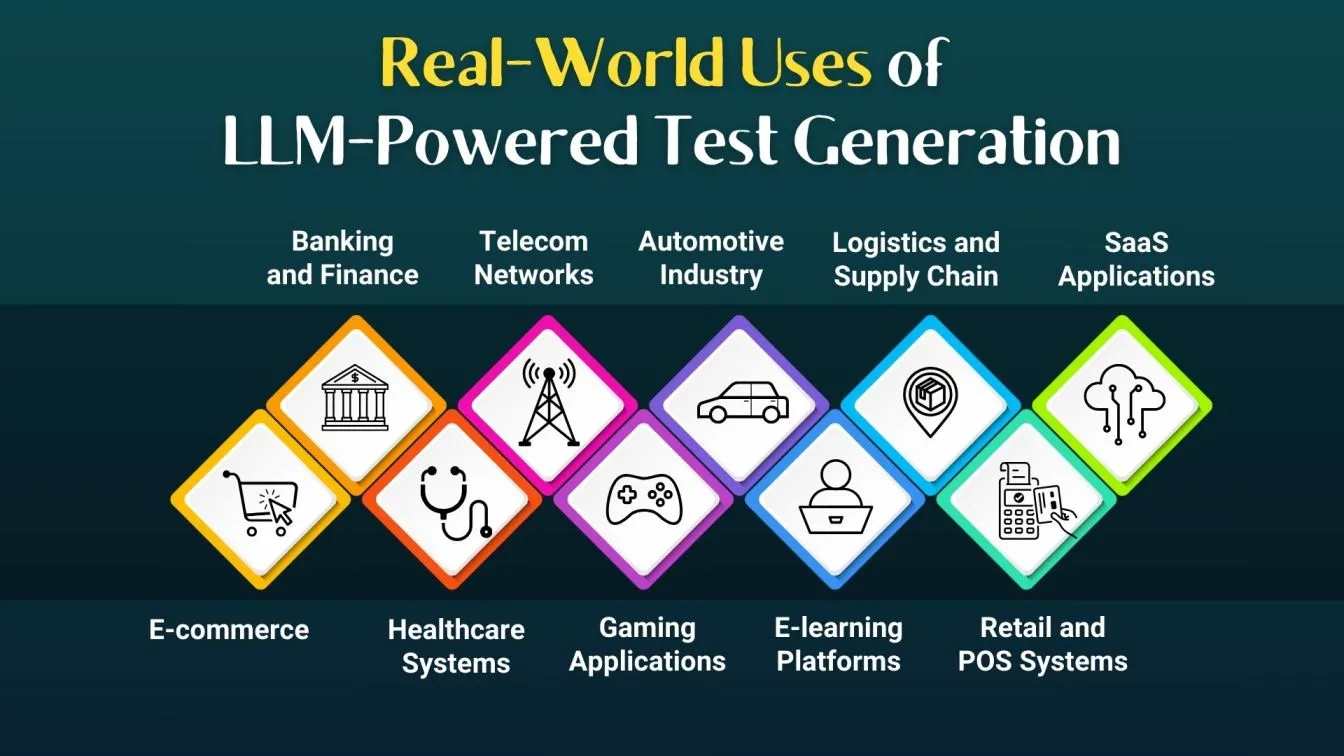

Real-World Examples of LLM-Powered Test Generation

LLMs are revolutionizing software testing by automating test case generation across various industries. Their ability to create comprehensive and context-aware test cases ensures better software quality, faster releases, and improved user experiences.

- E-commerce Platforms: Validate payment gateways, inventory management, and cross-device user experience.

- Banking and Finance: Ensure secure transactions, compliance, and fraud detection.

- Healthcare Systems: Test patient data management, telehealth platforms, and HIPAA compliance.

- Telecom Networks: Evaluate network connectivity, data transmission, and user authentication.

- Gaming Applications: Simulate gameplay scenarios, test performance, and check for multiplayer issues.

- Automotive Industry: Validate autonomous driving algorithms and simulate accident scenarios.

- E-learning Platforms: Generate tests for module completion, progress tracking, and adaptive learning.

- Logistics and Supply Chain: Test route management, inventory tracking, and real-time updates.

- Retail and POS Systems: Test payment processing, discounts, and seamless customer interactions.

- SaaS Applications: Ensure API stability, software integrations, and data synchronization.

By leveraging LLM-powered test generation, organizations can enhance testing efficiency, minimize errors, and deliver high-quality software across diverse applications.

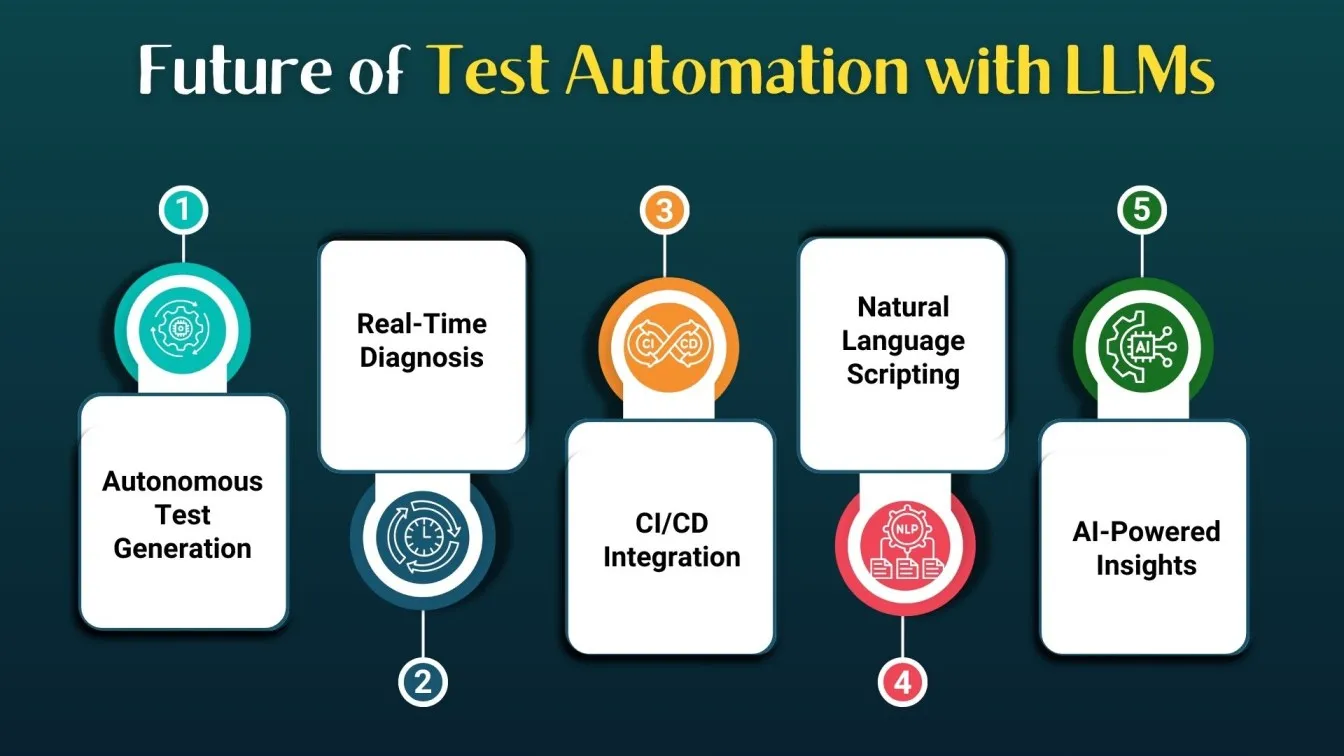

Future of Test Automation with LLMs

The future of test automation with LLMs is set to revolutionize software testing by making it more intelligent, efficient, and scalable. AI-driven models will automate complex test scenarios, improve test coverage, and reduce human intervention. LLM-based tools will enhance unit testing, integration testing, and mutation testing, ensuring higher accuracy and reliability. With self-learning AI, test automation will evolve to predict failures, optimize workflows, and adapt to code changes dynamically. As AI-powered test case generation advances, software teams will achieve faster releases, improved security, and seamless bug detection, ultimately transforming the software development lifecycle.

Conclusion

LLM-powered test case generation is transforming software testing by improving efficiency, accuracy, and test coverage. Traditional methods often struggle to scale, but LLMs, LLM agents, and systematic LLM evaluation enable automated test case creation, execution, and optimization. Combined with test case management tools, they help teams streamline workflows while reducing errors.

With AI-driven advancements such as GPT-4, GPT-4 Turbo, and GPT-3.5 Turbo, automated testing is more accessible than ever. Integrating AI into test case software simplifies test case analysis and clarifies what each test case is meant to verify. With proper review in place, LLMs support more reliable releases, fewer outages, and greater efficiency.

People Also Ask

How can LLMs identify missing test cases to improve test coverage?

LLMs analyze application requirements, existing test cases, and historical test data to detect gaps in test coverage. By comparing expected and tested functionalities, they suggest additional test scenarios, including edge cases and untested paths.

Can LLMs generate edge cases and negative test scenarios effectively?

Yes, LLMs can generate edge cases and negative test scenarios by analyzing input constraints, boundary conditions, and exception handling mechanisms. They identify potential failure points and create robust test cases to validate application stability.

Are LLM-generated test cases reliable for regulatory or compliance testing?

LLM-generated test cases can be reliable for regulatory and compliance testing when fine-tuned with domain-specific rules and validation criteria. However, manual review and expert validation are necessary to ensure adherence to strict industry standards.

What role does domain-specific fine-tuning play in improving LLM-generated test cases?

Domain-specific fine-tuning enhances test case relevance and accuracy by training LLMs on industry-specific data, coding standards, and regulatory requirements. This ensures that generated test cases align with the unique needs of the application and industry.

Can LLMs identify gaps in existing test suites and suggest improvements?

Yes, LLMs can analyze test execution results, detect patterns in failed cases, and identify missing or redundant test scenarios. They suggest improvements by recommending additional test cases, optimizing assertions, and refining coverage criteria.
