End-to-end testing plays a pivotal role in ensuring the functionality and reliability of web applications, but as software complexity grows, smarter and faster testing methods become essential.

AI-powered solutions can optimize testing processes, ensuring that tests are more accurate, reliable, and scalable. By using technologies such as Machine Learning (ML) and Large Language Models (LLMs), AI is improving how tests are created, executed, and analyzed.

The future of end-to-end testing is all about combining automation with intelligent analysis to streamline testing workflows.

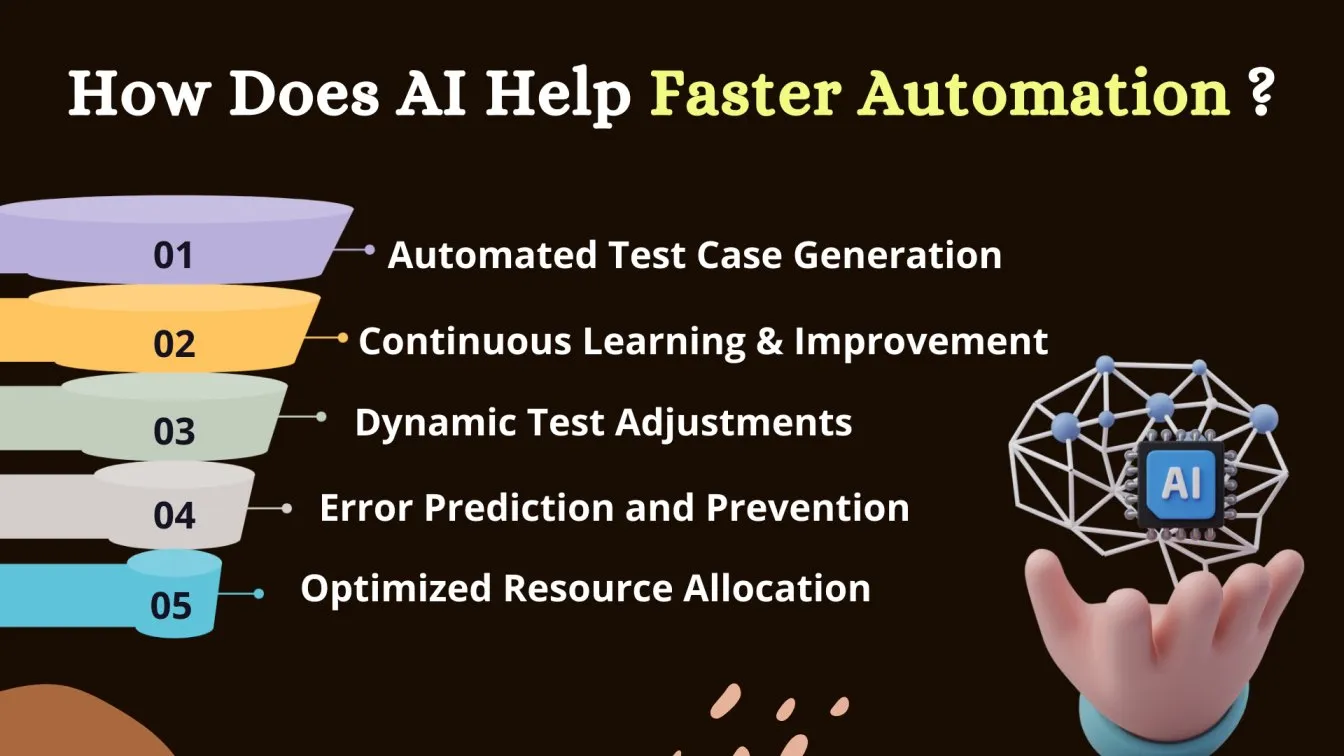

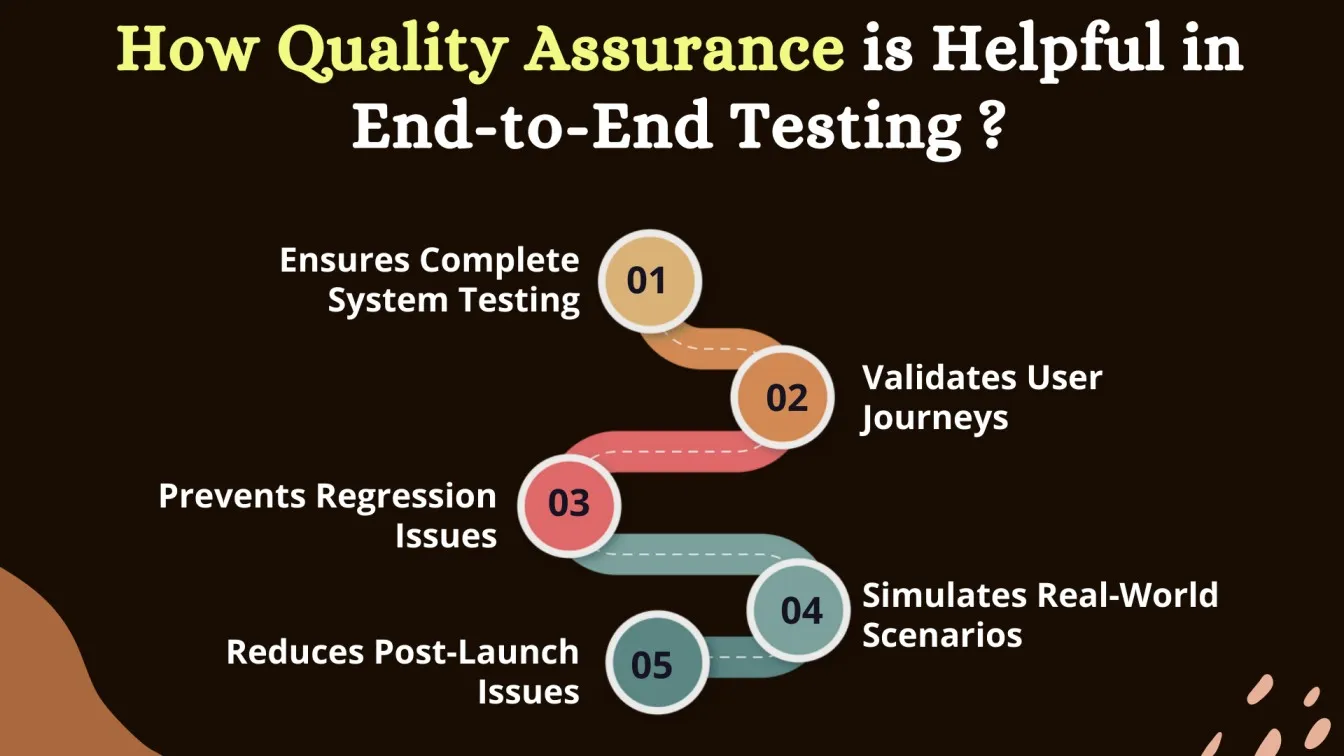

AI is revolutionizing how we approach Quality Assurance (QA) by making it possible to predict issues, detect bugs earlier, and ensure that applications perform as expected under real-world conditions. From automated test case generation to real-time test optimizations, Artificial Intelligence technology is laying the foundation for a more efficient and effective approach to testing.

End-to-end testing is a crucial practice in software testing because it verifies that an application functions correctly from start to finish. Automating these tests helps catch broken workflows early, and by following end-to-end testing best practices, teams can streamline testing, improve coverage, and enhance quality.

What's next? Keep scrolling to find out:

🚀An overview of end-to-end testing and its importance in ensuring seamless user experiences.

🚀How AI improves test coverage by identifying gaps and enhancing test accuracy.

🚀The role of AI in improving quality assurance by reducing errors and speeding up feedback.

🚀How AI automates realistic test data generation, saving time and improving test quality.

🚀Using LLMs to automatically generate and optimize test cases, improving efficiency and coverage.

What is End-to-end Testing?

End-to-end (E2E) testing is a software testing methodology that involves testing an application from start to finish to ensure all integrated components work as expected. The primary goal of E2E testing is to simulate real-world user scenarios to verify that all systems, both front-end and back-end, function correctly.

This type of testing ensures that the application behaves as intended across various user interactions, such as logging in, performing transactions, or interacting with external services like APIs or databases.

In E2E tests, the focus is on verifying the complete flow of the application, from the user interface (UI) to the database and other integrated systems.

It ensures that data is accurately passed between components, and the application delivers the expected outcomes. This level of testing helps identify issues that may not be visible during unit or integration testing, as it checks the entire system's behavior in a real-world context.
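To make the idea concrete, here is a minimal sketch of an end-to-end check written with only the Python standard library. The toy order service, its `/orders` URL, and the `place_order_end_to_end` helper are all hypothetical and exist only for illustration; a real suite would more likely drive a browser through a dedicated E2E framework. What matters is the shape of the test: send a real request through the application's entry point, then confirm the data actually landed in the database.

```python
import json
import sqlite3
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical toy application: every POST stores an order in SQLite.
db = sqlite3.connect(":memory:", check_same_thread=False)
db.execute("CREATE TABLE orders (item TEXT, qty INTEGER)")
db_lock = threading.Lock()


class OrderHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers["Content-Length"])
        order = json.loads(self.rfile.read(length))
        with db_lock:
            db.execute("INSERT INTO orders VALUES (?, ?)", (order["item"], order["qty"]))
            db.commit()
        body = json.dumps({"status": "created"}).encode()
        self.send_response(201)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the test output quiet


def place_order_end_to_end(item, qty):
    """Start the server, send a real HTTP request, and read back the stored rows."""
    server = HTTPServer(("127.0.0.1", 0), OrderHandler)  # port 0 lets the OS pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        request = urllib.request.Request(
            f"http://127.0.0.1:{server.server_port}/orders",
            data=json.dumps({"item": item, "qty": qty}).encode(),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        # An empty ProxyHandler makes sure the request goes straight to localhost.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(request, timeout=5) as response:
            status = response.status
            payload = json.loads(response.read())
    finally:
        server.shutdown()
        server.server_close()
    with db_lock:
        rows = db.execute("SELECT item, qty FROM orders").fetchall()
    return status, payload, rows
```

A unit test would call the handler logic directly; this sketch instead crosses the network boundary and the storage layer in one pass, which is the kind of problem E2E tests are meant to surface.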

E2E testing frameworks make these tests practical to write and run, and many companies automate them to simulate real-world user interactions. Working through concrete end-to-end testing examples is a good way to understand the strategy behind it. With E2E automation in place, QA teams can test more efficiently while keeping integrations seamless and reliable.

Using AI to Enhance Test Coverage and Accuracy

Enhancing Test Coverage with AI

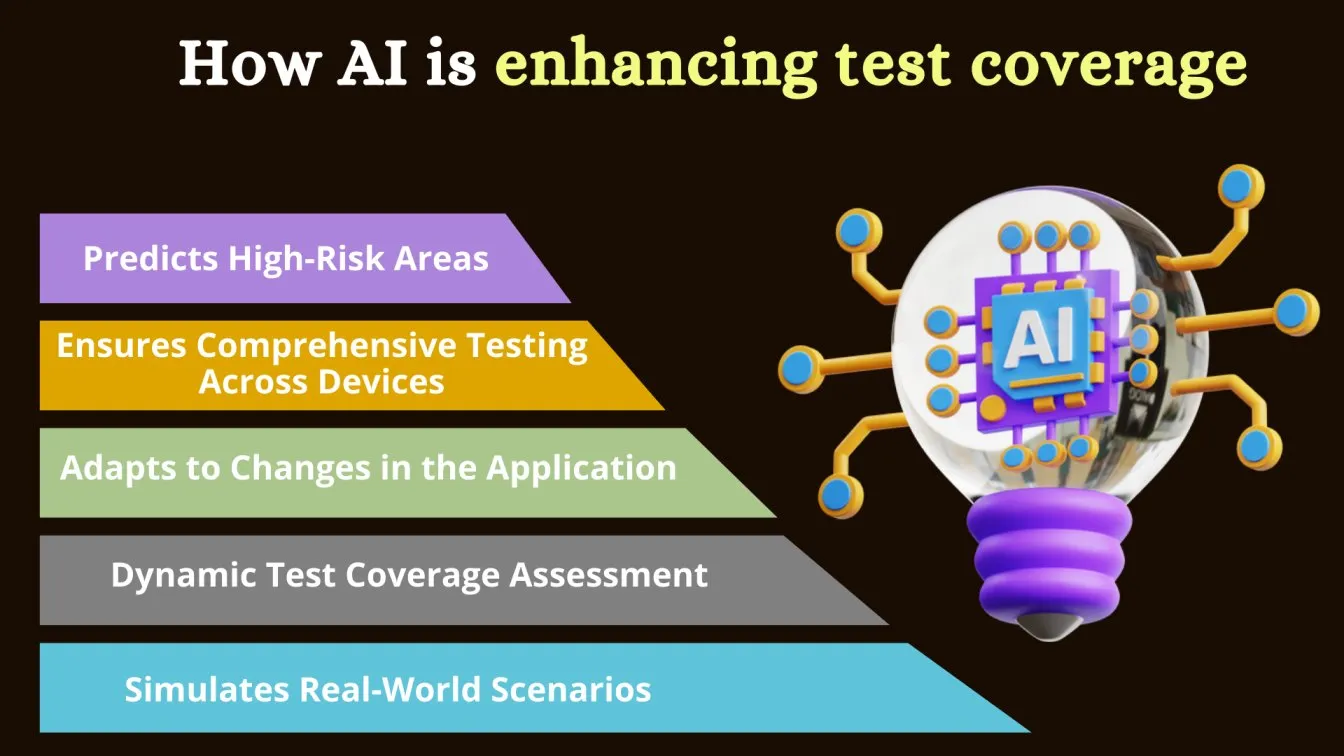

AI can significantly enhance test coverage by analyzing application code and identifying areas that may be under-tested. It uses machine learning algorithms to predict high-risk areas and automatically generates test cases for scenarios that might otherwise be overlooked.

By doing so, AI ensures comprehensive testing across different configurations, environments, and user workflows, helping to maximize test coverage. AI's ability to adapt and identify potential gaps in test coverage ensures that all aspects of the application are thoroughly tested, even those that are hard to detect with traditional testing methods.
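As a rough illustration of gap detection, the sketch below ranks under-covered modules by a simple risk score. Everything here is an assumption made for the example: the `ModuleStats` fields, the 80% coverage floor, and the hand-written score, which only stands in for what a trained model might produce.

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    line_coverage: float  # fraction of lines exercised by tests, 0.0 to 1.0
    recent_changes: int   # commits that touched the module recently


def rank_coverage_gaps(modules, coverage_floor=0.8):
    """Return modules below the coverage floor, riskiest first.

    The score (uncovered fraction times change count) is a heuristic
    placeholder, not a machine learning model.
    """
    gaps = [m for m in modules if m.line_coverage < coverage_floor]
    return sorted(
        gaps,
        key=lambda m: (1.0 - m.line_coverage) * (1 + m.recent_changes),
        reverse=True,
    )
```

Feeding it coverage reports and commit counts gives a shortlist of modules where new tests are likely to pay off first.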

Improving Accuracy with AI

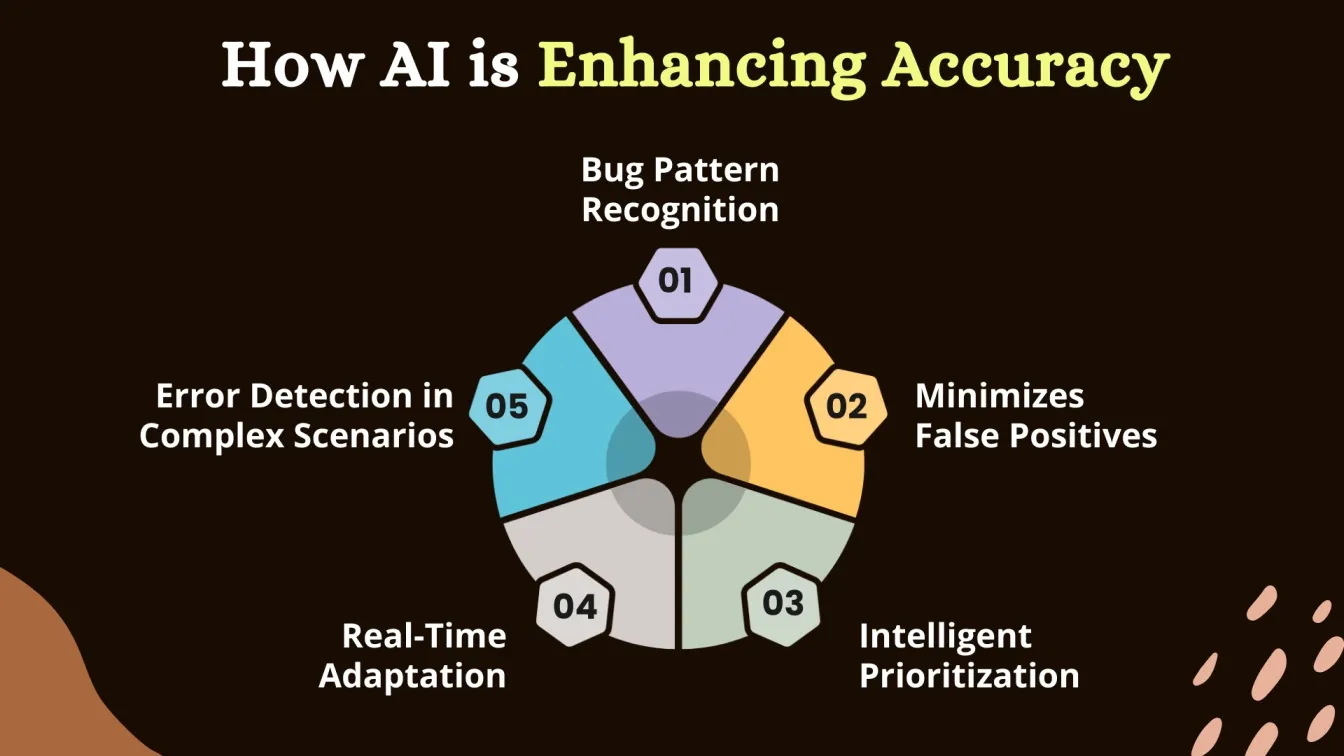

AI enhances testing accuracy by automating the detection of issues using historical data and real-time feedback. Machine learning models can analyze past test runs and usage patterns, identifying complex patterns in defects that might be overlooked by human testers.

AI can prioritize tests based on the likelihood of failure, ensuring that the most critical areas are tested first. Furthermore, AI-powered tools can adapt to changes in the application, reducing false positives and providing more reliable results. This continuous adaptation leads to precise and trustworthy test outcomes, improving the overall effectiveness of automated testing.
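Prioritizing by likelihood of failure can start very simply. The sketch below orders tests by a smoothed historical failure rate; the function names and the history format are assumptions for illustration, and a production tool would likely use richer signals than pass/fail counts.

```python
def failure_rate(history, smoothing=1):
    """Laplace-smoothed failure rate from a list of booleans (True = passed)."""
    failures = sum(1 for passed in history if not passed)
    return (failures + smoothing) / (len(history) + 2 * smoothing)


def prioritize(test_history):
    """Order test names so the ones most likely to fail run first."""
    return sorted(
        test_history,
        key=lambda name: failure_rate(test_history[name]),
        reverse=True,
    )
```

The smoothing term keeps a test with only one or two recorded runs from being ranked as either perfectly safe or certain to fail.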

How AI Enhances Quality Assurance in Automated Testing

Artificial Intelligence (AI) is revolutionizing quality assurance (QA) in automated testing by improving both efficiency and accuracy. With AI-powered chatbots, testers can now automate routine tasks, such as reporting test results or responding to common queries, which reduces manual effort and speeds up the testing process.

Additionally, generative artificial intelligence can generate test cases automatically, ensuring broader test coverage and the detection of edge cases that might otherwise be missed. By using Artificial Intelligence tools, teams can now simulate real-world user behaviors more effectively, ensuring that the application performs optimally across various scenarios and environments.

AI enhances quality assurance testing by analyzing large volumes of testing data to identify patterns and recurring issues that manual testing methods may miss. This approach to quality assurance helps uncover subtle defects and ensures comprehensive software quality assurance.

Integrating AI-powered solutions into the testing pipeline improves the speed and reliability of automated testing processes, reducing the risk of bugs reaching production and speeding up the testing cycle, leading to higher-quality software.

Automating Test Data Generation with AI

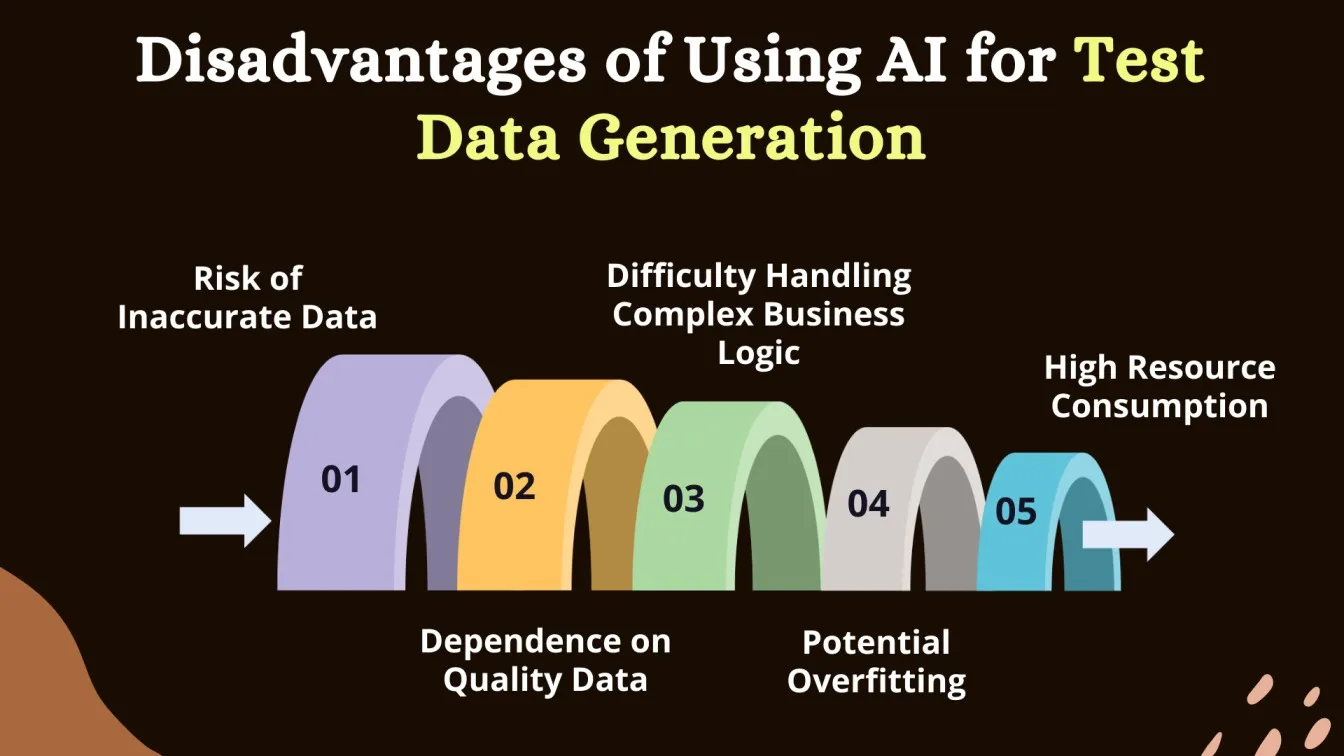

Automating test data generation is a critical aspect of efficient software testing, and AI can significantly enhance this process by eliminating repetitive tasks and reducing manual effort.

With AI-driven tools, the process is streamlined as machine learning models analyze the system’s structure, simulating a wide range of real-world user interactions. This allows AI to automatically generate data that reflects various use cases and potential scenarios, ensuring comprehensive coverage of test cases without the risk of missing critical edge cases.

Furthermore, AI provides predictive analytics, helping to anticipate and create test data for scenarios that might not be immediately obvious. It can identify potential issues by analyzing existing data patterns and generating real-time insights into the areas of the application that need more focus.

This level of intelligence ensures testing efficiency by continuously adapting to changes in the application, thereby reducing human errors and enhancing the continuous testing process.

Additionally, AI provides valuable insights that can be leveraged to make data-driven decisions, ensuring that the testing process remains aligned with the latest software developments and provides actionable insights for improving application performance.
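Before reaching for a model, it helps to see what automated test data looks like in its simplest form. The sketch below is rule-based rather than AI-driven: it combines boundary values, awkward strings, and seeded random values for a hypothetical signup form. The field names and the assumed valid age range of 18 to 120 are invented for the example.

```python
import random
import string


def generate_signup_cases(seed=0, random_cases=3):
    """Build input dicts for a hypothetical signup form (`username`, `age`)."""
    rng = random.Random(seed)  # seeded so a failing case can be reproduced
    cases = []
    # Boundary values around the assumed valid age range of 18..120.
    for age in (17, 18, 120, 121):
        cases.append({"username": "boundary_user", "age": age})
    # Strings that commonly break validation: empty, very long, non-ASCII, quotes.
    for username in ("", "a" * 256, "Zo\u00eb", "o'brien"):
        cases.append({"username": username, "age": 30})
    # Random but valid-looking records to widen coverage.
    for _ in range(random_cases):
        length = rng.randint(3, 12)
        name = "".join(rng.choice(string.ascii_lowercase) for _ in range(length))
        cases.append({"username": name, "age": rng.randint(18, 120)})
    return cases
```

An AI-driven generator would replace the hard-coded lists with values learned from schemas or production-like data, but the output shape, a list of cases ready to feed into tests, stays the same.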

Integrating Large Language Models (LLMs) for Smarter Test Case Creation

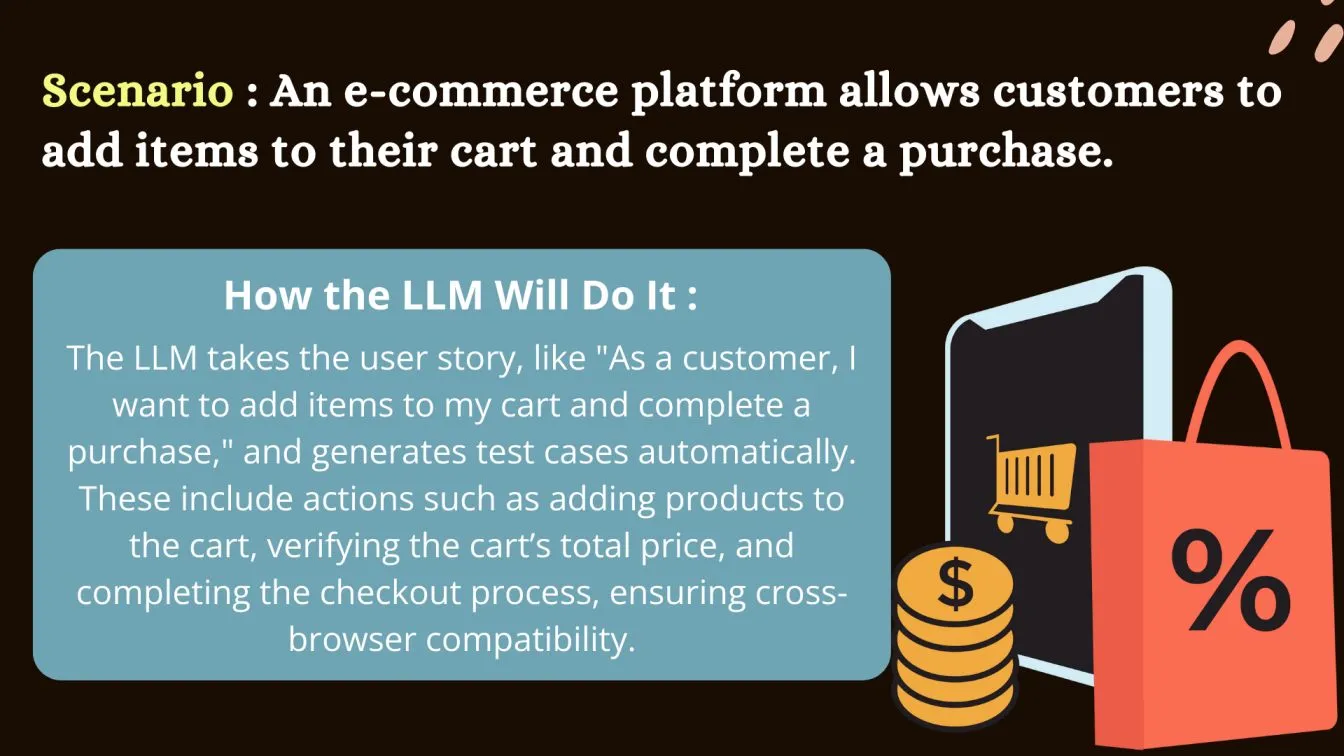

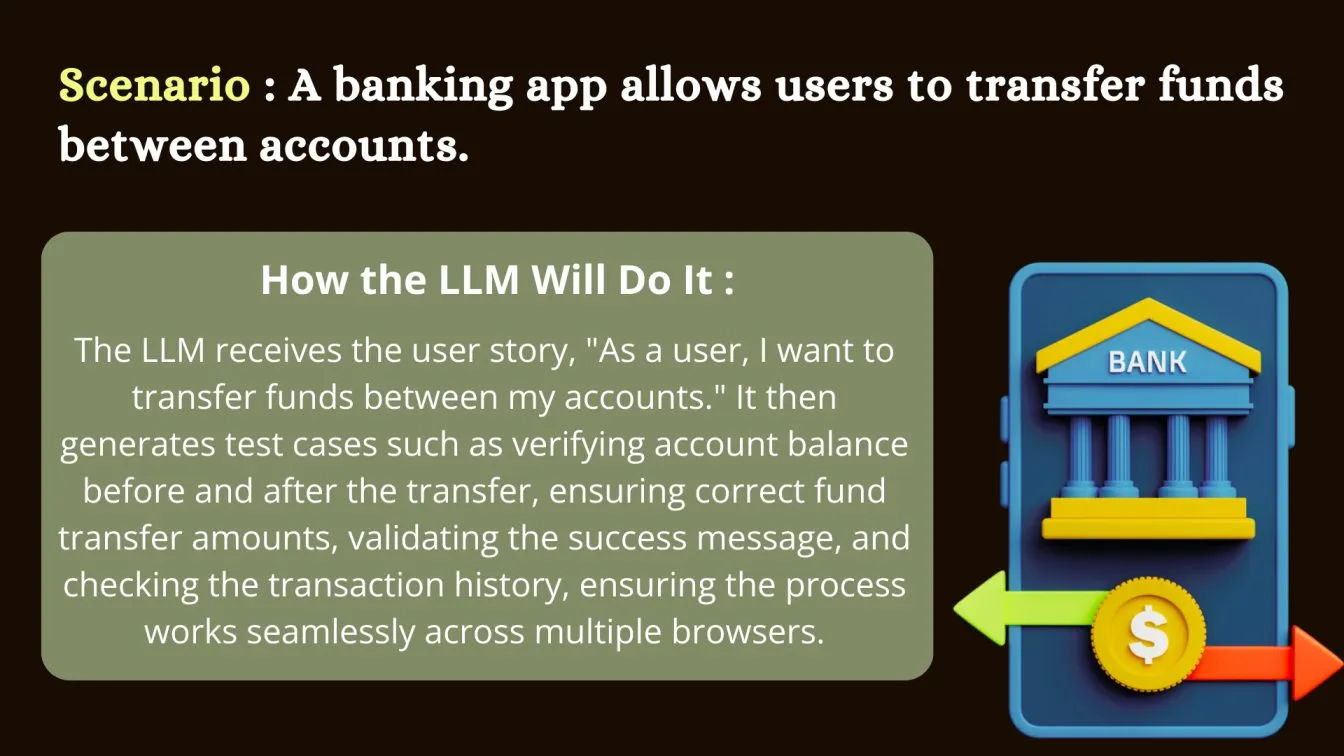

Integrating Large Language Models (LLMs) into the software testing process enhances AI-driven test automation by using natural language processing (NLP) to automatically generate test cases.

These models can analyze documentation and user stories to create relevant test scenarios, covering a wide range of use cases, including edge cases. With generative AI, LLMs proactively generate tests, reducing the need for human oversight and speeding up the process while ensuring accuracy.

Moreover, LLMs improve product quality by providing data-driven insights and detailed analytics. They help identify areas that require more testing and support self-healing tests, which automatically adjust to changes in the code. This proactive approach increases testing efficiency and ensures the application remains aligned with the latest updates, ultimately boosting product quality.
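Here is one way the user-story-to-test-case step might be wired up. This is a sketch under stated assumptions: `complete` is a placeholder for whatever function sends a prompt to your model and returns its text reply, `fake_llm` is a stand-in so the example runs offline, and the prompt wording and JSON keys are invented for illustration rather than taken from any particular provider.

```python
import json

PROMPT_TEMPLATE = (
    "You are a QA engineer. Read the user story and return a JSON array of test cases.\n"
    'Each test case must be an object with the keys "title", "steps", and "expected".\n\n'
    "User story:\n{story}\n"
)

REQUIRED_KEYS = {"title", "steps", "expected"}


def generate_test_cases(story, complete):
    """Ask a model for test cases and keep only the well-formed ones.

    `complete` is any callable that takes a prompt string and returns the
    model's reply as text (hypothetical; no real provider is wired in).
    """
    reply = complete(PROMPT_TEMPLATE.format(story=story))
    cases = json.loads(reply)  # raises ValueError if the reply is not valid JSON
    return [c for c in cases if isinstance(c, dict) and REQUIRED_KEYS.issubset(c)]


def fake_llm(prompt):
    """Offline stand-in for a real model call."""
    return json.dumps([
        {
            "title": "Valid login",
            "steps": ["Open the login page", "Submit valid credentials"],
            "expected": "Dashboard is shown",
        },
        {"title": "Incomplete case", "steps": []},  # dropped: no "expected" key
    ])
```

Validating the reply before using it matters because model output is not guaranteed to follow the requested format, and generated cases are still worth a quick human review before they join the suite.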

AI in Predictive Test Analysis and Bug Detection

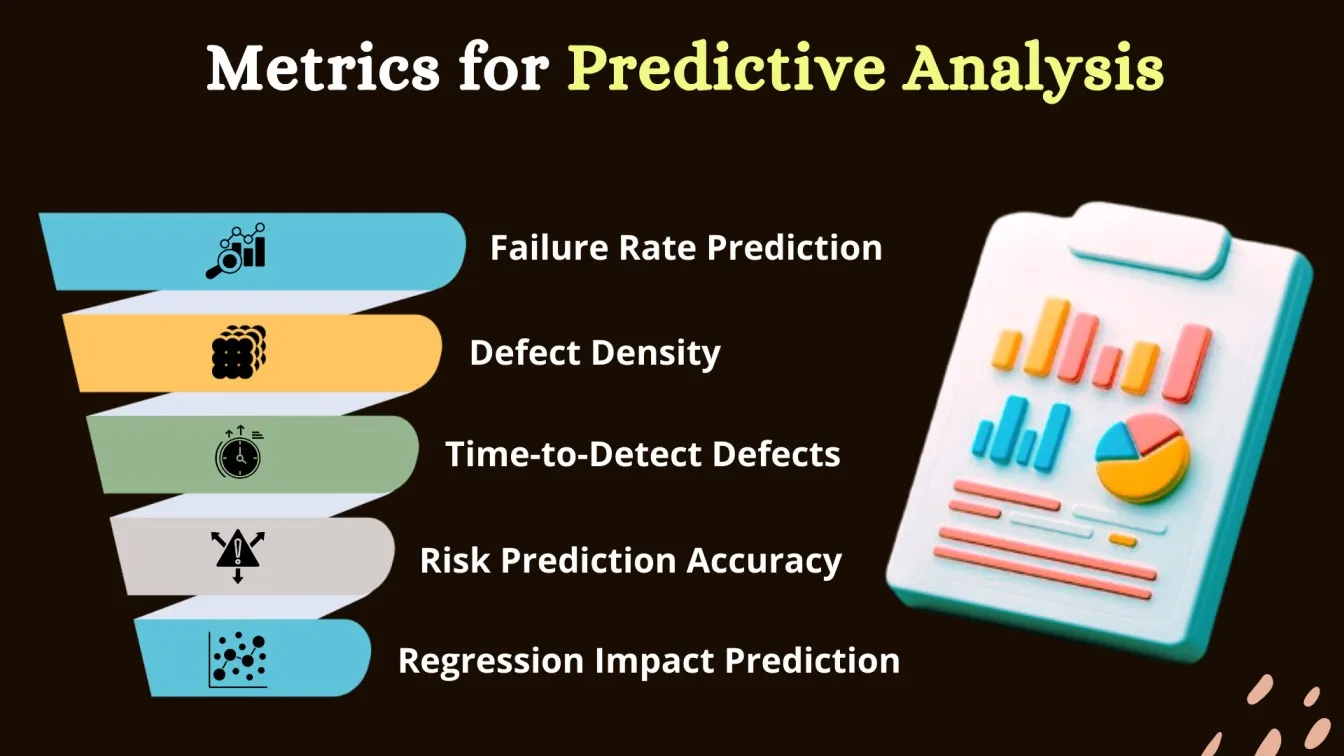

Predictive Analysis in AI

AI's predictive capability plays a crucial role in enhancing the efficiency of test automation. By analyzing vast amounts of historical testing data, AI can identify patterns and trends that help predict potential issues before they arise. This allows teams to focus on areas with a high likelihood of failure, optimize resource allocation, and ensure that the most critical areas are tested first.

AI-driven test automation leverages these insights to prioritize test cases based on predicted risk factors, improving test coverage and efficiency. By understanding user behavior and historical performance, AI provides valuable insights that help improve code quality and contribute to user satisfaction. This predictive analysis empowers teams to catch critical issues early in the development cycle, leading to faster releases and better product quality.
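To show what "predicting risk from historical data" can mean in practice, the sketch below trains a tiny logistic regression in plain Python. The two features (code churn and past failure rate, both scaled to 0..1) and the training rows are made up for the example; real tools would use far more data and usually an established ML library.

```python
import math


def predict(weights, bias, features):
    """Return the predicted probability of failure for one feature vector."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, epochs=2000, lr=0.5):
    """Fit weights and bias with plain batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in zip(rows, labels):
            error = predict(weights, bias, features) - label
            for j in range(n_features):
                grad_w[j] += error * features[j]
            grad_b += error
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


# Hypothetical history: (churn, past failure rate) -> 1 if the area later failed.
history = [(0.9, 0.8), (0.8, 0.6), (0.7, 0.9), (0.1, 0.0), (0.2, 0.1), (0.3, 0.2)]
outcomes = [1, 1, 1, 0, 0, 0]
model_weights, model_bias = train_logistic(history, outcomes)
```

Calling `predict(model_weights, model_bias, (0.85, 0.7))` then yields a risk score that can be used to decide which areas get tested first.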

Bug Detection with AI

AI plays a pivotal role in bug detection, helping testers identify critical issues that might otherwise be overlooked. With AI-generated test cases, the system can simulate a variety of real-world scenarios based on high-quality data and historical patterns.

AI tools can detect subtle bugs that may be hard to spot through manual testing or traditional automated methods. AI’s ability to analyze vast amounts of test data helps pinpoint not only obvious bugs but also non-obvious issues, ensuring comprehensive coverage and reducing the risk of post-release bugs.

This proactive approach to bug detection minimizes downtime and enhances overall product quality, delivering long-term benefits for both technical and non-technical users.

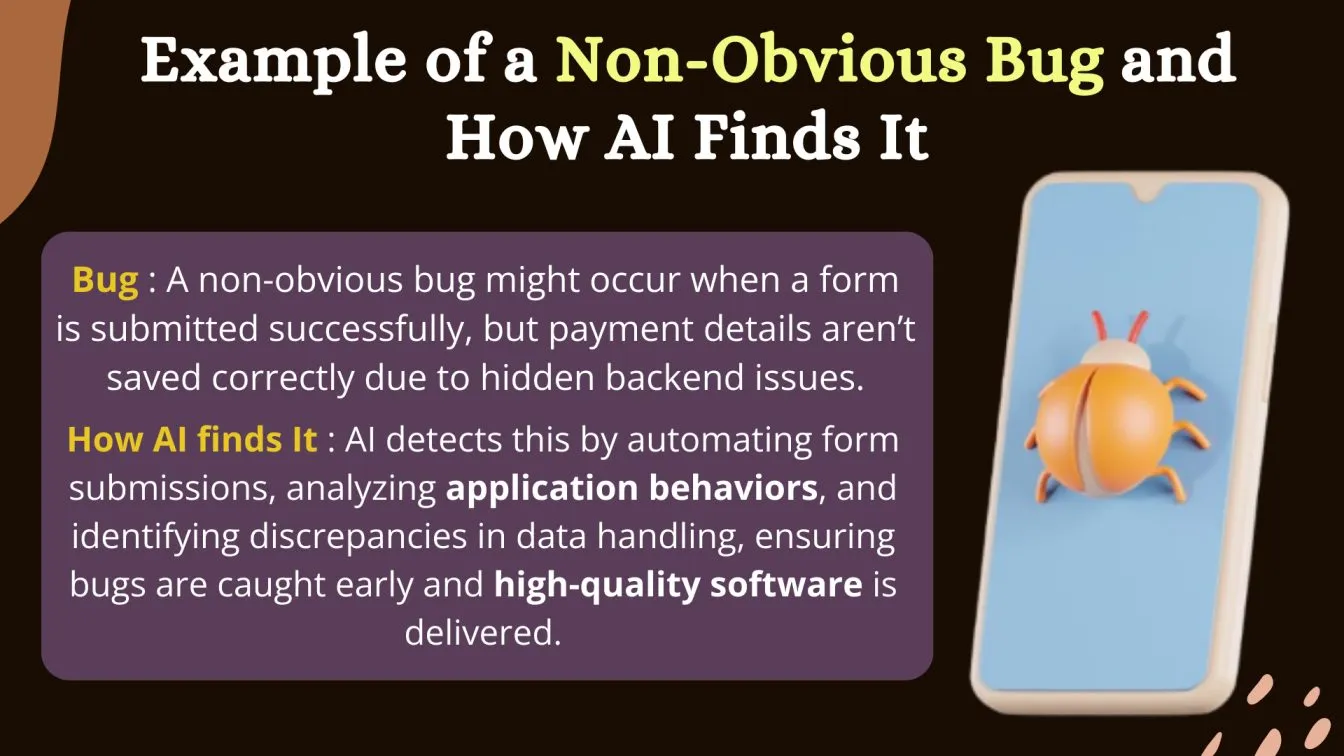

AI for Detecting Non-Obvious Bugs in Complex Applications

AI is revolutionizing the way application testing is conducted, especially in complex testing scenarios. By automating repetitive tasks, AI allows testers to focus on more strategic tasks that require human expertise. It analyzes application behaviors in detail, identifying non-obvious bugs that might go unnoticed with traditional testing methods.

With the ability to simulate real user interactions, AI detects subtle issues that arise from intricate systems, ensuring that high-quality software is delivered consistently. This reduces the time spent on manual testing efforts and improves overall testing efficiency.

In modern applications, where the complexity of the system is continuously increasing, AI is essential for uncovering bugs that are not immediately apparent. It can flag complex interactions that are likely to cause problems, helping teams test every aspect of the application thoroughly.

By leveraging AI to detect non-obvious bugs, teams can improve the robustness of the software and guarantee its reliability under various real-world conditions, ultimately leading to the release of high-quality software with fewer defects and faster deployment cycles.
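One kind of non-obvious bug is a test that still passes while the application quietly gets slower. A statistical check like the one sketched below can surface it. The sketch uses the modified z-score based on the median absolute deviation, a standard outlier test rather than a learned model; the function name and the sample data are assumptions for the example.

```python
import statistics


def find_latency_anomalies(samples_ms, threshold=3.5):
    """Return indexes of samples whose modified z-score exceeds `threshold`."""
    median = statistics.median(samples_ms)
    mad = statistics.median(abs(x - median) for x in samples_ms)
    if mad == 0:
        return []  # all samples are (nearly) identical, nothing to flag
    return [
        i
        for i, x in enumerate(samples_ms)
        if 0.6745 * abs(x - median) / mad > threshold
    ]
```

Because it relies on medians, a single extreme value does not distort the baseline the way it would with a mean and standard deviation.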

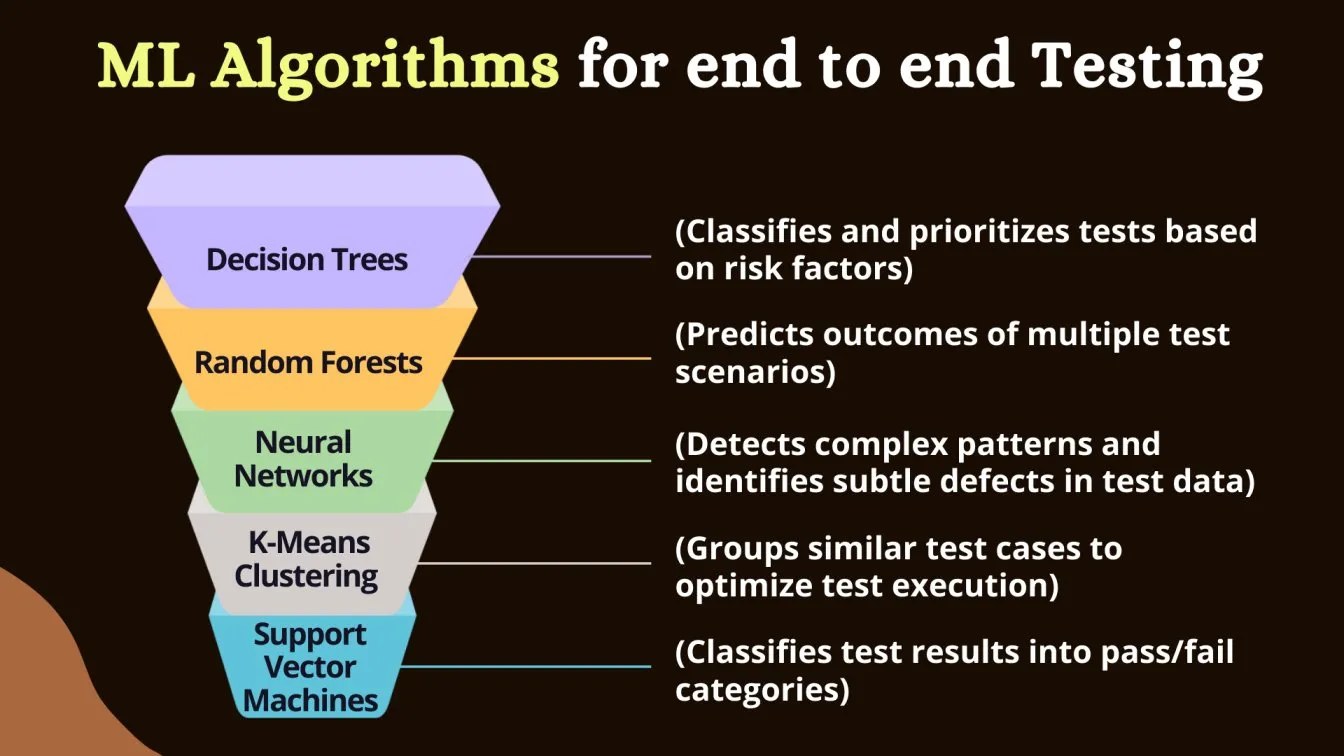

Machine Learning for Test Optimization

Machine learning (ML) is transforming test optimization by using intelligent algorithms to predict, analyze, and optimize test cases in real time. AI-powered testing tools apply predictive analytics to large volumes of test data to identify patterns or weaknesses that may require additional focus.

These AI-driven insights help testers optimize their test suites, prioritize high-risk areas, and reduce unnecessary testing efforts. By utilizing advanced analytics and deep learning, ML models can continuously adapt to changes in the application, ensuring that the most relevant tests are always executed to improve coverage and efficiency.

In real-world applications, AI-driven testing tools automate repetitive tasks, allowing human testers to focus on more complex scenarios. Machine learning for test optimization also helps teams write leaner test scripts, reduce false positives, and confirm that all critical paths are tested.

The key benefits of using ML for test optimization include better resource allocation, faster testing cycles, and the ability to handle complex, large-scale applications with ease.
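A useful baseline for cutting unnecessary test runs is change-based selection: run only the tests that touch the files in a commit. The sketch below assumes a `coverage_map` from test names to the source files they exercise, which is a hypothetical structure for this example; an ML ranker could then reorder or trim the selected set further.

```python
def select_tests(changed_files, coverage_map):
    """Return the tests that exercise at least one changed file, sorted by name."""
    changed = set(changed_files)
    return sorted(
        test
        for test, files in coverage_map.items()
        if changed.intersection(files)
    )
```

For a commit that only edits `auth.py`, this skips every test that never reaches that file.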

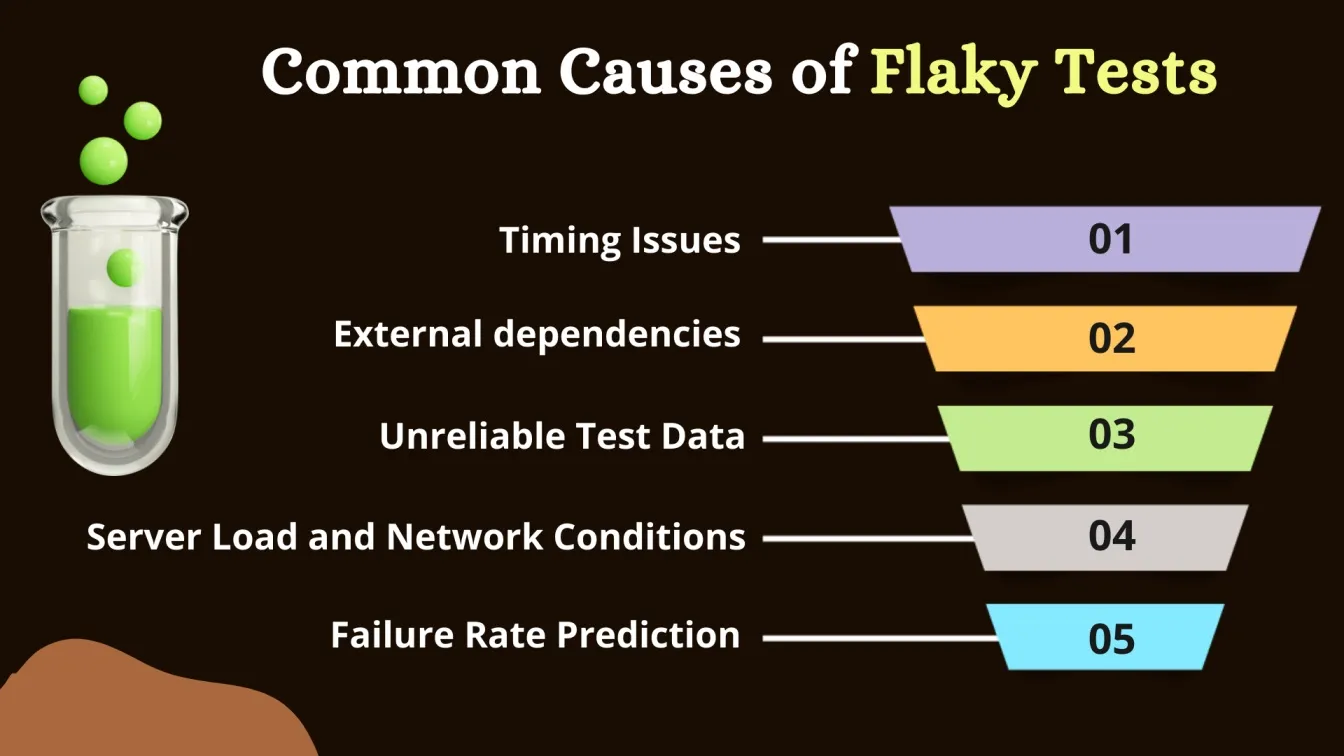

AI-Powered Test Execution: Reducing Flaky Tests and Improving Stability

AI-powered test execution offers numerous benefits by addressing performance issues that often lead to flaky tests. Traditional testing methods can struggle with inconsistent results, but AI-driven approaches ensure that tests are executed reliably across different environments and conditions.

AI-based test automation tools leverage advanced algorithms to identify and handle scenarios that typically cause instability, such as timing issues, slow loading, or asynchronous behavior, leading to more accurate and stable results.

By using an AI-based tool, teams can enhance the overall stability of their testing process. Key features of AI-driven test execution include predictive analytics, real-time adjustments, and the ability to recognize and mitigate common causes of instability in test scripts.

These key aspects help reduce flaky tests, allowing for faster feedback and a more consistent and reliable testing process, ultimately leading to higher-quality software.
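A first step toward reducing flaky tests is finding them. One common signal is a test that both passes and fails on the same commit, since the code did not change between runs. The sketch below assumes run records shaped as `(test_name, commit_sha, passed)` tuples, a format chosen for the example rather than taken from any specific tool.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return tests that both passed and failed on the same commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return sorted({test for (test, _), seen in outcomes.items() if len(seen) == 2})
```

A test that fails on one commit and passes on another is not flagged, because that pattern is just as likely to be a real regression or fix.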

AI-Based Data-Driven Testing for Better Decision Making

AI-based data-driven testing leverages large datasets and AI-driven approaches to generate insights that improve the testing process. By analyzing historical data and real-time test results, AI tools can identify patterns, predict potential risks, and optimize test coverage.

This helps testing teams make more informed decisions, prioritize high-risk areas, and allocate resources effectively, leading to more efficient and accurate testing.

With the ability to adapt and learn from past testing data, AI-based test automation tools can continuously refine the testing process. This results in better decision-making, as teams can focus on critical test scenarios, reducing time spent on unnecessary tests and improving overall test accuracy. By using data-driven insights, AI ensures that testing efforts are aligned with the application's behavior and risk factors, ultimately enhancing software quality.

Scaling Test Automation with AI-Powered Cloud Services

AI-powered cloud-based testing is revolutionizing the way organizations scale their test automation efforts. By leveraging the vast computing power of the cloud, teams can run thousands of tests concurrently across various environments and configurations. AI tools integrated into the cloud automate and optimize the testing process, ensuring that applications are thoroughly tested without the need for extensive infrastructure investments.

The use of cloud-based testing also offers flexibility and cost-effectiveness. AI-driven testing tools in the cloud can dynamically adjust resources based on testing needs, making it easier to scale up or down as required. This not only improves the speed of testing but also enhances the overall quality of software by providing consistent and comprehensive test coverage.
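Running tests concurrently only pays off if the work is split evenly across machines. The sketch below shows one simple way to do that: a greedy "longest test first" assignment based on recorded durations. The function name and the input format are assumptions for the example, and cloud services may balance load quite differently.

```python
import heapq


def shard_tests(durations, workers):
    """Assign tests to `workers` shards so total runtimes stay balanced.

    `durations` maps test name to its last recorded runtime in seconds.
    The longest tests are placed first, each on the currently lightest shard.
    """
    heap = [(0.0, index) for index in range(workers)]  # (total seconds, shard index)
    shards = [[] for _ in range(workers)]
    by_longest = sorted(durations.items(), key=lambda item: item[1], reverse=True)
    for name, seconds in by_longest:
        total, index = heapq.heappop(heap)
        shards[index].append(name)
        heapq.heappush(heap, (total + seconds, index))
    return shards
```

With balanced shards, the slowest worker finishes close to the others, so adding machines shortens the feedback loop instead of leaving most of them idle.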

Getting Closer!!!

In this blog, we’ve explored how AI-powered test automation can transform end-to-end testing, making it faster, more efficient, and more accurate. We have seen how technologies like machine learning, generative AI, and predictive analytics help streamline the testing process.

By automating tasks such as test case generation and bug detection, AI reduces manual effort while ensuring comprehensive test coverage, leading to high-quality software and faster release cycles.

We’ve also covered how AI-driven tools improve quality assurance by simulating real-world user scenarios and handling complex testing workflows. As AI evolves, its role in scaling test automation with the help of cloud-based services will only grow stronger. QA testing companies are leveraging AI to optimize testing workflows, ensuring better efficiency.

Additionally, organizations now compare Loggly pricing models to choose the best log management solutions for improved debugging and performance monitoring in test environments.

Ultimately, we’ve learned that AI-powered testing ensures we deliver better, more reliable software, enabling teams to release products that meet user expectations with greater speed and accuracy.

People Also Ask

👉What could be an application of artificial intelligence machine learning in testing?

AI and machine learning can be used to automate test case generation, predict potential defects, and optimize test execution by identifying patterns and high-risk areas in the application.

👉Which test can be supplemented by end-to-end testing?

Unit tests and integration tests can be supplemented with end-to-end testing to verify that all components of the application function as expected when integrated together.

👉What is end-to-end integrated theory?

End-to-end integrated theory refers to the approach of testing an entire system from start to finish, ensuring that all components and their interactions work together seamlessly in real-world scenarios.

👉What is the difference between QA and E2E?

QA (Quality Assurance) is a broad process aimed at ensuring software quality throughout the development cycle, while E2E (End-to-End) testing specifically focuses on verifying the full flow of an application from start to finish.

👉What is the difference between deep learning and end-to-end deep learning?

Deep learning involves training models to perform specific tasks such as classification or regression, whereas end-to-end deep learning refers to training models to perform a complete task, from input to output, without requiring separate steps or manual intervention.
