Hey tech enthusiasts and innovators! Welcome to an exciting journey into Reinforcement Learning and Generative AI for Software Testing. Whether you are an experienced tester or simply curious about where artificial intelligence meets quality assurance, this blog is your entry point to learning how these cutting-edge technologies are changing software testing.

We'll cover the fundamental ideas, real-world applications, and potential future developments to make sure you leave with a thorough understanding of this ever-evolving field. Prepare to discover how generative AI and reinforcement learning can transform your testing approaches!

Why you shouldn't miss this blog!

📌 Introduction to Generative AI: Understanding its essence and application in software testing.

📌 Advantages of Generative AI: Unveiling the benefits that revolutionize software testing.

📌 Enhancing Automation: How Reinforcement Learning elevates testing automation to new heights.

📌 Practical Insights: Real-world applications of Generative AI that are transforming testing.

📌 Optimization Techniques: Advanced Reinforcement Learning strategies for perfecting test case design.

📌 Implementation Challenges: Navigating the complexities of adopting Generative AI in software testing.

📌 Integration Tactics: Seamlessly incorporating Reinforcement Learning into existing test frameworks.

📌 Best Practices: Tips and strategies for integrating AI effectively in your testing workflows.

📌 The Big Picture: Concluding thoughts on the transformative impact of Generative AI on software testing.

Introduction to Generative AI in Software Testing

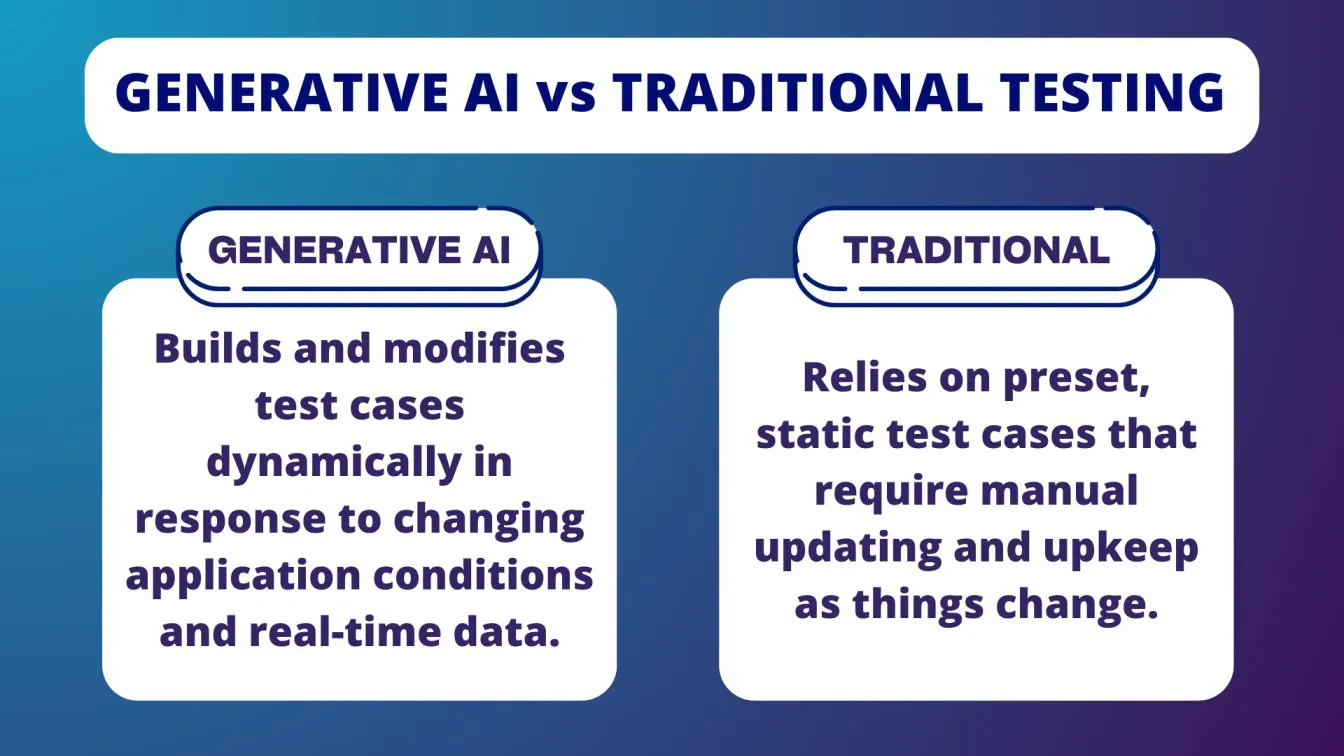

Generative AI is ushering in a new era in software testing, combining creativity and automation to improve test coverage and efficiency. It uses machine learning models, especially those trained to generate data and scenarios, to create sophisticated and varied test cases that simulate a range of user behaviors and possible software interactions. Traditional approaches rely mostly on human input and predefined scripts; generative AI, by contrast, can generate tests dynamically as the application's conditions and requirements change.

This capability makes the testing process more thorough while also speeding it up. By automatically generating tests that probe edge cases and unexpected program behavior, generative AI helps identify problems that may escape traditional testing frameworks.
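A production generative model is far beyond a blog post, but the core idea (learn patterns from existing data, then sample new, similar data) can be sketched with a tiny character-level Markov chain. This is a deliberately simple stand-in for the machine learning models described above, not how commercial tools work; the function names and seed data are hypothetical.

```python
import random
from collections import defaultdict

START, END = "^", "$"  # assumed not to appear inside the sample inputs


def train_char_model(samples, order=2):
    """Record which characters follow each `order`-character context in the samples."""
    model = defaultdict(list)
    for text in samples:
        padded = START * order + text + END
        for i in range(len(padded) - order):
            model[padded[i:i + order]].append(padded[i + order])
    return model


def generate_input(model, order=2, max_len=40, rng=random):
    """Sample a new string one character at a time from the learned transitions."""
    context = START * order
    out = []
    while len(out) < max_len:
        nxt = rng.choice(model[context])
        if nxt == END:
            break
        out.append(nxt)
        context = context[1:] + nxt
    return "".join(out)


if __name__ == "__main__":
    # Hypothetical seed data: valid email inputs taken from an existing test suite.
    seeds = ["alice@example.com", "bob.smith@test.org", "carol99@mail.net"]
    model = train_char_model(seeds)
    rng = random.Random(7)
    for _ in range(5):
        # Outputs resemble the seeds but recombine them into new, sometimes odd, inputs.
        print(repr(generate_input(model, rng=rng)))
```

Feeding these recombined strings to an input validator is a cheap way to surface edge cases; a real generative model learns much richer structure, but the train-then-sample loop is the same.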

What is Reinforcement Learning and Its Role in Software Testing?

Reinforcement Learning (RL) is a machine learning technique that teaches algorithms to make decisions by experimenting with different actions and observing the results; it's similar to a video game character that improves with each play.

When it comes to software testing, RL is like having an extremely intelligent testing partner that continuously refines and optimizes the testing procedure based on every test run.

Here is how it works: RL algorithms execute test scenarios, receive feedback on their actions (e.g., test pass/fail), and use this feedback to improve their decision-making over time. This makes RL especially useful for automating complex test environments where constant exploration and adaptation to different conditions are essential.

By identifying and concentrating on the most valuable tests (for example, those most likely to fail), RL can speed up the testing workflow and cut the time and resources spent on less productive ones. Because it adjusts its testing strategy in response to real-time outcomes, it is effective in situations that require a deep understanding of the application's behavior. This adaptive strategy increases the chance of finding elusive bugs, shortens the testing cycle, and improves the quality of the software product.
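To make this feedback loop concrete, here is a minimal sketch of one of the simplest RL formulations: an epsilon-greedy multi-armed bandit that learns which tests are most likely to fail so they can be run first. The class name, test names, and simulated failure rates are all hypothetical; a real setup would feed in results from your CI history instead of random draws.

```python
import random


class EpsilonGreedyPrioritizer:
    """Epsilon-greedy bandit: each test is an 'arm', and a failure counts as reward 1."""

    def __init__(self, test_names, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.runs = {name: 0 for name in test_names}
        self.value = {name: 0.0 for name in test_names}  # estimated failure rate

    def choose(self):
        # Explore a random test with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.value))
        return max(self.value, key=self.value.get)

    def update(self, name, failed):
        # Incremental mean: value += (reward - value) / n
        reward = 1.0 if failed else 0.0
        self.runs[name] += 1
        self.value[name] += (reward - self.value[name]) / self.runs[name]

    def ranking(self):
        """Tests ordered from most to least likely to fail, per current estimates."""
        return sorted(self.value, key=self.value.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical failure probabilities standing in for real test executions.
    true_failure_rate = {"test_login": 0.05, "test_checkout": 0.40, "test_search": 0.10}
    env_rng = random.Random(42)
    agent = EpsilonGreedyPrioritizer(true_failure_rate, epsilon=0.2, seed=1)
    for _ in range(2000):
        test = agent.choose()
        failed = env_rng.random() < true_failure_rate[test]  # simulated pass/fail feedback
        agent.update(test, failed)
    print(agent.ranking())
```

The `epsilon` parameter is the explore/exploit trade-off: without occasional exploration, the agent could lock onto whichever test failed first and never discover that another one fails more often.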

Benefits of Using Generative AI in Software Testing

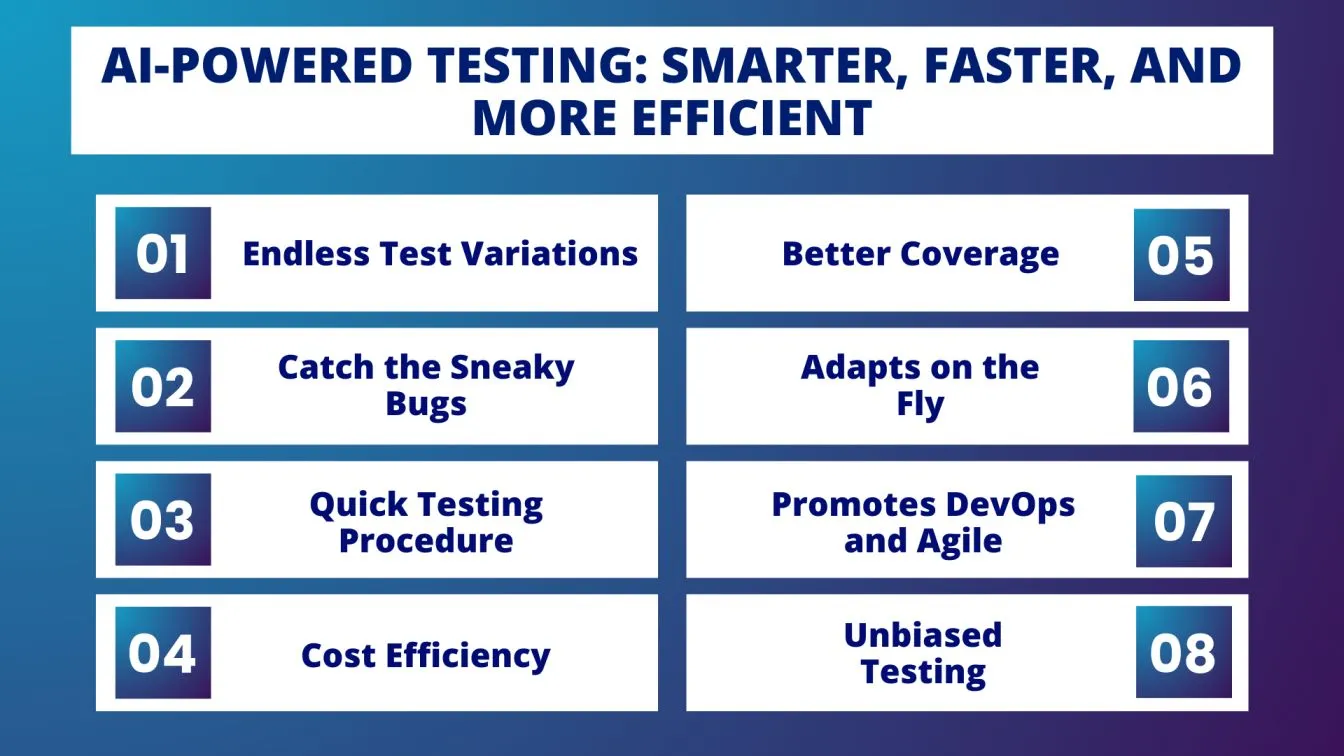

Endless Test Variations 🌀: Picture a tool that continuously injects fresh test cases into your program, like a never-ending brainstorming session. Thanks to the wide range of test data and scenarios that generative AI can produce, your software is pushed to its limits and better prepared for whatever users throw at it.

Catch the Sneaky Bugs 🕵️‍♂️: Like ninjas, some bugs are extremely clever and hard to spot. Generative AI is particularly good at exposing them by creating unusual or edge-case scenarios that conventional testing could overlook. Fewer surprises after deployment is something to celebrate!

Quick Testing Procedure ⚡: Speed is the key to success. Generative AI can build and execute tests far more quickly than human testers, accelerating the entire development cycle.

Cost Efficiency 💸: Although the initial setup may require considerable investment, generative AI lowers costs over time by automating a significant portion of the testing process. With less need for manual testing, you can devote more of your people to complex or innovative work.

Better Coverage 🔍: This AI is thorough as well as quick. It exercises far more of your program's paths and details than manual testing alone, which raises overall software quality and user satisfaction.

Adapts on the Fly 🔄: The tests should change as your software does. As the application changes, generative AI adjusts and keeps producing tests that accurately reflect the software as it is right now. It is therefore a versatile ally in your continuous development process.

Promotes DevOps and Agile 🚀: It works well in the fast-paced environments of DevOps and Agile, where continuous integration and continuous delivery are essential. Generative AI keeps development and testing moving quickly without sacrificing quality.

Unbiased Testing 🤖: Even with the best of intentions, human testers can have blind spots or biases. Generative AI offers a fresh perspective that is not bound by a tester's preconceptions, although it can still inherit biases from its training data.

How Reinforcement Learning Enhances Testing Automation

By incorporating RL, testing automation becomes smarter, adapting its strategies based on performance outcomes to maximize efficiency and effectiveness.

Defining Generative AI 🔍: Generative AI refers to AI systems that can generate dynamic, complex test scenarios and data, which are essential for comprehensive testing.

AI-Driven Test Optimization 🚀: Reinforcement learning, a type of machine learning, improves AI software testing by letting systems learn from their environment and get better over time, which streamlines the testing procedure.

Applying AWS Generative AI ☁️: Use AWS's capabilities to deploy generative AI models that enhance software testing, and look to real-world examples of generative AI in action within the AWS ecosystem.

Learning Opportunities 🎓: To gain in-depth knowledge and practical experience in AI-based software testing, enroll in generative AI courses offered by websites such as Udemy.

AI in Action 💡: Discover how generative AI creates intricate, varied test cases that challenge software robustness in real-world testing scenarios.

Reinforcement Learning with TensorFlow 🦾: Build reinforcement learning models for software testing with TensorFlow, so that test strategies improve automatically as the models learn from results.

Reward Function in RL 🏆: Design customized reward functions for your reinforcement learning models so that the AI's behavior is steered toward the most valuable testing outcomes, such as failures found or coverage gained.

Advanced Reinforcement Learning Techniques 📈: Explore Q-learning, an RL algorithm that learns the value of each action in each state, which helps make AI software testing efficient in complex, variable testing environments.

Deep Learning Integration 🧠: Use deep reinforcement learning techniques to process and analyze enormous volumes of test data, producing more accurate and effective testing automation.

Key Differences Between Generative AI and Traditional Testing Methods

Traditional testing relies heavily on manual effort and predefined scripts. Compared with these manual, script-based techniques, generative AI differs in three key ways: it automates test generation, increases test coverage, and improves efficiency.
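The difference is easiest to see side by side. Below, a traditional scripted test checks a few hand-picked cases, while the generated version draws hundreds of random inputs and checks properties that must always hold. Plain random generation is used here as a simple stand-in for an AI-driven generator (property-based testing libraries such as Hypothesis do this far more cleverly), and the `slugify` function under test is hypothetical.

```python
import random
import string


def slugify(title):
    """Hypothetical function under test: lowercase alphanumeric words joined by hyphens."""
    words = "".join(c if c.isalnum() else " " for c in title).split()
    return "-".join(w.lower() for w in words)


def test_slugify_scripted():
    # Traditional approach: a tester hand-writes a few fixed cases.
    assert slugify("Hello World") == "hello-world"
    assert slugify("  Spaces   everywhere ") == "spaces-everywhere"


def test_slugify_generated(n_cases=500, seed=0):
    # Generated approach: many random inputs, each checked against invariants.
    rng = random.Random(seed)
    alphabet = string.ascii_letters + string.digits + " -_!?."
    for _ in range(n_cases):
        title = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 30)))
        slug = slugify(title)
        assert slug == slug.lower(), title
        assert " " not in slug, title
        assert not slug.startswith("-") and not slug.endswith("-"), title
        assert "--" not in slug, title


if __name__ == "__main__":
    test_slugify_scripted()
    test_slugify_generated()
    print("both tests passed")
```

The scripted test only knows about the inputs someone thought of; the generated test explores empty strings, punctuation runs, and odd spacing automatically, which is where many edge-case bugs hide.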

Real-World Applications of Generative AI in Testing

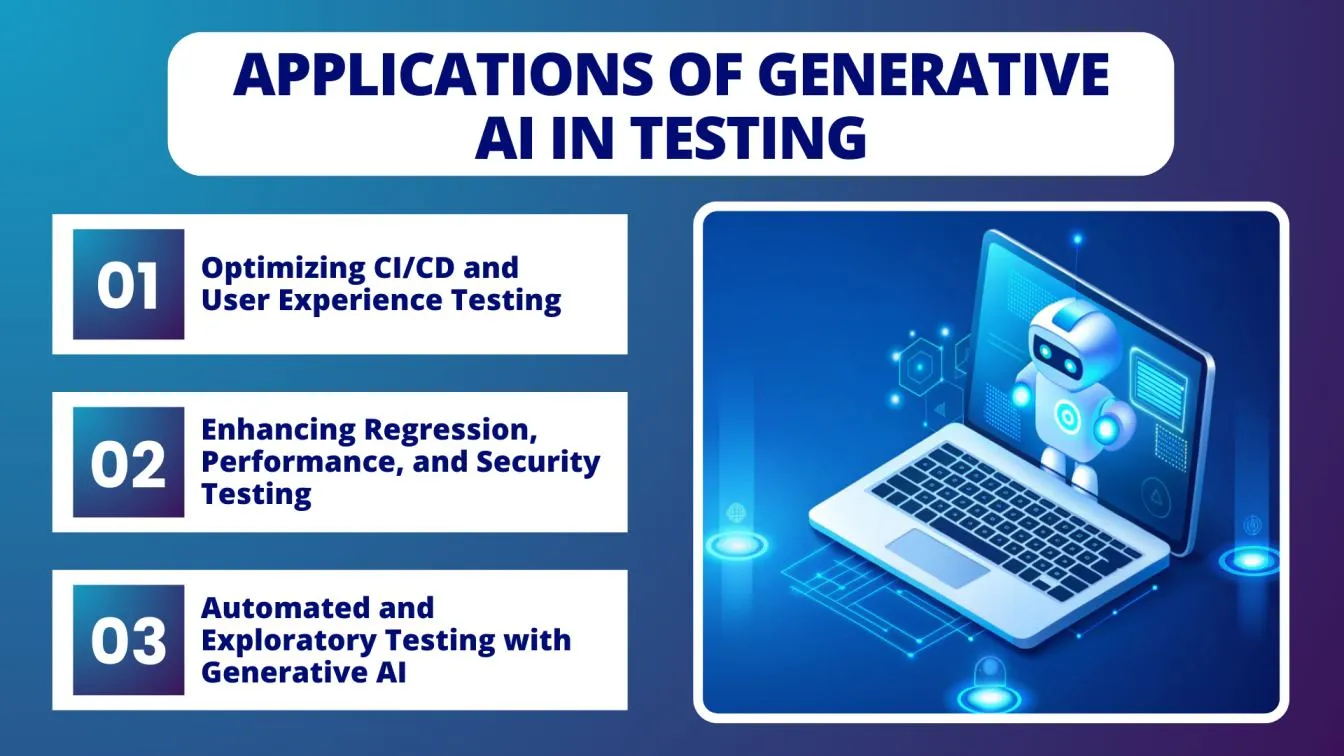

Generative AI is revolutionizing software testing with practical, cutting-edge applications that improve the effectiveness and quality of testing procedures. Here are a few important ones:

- Automated Test Case Generation 🤖📊: Using user data and software specifications, generative AI may automatically generate a variety of test cases that cover a wider range of scenarios than conventional techniques.
  - Example (Game Development): AI generates complex game scenarios to test under varied conditions.
  - Example (Automotive Software): AI automates test case creation for different driving conditions and sensor inputs.

- Exploratory Testing 🕵️‍♂️🔍: By simulating user behavior, artificial intelligence (AI) may investigate how the software reacts to different inputs and activities, perhaps revealing problems that human testers would overlook.
  - Example (Web Applications): AI simulates user interactions to identify issues like broken links and functionality errors.
  - Example (Consumer Electronics): AI tests smartphones and tablets by mimicking consumer interactions.

- Regression Testing 🔄✅: Generative AI reduces the time and effort needed for manual checks by rapidly running thousands of regression tests to make sure that new changes haven't broken current features.
  - Example (E-commerce Platforms): AI ensures that updates do not disrupt existing functionalities like the checkout process.
  - Example (Healthcare Software): AI tests updates to healthcare apps, safeguarding critical functionalities.

- Performance Testing 🚀📈: Generative AI assists in stress-testing programs to evaluate their performance under various conditions, guaranteeing robustness, by producing realistic load scenarios.
  - Example (Cloud Storage Services): AI tests scalability and performance under peak conditions.
  - Example (Financial Trading Platforms): AI simulates high-volume trading to ensure platform reliability.

- Security Testing 🔒🛡️: By simulating possible cyberattacks, AI algorithms can create test cases that identify weaknesses and improve the security posture of the application.
  - Example (Banking Applications): AI simulates cyberattacks to identify and fix vulnerabilities.
  - Example (IoT Devices): AI tests for potential security weaknesses in smart home devices.

- Continuous Integration/Continuous Deployment (CI/CD) 🔄🔧: Generative AI allows automated testing across the entire development process in CI/CD pipelines, guaranteeing dependable and timely software delivery.
  - Example (Software Development Firms): AI integrates into CI/CD pipelines for real-time code testing.
  - Example (Mobile App Development): AI automates app testing across devices and operating systems.
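The web-application example above can be sketched as exploratory testing over a model of the site: an agent repeatedly "clicks" random links and records any that lead to a page that does not exist. Here the site is a hypothetical in-memory dictionary and the exploration is purely random; a generative or RL-based tester would choose its actions more intelligently, but the loop has the same shape.

```python
import random

# Hypothetical site map: page -> links found on that page.
SITE = {
    "/": ["/products", "/about", "/cart"],
    "/products": ["/products/1", "/products/2", "/"],
    "/products/1": ["/cart", "/products"],
    "/products/2": ["/cart", "/reviews/2"],  # /reviews/2 was never created
    "/about": ["/", "/team"],                # /team is missing too
    "/cart": ["/checkout", "/"],
    "/checkout": ["/"],
}


def explore(site, start="/", episodes=200, max_steps=10, seed=0):
    """Random-walk the site like a curious user and collect (page, broken_link) pairs."""
    rng = random.Random(seed)
    broken = set()
    for _ in range(episodes):
        page = start
        for _ in range(max_steps):
            links = site.get(page, [])
            if not links:
                break
            target = rng.choice(links)
            if target not in site:
                broken.add((page, target))  # a real user would hit a 404 here
                break
            page = target
    return broken


if __name__ == "__main__":
    for source, target in sorted(explore(SITE)):
        print(f"broken link on {source}: {target}")
```

In a real setup, the dictionary lookup would be replaced by actually loading each page (for example, through a browser automation tool) and checking the HTTP status.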

Reinforcement Learning Techniques for Test Case Optimization

For software testing, Reinforcement Learning (RL) provides effective methods such as Q-Learning, SARSA, and Deep Q-Networks (DQN) for test case optimization.

Q-Learning: This technique maintains a Q-table that estimates the value of taking each action in each state. In software testing, Q-Learning learns to maximize rewards (for example, bugs found) over time, helping to identify the most efficient test paths.

SARSA (State-Action-Reward-State-Action): Unlike Q-Learning, which updates its estimates using the best possible next action, SARSA uses the next action the agent actually takes. This on-policy approach makes it more cautious and can make it more useful in dynamic environments, where it adjusts test strategies in response to real-time results.

DQN (Deep Q-Networks): DQN combines Q-Learning with deep learning, replacing the Q-table with a neural network so it can handle complex, high-dimensional data. This makes it suitable for testing complex software systems with a multitude of possible states and interactions.
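As an illustration of the first two techniques, the sketch below models a tiny, hypothetical application as screens and user actions, with a reward of 1 for reaching a screen where a bug has been planted and a small cost for every step. Tabular Q-Learning and SARSA share the same loop and differ only in the update target: Q-Learning bootstraps from the best next action, SARSA from the action it actually picks next. DQN would swap the table for a neural network and is omitted here; the app model, reward values, and hyperparameters are all illustrative assumptions.

```python
import random
from collections import defaultdict

# Hypothetical app model: screen -> {user action: next screen}.
# Reaching "crash" means a test exposed the (planted) bug.
APP = {
    "home":     {"open_settings": "settings", "open_search": "search"},
    "settings": {"toggle_dark": "settings", "back": "home", "import_file": "import"},
    "search":   {"submit_empty": "search", "back": "home"},
    "import":   {"upload_huge": "crash", "back": "settings"},
}
BUG_STATE = "crash"


def step(state, action):
    """Run one test action; reward finding the bug, charge a small cost per step."""
    nxt = APP[state][action]
    reward = 1.0 if nxt == BUG_STATE else -0.01
    return nxt, reward, nxt == BUG_STATE


def pick(q, state, epsilon, rng):
    """Epsilon-greedy choice over the actions available on this screen."""
    actions = list(APP[state])
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


def train(method="q", episodes=2000, alpha=0.1, gamma=0.9, epsilon=0.2,
          max_steps=20, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # Q-table: (state, action) -> estimated value
    for _ in range(episodes):
        state = "home"
        action = pick(q, state, epsilon, rng)
        for _ in range(max_steps):
            nxt, reward, done = step(state, action)
            next_action = None if done else pick(q, nxt, epsilon, rng)
            if done:
                target = reward  # terminal state has no future value
            elif method == "q":
                # Q-Learning (off-policy): bootstrap from the best next action.
                target = reward + gamma * max(q[(nxt, a)] for a in APP[nxt])
            else:
                # SARSA (on-policy): bootstrap from the action actually chosen next.
                target = reward + gamma * q[(nxt, next_action)]
            q[(state, action)] += alpha * (target - q[(state, action)])
            if done:
                break
            state, action = nxt, next_action
    return q


def greedy_path(q, start="home", max_steps=10):
    """Follow the learned values greedily to get a short bug-reproducing test path."""
    path, state = [], start
    while state != BUG_STATE and len(path) < max_steps:
        action = max(APP[state], key=lambda a: q[(state, a)])
        path.append(action)
        state = APP[state][action]
    return path


if __name__ == "__main__":
    print("Q-Learning:", greedy_path(train("q")))
    print("SARSA:     ", greedy_path(train("sarsa")))
```

The per-step cost is what pushes the agent toward the shortest bug-reproducing sequence rather than any sequence that eventually crashes; in real testing, that path doubles as a minimal reproduction for the bug report.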

Challenges in Implementing Generative AI for Software Testing

There are many fascinating opportunities when using generative AI for software testing, but there are drawbacks as well. The following are the main challenges encountered when incorporating generative AI into testing procedures:

Data Dependency 📊: Generative AI models need enormous volumes of high-quality data to learn from and produce appropriate test scenarios. Biased or insufficient data can make testing ineffective, causing it to miss important flaws or flag problems that aren't there.

Complexity of Setup and Maintenance ⚙️: Generative AI systems can require a lot of resources to set up and maintain. Building them involves sophisticated machine learning models and calls for expertise in both software testing and artificial intelligence. Keeping these models up to date can be difficult, particularly as user behavior or program features change.

Integration with Current Frameworks 🔄: It can be challenging to integrate AI-based testing with current CI/CD pipelines or automation frameworks. There might be incompatibilities, in which case the existing workflows and tools would need to be significantly changed.

Interpretability and Trust 🔍: One of the main concerns is the "black box" nature of generative AI's decision-making. Trust and validation depend on understanding why the AI makes specific testing decisions, but that insight is frequently unavailable.

Cost and Resource Constraints 💰: Generative AI implementation requires a significant time and financial commitment. Budget restrictions or a lack of infrastructure can prevent organizations from properly utilizing AI-driven testing solutions.

Integrating Reinforcement Learning with Existing Test Frameworks

Combining Reinforcement Learning (RL) with existing testing frameworks is an innovative way to improve software testing, but it requires meticulous planning and execution.

Reinforcement Learning, a branch of machine learning, trains algorithms through experimentation; applied to testing, it refines testing approaches based on the results of each run.

To blend RL into established testing frameworks, companies need to modify their existing development processes to include RL components. These components interact with the software under test, learning from the feedback (rewards) they receive based on their performance.
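One common way to let RL components interact with the software under test is to wrap the test harness in an environment with `reset()` and `step(action)` methods, the convention popularized by OpenAI Gym and its successor Gymnasium. The sketch below is a hypothetical, simplified adapter (it returns a 3-tuple rather than Gymnasium's exact signature): the action is the index of the next test to run, the state is the set of tests already run, and `run_test` is a stand-in you would replace with a call into your real framework.

```python
from typing import Callable, FrozenSet, List, Tuple

# Hypothetical hook into your real test framework:
# it runs one test by name and returns (passed, runtime_in_seconds).
RunTest = Callable[[str], Tuple[bool, float]]


class SuiteRunEnv:
    """Hypothetical adapter that exposes a test suite to an RL agent.

    State:  frozenset of test indices already executed in this episode.
    Action: index of the next test to run.
    Reward: 1 for a failing test (a bug exposed), minus a small runtime penalty.
    """

    def __init__(self, tests: List[str], run_test: RunTest, time_weight: float = 0.01):
        self.tests = tests
        self.run_test = run_test
        self.time_weight = time_weight
        self.executed: FrozenSet[int] = frozenset()

    def reset(self) -> FrozenSet[int]:
        self.executed = frozenset()
        return self.executed

    def available_actions(self) -> List[int]:
        return [i for i in range(len(self.tests)) if i not in self.executed]

    def step(self, action: int) -> Tuple[FrozenSet[int], float, bool]:
        if action in self.executed:
            raise ValueError(f"{self.tests[action]} already ran in this episode")
        passed, seconds = self.run_test(self.tests[action])
        reward = (0.0 if passed else 1.0) - self.time_weight * seconds
        self.executed = self.executed | {action}
        done = not self.available_actions()  # episode ends when every test has run
        return self.executed, reward, done


if __name__ == "__main__":
    # Stand-in results instead of a real test run: name -> (passed, seconds).
    fake_results = {"test_login": (True, 2.0), "test_checkout": (False, 5.0),
                    "test_search": (True, 1.0)}
    env = SuiteRunEnv(list(fake_results), fake_results.__getitem__)
    state, done = env.reset(), False
    while not done:
        action = env.available_actions()[0]  # a trained agent would choose here
        state, reward, done = env.step(action)
        print(env.tests[action], round(reward, 3))
```

Keeping the adapter thin means the existing framework stays the source of truth for running tests; only the ordering decision is handed to the agent.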

A key challenge in this process is giving RL components access to relevant test data and an effective way to communicate with the testing environment.

To guide the RL components towards achieving specific testing goals, such as increasing code coverage or reducing the time taken for testing, it's crucial to establish a robust reward system.
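A reward system like this is often just a weighted sum of the signals you care about. The sketch below shows one hypothetical way to combine them: new lines covered, failures found, and runtime. The `RunOutcome` fields and the default weights are assumptions for illustration, not a standard; you would tune them for your own project.

```python
from dataclasses import dataclass


@dataclass
class RunOutcome:
    new_lines_covered: int  # lines executed for the first time in this episode
    failed: bool            # did the test expose a failure?
    seconds: float          # wall-clock runtime of the test


def reward(outcome: RunOutcome, w_coverage: float = 0.01,
           w_failure: float = 1.0, w_time: float = 0.05) -> float:
    """Weighted reward: favor new coverage and failures, penalize slow tests."""
    return (w_coverage * outcome.new_lines_covered
            + (w_failure if outcome.failed else 0.0)
            - w_time * outcome.seconds)


if __name__ == "__main__":
    fast_coverage = RunOutcome(new_lines_covered=120, failed=False, seconds=2.0)
    slow_failure = RunOutcome(new_lines_covered=0, failed=True, seconds=30.0)
    print(reward(fast_coverage), reward(slow_failure))
```

With these defaults, the fast test that only adds coverage scores about 1.1, while the slow test that finds a bug scores about -0.5, so the agent would learn to prefer coverage over a slow bug-finder. Raising `w_failure` or lowering `w_time` shifts that trade-off, which is exactly the kind of goal-setting the reward system is meant to encode.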

Additionally, to adapt to updates in the software, it's important to continuously monitor and refine the RL components. Despite these challenges, integrating RL with current frameworks can significantly improve test automation by enabling intelligent, adaptive testing that evolves with the software, leading to improved software releases and more efficient testing processes.

Tools and Platforms for AI-Powered Testing Automation

- Test.ai: Think of this tool as an intelligent member of your testing team! Test.ai uses artificial intelligence (AI) to automate testing, with an emphasis on self-healing and visual testing. It's excellent for spotting user interface changes and making sure your software works and looks great across various browsers and devices.

- Applitools: Applitools is your best bet if you want to find every little visual glitch. Because it focuses on visual AI testing, it can detect even the smallest variations in the UI of your app. Moreover, cross-browser testing is supported, so you're covered everywhere!

- Mabl: Mabl is ideal for comprehensive testing. You will do less manual labor because it combines visual testing with AI-based test maintenance. It also facilitates effective management of test cases, which streamlines the testing procedure as a whole.

- Functionize: This tool uses artificial intelligence (AI) to create and run tests intelligently. It is designed to work with dynamic test scripts, which is very useful if your software is constantly changing. Consider it your versatile, adaptive testing partner!

- Testim.io: Well-known for its AI-driven stability analysis and test creation, Testim.io facilitates the rapid creation of reliable, automated tests. It adapts to changes in the application by using self-healing tests, which lowers the need for maintenance.

- Sauce Labs: For teams that need a cloud-based platform, Sauce Labs provides AI-driven automated testing solutions that integrate with well-known continuous integration and delivery (CI/CD) tools, making it easier to keep those pipelines running smoothly.

These tools are here to make your life easier by automating repetitive tasks and improving test accuracy, so you can focus on what really matters: delivering great software!

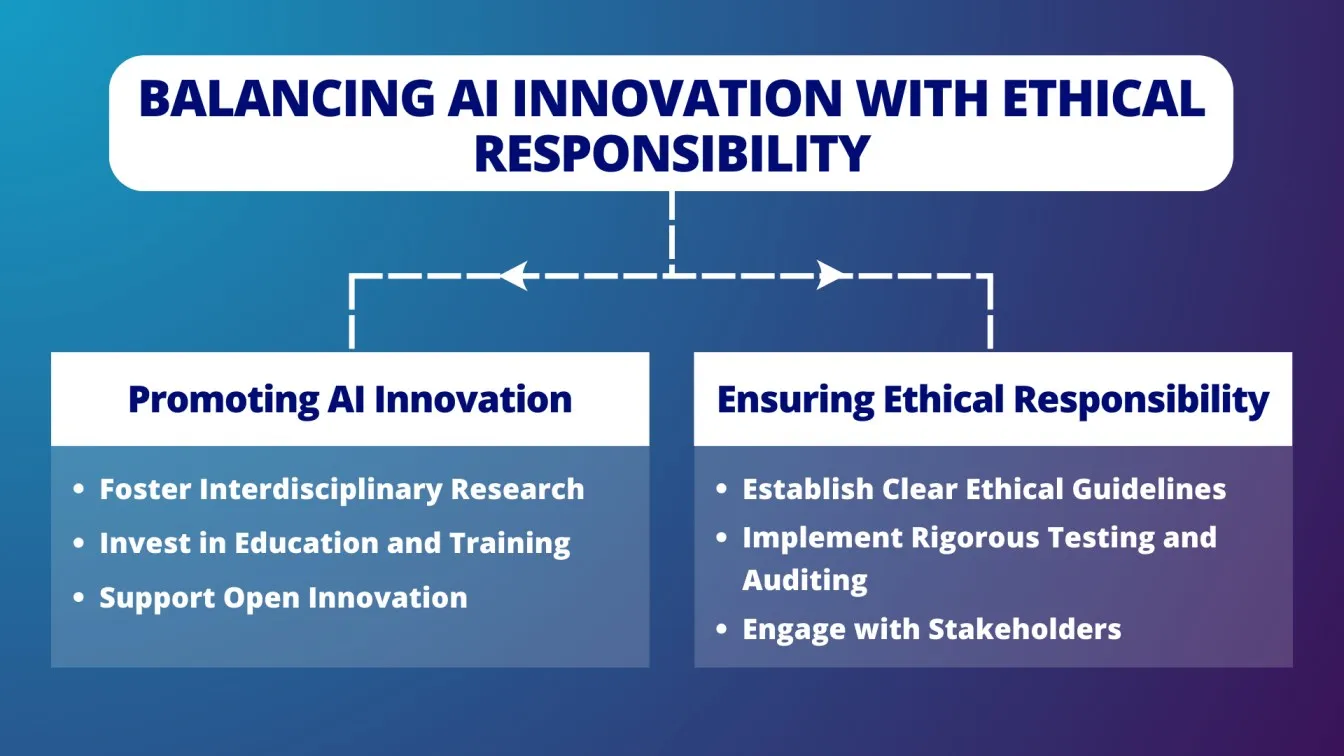

Ethical Considerations in AI-Driven Software Testing

To preserve transparency and confidence in AI-driven software testing, ethical issues must be taken into account. Despite their strength, AI tools have the potential to introduce biases from training data, producing unfair results in testing scenarios. AI algorithms may access sensitive user data, raising privacy concerns and necessitating stringent data protection protocols.
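One concrete data-protection step is to mask personal data before production logs or user records are used to train or prompt an AI test generator. The sketch below uses two simple regular expressions for email addresses and phone-like digit sequences. These patterns are illustrative assumptions: they will miss many kinds of sensitive data and may also over-mask things like timestamps, so a real pipeline needs a more thorough approach.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")  # at least 9 digits, spaces, or dashes


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("<EMAIL>", text)
    return PHONE.sub("<PHONE>", text)


if __name__ == "__main__":
    log_line = "Contact jane.doe@example.com or +1 555-123-4567 about order 42"
    print(mask_pii(log_line))
```

Masking before the data ever reaches the model is simpler to audit than trying to control what the model later reproduces.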

Another difficulty is ensuring accountability: to avoid "black box" problems, it is crucial to understand how the AI reaches its decisions. Finally, because AI may displace some testing roles, testers need to be upskilled so they can collaborate effectively with AI tools. By addressing these ethical issues, AI-powered testing can improve software quality without jeopardizing privacy, fairness, or human rights.

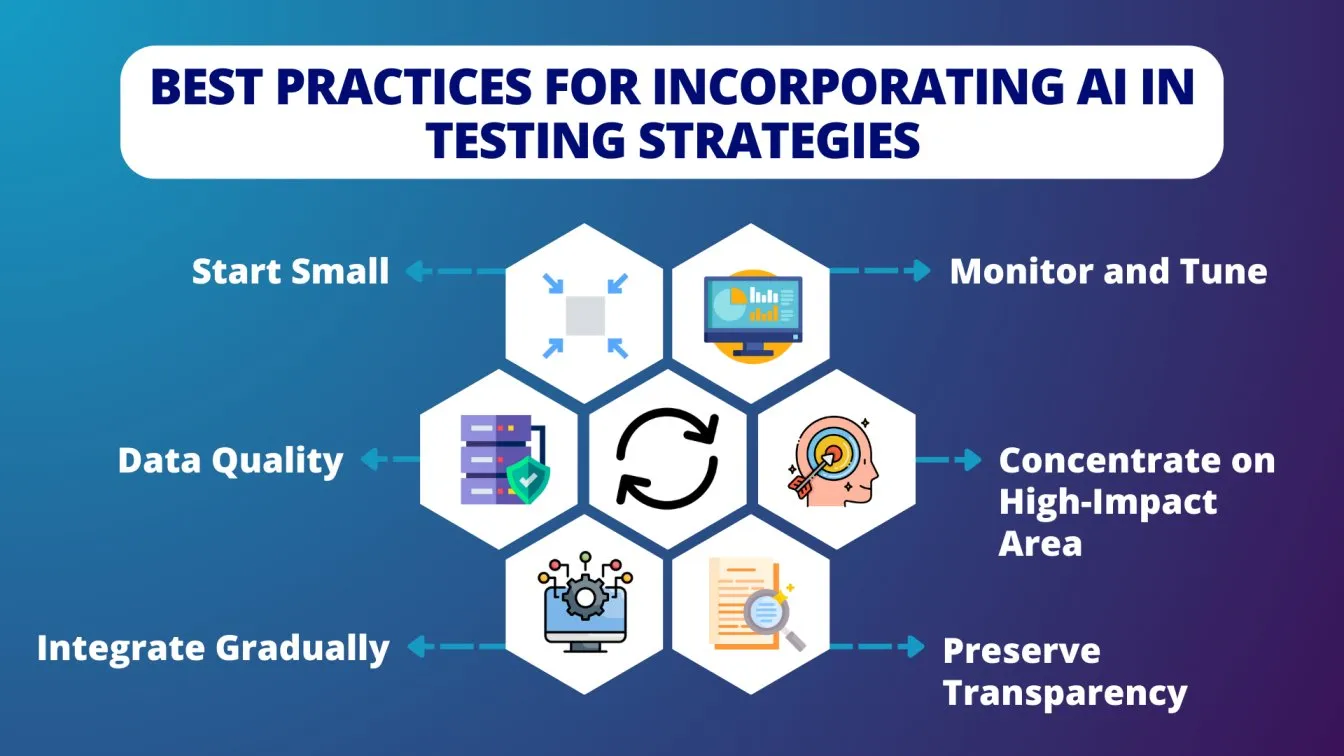

Best Practices for Incorporating AI in Testing Strategies

Start Small: Before expanding, start with small, manageable AI projects to gain an understanding of the technology's potential and constraints.

Data Quality: Train AI models on high-quality, unbiased data to prevent erroneous or skewed test results.

Integrate Gradually: To create a hybrid approach that takes advantage of both automated and manual strengths, integrate AI tools with current testing frameworks.

Monitor and Tune: To increase accuracy and dependability, continuously track the performance of AI systems and adjust models in response to user feedback.

Concentrate on High-Impact Areas: To increase productivity and decrease manual labor, apply AI to complicated, repetitive, or time-consuming tasks.

Preserve Transparency: Keep the AI decision-making process transparent to foster trust and ensure accountability for test results.

Wrapping Up!

Generative AI is transforming software testing by automating test generation, optimizing test cases, and improving overall testing efficiency. It reduces manual effort, enhances coverage, and enables more thorough testing, leading to higher software quality and faster release cycles. By handling complex and repetitive tasks, AI allows testers to focus on strategic decision-making and creative problem-solving. However, successful integration requires careful planning, high-quality data, and continuous monitoring.

As AI technology continues to evolve, it will play an increasingly vital role in creating reliable, scalable, and efficient testing processes, ultimately contributing to more robust and user-friendly software solutions. Additionally, leveraging AI for bug detection and natural language processing can enhance software quality assurance by identifying potential issues early and automating resource allocation for more efficient testing workflows.

People Also Ask

👉 Is GPT a generative model?

Yes, GPT (Generative Pre-trained Transformer) is a generative language model designed to produce human-like text.

👉 Can GPT generate test cases?

Yes, GPT can generate test cases from provided software requirements and scenarios, although the output should be reviewed because it can be incomplete or incorrect.

👉 What is the best way to train a reinforcement learning model?

Effective reinforcement learning relies on continuous feedback loops that adapt the model's actions to maximize rewards over time.

👉 What kind of data is needed for reinforcement learning?

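Reinforcement learning learns from experience: data on states, the actions taken, the rewards received, and the resulting next states. From this data it learns a policy, the strategy for choosing optimal actions.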
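In code, that experience is usually stored as a stream of transitions. The sketch below shows a minimal replay buffer of (state, action, reward, next_state, done) tuples, the kind of data structure DQN-style methods sample training batches from; the class names and example values are illustrative.

```python
import random
from collections import deque
from typing import Any, NamedTuple


class Transition(NamedTuple):
    state: Any
    action: Any
    reward: float
    next_state: Any
    done: bool


class ReplayBuffer:
    """Fixed-size store of past transitions; the oldest entries are dropped first."""

    def __init__(self, capacity=10_000, seed=None):
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Random batches break the correlation between consecutive steps.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


if __name__ == "__main__":
    buf = ReplayBuffer(capacity=3, seed=0)
    buf.add("home", "open_settings", -0.01, "settings", False)
    buf.add("settings", "import_file", -0.01, "import", False)
    buf.add("import", "upload_huge", 1.0, "crash", True)
    buf.add("crash", "restart", 0.0, "home", False)  # evicts the oldest entry
    print(len(buf), buf.sample(2))
```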
