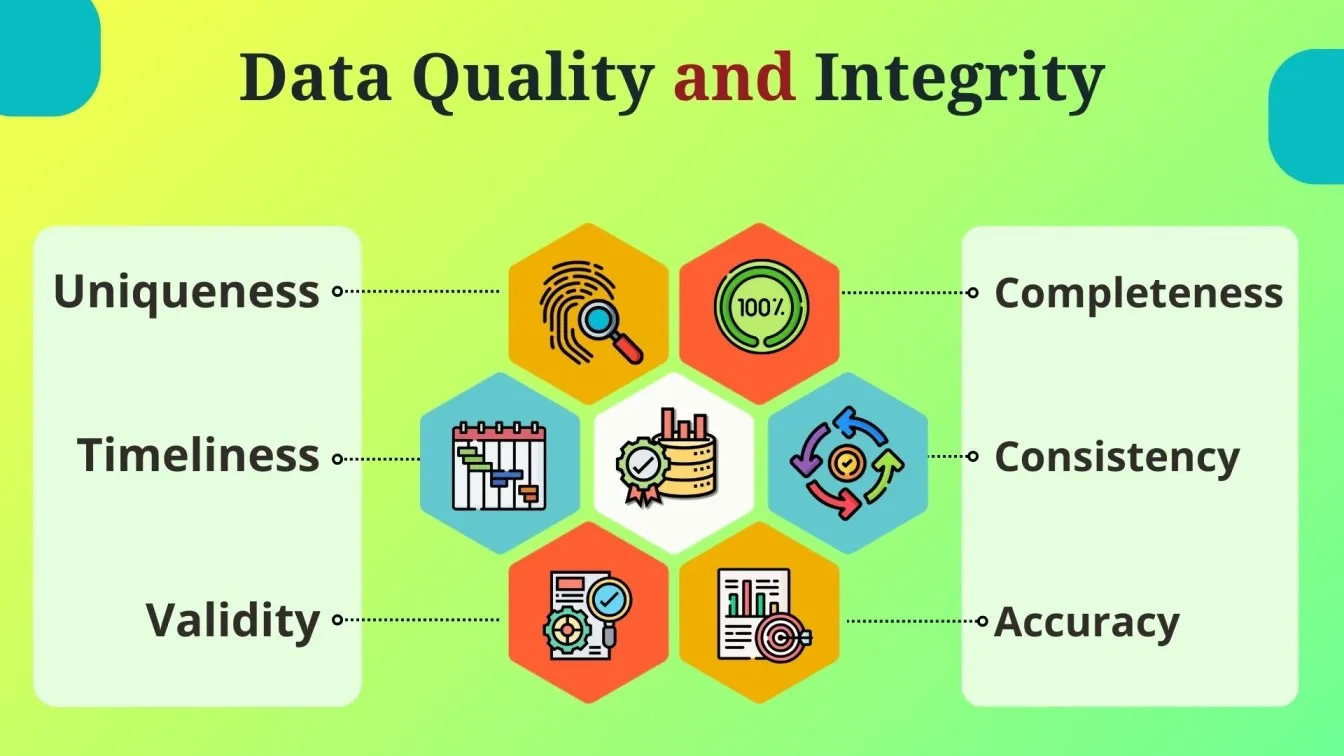

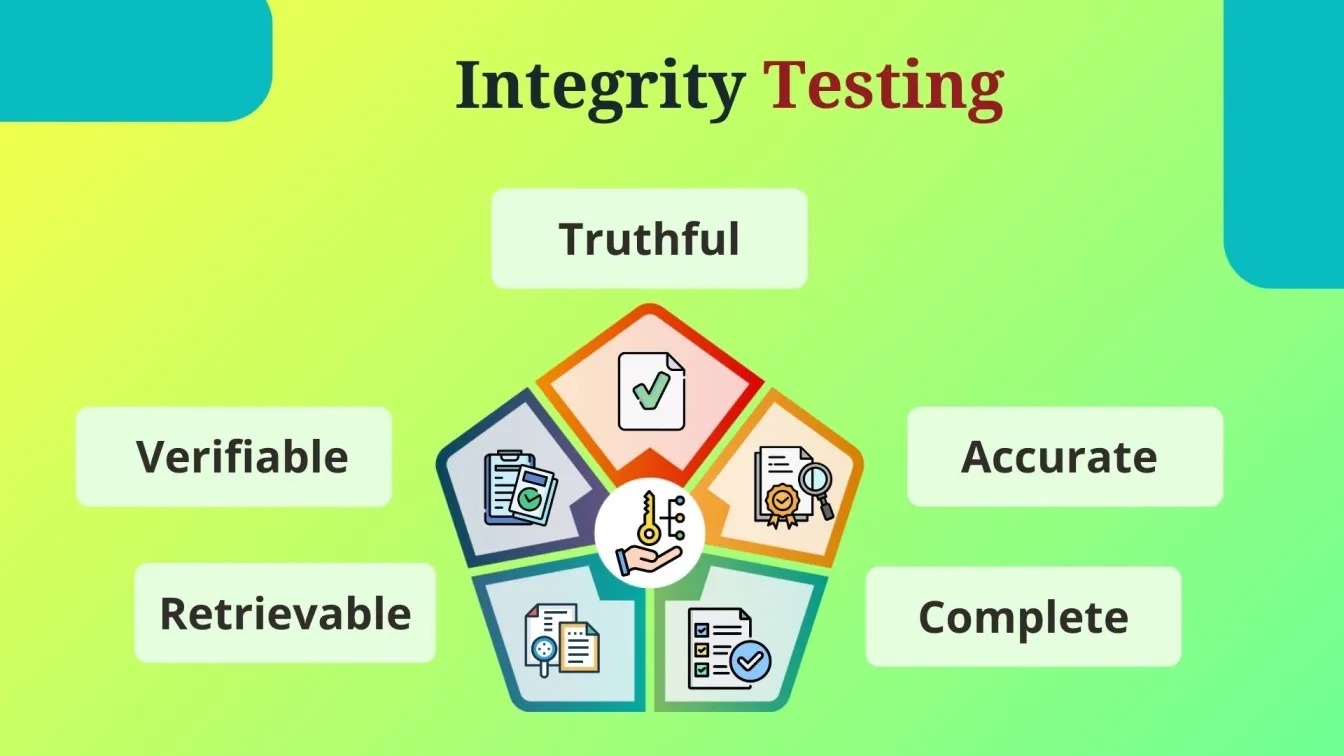

Data integrity testing is crucial for ensuring that data is accurate, consistent, and secure. Effective integrity checks minimize errors and help detect unauthorized changes. Organizations combine data integrity validation with ongoing data integrity monitoring to safeguard information and comply with regulations.

Regular database integrity checks catch corruption early and keep databases reliable. Businesses can use data integrity software to automate integrity testing and strengthen security. Knowing how to check and verify data integrity improves both decision-making and operational efficiency.

What is an integrity test? It is a structured method for checking data against defined rules to confirm its accuracy. Testing proactively keeps these checks effective over time. Verifying data integrity is essential for compliance, security, and performance, and robust validation delivers the reliable, high-quality data that business success depends on.

Why You Should Read This Blog:

📌 Ensure Data Reliability: Learn how data integrity testing safeguards data accuracy and consistency.

📌 Master Data Quality Assurance: Discover best practices for maintaining high-quality data.

📌 Understand Key Integrity Types: Explore entity integrity, domain integrity, and their role in data integrity validation.

📌 Prevent Data Corruption: Identify and mitigate risks with data integrity monitoring and checking techniques.

📌 Boost Compliance & Security: Learn how database integrity checks help you meet industry standards.

📌 Optimize Testing Strategies: Learn how to check data integrity, implement data integrity software, and strengthen integrity testing in your systems.

What is Data Integrity Testing?

Data integrity testing verifies the accuracy, consistency, and reliability of data throughout its lifecycle. It checks entity integrity, domain integrity, and referential integrity, and detects problems caused by unauthorized modifications, human error, and other integrity issues. The testing enforces business rules and regulatory requirements, ensuring high-quality data for informed decisions. By keeping data both consistent and reliable, it supports business requirements and enhances customer satisfaction.

Why Is Data Integrity Crucial for Businesses?

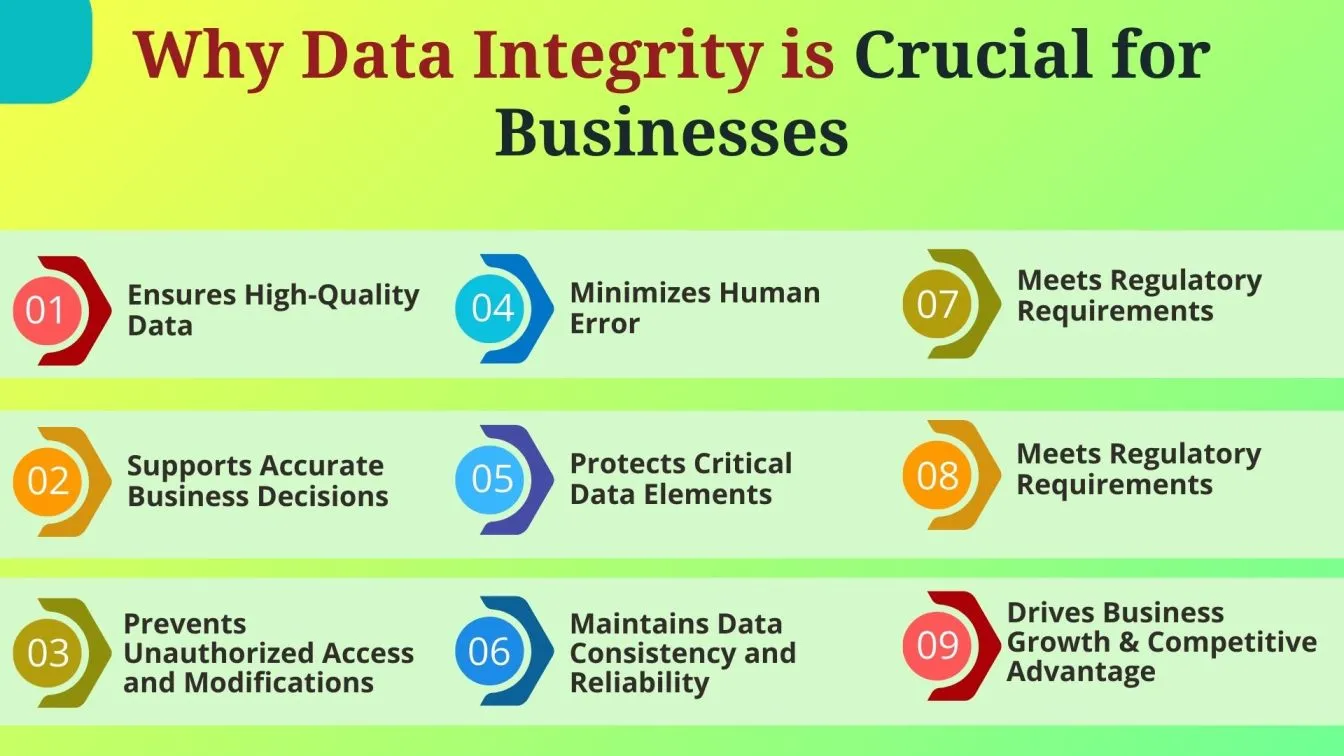

Data integrity plays a crucial role in ensuring high-quality data, which is vital for accurate, informed business decisions. Integrity controls guard against unauthorized access, unauthorized modifications, and human error, protecting critical data elements and keeping data reliable.

By preserving entity integrity, referential integrity, and data consistency, businesses can meet regulatory requirements, avoid integrity issues, and enhance customer satisfaction. Reliable data drives business growth, supports business requirements, and provides a competitive advantage in decision-making and strategic planning.

Types of Data Integrity in Information Systems

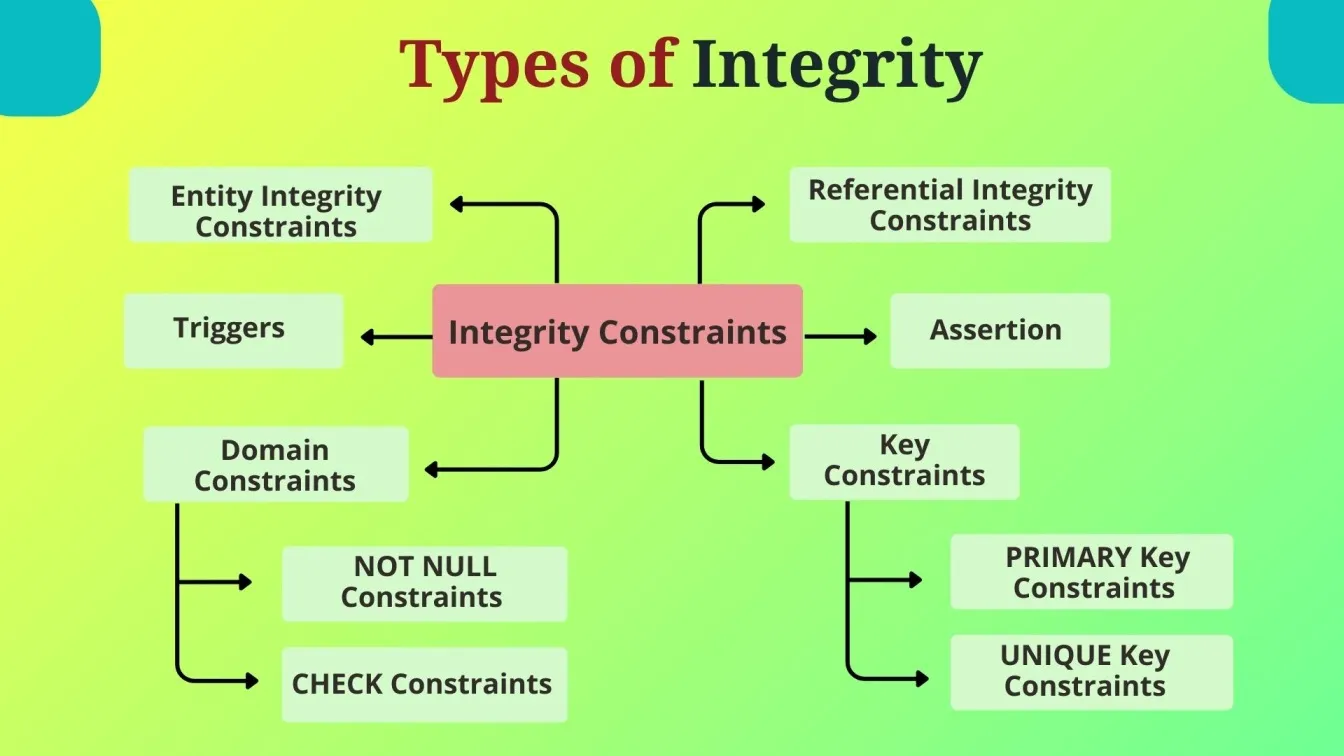

The four key types of data integrity—entity, referential, domain, and logical integrity—ensure data consistency and reliability.

1. Entity Integrity

- Ensures: Unique, identifiable records via primary keys.

- Example: A customer database uses unique Customer IDs to prevent duplicates.

- Testing:

- Validate primary keys for uniqueness and non-null values.

- Attempt duplicate entries; system should reject them.

- Run SQL queries to detect missing or duplicate keys.

2. Referential Integrity

- Ensures: Relationships between tables remain consistent via foreign keys.

- Example: A product in an order system can’t be deleted if linked to an active order.

- Testing:

- Check foreign key constraints and cascading updates/deletions.

- Try inserting orphan records; system should prevent them.

3. Domain Integrity

- Ensures: Fields accept only valid data types and values.

- Example: An age field should only accept positive integers within a set range.

- Testing:

- Verify data types match field requirements.

- Test boundary values and invalid inputs (e.g., text in numeric fields).

4. Logical Integrity

- Ensures: Data remains accurate during processing, supporting business rules.

- Example: Financial transactions must maintain balanced debit and credit entries.

- Testing:

- Validate business rules and transaction accuracy.

- Perform concurrency testing to prevent data corruption.

Implementing these tests strengthens data integrity, ensuring compliance, security, and reliable decision-making.
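The negative tests listed above (inserting duplicates and orphan records and expecting the database to refuse them) can be sketched with Python's standard-library sqlite3 module. This is a minimal illustration rather than a production test suite: the customers and orders tables are hypothetical, and other database drivers raise different exception types, so the is_rejected helper would need adapting.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with hypothetical customers/orders tables."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is enabled on the connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute(
        "CREATE TABLE customers ("
        " customer_id INTEGER PRIMARY KEY,"  # entity integrity: unique key
        " name TEXT NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE orders ("
        " order_id INTEGER PRIMARY KEY,"
        " customer_id INTEGER NOT NULL"
        "   REFERENCES customers(customer_id) ON DELETE RESTRICT)"  # referential integrity
    )
    conn.execute("INSERT INTO customers VALUES (1, 'Ada')")
    conn.execute("INSERT INTO orders VALUES (100, 1)")
    conn.commit()
    return conn


def is_rejected(conn: sqlite3.Connection, sql: str) -> bool:
    """Run a statement that should fail; return True if the database refused it."""
    try:
        conn.execute(sql)
    except sqlite3.IntegrityError:
        conn.rollback()
        return True
    conn.rollback()  # undo an unexpectedly accepted change so later tests stay independent
    return False


conn = make_db()
results = {
    # Entity integrity: a second row with customer_id 1 must be refused.
    "duplicate_key_rejected": is_rejected(conn, "INSERT INTO customers VALUES (1, 'Copy')"),
    # Referential integrity: an order pointing at a non-existent customer is an orphan.
    "orphan_rejected": is_rejected(conn, "INSERT INTO orders VALUES (101, 999)"),
    # Referential integrity: a customer with active orders cannot be deleted.
    "parent_delete_rejected": is_rejected(conn, "DELETE FROM customers WHERE customer_id = 1"),
}
print(results)
```

Running it should report True for all three checks. SQLite enforces foreign keys only when PRAGMA foreign_keys is ON for the connection; without it, the orphan insert and the parent delete would both succeed, which is exactly the kind of gap these tests are meant to catch.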

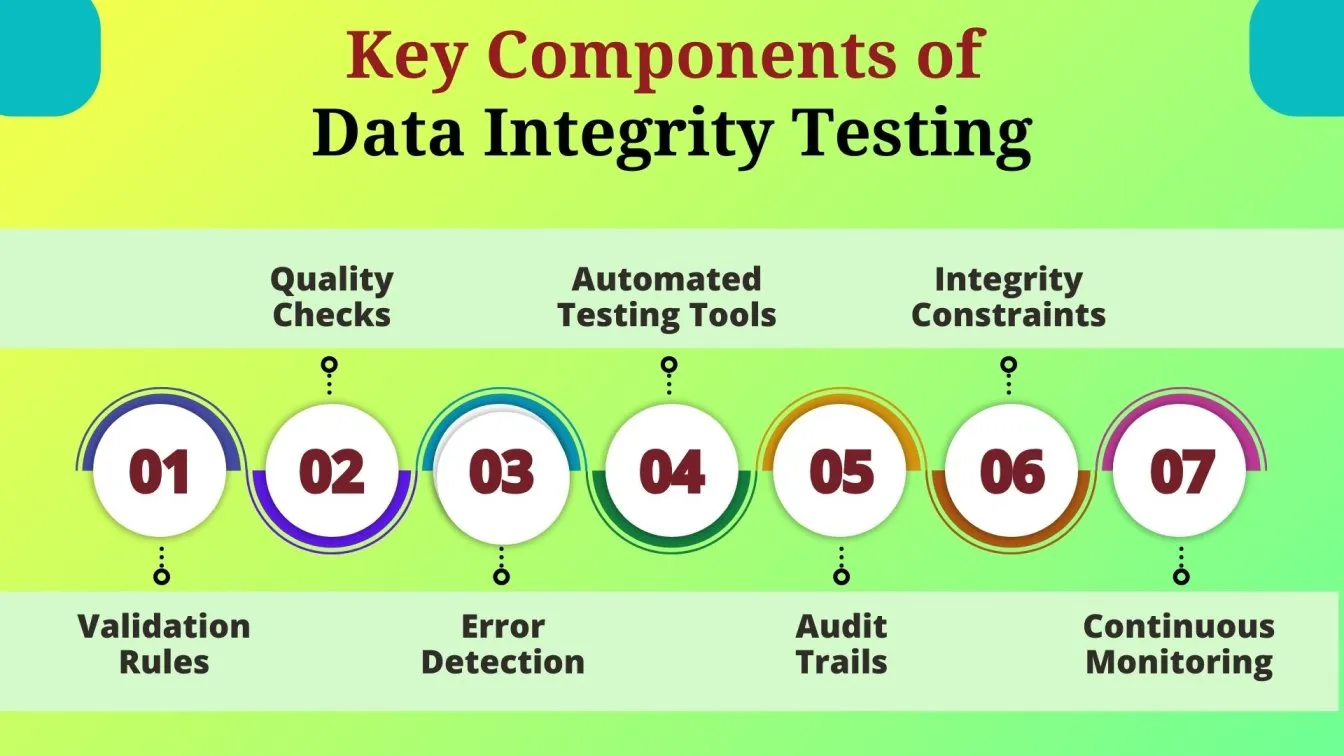

Key Components of Data Integrity Testing

Data integrity testing involves several key components that ensure the accuracy, consistency, and reliability of data within a system. These components include:

- Validation Rules: Enforce predefined conditions so that data meets required standards, maintaining data consistency and preventing integrity issues.

- Quality Checks: Regular quality checks identify discrepancies, ensuring high-quality data and verifying adherence to business rules and regulatory requirements.

- Error Detection: Mechanisms for detecting and correcting errors, such as entry errors and unauthorized modifications, keep data reliable.

- Automated Testing Tools: Advanced tools enable continuous, automated data integrity testing, improving efficiency and reducing the likelihood of human error.

- Audit Trails: A detailed record of data changes provides traceability and accountability, deterring unauthorized access and modifications and making them detectable.

- Integrity Constraints: Rules such as entity integrity and referential integrity govern how data is related and maintained across the system.

- Continuous Monitoring: Ongoing monitoring detects and addresses integrity issues in real time, keeping data reliable and consistent over time.

These components form the backbone of an effective data integrity testing strategy, supporting data-driven decision-making and ensuring data adheres to regulatory compliance and business requirements.
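The Audit Trails component can be made tamper-evident by chaining each log entry to the previous one with a cryptographic hash, so that editing or deleting a past entry breaks verification. The sketch below uses only Python's hashlib and json modules; the entry fields (actor, action, record_id) are hypothetical, and a real deployment would also need protected storage for the log.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous hash together with a canonical JSON encoding of the payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + canonical).encode("utf-8")).hexdigest()


def append(log: list, payload: dict) -> None:
    """Append an audit entry linked to the hash of the entry before it."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev": prev, "hash": entry_hash(prev, payload)})


def verify(log: list) -> int:
    """Return the index of the first broken entry, or -1 if the chain is intact."""
    prev = GENESIS
    for i, entry in enumerate(log):
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["payload"]):
            return i
        prev = entry["hash"]
    return -1


log = []
append(log, {"actor": "alice", "action": "UPDATE", "record_id": 42})
append(log, {"actor": "bob", "action": "DELETE", "record_id": 7})
print(verify(log))  # -1: chain intact

log[0]["payload"]["actor"] = "mallory"  # simulate an unauthorized edit
print(verify(log))  # 0: tampering detected at the first entry
```

Because anyone who can rewrite the entire log could also recompute every hash, the most recent hash would typically be stored or published somewhere the log writer cannot modify.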

Common Challenges in Data Integrity Testing

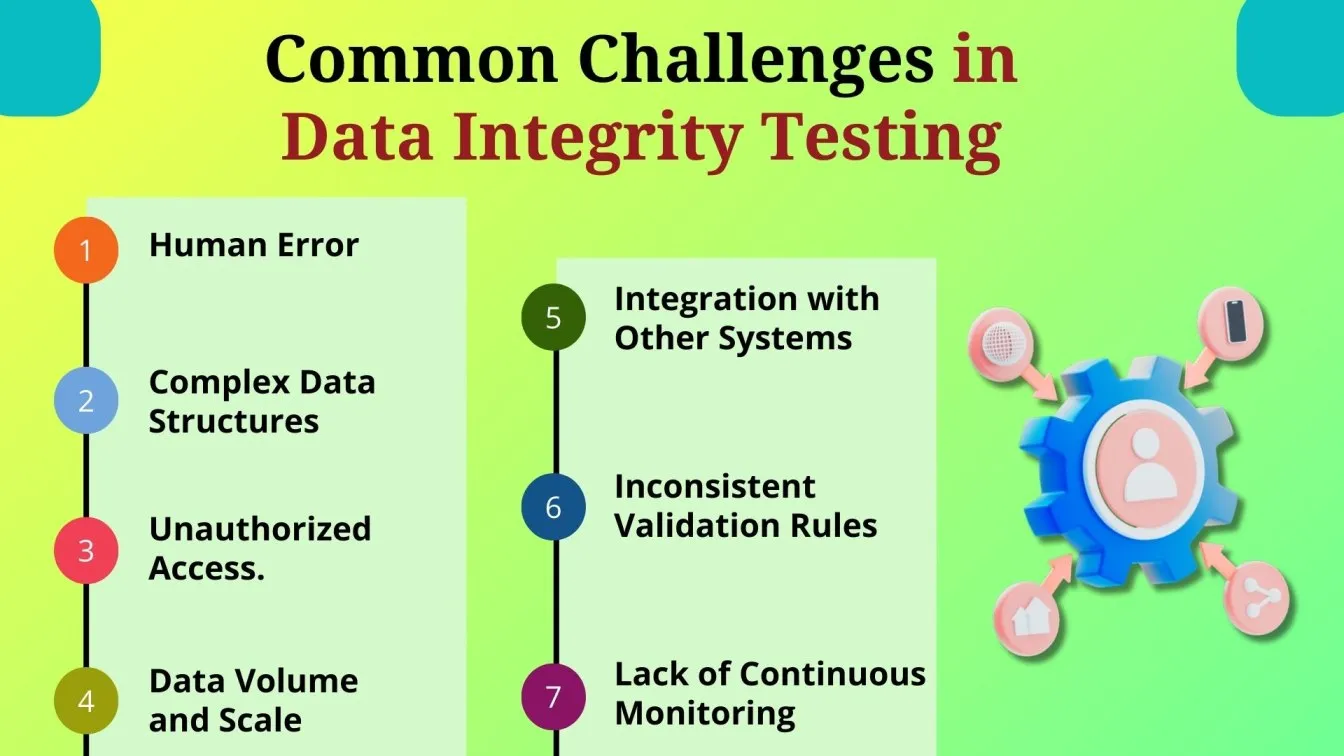

Data Integrity Testing faces several challenges that can impact the accuracy and reliability of data. Some of the most common challenges include:

- Human Error: Manual data entry and testing processes can lead to mistakes, compromising data accuracy and introducing integrity issues.

- Complex Data Structures: Complex relationships between data can make it difficult to ensure referential integrity and domain integrity, leading to potential inconsistencies.

- Unauthorized Access: Unauthorized access to sensitive data can alter or corrupt information, violating data integrity and affecting business decisions.

- Data Volume and Scale: Large volumes of data can complicate the data integrity testing process, requiring more resources and time to ensure consistency and accuracy.

- Integration with Other Systems: Integration with external systems can introduce inconsistencies, especially when data is transferred or transformed, potentially violating business rules and regulatory compliance.

- Inconsistent Validation Rules: Inadequate or inconsistent validation rules across systems can lead to errors and discrepancies, compromising data reliability.

- Lack of Continuous Monitoring: Without continuous monitoring, integrity issues can go undetected, affecting data quality over time and impacting informed decisions.

Addressing these challenges through regular audits, automated testing tools, and quality checks is crucial to maintaining data integrity and supporting business operations.
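For the Integration with Other Systems challenge, a common check after a transfer is to reconcile source and target: compare row counts, then compare a per-row fingerprint keyed by primary key to find missing, extra, or altered rows. Fingerprints are useful because each side can compute them independently and only the hashes need to be exchanged. This is a minimal sketch assuming both sides fit in memory as {key: row} mappings; the sample rows are invented, and large tables would normally be compared in chunks or with database-side hashing.

```python
import hashlib


def fingerprint(row: tuple) -> str:
    """Stable SHA-256 fingerprint of a row's values (repr keeps types distinct)."""
    return hashlib.sha256(repr(row).encode("utf-8")).hexdigest()


def reconcile(source: dict, target: dict) -> dict:
    """Compare two {primary_key: row} mappings and classify the differences."""
    missing = sorted(source.keys() - target.keys())  # lost during transfer
    extra = sorted(target.keys() - source.keys())    # unexpected in the target
    changed = sorted(
        k for k in source.keys() & target.keys()
        if fingerprint(source[k]) != fingerprint(target[k])  # altered in transit
    )
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing": missing,
        "extra": extra,
        "changed": changed,
    }


source = {
    1: ("Ada", "2024-01-05", 120.00),
    2: ("Grace", "2024-01-06", 75.50),
    3: ("Alan", "2024-01-07", 10.00),
}
target = {
    1: ("Ada", "2024-01-05", 120.00),
    2: ("Grace", "2024-06-01", 75.50),  # date changed by a faulty transformation
    4: ("Edsger", "2024-01-08", 5.00),
}

print(reconcile(source, target))
```

In this example both sides hold three rows, so a count check alone would pass even though one row is missing, one is extra, and one was altered; the key-level comparison is what exposes the problems.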

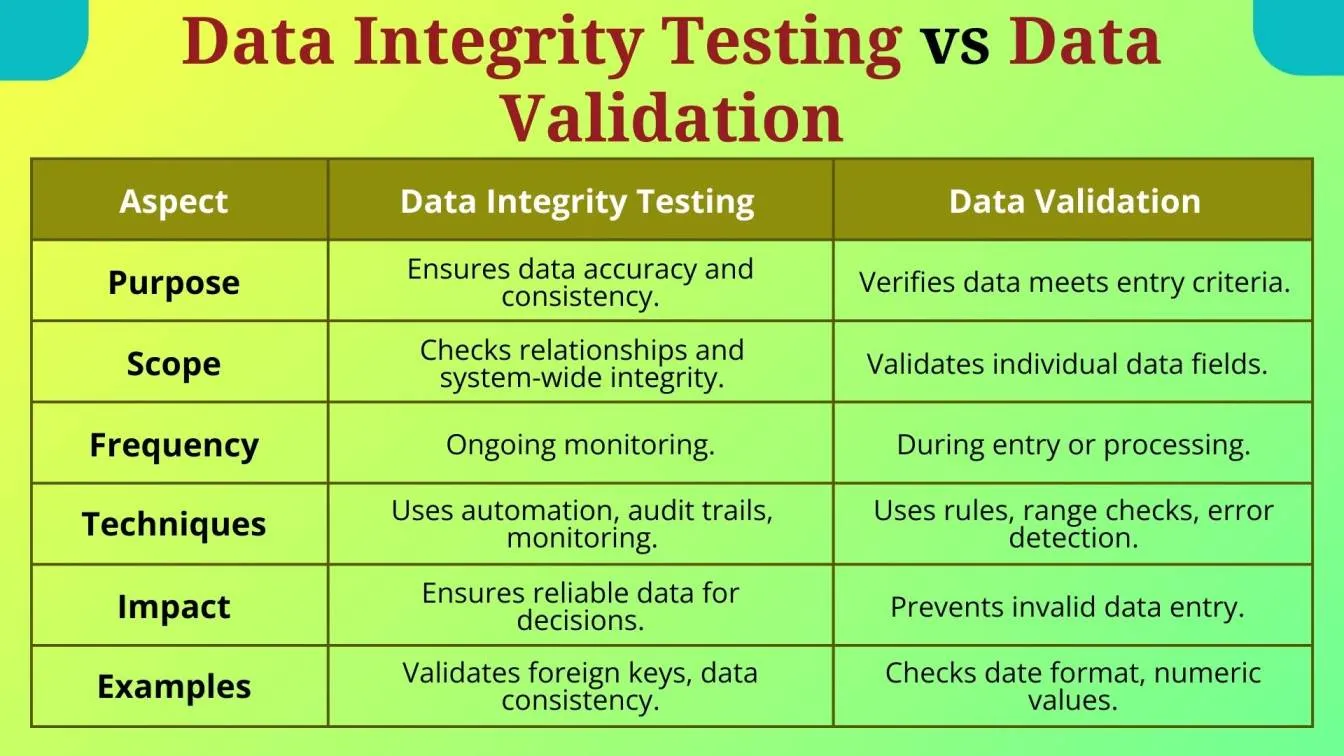

Data Integrity Testing vs. Data Validation

In data management, it's essential to distinguish between Data Integrity Testing and Data Validation. Both play crucial roles in maintaining high-quality data, but they serve different purposes and are applied at different stages of data processing. Understanding these differences helps organizations implement the right strategies for data consistency, reliability, and regulatory compliance.

Both Data Integrity Testing and Data Validation are essential for effective data management. While integrity testing ensures that data remains reliable and consistent throughout its lifecycle, validation focuses on ensuring data correctness at the point of entry. Together, they play a critical role in maintaining high-quality data, supporting accurate insights, and ensuring compliance with regulatory requirements.
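To make the point-of-entry side concrete, here is a minimal sketch of a validation function that enforces domain rules on an incoming record, exercised with boundary-value and invalid-input cases. The field names and the 18 to 100 age range are assumptions for illustration; an integrity test, by contrast, would re-check data already stored, for example with SQL queries run on a schedule.

```python
from datetime import date

MIN_AGE, MAX_AGE = 18, 100  # hypothetical business range for the "age" field


def validate_employee(record: dict) -> list[str]:
    """Check one incoming record against domain rules; an empty list means valid."""
    errors = []
    age = record.get("age")
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(age, int) or isinstance(age, bool):
        errors.append("age must be an integer")
    elif not MIN_AGE <= age <= MAX_AGE:
        errors.append(f"age must be between {MIN_AGE} and {MAX_AGE}")
    if not isinstance(record.get("dob"), date):
        errors.append("dob must be a date")
    return errors


# Boundary values (at and just outside each limit) plus invalid inputs.
cases = {17: False, 18: True, 100: True, 101: False, -5: False, "abc": False}
for age, should_pass in cases.items():
    accepted = not validate_employee({"age": age, "dob": date(1990, 1, 1)})
    status = "OK" if accepted == should_pass else "UNEXPECTED"
    print(f"age={age!r}: {'accepted' if accepted else 'rejected'} ({status})")
```

Returning a list of messages instead of raising on the first problem lets an entry form or import job report every violation in a record at once.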

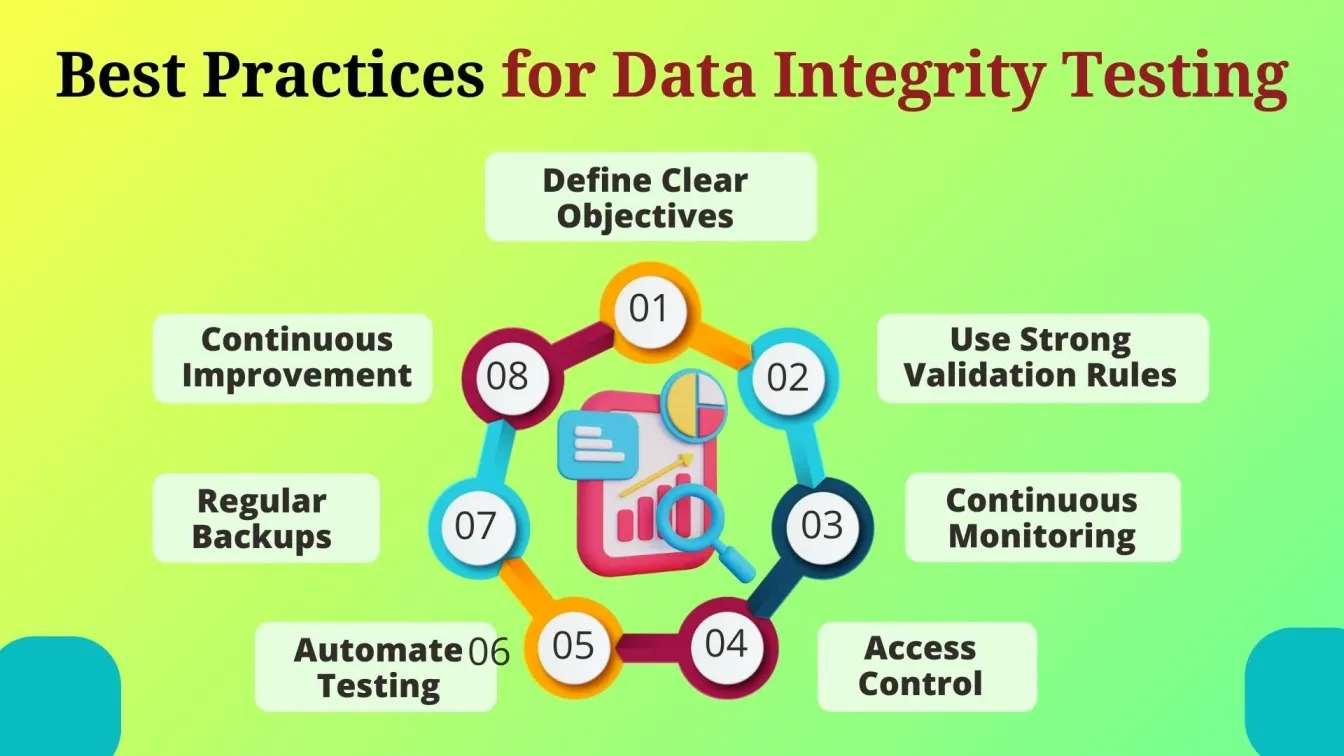

Best Practices for Data Integrity Testing

Best practice is to test each of the four types of data integrity (entity, referential, domain, and logical) with targeted methods that confirm data consistency and reliability.

1. Entity Integrity

- Definition: Ensures each record is unique and identifiable using a primary key, preventing duplicate entries.

- Example: In a customer database, each customer has a unique Customer ID, ensuring accurate tracking and preventing duplicate records, thus enhancing data reliability.

- Testing Methods:

- Primary Key Validation: Check if primary keys are unique and not null.

- Duplicate Entry Testing: Attempt to insert duplicate values into the primary key field and verify system rejection.

- Automated SQL Queries: Run queries to detect missing or duplicate primary keys.

2. Referential Integrity

- Definition: Maintains consistency between related tables using foreign keys, enforcing data consistency.

- Example: In an order management system, if a product is deleted from the catalog, existing orders linked to that product become invalid. Referential integrity prevents this by restricting deletion if related orders exist.

- Testing Methods:

- Foreign Key Constraints Testing: Verify that foreign key relationships are correctly enforced.

- Cascade Operations Testing: Test cascading updates or deletions to ensure dependent data updates correctly.

- Negative Testing: Try inserting orphan records (i.e., records with foreign keys that do not exist) and ensure they are rejected.

3. Domain Integrity

- Definition: Enforces valid data types and values within a field, adhering to predefined rules.

- Example: In an employee database, the "Age" field accepts only positive integers within a specific range, preventing invalid entries like negative numbers or letters.

- Testing Methods:

- Data Type Testing: Verify that fields accept only valid data types (e.g., integer for age, date for DOB).

- Boundary Value Testing: Check values at the lower and upper limits of defined constraints (e.g., age cannot be negative or above a certain threshold).

- Invalid Input Testing: Enter incorrect data (e.g., text in a numeric field) to ensure system rejection.

4. Logical Integrity

- Definition: Ensures data remains accurate and consistent during processing and usage, supporting business rules.

- Example: In financial transactions, total debit and credit entries must always balance, maintaining the integrity of accounting records.

- Testing Methods:

- Business Rule Validation: Check whether data transformations and calculations follow predefined rules.

- Transaction Testing: Ensure financial or data-related transactions are processed correctly without errors.

- Concurrency Testing: Test simultaneous access to records by multiple users to prevent data corruption or inconsistencies.

By implementing these testing methodologies, organizations can ensure high data quality, maintain regulatory compliance, detect unauthorized or erroneous changes, and improve decision-making processes.
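For logical integrity, the debit/credit example translates into two checks: post each financial transaction atomically (all legs or none), and periodically query for transactions whose legs do not balance. The following is a minimal sketch using Python's sqlite3 module, assuming a hypothetical journal table in which debits are positive and credits negative amounts in integer cents.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE journal ("
    " txn_id INTEGER NOT NULL,"
    " account TEXT NOT NULL,"
    " amount_cents INTEGER NOT NULL CHECK (amount_cents <> 0))"  # debit > 0, credit < 0
)


def post(conn: sqlite3.Connection, txn_id: int, legs: list[tuple[str, int]]) -> bool:
    """Insert all legs of one transaction atomically; return False if it was rejected."""
    if sum(amount for _, amount in legs) != 0:
        return False  # business rule: debits must equal credits
    try:
        with conn:  # commits on success, rolls back every leg on error
            conn.executemany(
                "INSERT INTO journal VALUES (?, ?, ?)",
                [(txn_id, account, amount) for account, amount in legs],
            )
    except sqlite3.IntegrityError:
        return False
    return True


print(post(conn, 1, [("cash", 5000), ("revenue", -5000)]))             # True
print(post(conn, 2, [("cash", 3000), ("fee", 0), ("revenue", -3000)]))  # False: zero leg fails CHECK
print(post(conn, 3, [("cash", 3000), ("revenue", -2000)]))              # False: does not balance
print(conn.execute("SELECT COUNT(*) FROM journal WHERE txn_id = 2").fetchone()[0])  # 0: rolled back

# Simulate a row written outside post(), e.g. by a faulty bulk load.
with conn:
    conn.execute("INSERT INTO journal VALUES (4, 'cash', 100)")

# Integrity test: every transaction's legs must sum to zero.
unbalanced = conn.execute(
    "SELECT txn_id, SUM(amount_cents) FROM journal "
    "GROUP BY txn_id HAVING SUM(amount_cents) <> 0"
).fetchall()
print(unbalanced)  # [(4, 100)]
```

The posting function prevents bad writes through the normal path, while the balance query catches data that arrived some other way, which is why both kinds of check are worth keeping.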

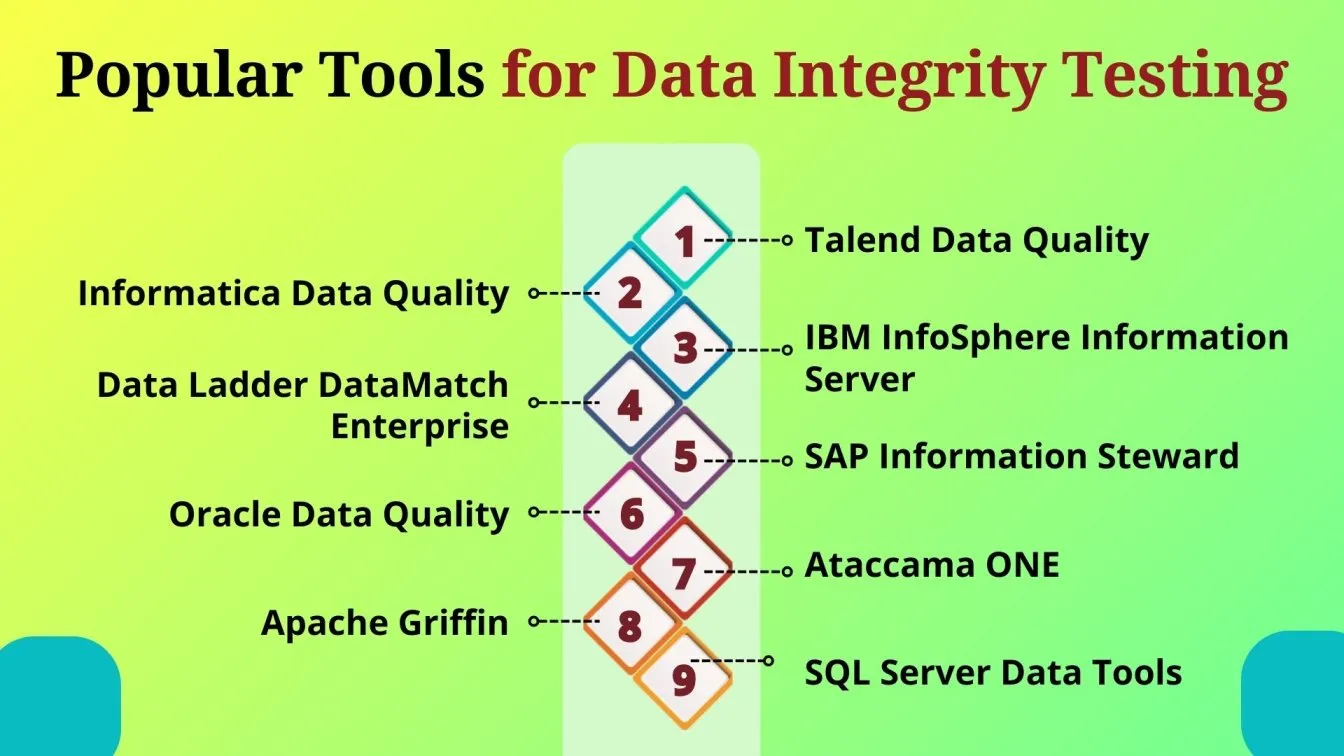

Popular Tools for Data Integrity Testing

Data integrity testing requires robust tools that ensure the accuracy, reliability, and consistency of data. The right tools can automate processes, minimize human error, and help meet regulatory requirements. Here are some popular tools used for effective data integrity testing:

- Talend Data Quality offers a comprehensive suite for managing data quality across various systems, providing data profiling, data cleansing, and data validation features.

- Informatica Data Quality supports data accuracy and consistency through real-time monitoring and data validation, and provides data cleansing with integration for automated testing.

- IBM InfoSphere Information Server provides a unified platform for data integration, data governance, and data quality, helping detect and correct data integrity issues through profiling, validation, and auditing.

- Data Ladder DataMatch Enterprise specializes in data matching and data cleansing to ensure entity integrity, automating data validation and reconciliation across multiple data sources.

- SAP Information Steward ensures data consistency, accuracy, and alignment with business rules, offering tools for data profiling, cleansing, and validation.

- Oracle Data Quality focuses on ensuring data integrity within Oracle-based databases, providing real-time data validation and cleansing to maintain high-quality data.

- Ataccama ONE provides a unified platform for data quality management and data governance, offering automated data profiling, cleansing, and integrity checks.

- Apache Griffin is an open-source tool for data quality and integrity testing, integrating with big data environments to provide continuous data monitoring.

- SQL Server Data Tools is used for integrity checks and data validation within SQL Server environments, providing tools for data profiling, validation rules, and error detection.

These tools enable businesses to efficiently manage data integrity testing, reduce error rates, and ensure that data meets regulatory standards and business requirements. They also support continuous monitoring and automated validation, driving improved business operations and data quality assurance.

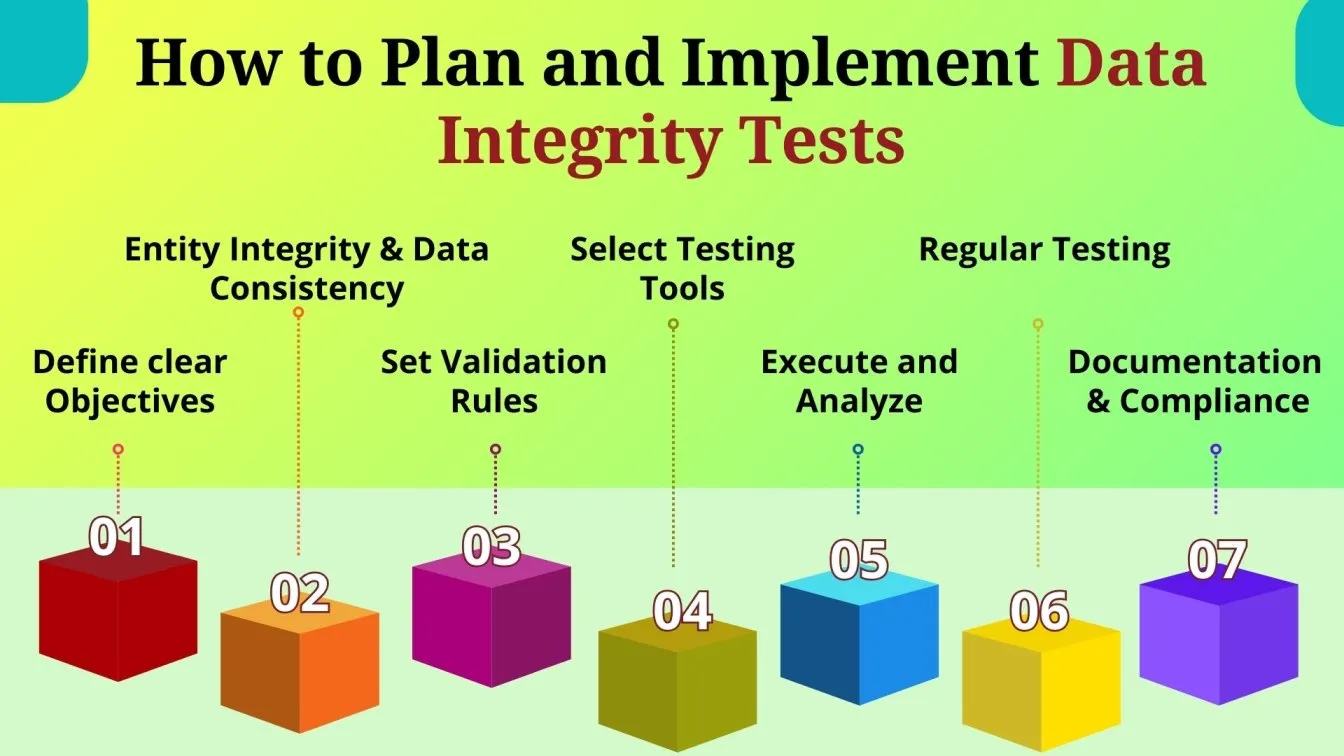

How to Plan and Implement Data Integrity Tests?

To plan and implement data integrity tests, begin by defining clear objectives based on business requirements and identifying critical data elements. Focus on ensuring entity integrity and data consistency across systems while adhering to business rules. Set specific validation rules and select the right data integrity testing tools for automation and continuous monitoring. Execute test cases, analyze results to identify issues like duplicate entries or data corruption, and perform regular tests to maintain high-quality data.

Lastly, document all findings and corrective actions to ensure compliance and support future improvements. This approach helps maintain reliable, consistent data for decision-making and business operations.
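The planning steps above (critical data elements, validation rules, execution, analysis, documentation) can be captured as a small table-driven test plan. In this sketch each check is a named SQL query that returns the violating rows, so an empty result means the check passed, and the run is saved as a JSON report for the documentation step. The tables, checks, and report format are hypothetical; one of the dedicated tools listed earlier could fill the same role.

```python
import json
import sqlite3
from datetime import datetime, timezone

# Each check: name, the critical data element it protects, and SQL returning violating rows.
CHECKS = [
    ("customer_id unique", "customers.customer_id",
     "SELECT customer_id FROM customers GROUP BY customer_id HAVING COUNT(*) > 1"),
    ("customer_id not null", "customers.customer_id",
     "SELECT rowid FROM customers WHERE customer_id IS NULL"),
    ("orders reference customers", "orders.customer_id",
     "SELECT o.order_id FROM orders o LEFT JOIN customers c "
     "ON o.customer_id = c.customer_id WHERE c.customer_id IS NULL"),
]


def run_checks(conn: sqlite3.Connection) -> dict:
    """Execute every check and return a findings report suitable for archiving."""
    findings = []
    for name, element, sql in CHECKS:
        rows = conn.execute(sql).fetchall()
        findings.append({
            "check": name,
            "element": element,
            "passed": not rows,
            "violations": len(rows),
            "sample": [list(r) for r in rows[:5]],  # keep a few examples for analysis
        })
    return {
        "run_at": datetime.now(timezone.utc).isoformat(),
        "passed": all(f["passed"] for f in findings),
        "findings": findings,
    }


# Demo data loaded without constraints, as in a staging area.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER, name TEXT);
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER);
    INSERT INTO customers VALUES (1, 'Ada'), (1, 'Ada (dup)'), (NULL, 'Unknown');
    INSERT INTO orders VALUES (100, 1), (101, 999);
""")

report = run_checks(conn)
print(json.dumps(report, indent=2))  # archive alongside any corrective actions
```

Keeping checks as data rather than code makes it easy to map each one back to a business requirement and to add new rules as the plan evolves.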

Real-World Examples of Data Integrity Failures

Data integrity failures can have significant consequences across industries. One example is the 2012 Knight Capital Group incident, in which a software error caused the firm's trading system to send millions of unintended orders, resulting in a loss of about $440 million in roughly 45 minutes. The problem stemmed from a faulty deployment: new code was not installed on one of the firm's eight order-routing servers, and a repurposed flag reactivated obsolete logic on that server, so orders were processed incorrectly.

Another example is the Volkswagen emissions scandal, in which software in diesel vehicles detected emissions tests and altered engine behavior, so the emissions data reported to regulators did not reflect real-world driving. The result was a massive loss of customer trust, regulatory penalties, and reputational damage. These examples highlight the critical role of maintaining data integrity for financial, legal, and operational success.

In healthcare, a data breach at a hospital system corrupted patient records, leading to misdiagnoses and improper treatment. This compromised patient safety and resulted in legal action and a loss of patient trust. These real-world failures underscore the importance of robust data integrity measures for avoiding financial losses, legal ramifications, and reputational harm.

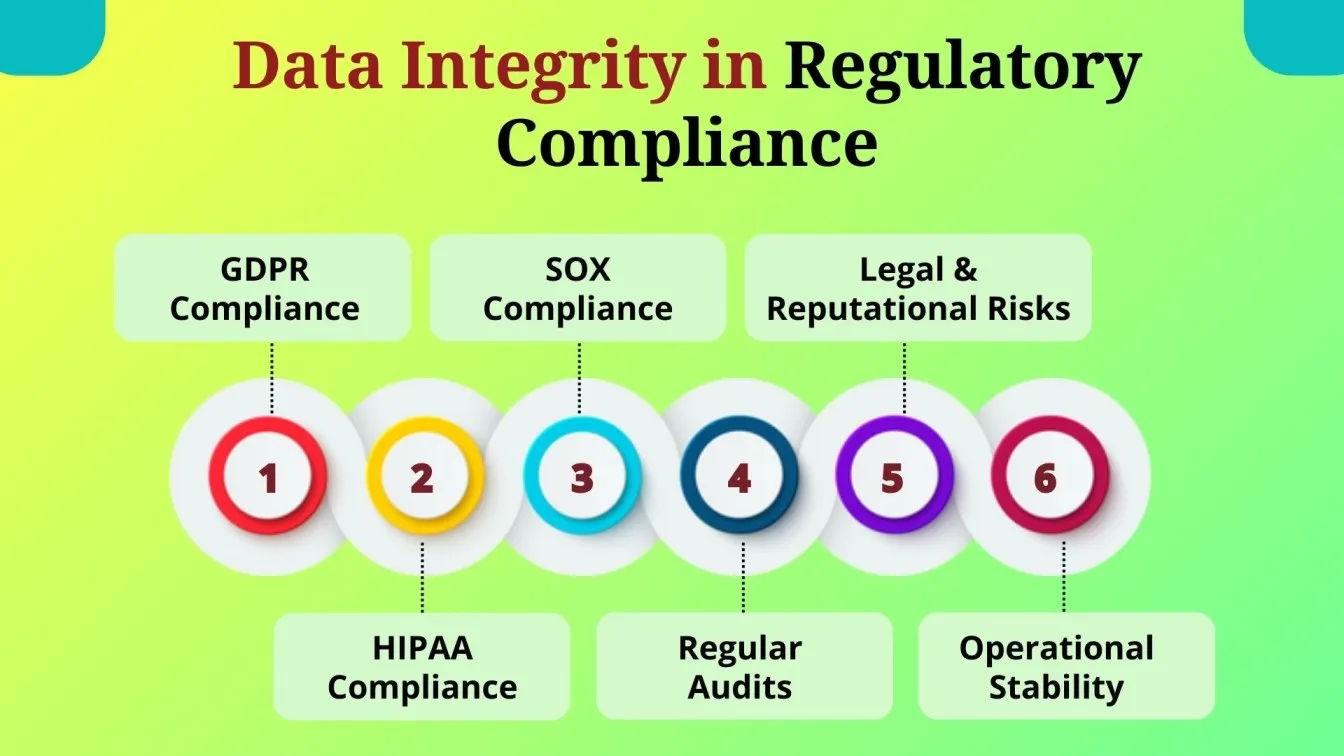

Data Integrity in Regulatory Compliance

Data integrity plays a crucial role in ensuring regulatory compliance across various industries. Organizations must maintain accurate, consistent, and reliable data to meet the requirements of regulations such as the GDPR (General Data Protection Regulation), HIPAA (Health Insurance Portability and Accountability Act), and SOX (Sarbanes-Oxley Act). Compliance with these regulations often demands robust data integrity measures to ensure the protection, privacy, and accurate reporting of sensitive information.

For example, healthcare organizations must ensure that patient data is accurate and unaltered to meet HIPAA requirements, while financial institutions need to ensure their records are consistent and correct to comply with SOX and GDPR. Regular audits and data integrity testing are often required to confirm adherence to these regulations. Failure to maintain proper data integrity can result in legal penalties, fines, and damage to an organization’s reputation. Therefore, implementing stringent data integrity practices is not only a legal obligation but also critical for maintaining business trust and operational stability.

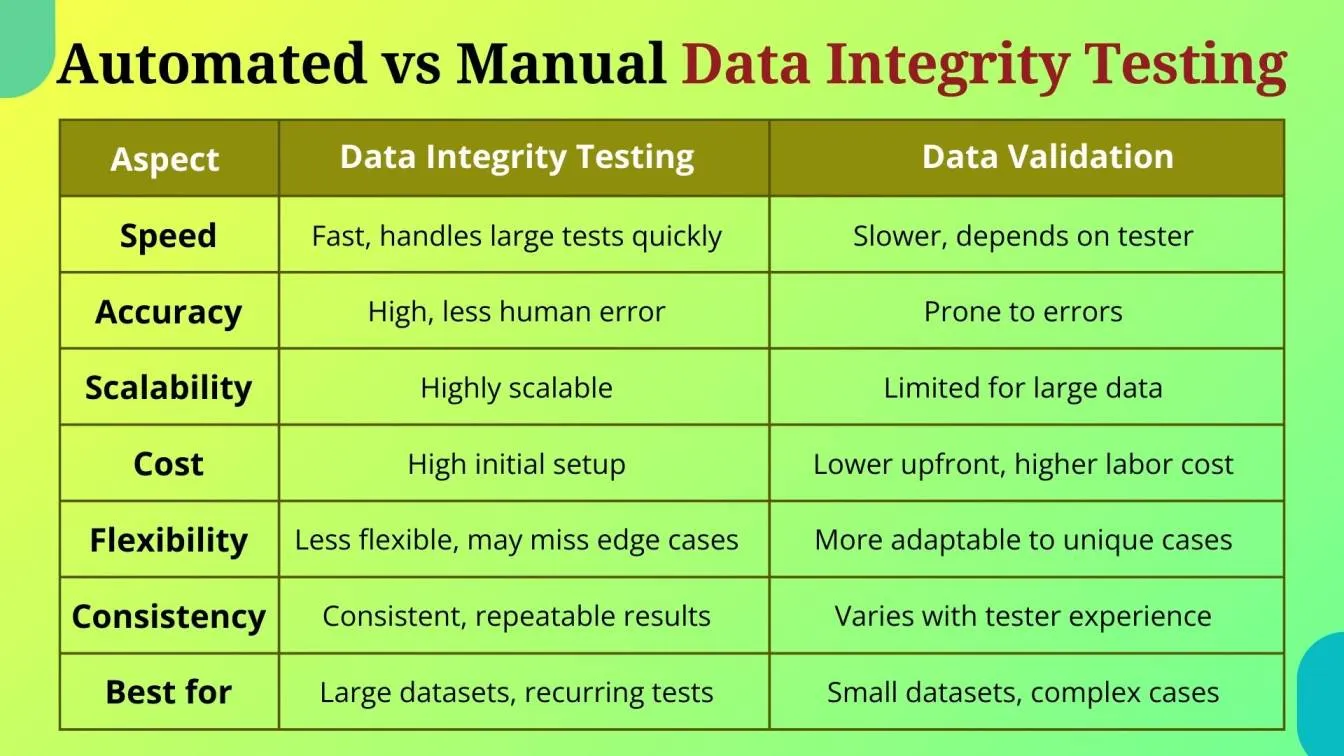

Automated vs. Manual Data Integrity Testing

When choosing between automated and manual data integrity testing, organizations need to consider factors such as speed, accuracy, scalability, and resource availability. Each method has its own advantages and limitations.

Automated testing is more efficient and effective for large-scale data integrity checks, whereas manual testing offers flexibility and is better suited to specific, complex tasks. The best solution often combines both methods to ensure comprehensive data integrity validation.

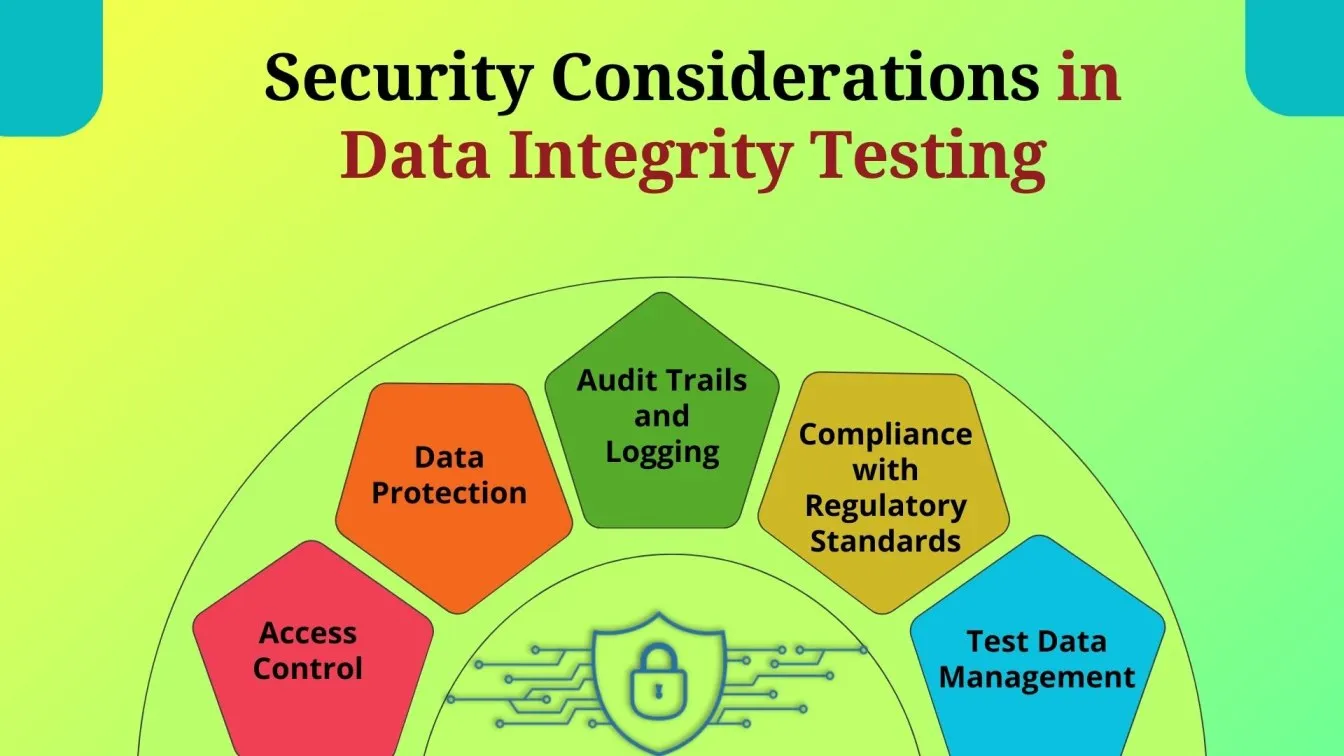

Security Considerations in Data Integrity Testing

Ensuring security during data integrity testing is essential to protect sensitive information from breaches, unauthorized access, and unauthorized modifications. Effective security measures not only safeguard data but also help organizations comply with regulatory requirements and maintain customer trust.

Here are key considerations:

- Access Control: Restrict access to testing environments and data only to authorized personnel to prevent unauthorized data access. Implement strong authentication methods and role-based permissions.

- Data Protection: Use encryption for data at rest and in transit to safeguard sensitive information from interception or theft.

- Audit Trails and Logging: Maintain detailed audit trails to track access and modifications during testing. This ensures accountability and aids in identifying any security breaches.

- Compliance with Regulatory Standards: Adhere to regulatory requirements such as GDPR, HIPAA, and SOX to ensure data security and privacy compliance.

- Test Data Management: Utilize anonymized or synthetic data for testing purposes to avoid exposing real customer data.

By implementing these security practices, organizations can conduct data integrity testing safely and effectively, ensuring data remains accurate, reliable, and compliant with regulatory standards.
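For the Test Data Management practice, one common approach is deterministic pseudonymization: replace direct identifiers with a keyed hash so that the same customer always maps to the same token, which preserves joins and uniqueness checks across tables without exposing the real value. This sketch uses Python's hmac module; the field names and key handling are assumptions. Keyed hashing is pseudonymization rather than full anonymization, so the key must be kept outside the test environment.

```python
import hashlib
import hmac

# Hypothetical secret; in practice load it from a secrets manager, never from test data.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

SENSITIVE_FIELDS = {"email", "name"}  # assumed identifying columns


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map a value to a stable token with HMAC-SHA256 (same input gives same token)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]  # truncated for readability


def mask_record(record: dict) -> dict:
    """Copy a record, replacing sensitive fields and keeping the rest unchanged."""
    return {
        field: pseudonymize(str(v)) if field in SENSITIVE_FIELDS and v is not None else v
        for field, v in record.items()
    }


customers = [
    {"customer_id": 1, "name": "Ada Lovelace", "email": "ada@example.com", "country": "UK"},
    {"customer_id": 2, "name": "Grace Hopper", "email": "grace@example.com", "country": "US"},
]
masked = [mask_record(c) for c in customers]
print(masked)
```

Using a keyed HMAC instead of a plain hash matters here: without the key, an attacker cannot rebuild the mapping simply by hashing a list of known email addresses.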

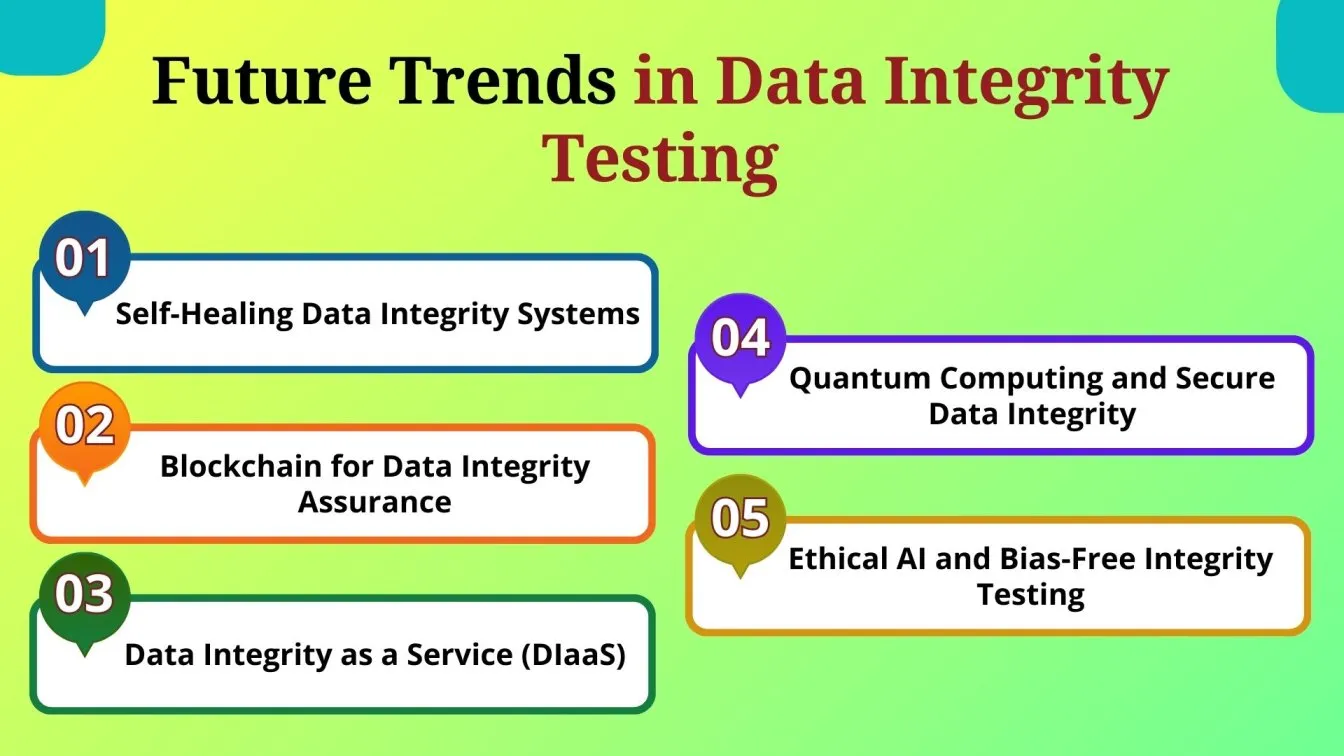

Future Trends in Data Integrity Testing

With the rapid expansion of data and evolving technology landscapes, data integrity testing is shifting towards more intelligent, adaptive, and proactive approaches. Here are some key emerging trends shaping its future:

1. Self-Healing Data Integrity Systems

- AI-driven systems will automatically detect, correct, and prevent data integrity issues without manual intervention.

- Advanced machine learning models will predict anomalies and trigger corrective actions in real time.

2. Blockchain for Data Integrity Assurance

- Decentralized and tamper-proof ledgers will enhance auditability and transparency in data transactions.

- Smart contracts will enforce integrity rules, ensuring data accuracy across distributed systems.

3. Data Integrity as a Service (DIaaS)

- Organizations will adopt third-party cloud-based integrity services for scalable, on-demand testing solutions.

- DIaaS platforms will offer real-time monitoring, automated validation, and compliance tracking.

4. Quantum Computing and Secure Data Integrity

- Quantum encryption techniques could strengthen data security and help prevent breaches that affect integrity.

- High-speed quantum processing may eventually accelerate validation of massive datasets.

5. Ethical AI and Bias-Free Integrity Testing

- AI-driven integrity tests will be designed to identify and eliminate bias in data sets, ensuring fair and ethical AI decision-making.

- New regulations will enforce responsible AI governance, influencing integrity validation methods.

These trends signal a paradigm shift towards autonomous, decentralized, and next-generation data integrity solutions, ensuring trustworthy and resilient data ecosystems.

Conclusion: Ensuring Data Integrity for Reliable Systems

Effective data integrity testing is essential for maintaining accurate, consistent, and reliable data. Using quality tools and adhering to integrity standards keeps data free from corruption, while regular range checks and statistical methods help confirm that data accurately reflects the real-world entities it describes. Enforcing entity integrity and domain integrity constraints prevents discrepancies, and regular testing ensures compliance with data governance policies.

Understanding what data reliability means, and how reliability relates to validity, supports precise decision-making. A clear grasp of entity integrity (every record uniquely identifiable) and domain integrity (every value valid for its field) helps prevent data corruption.

As data systems grow in complexity, AI-driven integrity testing can help businesses maintain consistency, reliability, and quality assurance. Businesses that prioritize data reliability and rigorous testing frameworks ensure long-term data integrity and trustworthiness.

People Also Ask:

What is the difference between data integrity and data security?

Data integrity ensures that data is accurate, consistent, and reliable, while data security focuses on protecting data from unauthorized access, breaches, and alterations.

How often should data integrity testing be performed?

Data integrity testing should be performed regularly, especially after system updates, data migrations, or changes in business processes to ensure continuous data quality and accuracy.

Can data integrity issues impact system performance?

Yes, data integrity issues can lead to system inefficiencies, slower processing speeds, and errors, ultimately affecting the performance and reliability of applications.

What industries require strict data integrity compliance?

Industries such as healthcare, finance, manufacturing, and pharmaceuticals require strict data integrity compliance to meet regulatory standards and ensure operational accuracy.

Is data integrity testing necessary for small businesses?

Yes, even small businesses should perform data integrity testing to ensure reliable, accurate data for decision-making, customer satisfaction, and regulatory compliance.
