Why do even well-tested systems fail in production, and how can end-to-end testing bridge the gap?

End-to-End (E2E) testing is a key mechanism for verifying that a software application and all of its components work properly together. As modern software systems grow more complex, developers and testers struggle with issues such as complex environments 🔧, flaky tests, and dependency management. Mastering these challenges is crucial for delivering quality software that meets user specifications.

Throughout this blog, we will examine the typical end-to-end testing 🔎 problems and offer practical software testing solutions. This article will assist you in improving software quality and streamlining your testing process, regardless of whether you're new to E2E testing 🐣 or want to improve your software testing methodology.

Here's what's coming your way: 📖

- What End-to-end testing is and how it ensures the entire software application works as expected.

- The Importance of E2E testing in validating user interactions and ensuring software reliability.

- The Common Challenges in E2E testing, including various obstacles that can affect test execution, accuracy, and overall efficiency.

- Effective Strategies to tackle challenges and ensure robust E2E test execution.

- Best Practices for enhancing your E2E testing process and boosting efficiency.

What is End-to-End Testing?

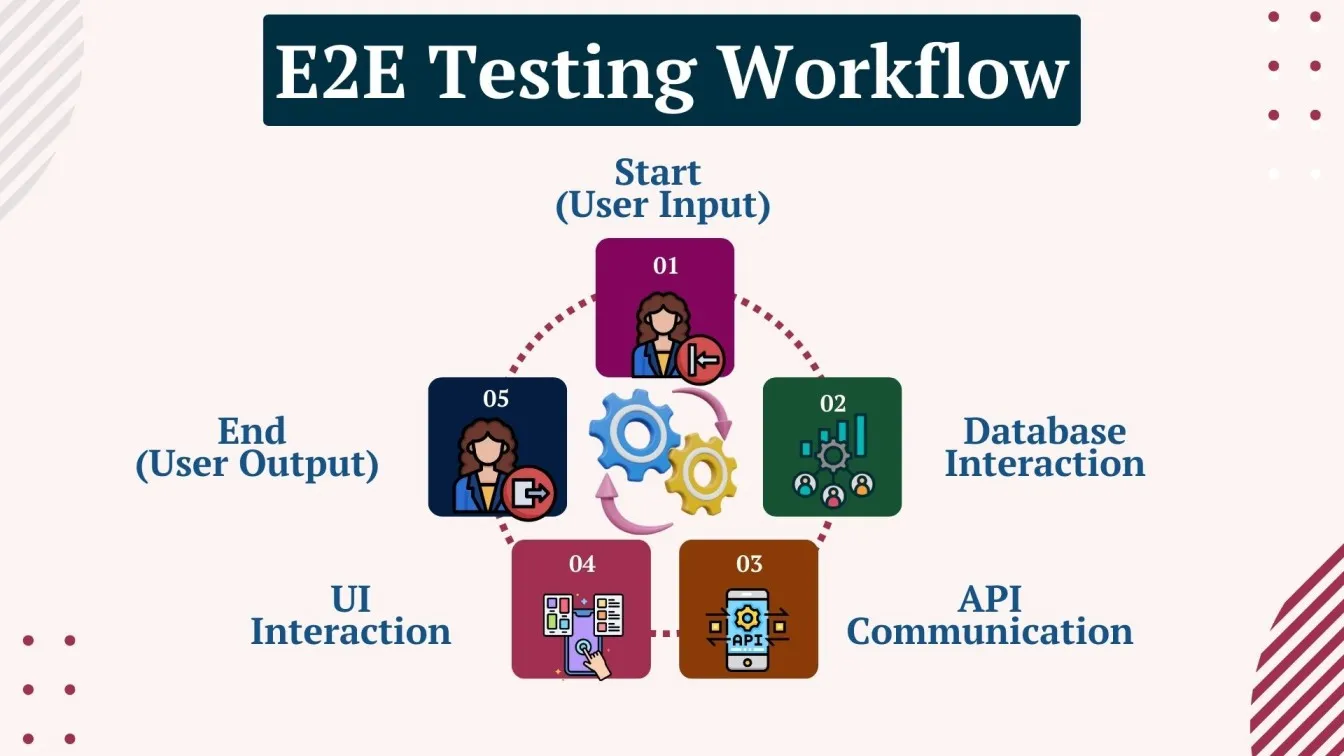

End-to-End (E2E) testing is a type of software testing that analyzes the complete application from beginning to end to make sure all the parts work together as intended. By simulating real user scenarios, E2E testing validates the integration of the system's components, including databases, user interfaces, APIs, and other services, within a software environment 🖥️.

This testing methodology uncovers critical problems and bottlenecks that could affect the user experience and overall functionality of the product. By testing the complete system, E2E testing helps ensure a smooth, reliable flow of data and actions through the application 🔄.

Importance of End-to-End Testing in Software Testing

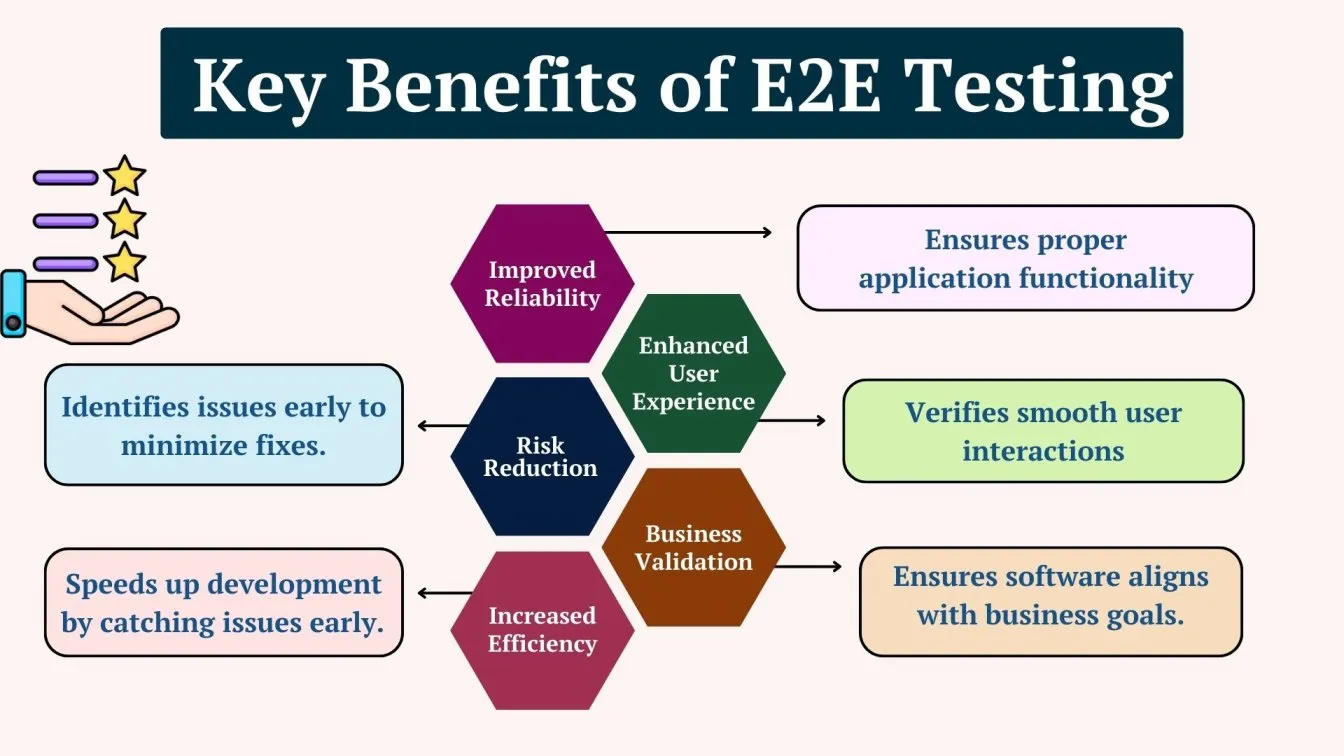

End-to-End Testing (E2E) is essential in ensuring that the entire software system functions smoothly, from user input to final output. 📤 It ensures that the entire workflow operates as intended by testing the interactions between various components, including databases, user interfaces, APIs, and external services.

Within the testing pyramid, E2E testing complements other software testing types such as unit and integration tests, providing a comprehensive approach that covers all aspects of the application. This complete view helps in testing the entire process 🔄, ensuring high-quality software and user satisfaction.

By mimicking actual user scenarios, E2E testing helps detect important integration problems that might not be apparent in unit or integration testing. It checks that every component of the system functions as part of a whole, reducing the possibility of unforeseen malfunctions and improving user satisfaction ✨.

Furthermore, E2E testing is essential for validating the business logic and confirming the software's dependability in real-world scenarios. It helps ensure that the program not only satisfies functional specifications but also offers end users a smooth and simple experience 🚀.

Common Challenges in End-to-End Testing

End-to-end (E2E) testing is critical for verifying that software applications 💻 perform as intended across all components, but it has its own set of issues. As software systems become more complicated, it becomes more difficult to verify that everything functions properly, including the user interface, APIs, databases, and third-party services.

These obstacles can have an impact on the effectiveness and efficiency of your testing operations, resulting in delays, errors, and reliability issues.

In this section, we'll look at some of the most typical challenges that E2E testers 👨‍💻 face, such as test environment difficulties, data consistency, and dependencies. Addressing these problems is critical to increasing the accuracy and general performance of your testing operations, resulting in seamless and trustworthy software releases.

Complex Test Environments in Modern Software Testing

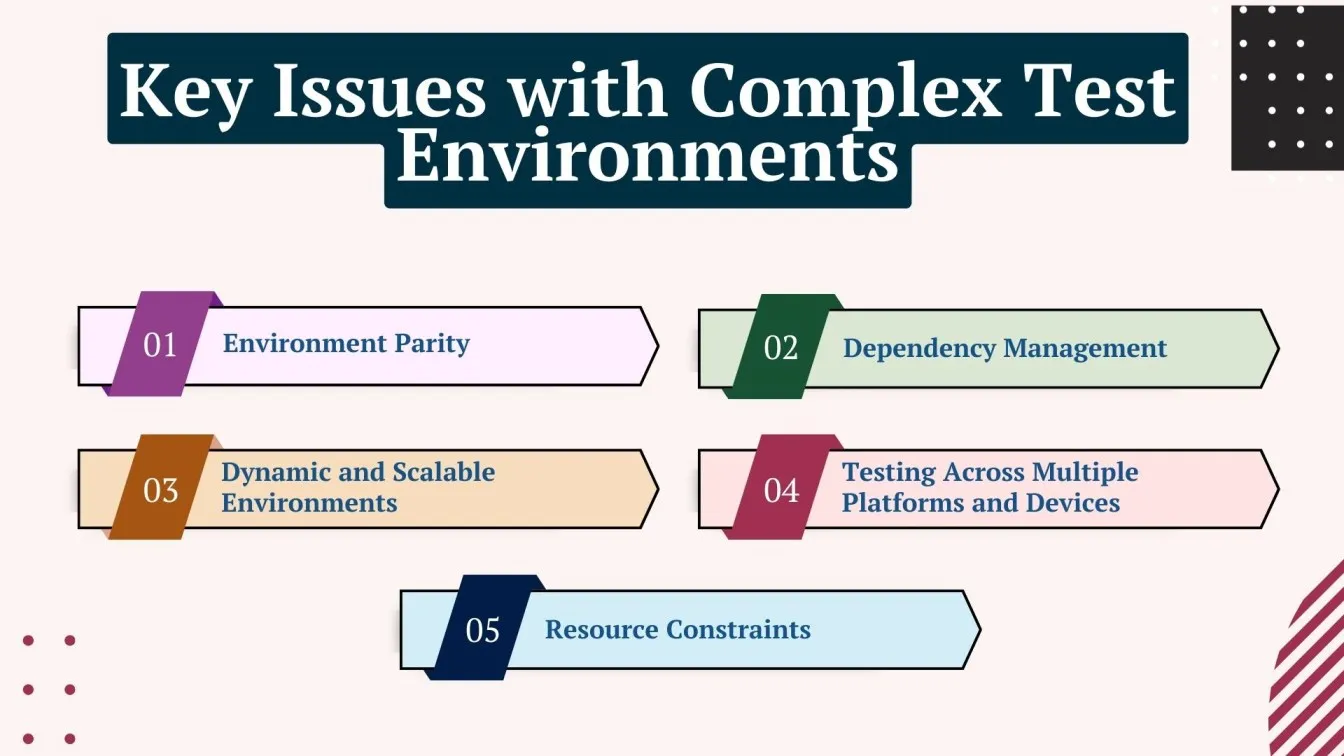

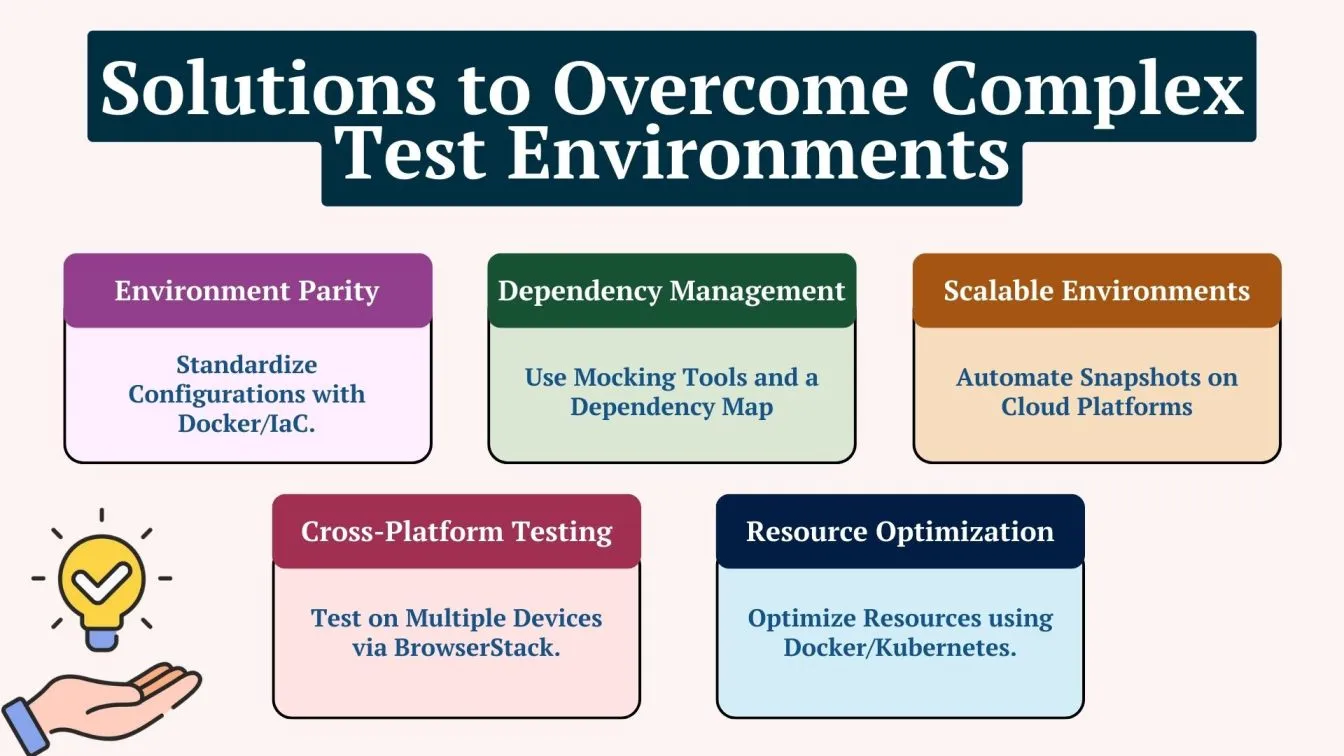

Complex test environments are a significant difficulty in modern software testing because they involve the interaction of numerous components such as front-end interfaces, back-end servers, APIs, databases, and cloud services.

These pieces may be hosted on multiple platforms and regions, making seamless integration challenging to achieve. Managing these dynamic, multilayered settings necessitates precise configuration and upkeep.

- Environment Parity: Consistency across development, staging, and production environments is crucial for obtaining accurate test results. Differences in settings can result in false positives or missed issues.

- Dependency Management: Modern applications rely on interrelated components, making it difficult to manage dependencies effectively. Misconfigurations can lead to test failures and longer setup times.

- Dynamic and Scalable Environments: Dynamic scaling in cloud-based systems creates instability, making it difficult to maintain consistent test environments. This can lead to inconsistent test results and unexpected failures.

- Testing Across Multiple Platforms and Devices: Testing across several platforms and devices complicates matters because each has its own set of setups and behaviors. This can cause platform-specific difficulties and inconsistent software performance.

- Resource Constraints: Managing sophisticated test environments requires substantial resources, which can strain available infrastructure. This raises the cost and time required to maintain effective test environments.
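One lightweight way to watch for environment parity problems is to diff the settings of two environments before a test run. The sketch below is illustrative only: the setting names, values, and the `config_drift` helper are hypothetical, and it assumes each environment's configuration can be loaded into a flat dictionary.

```python
from typing import Any

MISSING = "<missing>"  # marker for a setting that one environment lacks


def config_drift(
    reference: dict[str, Any],
    candidate: dict[str, Any],
    ignore: frozenset[str] = frozenset(),
) -> dict[str, tuple[Any, Any]]:
    """Return {setting: (reference_value, candidate_value)} for every mismatch."""
    drift = {}
    for key in sorted(set(reference) | set(candidate)):
        if key in ignore:
            continue  # settings that are expected to differ, such as URLs
        ref_value = reference.get(key, MISSING)
        cand_value = candidate.get(key, MISSING)
        if ref_value != cand_value:
            drift[key] = (ref_value, cand_value)
    return drift


# Hypothetical settings for two environments.
production = {
    "db_engine": "postgres-15",
    "cache_ttl_s": 300,
    "feature_new_checkout": True,
    "base_url": "https://prod.example.test",
}
staging = {
    "db_engine": "postgres-14",
    "cache_ttl_s": 300,
    "base_url": "https://staging.example.test",
}

drift = config_drift(production, staging, ignore=frozenset({"base_url"}))
for key, (prod_value, staging_value) in drift.items():
    print(f"{key}: production={prod_value!r} staging={staging_value!r}")
```

Running a check like this as the first step of an E2E job makes drift visible before it shows up as a confusing test failure.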

Managing Test Data Consistency, Privacy, and Volume Issues

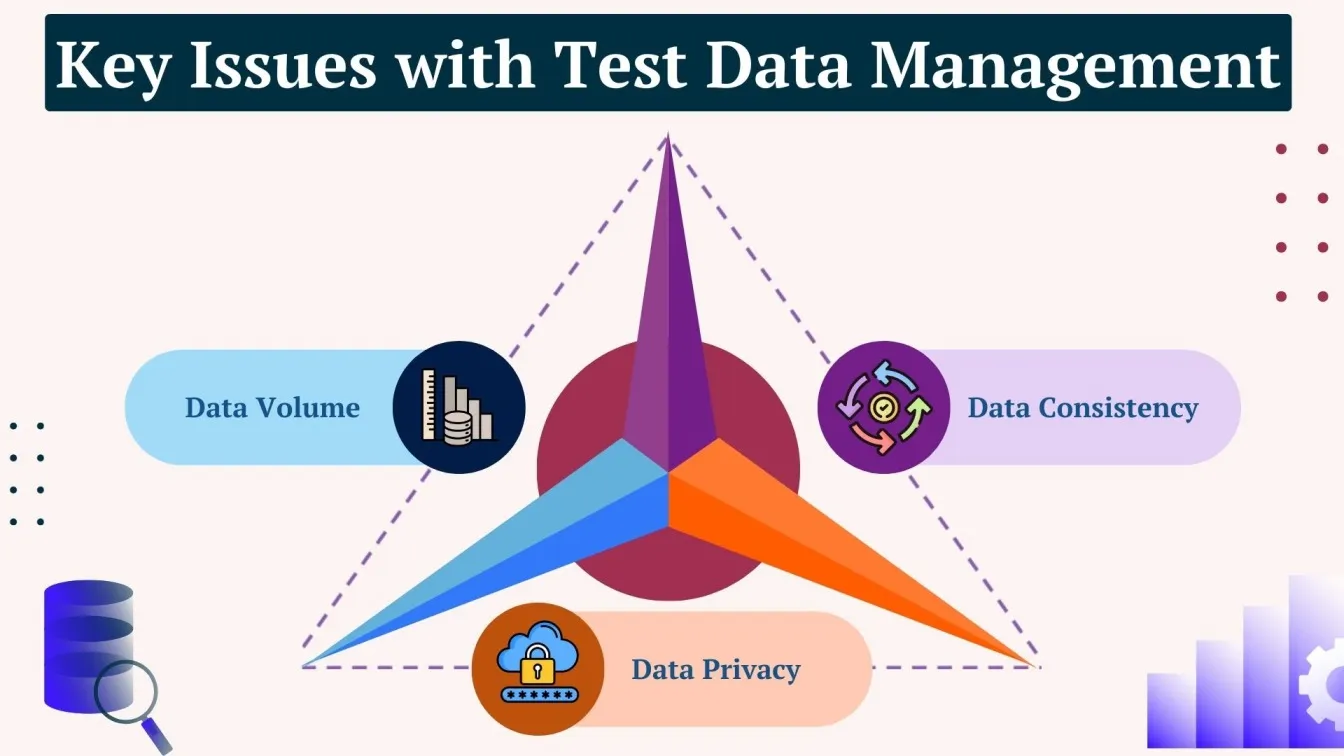

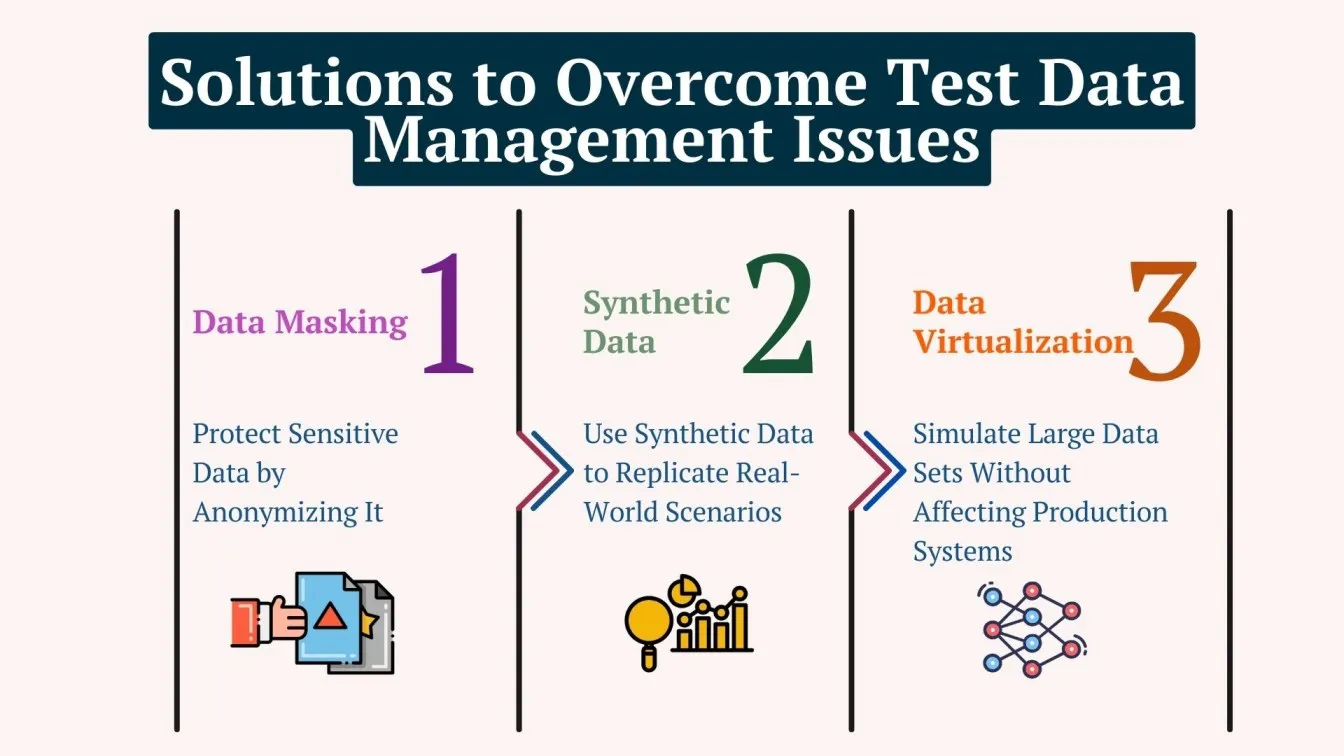

End-to-end (E2E) testing depends heavily on test data, which can be difficult to manage. Issues such as assuring consistency, protecting privacy, and dealing with vast amounts of data can lead to incorrect test results and slow down the testing process. It is critical to solve these issues adequately to ensure accuracy in testing.

- Data Consistency: Test data must accurately reflect real-world situations, necessitating synchronization across environments. Inconsistent data might result in false positives and overlooked faults.

- Data Privacy: With the development of data protection rules such as GDPR, test environments must safeguard sensitive information. Failing to do so can result in legal and reputational issues.

- Data Volume: As applications grow, so does the volume of data used in testing. Large data sets become harder to handle efficiently and can slow test execution.
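To illustrate the privacy and consistency points together, here is a minimal sketch of deterministic pseudonymization: sensitive fields are replaced with keyed hashes, so the same input always maps to the same token and related records still line up. The field names, the key, and both helper functions are assumptions made for this example, and whether this approach satisfies a particular regulation needs to be assessed separately.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real key would live in a secret store.
SECRET_KEY = b"test-data-masking-key"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Map a sensitive value to a stable 12-character token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:12]


def mask_record(record: dict, sensitive_fields: set) -> dict:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    return {
        field: pseudonymize(str(value)) if field in sensitive_fields else value
        for field, value in record.items()
    }


customer = {"email": "jane@example.test", "name": "Jane Doe", "order_total": 49.99}
order = {"customer_email": "jane@example.test", "sku": "A-100"}

masked_customer = mask_record(customer, {"email", "name"})
masked_order = mask_record(order, {"customer_email"})

# The same email yields the same token, so the two records can still be joined.
print(masked_customer["email"] == masked_order["customer_email"])
```

Non-sensitive fields such as `order_total` pass through unchanged, which keeps the masked data useful for realistic scenarios.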

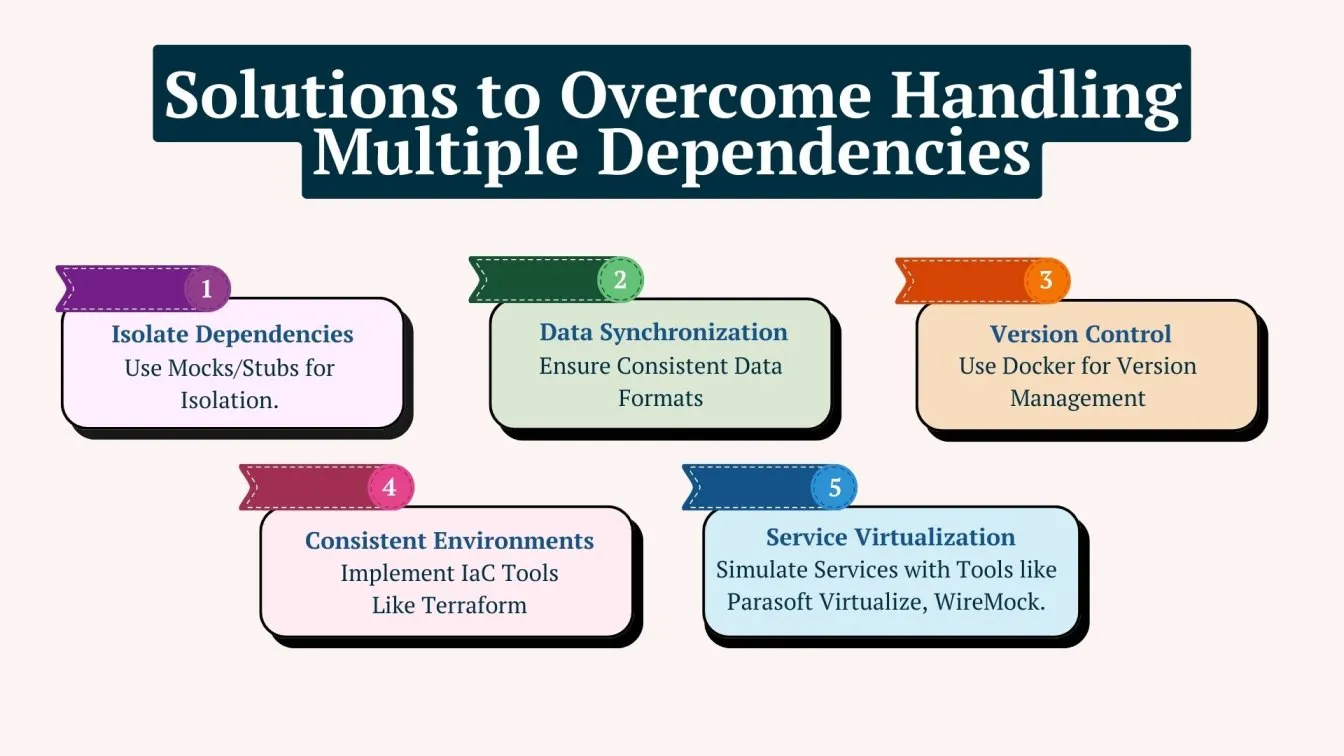

Handling Multiple Dependencies in Testing

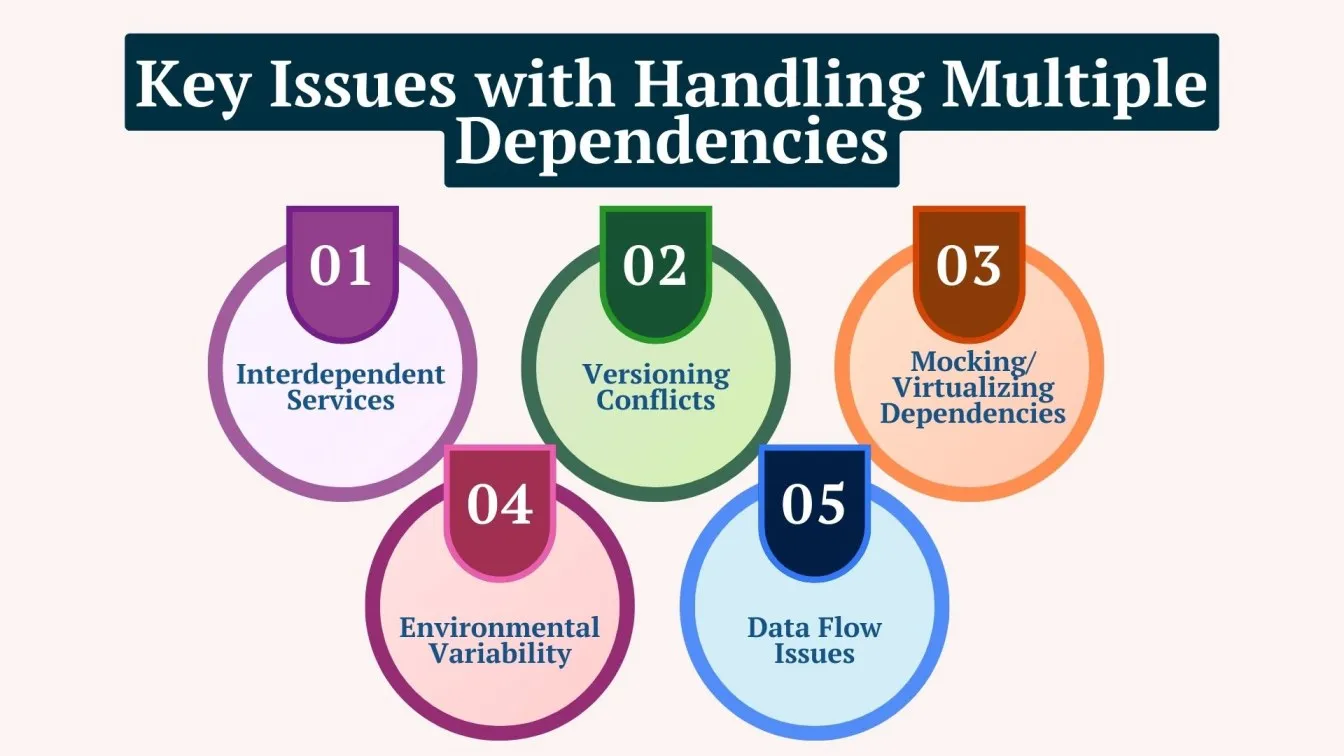

Modern software applications are built from components such as databases, APIs, external services, and microservices that rely on one another. These interconnected components complicate testing, as a single failure in one part can impact the entire system. Managing many dependencies efficiently is critical for producing accurate and reliable test results.

- Interdependent Services: Testing interconnected services can be difficult when one failure propagates throughout the system, making it harder to pinpoint and fix flaws.

- Versioning Conflicts: Different versions of a dependency might cause incompatibility, resulting in test failures or incorrect results.

- Mocking/Virtualizing Dependencies: Without accurate mocks or virtualized services, tests may not reflect real-world behavior, resulting in insufficient test coverage.

- Environmental Variability: Dependencies may behave differently across development, staging, and production settings, resulting in inconsistent outcomes.

- Data Flow Issues: If data does not flow correctly through dependent systems, tests may fail, even if the individual components are functioning normally.
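As a rough illustration of mocking a dependency, the sketch below replaces a payment gateway with a stand-in built from Python's standard `unittest.mock.Mock`. The `checkout` function, the `charge` method, and the response shape are all hypothetical; a mock is only as trustworthy as its resemblance to the real service's contract.

```python
from unittest.mock import Mock


def checkout(cart_total_cents: int, gateway) -> str:
    """Hypothetical checkout step: charge the card and report the order state."""
    response = gateway.charge(amount_cents=cart_total_cents)
    return "confirmed" if response["status"] == "approved" else "payment_failed"


# Stand-in for a gateway that approves the payment.
approved_gateway = Mock()
approved_gateway.charge.return_value = {"status": "approved"}

# Stand-in for a gateway that declines the payment.
declined_gateway = Mock()
declined_gateway.charge.return_value = {"status": "declined"}

approved_result = checkout(4999, approved_gateway)
declined_result = checkout(4999, declined_gateway)

# Verify the dependency was called exactly once with the expected amount.
approved_gateway.charge.assert_called_once_with(amount_cents=4999)
print(approved_result, declined_result)
```

Mocks like these keep a test stable when the real service is unavailable, but a smaller set of tests against the real dependency is still needed to catch contract changes.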

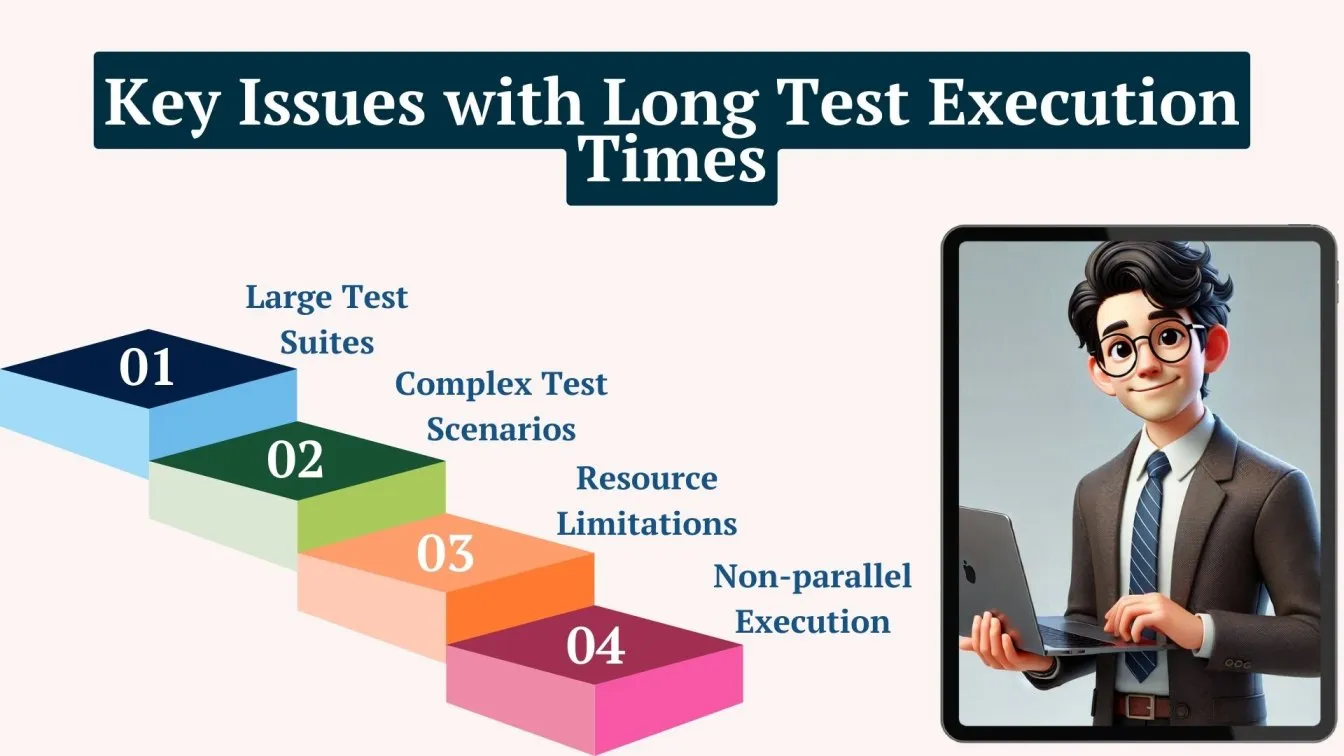

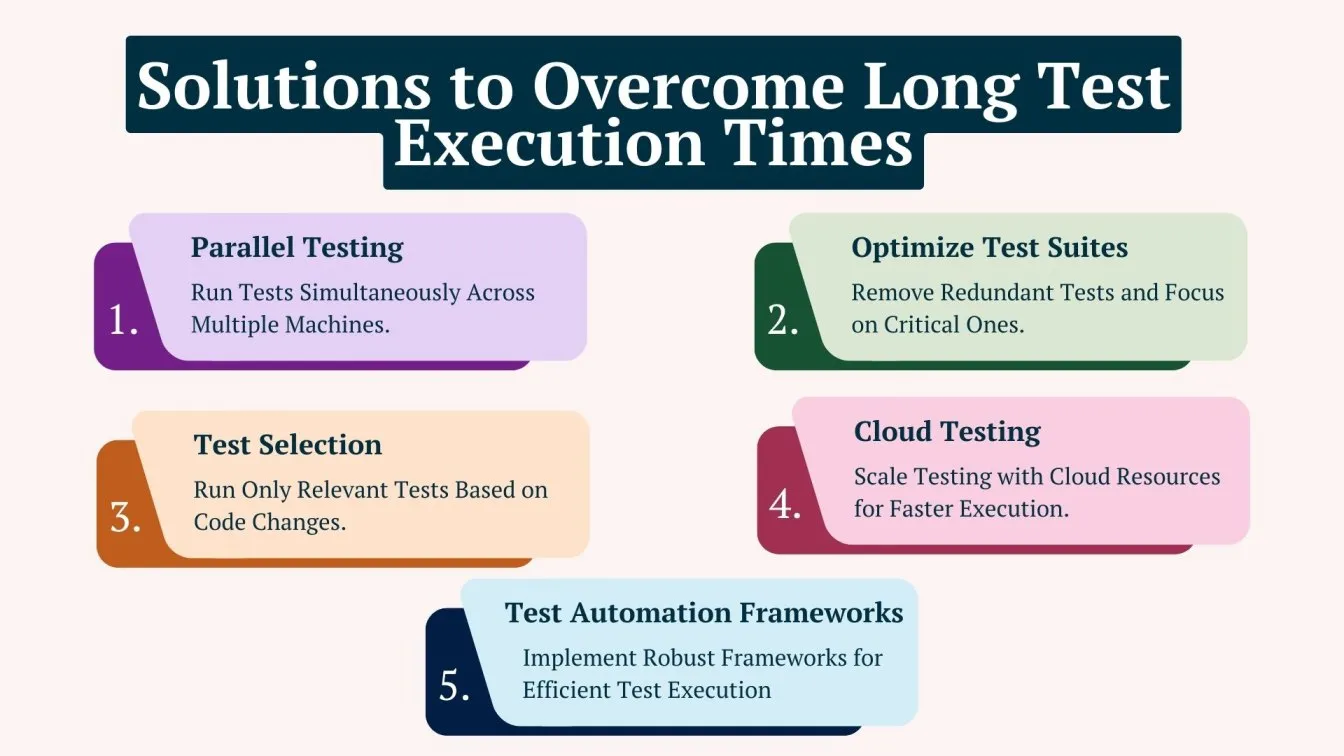

Long Test Execution Times That Slow Development Cycles

Long test execution times represent a significant bottleneck in the software testing lifecycle. They can slow down development cycles, cause delays in releases, and reduce overall productivity.

This difficulty becomes more apparent when tests need to cover big systems or perform exhaustive testing on complex functions, which frequently causes considerable delays in continuous integration/continuous delivery (CI/CD) pipelines.

- Large Test Suites: Running a large number of tests increases overall execution time, especially if they are not optimized.

- Complex Test Scenarios: Complex test scenarios and interactions between components may necessitate lengthy execution times to validate.

- Resource Constraints: Insufficient resources (e.g., hardware or cloud infrastructure) might slow down the testing process.

- Non-parallel Execution: Tests that run sequentially rather than concurrently increase the overall test execution time.
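The effect of parallel execution can be sketched with Python's standard `concurrent.futures` module. The scenario names and the `run_scenario` function are placeholders, and the sketch assumes the scenarios are independent of one another, with no shared accounts or data. Tests that share state need isolation before they can safely run side by side.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_scenario(name: str) -> tuple:
    """Placeholder for one E2E scenario; the sleep stands in for browser and network waits."""
    time.sleep(0.1)
    return name, True


SCENARIOS = ["login", "search", "checkout", "profile_update"]


def run_all(scenarios, max_workers: int = 4) -> dict:
    """Run independent scenarios concurrently and collect pass/fail per scenario."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run_scenario, scenarios))


start = time.perf_counter()
results = run_all(SCENARIOS)
elapsed = time.perf_counter() - start
print(results, f"finished in {elapsed:.2f}s")
```

With four workers, the four scenarios should finish in roughly the time of one rather than the sum of all four. Most E2E frameworks offer their own parallel or sharded execution, which is usually preferable to a hand-rolled runner.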

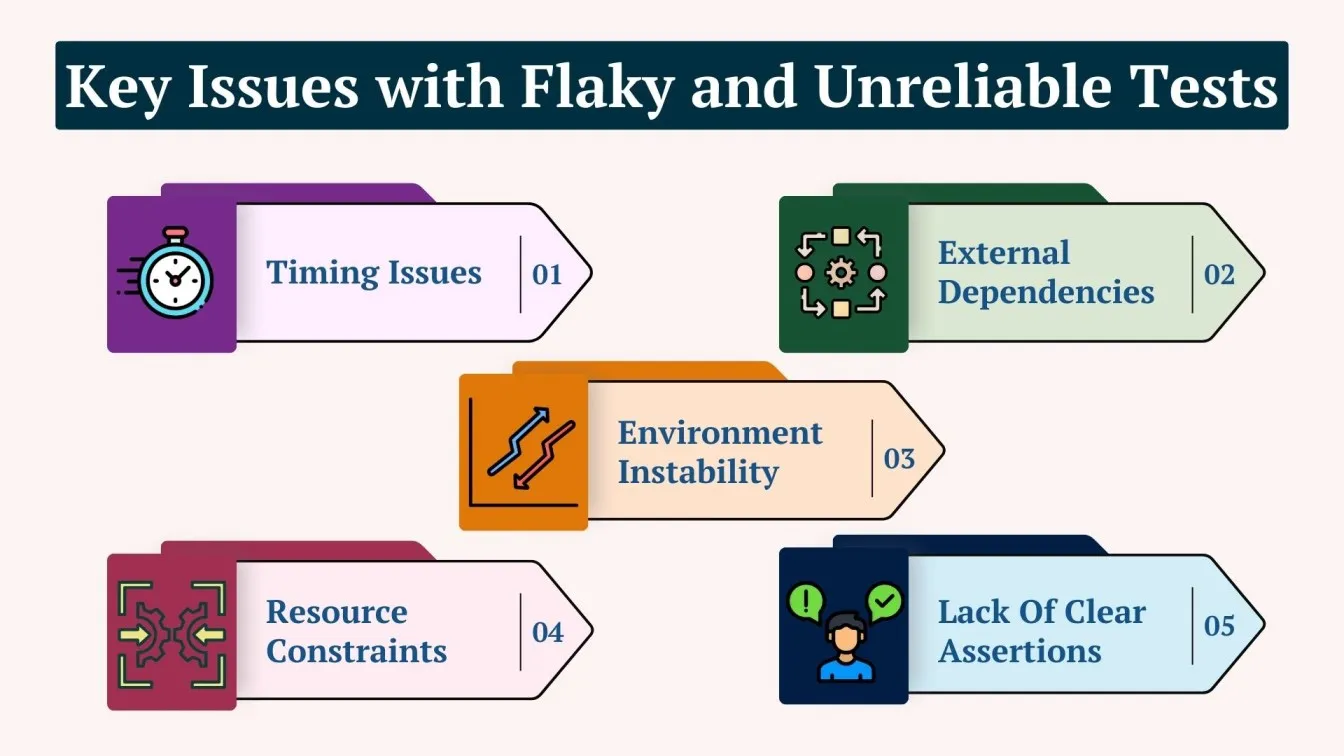

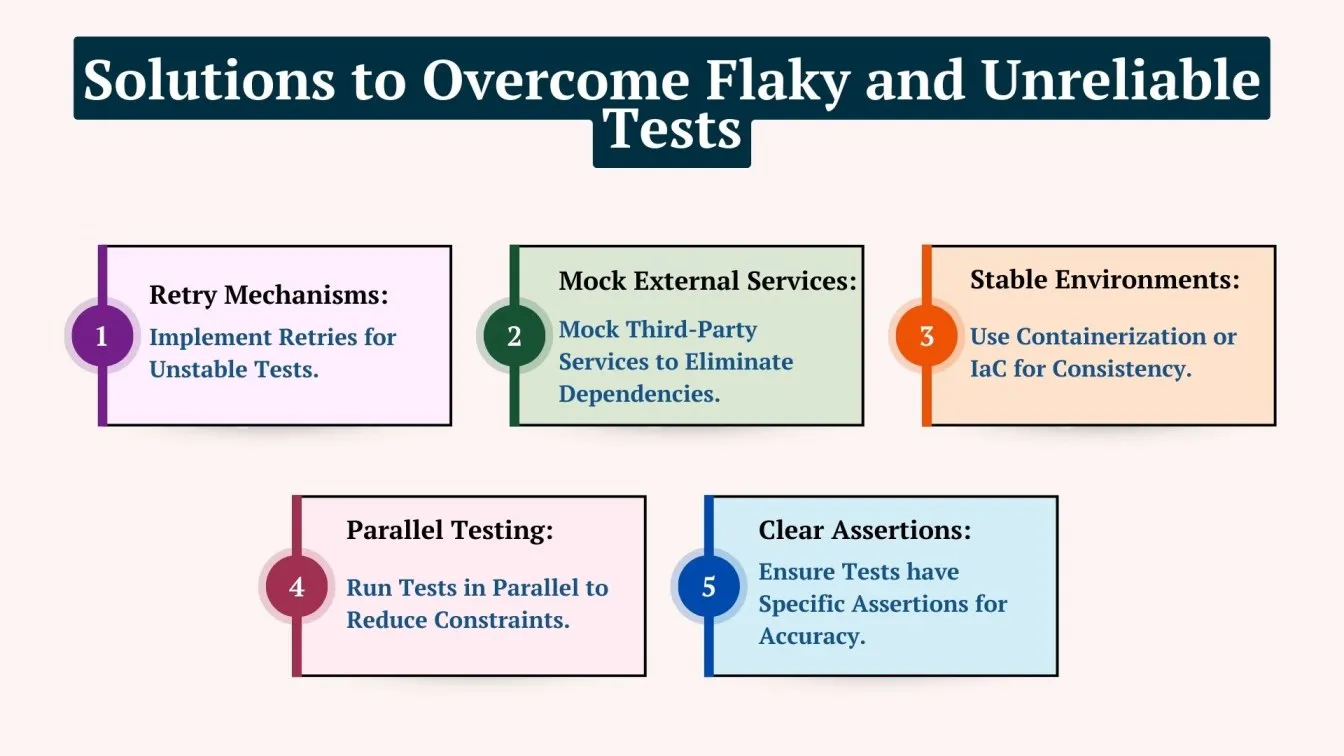

Addressing Flaky and Unreliable Tests That Undermine Confidence

Flaky and unreliable tests pose a substantial obstacle to the end-to-end testing process. These tests pass or fail inconsistently, making it difficult to trust the results. They can occur owing to timing issues, external dependencies, or environmental instability. Such inconsistencies weaken testers' trust, impede the development cycle, and make it difficult to identify serious concerns. Addressing these flaky tests is critical to ensuring the testing process's integrity and efficiency.

- Timing Issues: Race conditions or delays in asynchronous operations can make tests unreliable.

- External Dependencies: When tests are dependent on third-party services or APIs, any disruption in such services can cause tests to fail intermittently.

- Environment Instability: Fluctuations in test settings, such as system configurations or network difficulties, can lead to flaky tests.

- Resource Constraints: Limited hardware or network bandwidth might impair test performance, resulting in failures or sluggish execution.

- Lack of Clear Assertions: Tests without clear and sufficient assertions to validate expected outcomes may pass or fail unexpectedly.
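A common remedy for timing-related flakiness is to poll for a condition with a timeout instead of sleeping for a fixed duration. The `wait_until` helper below is a generic sketch, not the API of any particular framework, and the background timer merely simulates an asynchronous operation finishing later.

```python
import threading
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    timeout_s: float = 5.0,
    interval_s: float = 0.05,
) -> bool:
    """Poll `condition` until it returns True or the timeout elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


# Simulated asynchronous operation that completes after 0.2 seconds.
order_ready = threading.Event()
threading.Timer(0.2, order_ready.set).start()

became_ready = wait_until(order_ready.is_set, timeout_s=2.0)
never_ready = wait_until(lambda: False, timeout_s=0.2)
print(became_ready, never_ready)
```

The test proceeds as soon as the condition holds and fails with a clear timeout otherwise, which avoids both needless waiting and sleeps that are too short on a slow machine. Tools such as Cypress and Playwright provide built-in waiting that serves the same purpose.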

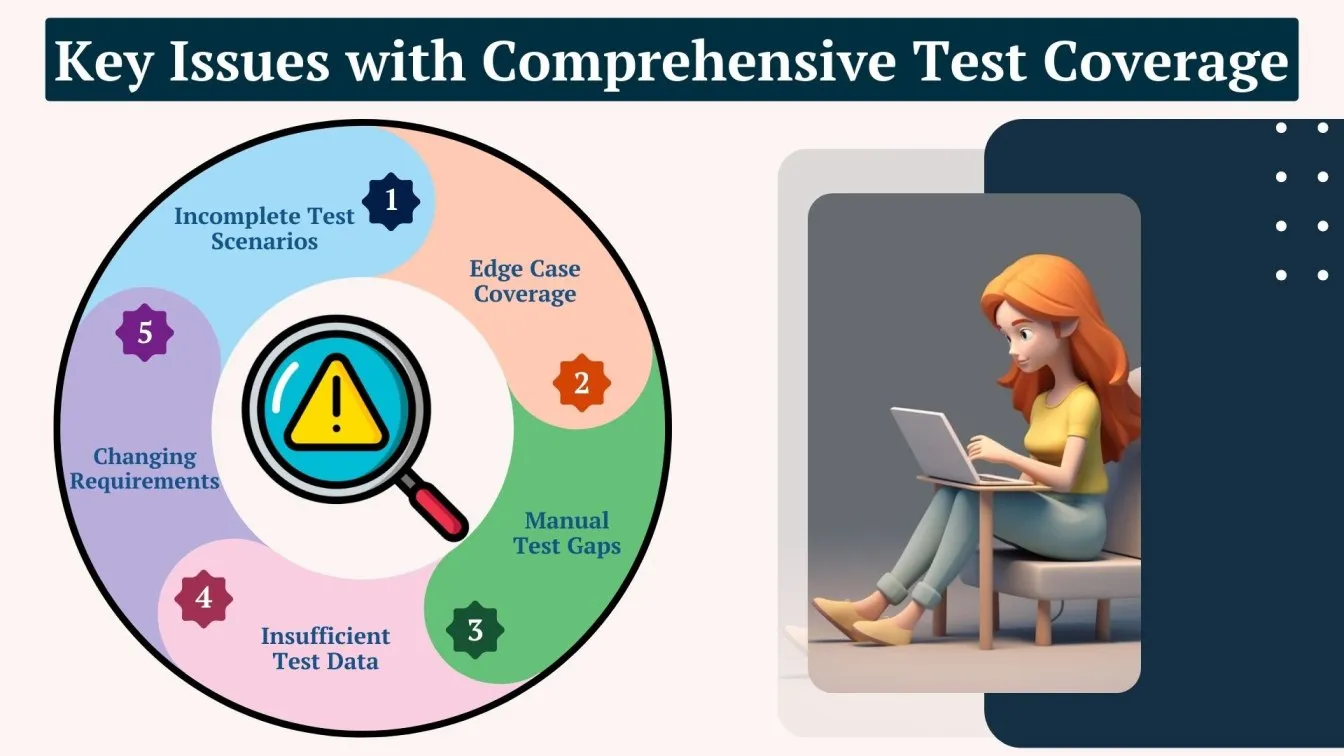

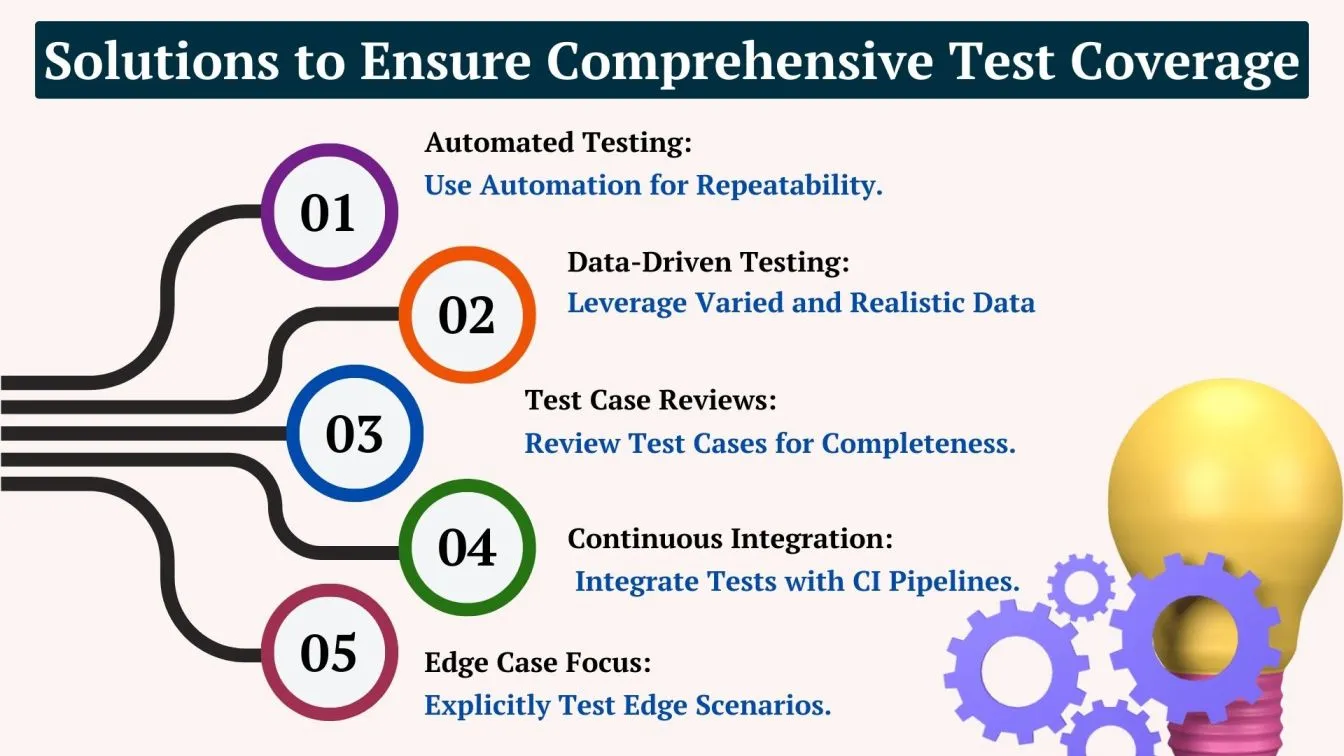

Ensuring Comprehensive Test Coverage for All Critical Scenarios

Comprehensive test coverage is required to verify that all significant components of a software program have been adequately tested. However, ensuring complete coverage across all scenarios, particularly complex workflows, edge cases, and unanticipated user behavior, can be difficult. This is especially critical for testing the software's general functionality, security, and performance across many contexts.

- Incomplete Test Scenarios: Critical workflows or user journeys may be missed during test design, resulting in insufficient coverage.

- Edge Case Coverage: Software behavior in edge cases, such as error handling or unusual traffic levels, may not be fully tested.

- Manual Test Gaps: Manual testing may lead to overlooked edge cases or human errors in test execution.

- Insufficient Test Data: If there isn't enough actual data, testing may fail to adequately cover real-world scenarios.

- Changing Requirements: As requirements change, test cases may not be updated, resulting in gaps in testing coverage.

Handling Integration Challenges with Third-Party Services and APIs

Integrating third-party services and APIs is an important aspect of modern software systems. However, it frequently introduces new obstacles, such as versioning conflicts, network failures, dependency management, and data inconsistencies, which can lead to testing issues. Overcoming these obstacles is critical to ensuring that integrations run well and do not negatively affect the application.

- Version Incompatibility: Conflicting versions of third-party services or APIs can introduce breaking changes, causing integration difficulties.

- Network Instability: Unstable network conditions might render tests unreliable, resulting in sporadic connectivity failures or timeout issues.

- Limited Service Access: Some APIs may be rate-limited or unavailable during testing, making it difficult to conduct consistent tests.

- Data Inconsistencies: Incorrect or inconsistent data from external services can have an impact on the validity of test results.

- Dependency Failures: Test execution can fail unexpectedly if external services go down or change.
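For transient problems such as rate limits or brief network failures, one option is to retry the call with exponential backoff. The sketch below is a minimal version under stated assumptions: `TransientError`, `call_with_retry`, and `flaky_lookup` are hypothetical names, and the `sleep` parameter is injectable only so the example can record delays instead of actually waiting.

```python
import time


class TransientError(Exception):
    """Hypothetical error type for failures that are worth retrying."""


def call_with_retry(operation, attempts: int = 4, base_delay_s: float = 0.05, sleep=time.sleep):
    """Call `operation`, retrying on TransientError and doubling the delay each time."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == attempts:
                raise  # out of attempts: surface the failure to the test
            sleep(base_delay_s * 2 ** (attempt - 1))


# Simulated third-party call that is rate-limited twice, then succeeds.
calls = {"count": 0}


def flaky_lookup():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("rate limited")
    return {"status": "ok"}


delays = []  # record requested delays instead of sleeping
result = call_with_retry(flaky_lookup, sleep=delays.append)
print(result, calls["count"], delays)
```

Retries are best limited to calls known to fail transiently. Applied broadly, they can hide real defects and lengthen test runs.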

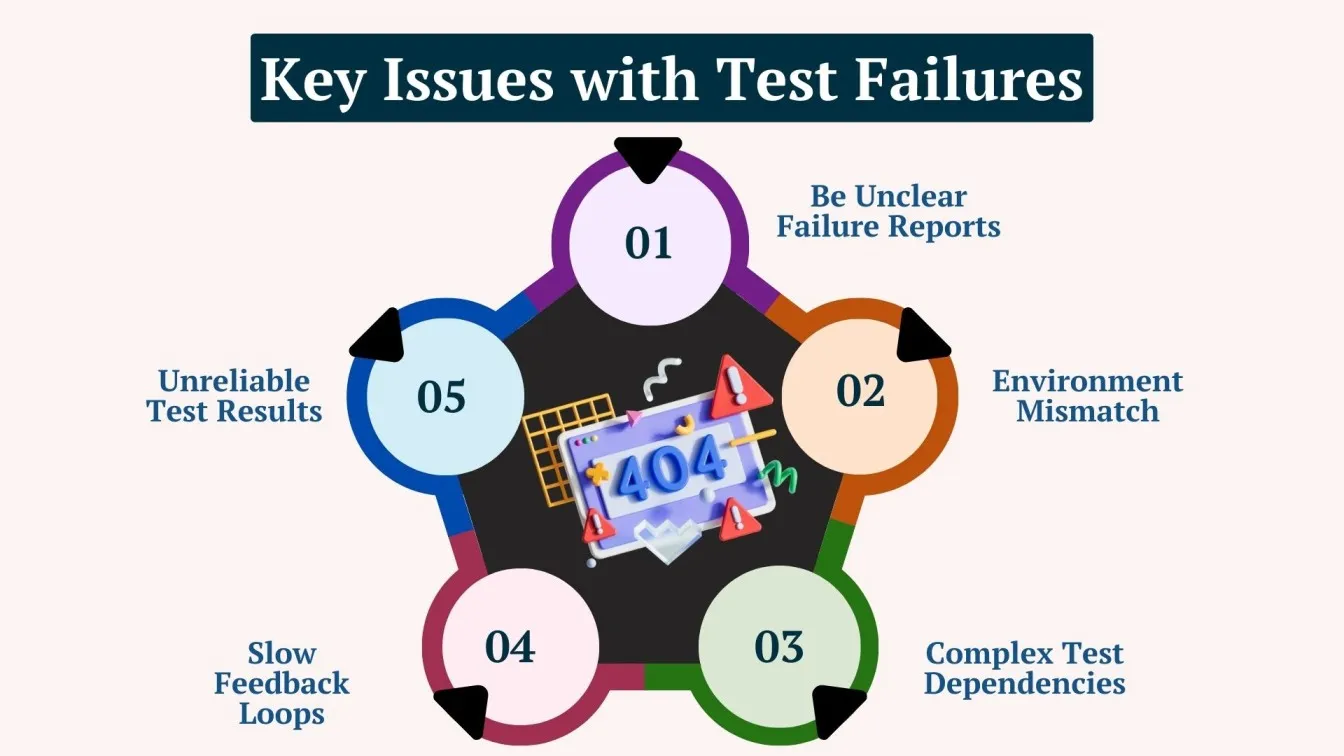

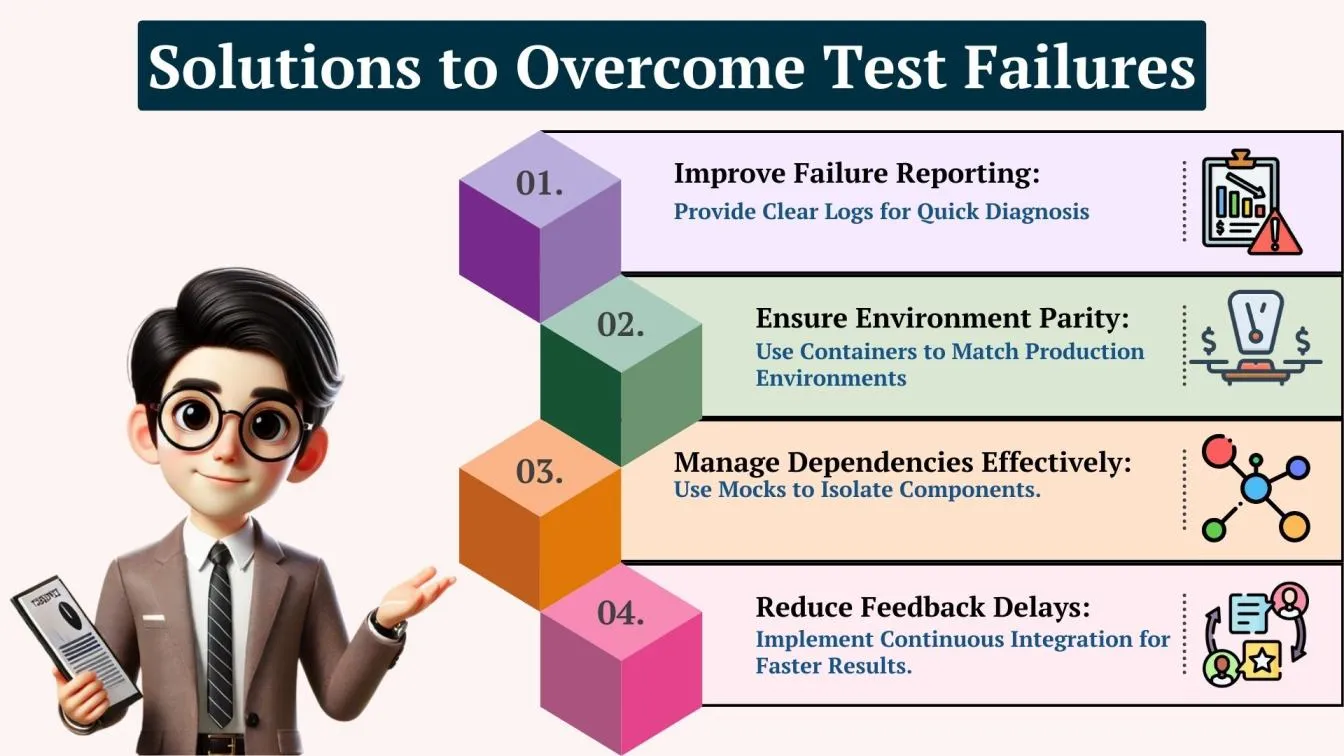

Managing Test Failures and Efficiently Debugging Root Causes

Test failures are a typical occurrence in software testing, but the challenge is to quickly identify and address their root causes. Without a structured approach to debugging, teams can spend too much time on failure analysis, resulting in delays and frustration. Teams can boost productivity and receive faster feedback on their testing efforts by successfully managing test failures.

- Unclear Failure Reports: The lack of thorough failure reports makes it difficult to pinpoint the actual problem, increasing the time spent debugging.

- Environment Mismatch: When test environments differ from production environments, anomalies and errors may arise that are only visible in certain configurations.

- Complex Test Dependencies: Tests with interdependent modules frequently fail because a change in one component disrupts others, complicating the root cause analysis.

- Slow Feedback Loops: Delays in obtaining feedback on failed tests impede timely issue resolution, extending the development process.

- Unreliable Test Results: Flaky tests that pass or fail intermittently confuse teams and waste precious debugging time.

Best Practices for Successful End-to-End Testing

End-to-End (E2E) testing ensures the entire application functions correctly by simulating real user behavior across different environments. 🌍 Following best practices enhances coverage, accuracy, and reliability, leading to high-quality software and an improved customer experience.

- Define Clear Testing Objectives: Focus on testing critical workflows and core functionality, ensuring that they align with user expectations and the entire application's purpose.

- Test Across Multiple Environments: Ensure tests are conducted across various operating systems, browsers, and devices, leveraging real devices to ensure compatibility in diverse testing environments.

- Automate Repetitive Tests: Use automation tools to handle repetitive unit testing and integration tests, boosting efficiency and ensuring consistent test results.

- Incorporate Continuous Testing: Integrate tests into the testing cycle via continuous testing tools, providing rapid feedback and ensuring alignment with software development timelines.

- Collaborate with Development Teams: Involve testing teams early, ensuring that they understand testing scenarios and potential issues, fostering collaboration for better test results.

- Maintain a Risk-Based Approach: Prioritize functional tests and tests for high-risk areas to ensure comprehensive coverage, improving both user satisfaction and customer experience.
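To make the risk-based approach concrete, here is a small sketch that scores scenarios by business impact multiplied by failure likelihood and picks the top ones for a time-boxed suite. The scenarios, the 1-to-5 scales, and the scoring formula are assumptions chosen for illustration; teams often weigh additional factors such as recent code changes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    business_impact: int      # hypothetical scale: 1 (minor) to 5 (critical)
    failure_likelihood: int   # hypothetical scale: 1 (rare) to 5 (frequent)

    @property
    def risk(self) -> int:
        """Simple risk score: impact multiplied by likelihood."""
        return self.business_impact * self.failure_likelihood


def prioritize(scenarios, budget: int) -> list:
    """Return the names of the `budget` highest-risk scenarios, riskiest first."""
    ranked = sorted(scenarios, key=lambda s: s.risk, reverse=True)
    return [s.name for s in ranked[:budget]]


SCENARIOS = [
    Scenario("newsletter_signup", business_impact=1, failure_likelihood=2),
    Scenario("checkout_payment", business_impact=5, failure_likelihood=4),
    Scenario("profile_avatar_upload", business_impact=2, failure_likelihood=3),
    Scenario("login", business_impact=5, failure_likelihood=2),
]

smoke_suite = prioritize(SCENARIOS, budget=2)
print(smoke_suite)
```

A ranking like this helps decide which scenarios run on every commit and which can wait for a nightly run.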

Final Thoughts 📝

End-to-End (E2E) testing is essential for ensuring the seamless functioning of an entire application. Overcoming common challenges such as complex test environments, test data consistency and privacy, multiple dependencies, long test execution times ⏳, and flaky tests is crucial for efficient and reliable testing.

Ensuring comprehensive test coverage ✅ for all critical scenarios, overcoming integration challenges with third-party services, improving collaboration across the entire team, and managing test failures effectively also play a vital role in maintaining quality.

By adopting best practices like continuous testing, utilizing software testing automation tools 🤖, and fostering collaboration, software teams can successfully address these challenges and deliver high-quality applications that meet user expectations and provide an exceptional customer experience 🌟.

People also asked

👉What are the examples of E2E testing?

Examples include testing a user login flow, online payment transactions, or the entire checkout process in an e-commerce app.

👉What tools are best suited for end-to-end testing?

Tools like Selenium, Cypress, and Playwright are commonly used for automating E2E testing across different browsers and platforms.

👉What metrics should I track to measure the success of end-to-end testing?

Track metrics such as test execution time, test pass rate, defect detection rate, and coverage of critical scenarios to measure the success of end-to-end testing.
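As a rough sketch of how such metrics might be computed, the example below summarizes a list of run records. The record format and the `summarize` helper are hypothetical, and it assumes all runs were made against the same code revision, so a test that both passed and failed is treated as flaky.

```python
from collections import defaultdict

# Hypothetical run records, e.g. exported from a CI system.
runs = [
    {"test": "login", "passed": True, "duration_s": 12.0},
    {"test": "login", "passed": True, "duration_s": 11.0},
    {"test": "checkout", "passed": False, "duration_s": 40.0},
    {"test": "checkout", "passed": True, "duration_s": 38.0},
    {"test": "search", "passed": False, "duration_s": 9.0},
    {"test": "search", "passed": False, "duration_s": 10.0},
]


def summarize(records) -> dict:
    """Compute pass rate, total duration, and tests with mixed outcomes."""
    outcomes = defaultdict(set)
    for record in records:
        outcomes[record["test"]].add(record["passed"])
    flaky = sorted(name for name, seen in outcomes.items() if len(seen) == 2)
    return {
        "pass_rate": sum(record["passed"] for record in records) / len(records),
        "total_duration_s": sum(record["duration_s"] for record in records),
        "flaky_tests": flaky,
    }


summary = summarize(runs)
print(summary)
```

Here `search` fails consistently, which points to a real defect or a broken test, while `checkout` has mixed outcomes and is a candidate for flakiness investigation.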

👉How do I balance manual and automated testing in an end-to-end testing strategy?

To balance manual and automated testing, use automation for repetitive, time-consuming tasks and manual testing for complex scenarios, edge cases, and user experience validation.

👉How do I handle dynamic content during end-to-end testing?

To handle dynamic content, use techniques like dynamic waits or assertions to ensure elements load correctly before interaction.
