As Large Language Models (LLMs) continue to advance, evaluating their effectiveness has become increasingly complex. These models, powered by massive datasets and intricate algorithms, can generate highly coherent and contextually relevant text. However, determining their actual performance involves a multifaceted process that takes into account several metrics like accuracy, efficiency, and bias.

Thus, the evaluation of LLMs must include both technical capabilities and also their potential for application, scalability, and ethical concerns. It is necessary to study how the models work under different conditions and can be improved and effectively integrated, through the detection of bias to human evaluation techniques.

- We'll dive deep into what LLM evaluation entails, providing a full scope of how these models are assessed in different contexts.

- In this blog, we will be discussing the essential metrics used to evaluate LLMs, including accuracy, fluency, coherence, and more, along with widely adopted best practices followed by researchers and AI practitioners.

- We will explore various methods used in LLM evaluation, such as human evaluation, automated metrics, and benchmarking against real-world tasks.

- You'll learn about the common challenges faced during LLM evaluation, including bias detection, handling ambiguous outputs, and scaling evaluation methods.

An Overview of Large Language Model

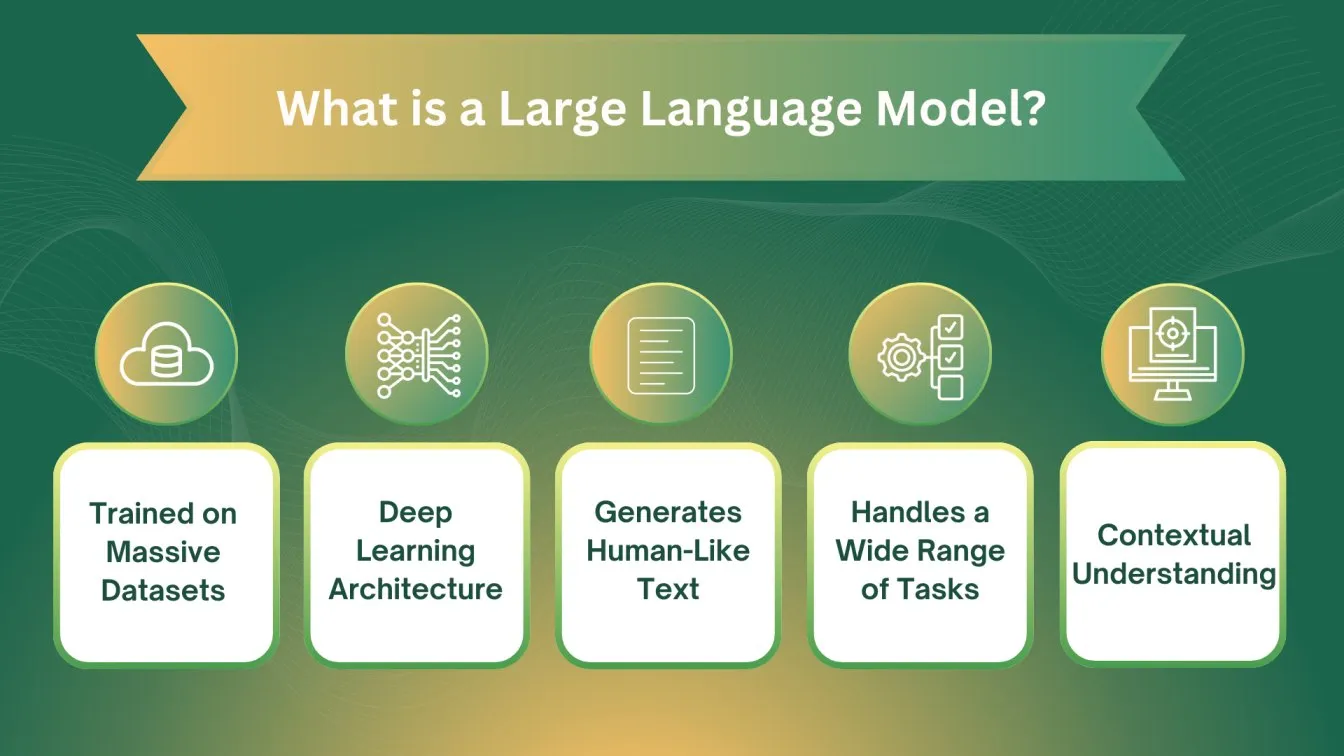

At its core, a Large Language Model (LLM) is a type of machine learning model designed to understand, generate, and manipulate human language. These models are trained on massive amounts of text data using deep learning algorithms, enabling them to predict and generate language outputs based on the input they receive.

LLMs excel in a variety of tasks like text generation, translation, summarization, and answering questions, making them highly versatile in the realm of natural language processing (NLP).

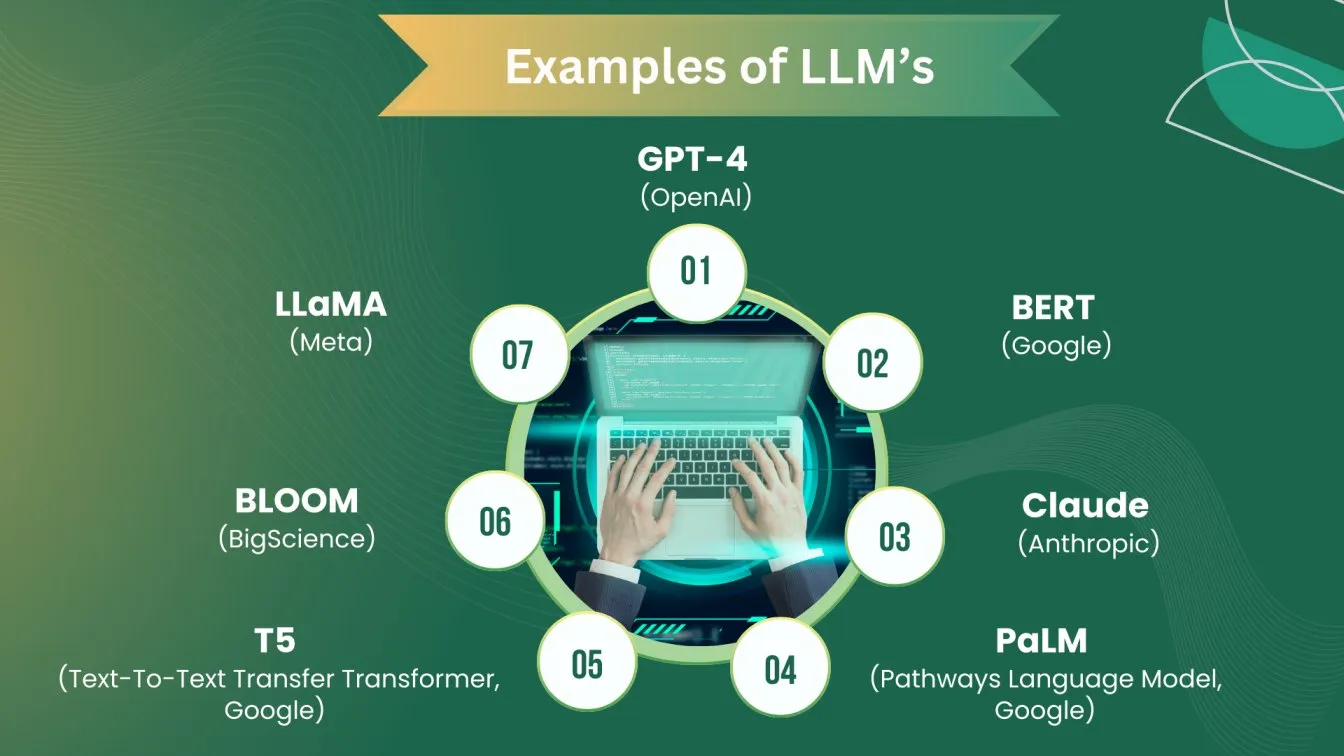

Large Language Models (LLMs) are a significant development in natural language processing (NLP), machine learning, and artificial intelligence (AI).. These models, trained on vast datasets, can understand, generate, and even interact with human language in a way that mirrors human communication. Built on deep learning techniques, LLMs like GPT and BERT leverage enormous neural networks to process language and predict text outputs.

Various open-source LLM models, such as GPT-Neo and BLOOM, have made this technology more accessible to developers and researchers. These models are powering innovations across industries, from LLM AI models that support automation to top LLM models in research that push the boundaries of what AI can achieve.

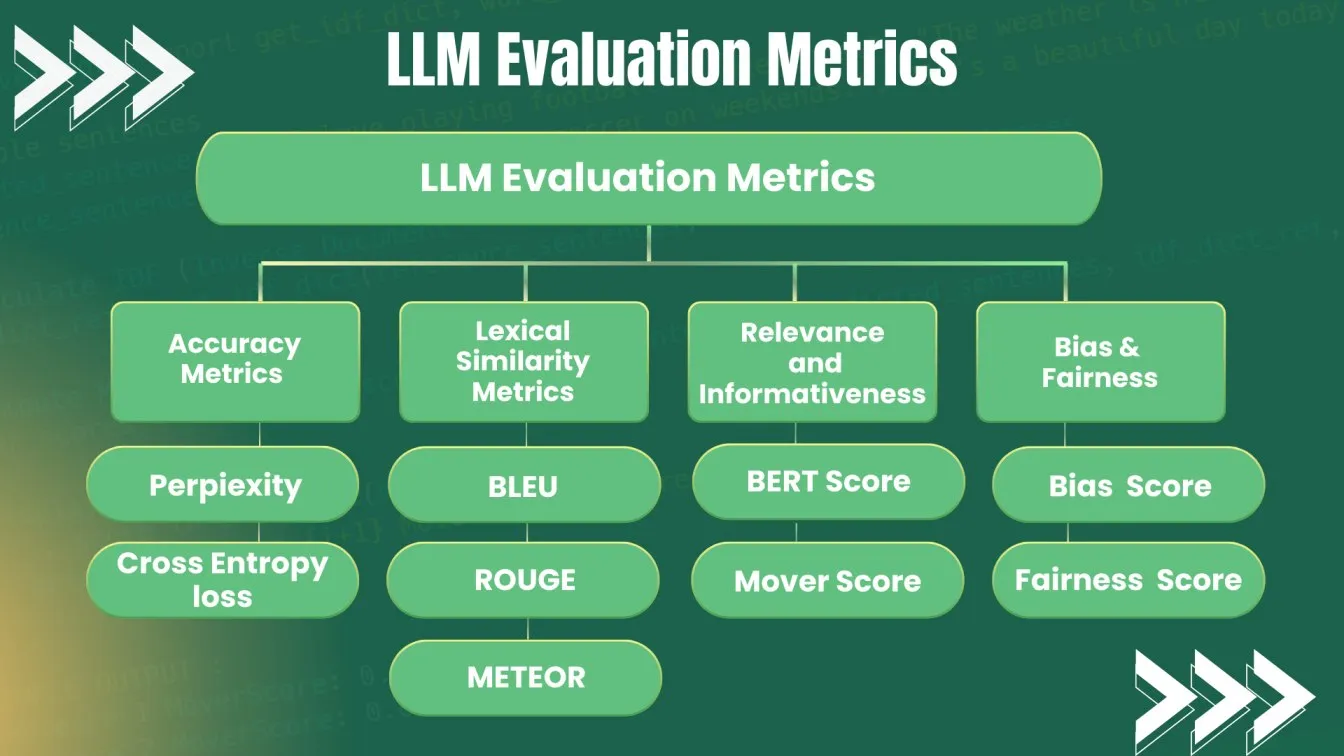

Key Metrics for Evaluating LLM

When evaluating the performance of Large Language Models (LLMs), several key metrics are essential to ensure their effectiveness in various applications, including artificial intelligence, generative artificial intelligence, and chatbot artificial intelligence.

Accuracy Metrics

- Perplexity:

Perplexity is a key metric used to evaluate the performance of language models by measuring how well a model predicts a sequence of words. It is defined as the exponential of the average negative log-likelihood of the predicted probability for each word in the test dataset. A lower perplexity indicates better performance, as the model is more confident in its predictions.

.webp)

- Cross Entropy Loss:

Cross-entropy loss is a key metric in training machine learning models, particularly in tasks involving classification, like in large language models (LLMs). It quantifies how far off the predicted probabilities are from the actual target values. The function essentially compares two probability distributions: the true distribution (ground truth) and the predicted distribution output by the model.

The loss increases as the predicted probability diverges from the actual label, meaning if the model predicts a very low probability for the correct class, it is penalized more heavily. This makes cross-entropy crucial for helping the model learn to make more accurate predictions over time.

Lexical Similarity Metrics

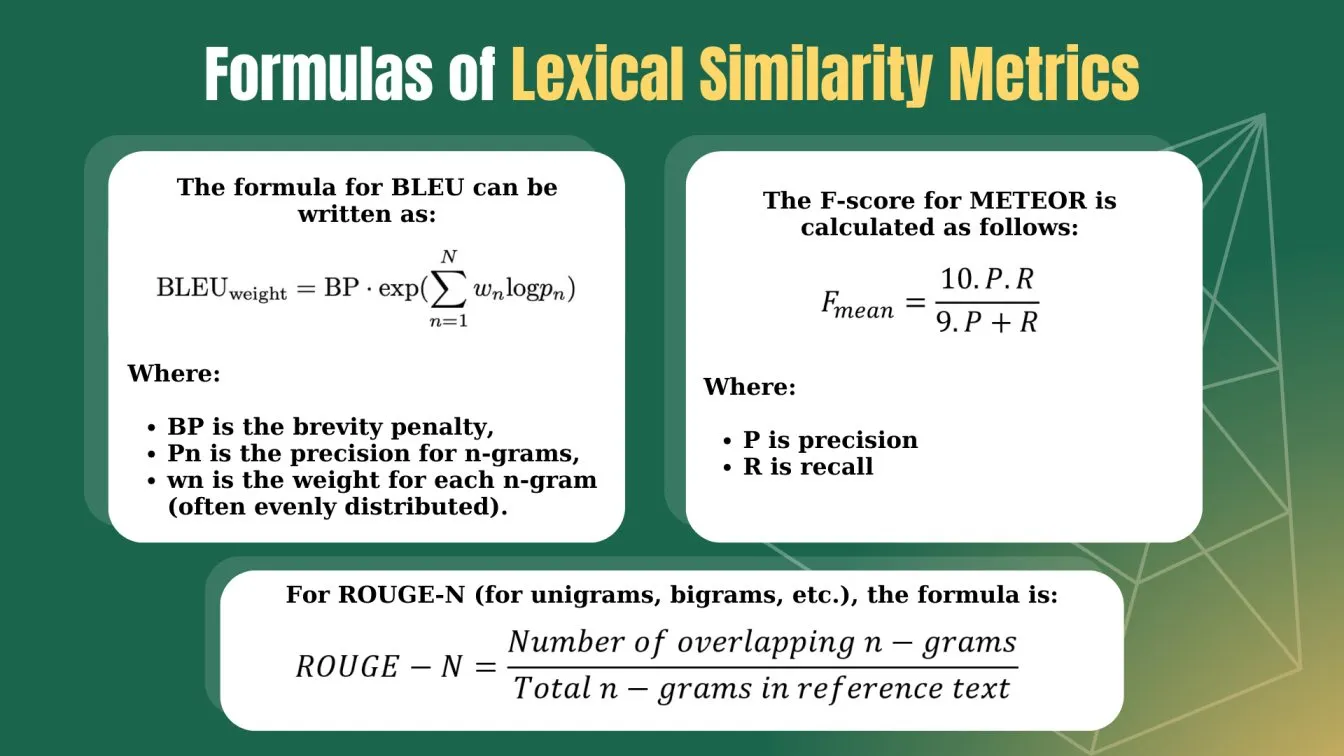

- BLEU:

BLEU (Bilingual Evaluation Understudy) is a metric used to evaluate the quality of machine-generated text, especially in tasks like machine translation, text summarization, and more. It compares the generated text to one or more reference texts and calculates how closely they match based on overlapping n-grams (sequences of words).

Here’s how BLEU works:

- N-gram Overlap: BLEU calculates the proportion of overlapping n-grams (e.g., single words, pairs of words, etc.) between the machine-generated text and the reference text. It typically considers 1-grams (single words), 2-grams, 3-grams, and 4-grams.

- Precision: The precision is calculated for each n-gram, which is essentially the fraction of overlapping n-grams between the machine output and the reference.

- Brevity Penalty (BP): BLEU applies a brevity penalty to prevent the system from generating overly short sentences that might have high precision but low overall quality. If the machine-generated sentence is too short compared to the reference, a penalty is applied.

- ROUGE:

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is a set of metrics used to evaluate the quality of text generated by natural language processing (NLP) models, especially in tasks like text summarization. Unlike BLEU, which focuses on precision, ROUGE is primarily focused on recall, making it particularly useful when measuring how much of the reference text's content is captured by the generated text.

Here are the main ROUGE metrics:

- ROUGE-N: Measures the overlap of n-grams between the reference text and the generated text.

- ROUGE-1: Evaluate the overlap of single words (unigrams).

- ROUGE-2: Measures the overlap of bigrams (two consecutive words).

- ROUGE-L: Focuses on the longest common subsequence (LCS), assessing how well the generated text captures the longest shared sequence of words.

- ROUGE-L: Unlike ROUGE-N, this metric is based on the longest common subsequence (LCS), which helps evaluate how well the generated text preserves the structure of the reference text.

- ROUGE-W: Puts more weight on longer continuous matches, meaning that longer-matched sequences of words between the generated text and the reference receive higher scores.

- ROUGE-S: Also called skip-bigram, it measures the overlap of bigrams that are not necessarily consecutive, capturing more flexible matches between the two texts.

- METEOR:

METEOR (Metric for Evaluation of Translation with Explicit ORdering) is an evaluation metric designed for machine translation and natural language generation tasks, improving upon BLEU by considering both precision and recall. It addresses some of BLEU's limitations by incorporating factors like synonymy, stemming, and word order to better align with human judgment.

Key Features of METEOR:

- Precision and Recall: Unlike BLEU, METEOR balances precision and recall to ensure that more of the reference content is captured in the generated text.

- Synonym and Stem Matching: It allows for matches based on synonyms and word stems, recognizing variations in language.

- Word Order Sensitivity: METEOR applies a penalty for incorrect word order, promoting fluent and meaningful translations.

Alignment-Based: It aligns unigrams between the generated and reference texts, rewarding translations that maintain meaning.

Relevance and Informativeness

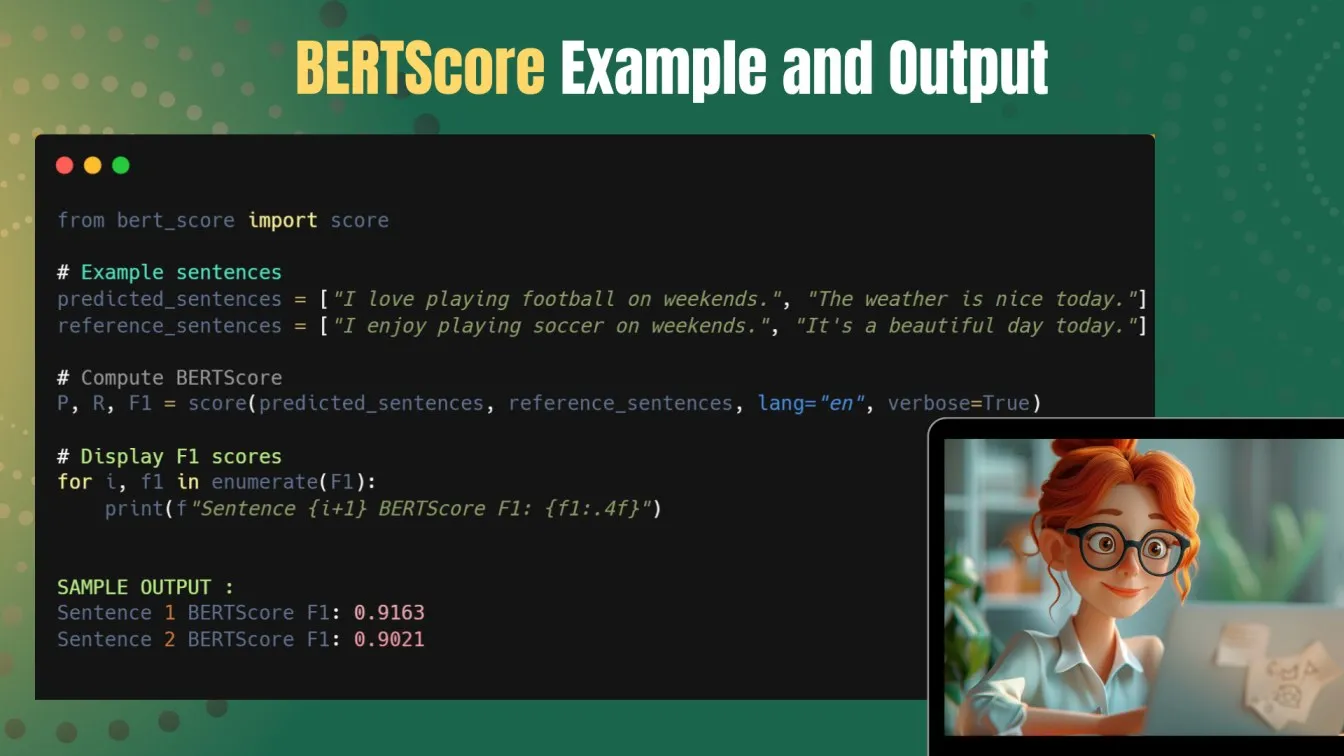

- Bert Score:

BERTScore is a newly very popular quality evaluation metric for text generated by language models, using contextual embeddings from the pre-trained BERT, Bidirectional Encoder Representations from Transformers, language model. Unlike traditional metrics, it relies instead on cosine similarity between the embeddings of the generated text and the reference text.

The BERTScore is calculated using the following steps:

- Generate embeddings: For each word of the candidate and reference texts, apply a pre-trained BERT model to generate their embeddings.

- Calculating cosine similarity: Calculate the cosine similarity of every token in the candidate with the nearest token in the reference.

- Aggregate scores: Sum up the similarity scores, including precision, recall, and F1 score.

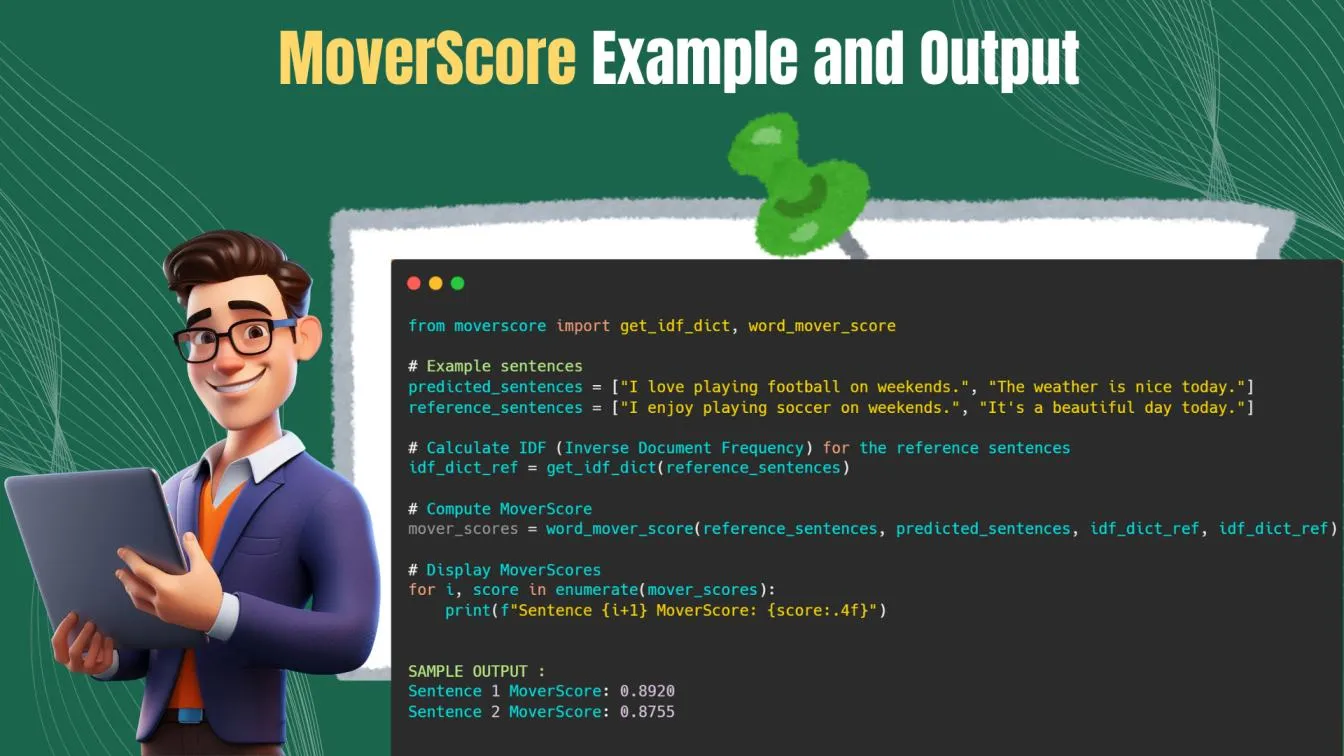

- MoverScore:

MoverScore is an innovative metric designed to evaluate the quality of generated text by capturing the semantic similarity between the generated and reference texts. Unlike traditional metrics that often focus on exact word matches or n-gram overlap.

The steps to calculate the MoverScore are as follows:

- Generate embeddings: Let's consider a pre-trained model, such as BERT, for example. Compute word embeddings corresponding to the candidate and reference texts.

- Compute pairwise distances: For every pair of word embeddings that are in the candidate and in the reference, compute the cosine distance.

- Solve the optimal transport problem: Use EMD to obtain the minimum cost to transport the candidate word distribution to the reference word distribution.

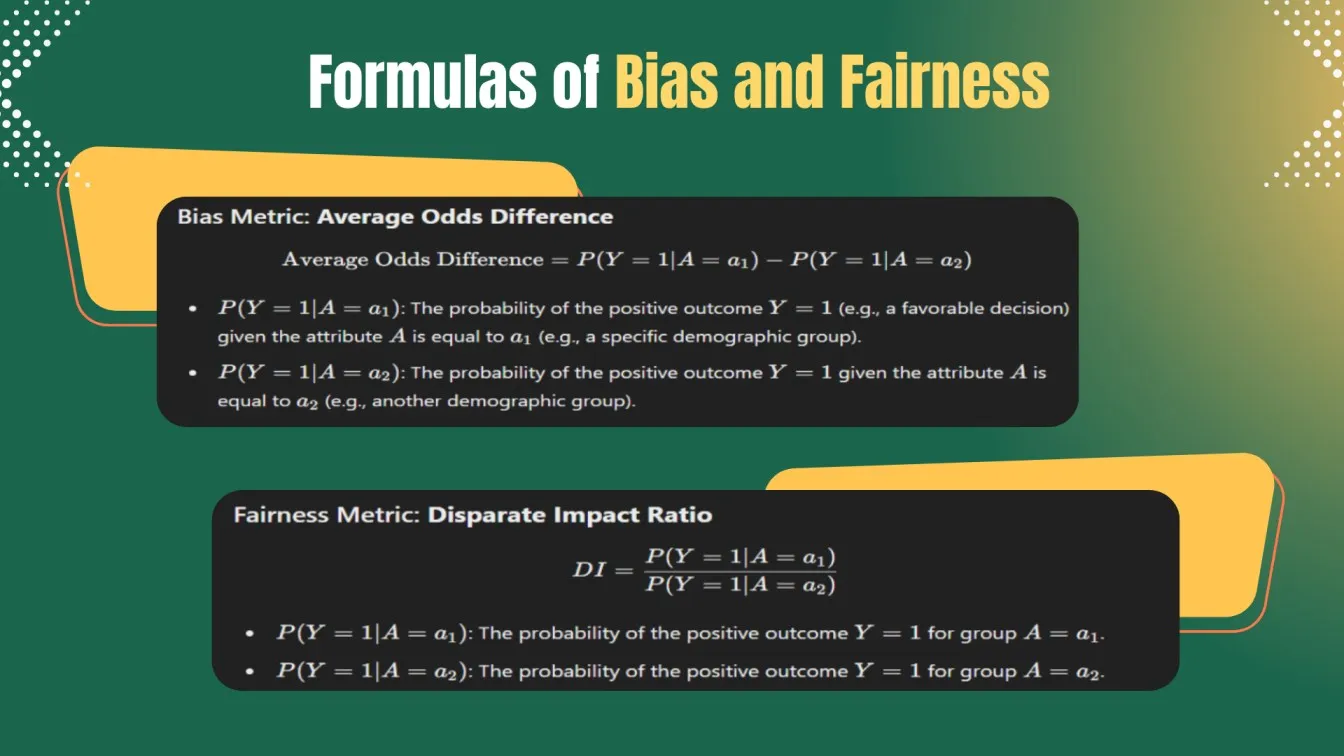

Bias and Fairness

Bias metrics are used to quantify and evaluate biases in machine learning models, particularly concerning how predictions differ across various groups based on sensitive attributes such as race, gender, or age.

Types of Bias:

- Data Bias: Arises from imbalances in training data.

- Algorithmic Bias: Introduced by the algorithms themselves.

- Representation Bias: Occurs when certain groups are underrepresented in the data.

Fairness metrics are used to evaluate how fairly a machine learning model treats different groups of individuals based on sensitive attributes (such as race, gender, or age). These metrics aim to identify and measure biases in the model's predictions and ensure equitable outcomes across diverse populations.

Tools and Frameworks for LLM Evaluation

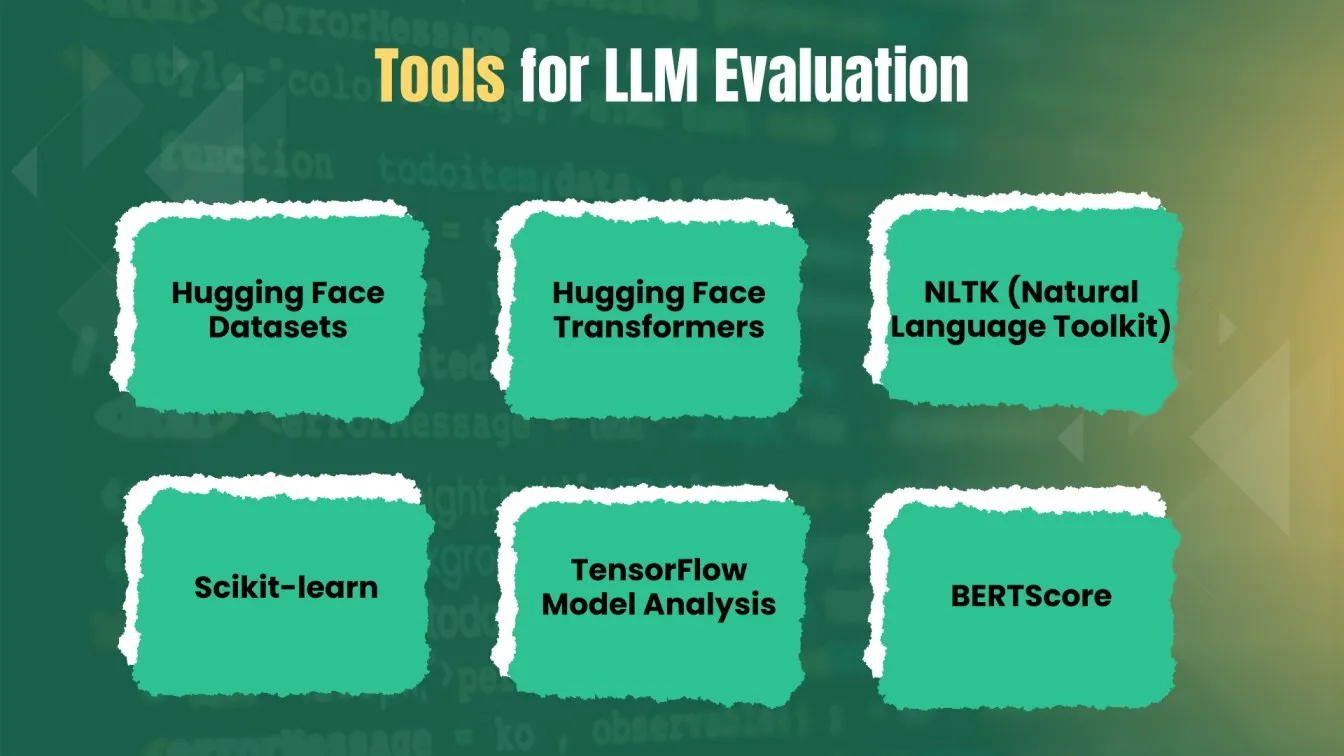

Tools for LLM Evaluation

- Hugging Face Datasets: This library offers a wide array of datasets for training and evaluating language models. It simplifies the loading and preprocessing of data, making it easier for researchers and developers to focus on model performance.

- Hugging Face Transformers: A comprehensive library for implementing transformer-based models, this tool provides straightforward interfaces for evaluation, including a variety of metrics to assess model outputs.

- NLTK (Natural Language Toolkit): NLTK is a popular Python library that includes a suite of tools for NLP tasks, offering utilities to compute evaluation metrics like BLEU and ROUGE, which measure the quality of generated text against reference texts.

- Scikit-learn: A versatile machine learning library that provides numerous tools for model evaluation, including metrics for classification and regression tasks, as well as techniques for cross-validation and confusion matrix generation.

- TensorFlow Model Analysis: This library enables the evaluation of TensorFlow models, providing insights into model performance, and helping to assess accuracy and fairness across various metrics.

- BERTScore: A specific evaluation metric tool that uses BERT embeddings to measure the semantic similarity between generated and reference texts, offering a nuanced assessment of text quality.

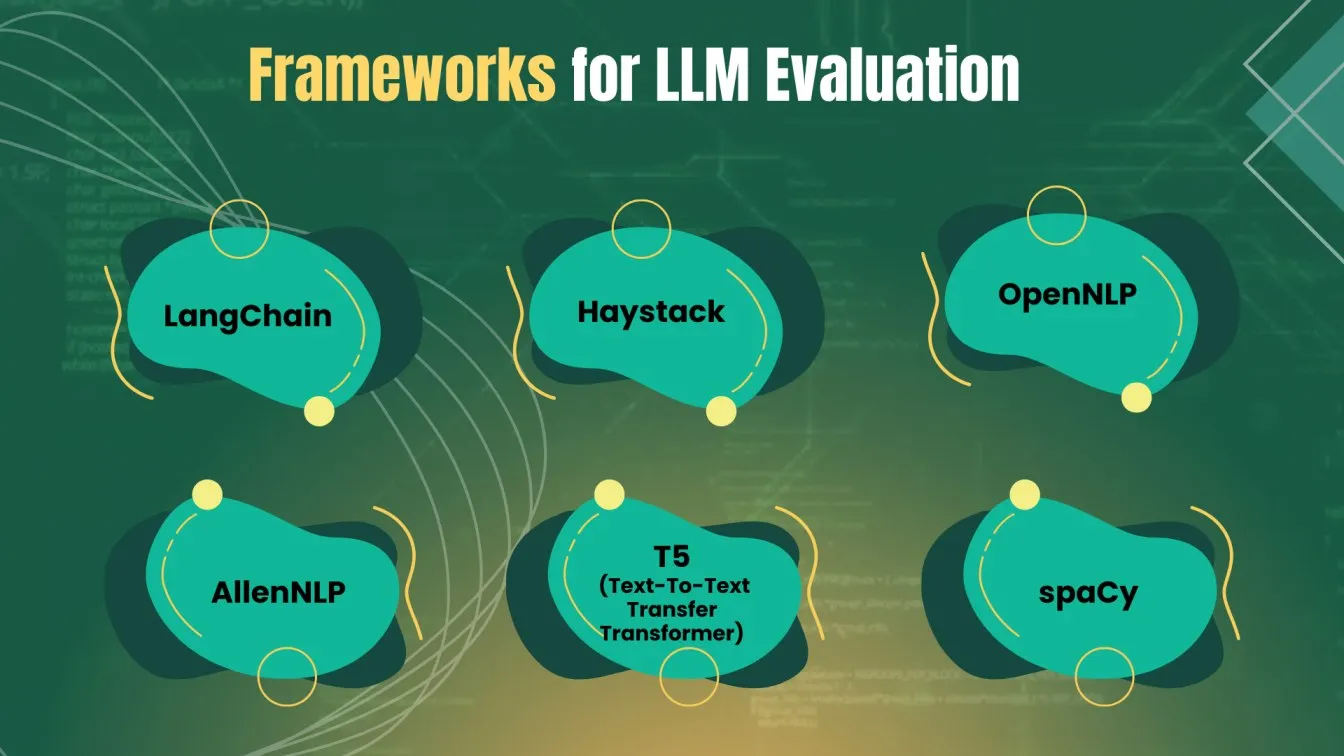

Frameworks for LLM Evaluation

- LangChain: This framework simplifies the development of applications utilizing language models by providing modular components. It enhances the evaluation process by integrating various tools and metrics for performance assessment.

- Haystack: An open-source framework designed for building search and question-answering systems, Haystack offers built-in capabilities for evaluating LLM performance, focusing on accuracy and relevance.

- OpenNLP: A machine learning-based framework for natural language processing, OpenNLP provides various models and tools that facilitate the evaluation of NLP tasks, including entity recognition and parsing.

- AllenNLP: Built on PyTorch, AllenNLP is a research library that supports the development of NLP models, providing essential tools for benchmarking and evaluating model performance through various metrics.

- T5 (Text-To-Text Transfer Transformer): This framework is designed for multiple NLP tasks by treating all tasks as text-to-text transformations, allowing for straightforward evaluation of generated outputs against expected results.

- spaCy: A robust NLP library that offers tools for model training and evaluation, spaCy covers a range of tasks including tokenization and named entity recognition, making it versatile for various applications.

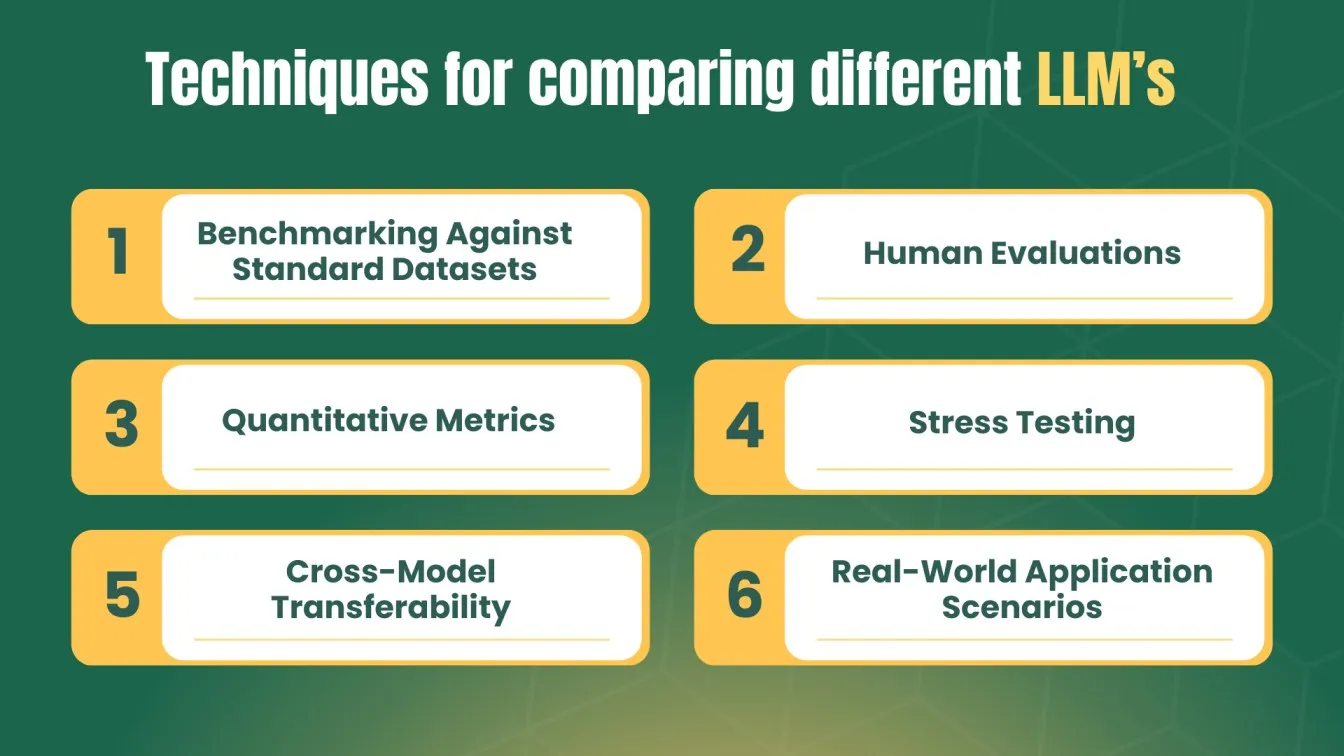

Comparing Different LLM Models: Techniques and Approaches

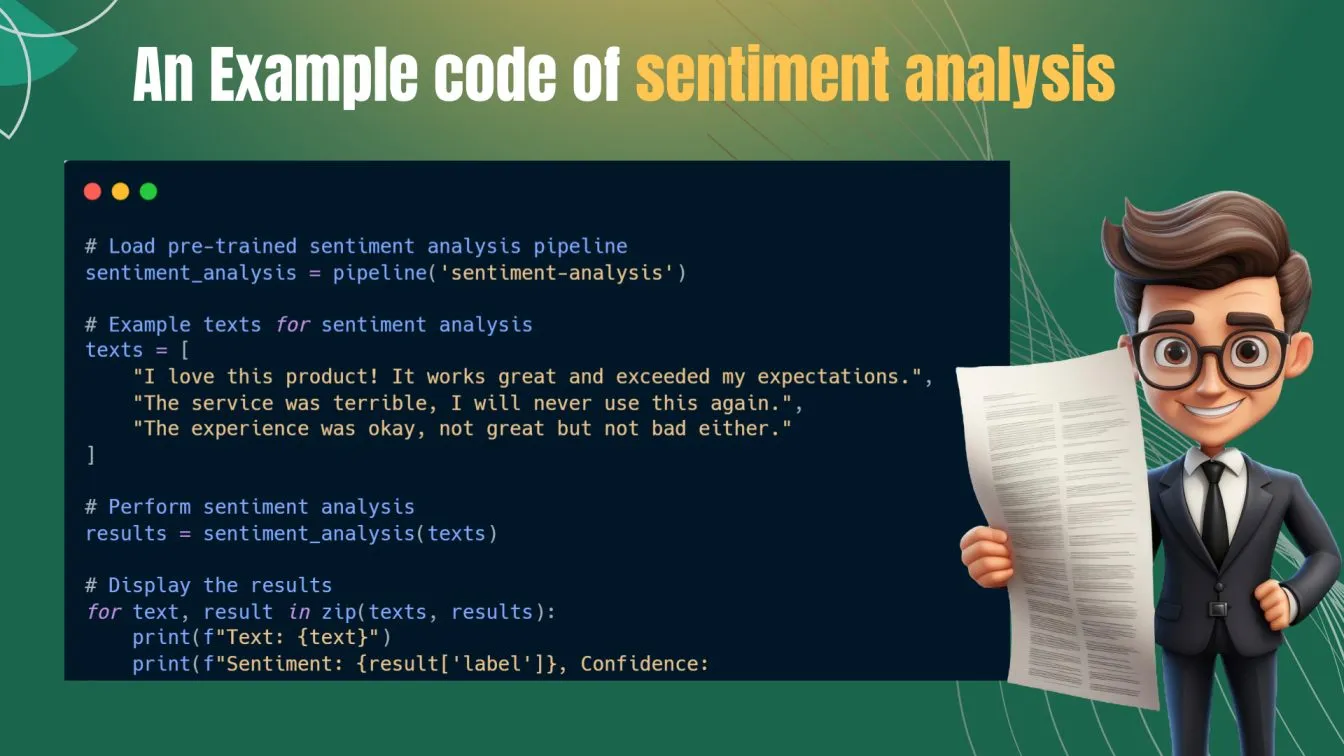

When comparing different Large Language Models (LLMs), it is essential to adopt a systematic approach that encompasses a range of techniques and evaluation criteria. One effective method is through benchmark tasks, which provide standardized datasets and challenges for assessing LLMs. For instance, sentiment analysis tasks can reveal how well models understand emotional tone, while summarization tasks assess their ability to generate concise and coherent reference summaries.

In addition to quantitative metrics, human evaluations play a crucial role in assessing LLM performance. Human feedback can provide insights into the context understanding of the models, as humans are adept at evaluating nuances in language that automated assessments might overlook. For example, when performing automatic summarizations, models can be compared based on their ability to maintain factual accuracy and coherence in the generated outputs.

Ultimately, large-scale evaluation frameworks often rely on diverse evaluation datasets to ensure robustness. By utilizing a variety of reference translations and evaluation tasks, researchers can explore the performance of pre-trained language models across different scenarios. In testing, functional testing of LLMs ensures that they meet their defined requirements, while stress testing evaluates how models perform under extreme or unpredictable conditions.

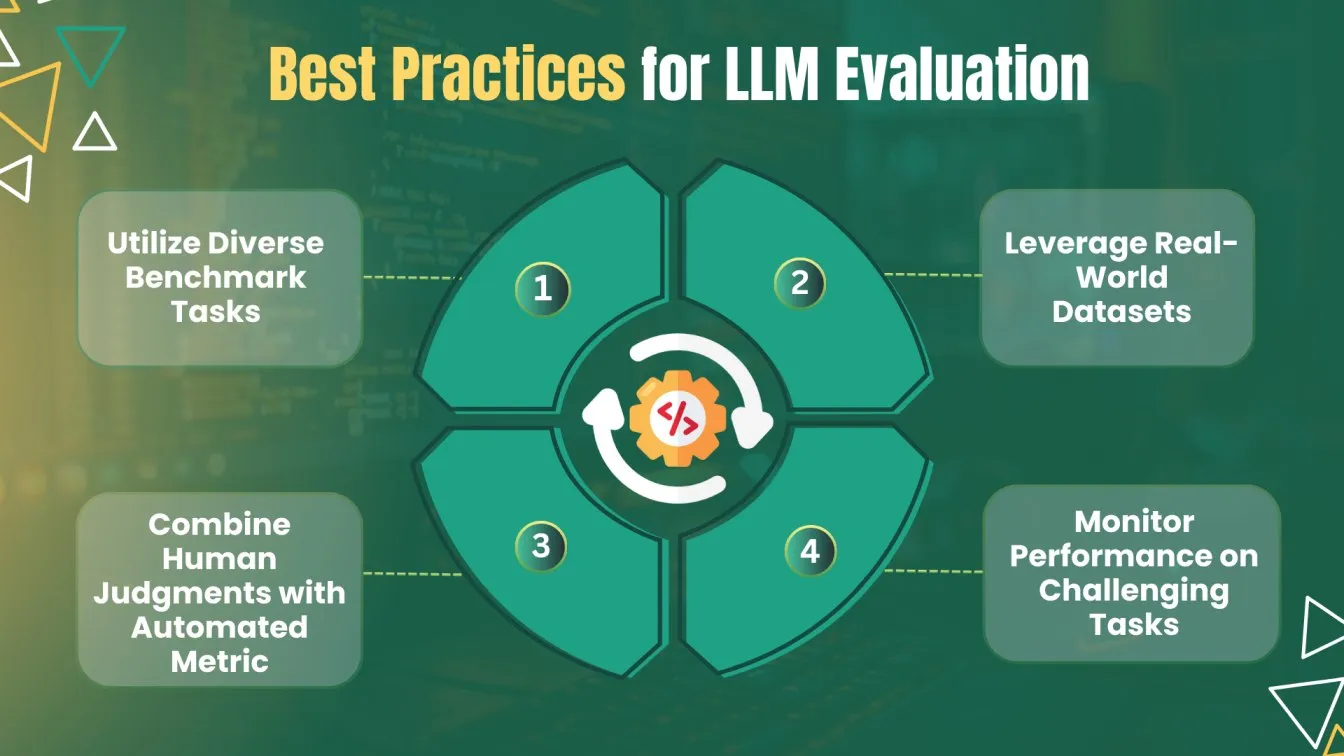

Best Practices for LLM Evaluation

Evaluating Large Language Models (LLMs) with AI-driven techniques requires a focus on both factual consistency and textual entailment to ensure the generated outputs are accurate and logical. For testing, automation can be leveraged to create efficient stress testing that compare the model’s responses to expected outcomes, flagging any issues like factual inaccuracies or hallucinations.

- Utilize Diverse Benchmark Tasks: Employ a variety of tasks, such as sentiment analysis, summarization, and textual entailment, to evaluate different aspects of model performance.

- Leverage Real-World Datasets: Use evaluation datasets that reflect real-world scenarios to test the model’s ability to generalize and handle complex, practical applications.

- Combine Human Judgments with Automated Metrics: Use human evaluations to complement automated tests, focusing on aspects like contextual understanding, coherence, and natural language fluency.

- Monitor Performance on Challenging Tasks: Continuously evaluate LLMs on difficult and edge cases to gauge robustness and adaptability in more nuanced language scenarios.

In practical scenarios, AI-driven testing can streamline the evaluation process by handling large-scale comparisons across different models, but human judgments remain essential for assessing nuanced language aspects, such as contextual relevance and fluency. By integrating automated testing with manual assessments, teams can perform comparative analysis across models, focusing on challenging tasks that simulate real-world applications.

Challenges in Evaluating LLMs

- Subjectivity in Human Judgments:

- Variability in standards and interpretations among different evaluators can lead to inconsistencies in assessments.

- Resource-Intensiveness of Human Evaluations:

- Obtaining reliable human feedback can be time-consuming and labor-intensive, complicating large-scale evaluations.

- Limitations of Existing Metrics:

- Many evaluation metrics fail to capture nuanced aspects of language, such as context understanding and implicit meanings.

- Complexity of Language Understanding:

- The intricate nature of human language makes it challenging to develop metrics that comprehensively assess model performance.

- Adapting to Diverse Applications:

- Evaluating models across various real-world applications requires a wide range of tailored evaluation criteria and datasets.

- Scalability Issues:

- As LLMs grow larger and more complex, traditional evaluation methods may struggle to keep up with their scale and performance nuances.

- Overfitting to Evaluation Tasks:

- Models may perform well on benchmark tasks but fail to generalize to less structured or novel scenarios, leading to misleading evaluations.

Future Trends in LLM Evaluation

As the field of Large Language Models (LLMs) continues to evolve, future trends in LLM evaluation are poised to reflect advancements in artificial intelligence (AI) and machine learning (ML) testing methodologies. One key trend is the increasing emphasis on automated evaluation frameworks that leverage AI/ML techniques to streamline the assessment process. These frameworks can also facilitate continuous integration and delivery (CI/CD) practices, where LLMs are tested and deployed more seamlessly throughout the development lifecycle.

Another significant trend is the growing focus on contextual and domain-specific evaluations for complex tasks. As LLMs are increasingly applied to a broader range of real-world scenarios, it is essential to tailor evaluation metrics that reflect the nuances of specific domains. This includes accounting for the unique challenges presented by critical tasks such as medical diagnostics, legal text interpretation, or creative writing.

To assess difficult tasks, powerful tools must be employed to measure primary metrics like user satisfaction and user experience. Addressing common challenges requires expanding the evaluation dimensions to ensure LLMs deliver high-quality performance across diverse applications.

Conclusion

In this blog, we looked into the key practices and metrics used to evaluate Large Language Models (LLMs). These models, built on deep learning architectures, have revolutionized natural language processing (NLP) by generating human-like text and excelling in tasks such as text generation, translation, and summarization. A crucial part of working with LLMs involves understanding and applying evaluation metrics like perplexity, cross-entropy loss, BLEU, and BERTScore to ensure the models perform optimally in real-world applications.

Moreover, the blog outlines some of the top tools and frameworks like Hugging Face Datasets, LangChain, and OpenNLP that can streamline LLM evaluation processes. It also emphasizes the importance of combining human judgment with automated tools for a more nuanced evaluation.

As LLMs continue to evolve, we explore future trends that focus on automated, domain-specific evaluations, ensuring that these powerful models meet the increasing demands of various industries, such as healthcare, legal, and creative fields.

People also asked

👉 What are the evaluation standards for LLM?

LLMs are evaluated based on criteria like accuracy, fluency, factual consistency, relevance, and efficiency, often using benchmarks like SQuAD, GLUE, and HELM to assess performance.

👉 What is the BLEU score for LLM?

BLEU (Bilingual Evaluation Understudy) is a metric used to evaluate machine translation by comparing machine-generated text to a reference text, assessing how similar the two are in terms of word sequences.

👉 What is benchmarking in LLM?

Benchmarking involves comparing the performance of an LLM against standardized datasets and tasks to measure its effectiveness across various linguistic challenges, such as comprehension, generation, and reasoning.

👉 What is the consistency score of the LLM?

This score assesses how consistently the LLM provides accurate, repeatable responses to the same or similar inputs, indicating reliability in its reasoning and output generation.

👉 What are the metrics for LLM accuracy?

Common metrics include perplexity (how well the model predicts the next word), precision, recall, F1 score, and accuracy in question answering or text generation tasks.

👉 How to evaluate fine-tuned LLM?

Fine-tuned LLMs are evaluated by comparing their performance before and after fine-tuning, using domain-specific tasks and datasets, and measuring improvements in accuracy, relevance, and language fluency.

%201.webp)