AI is changing software testing by enabling proactive defect prediction and wide-ranging prevention strategies. Traditionally, most defects are detected only after they occur, which makes fixes expensive and delays product releases. In contrast, AI-based testing predicts potential defects during the development lifecycle, allowing teams to prevent them from surfacing 🤖✨.

This blog explores how Artificial Intelligence (AI) revolutionizes software testing by enabling proactive defect prediction and prevention. Readers will learn about the significance of identifying flaky test cases, the role of AI in enhancing testing efficiency, and practical strategies for optimizing testing processes. By understanding these concepts, software professionals can effectively leverage AI to improve their testing frameworks and deliver higher-quality software.

Besides predicting defects, AI makes prevention more holistic: it can help generate test cases automatically, optimize test coverage, and learn continuously from new data. As the system evolves, AI adjusts its predictions and improves their accuracy, which makes testing both more efficient and more effective 🔧🛠️.

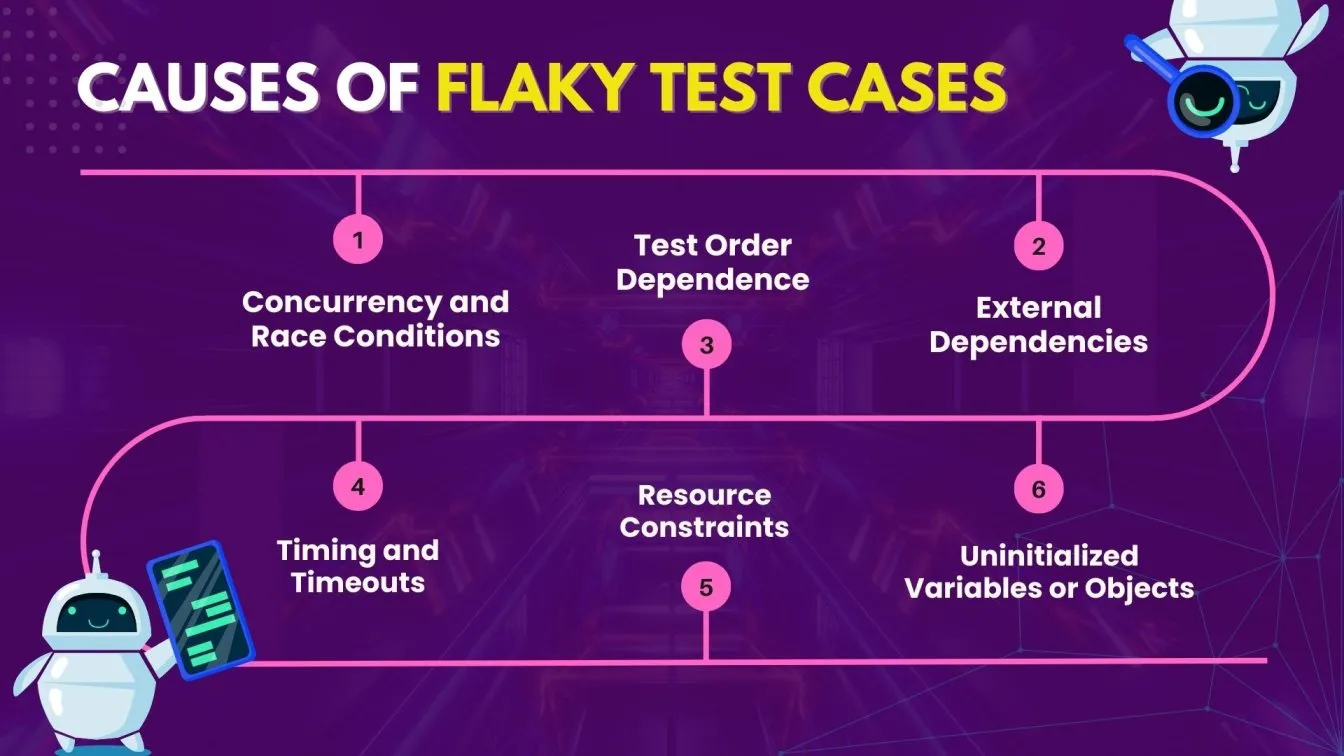

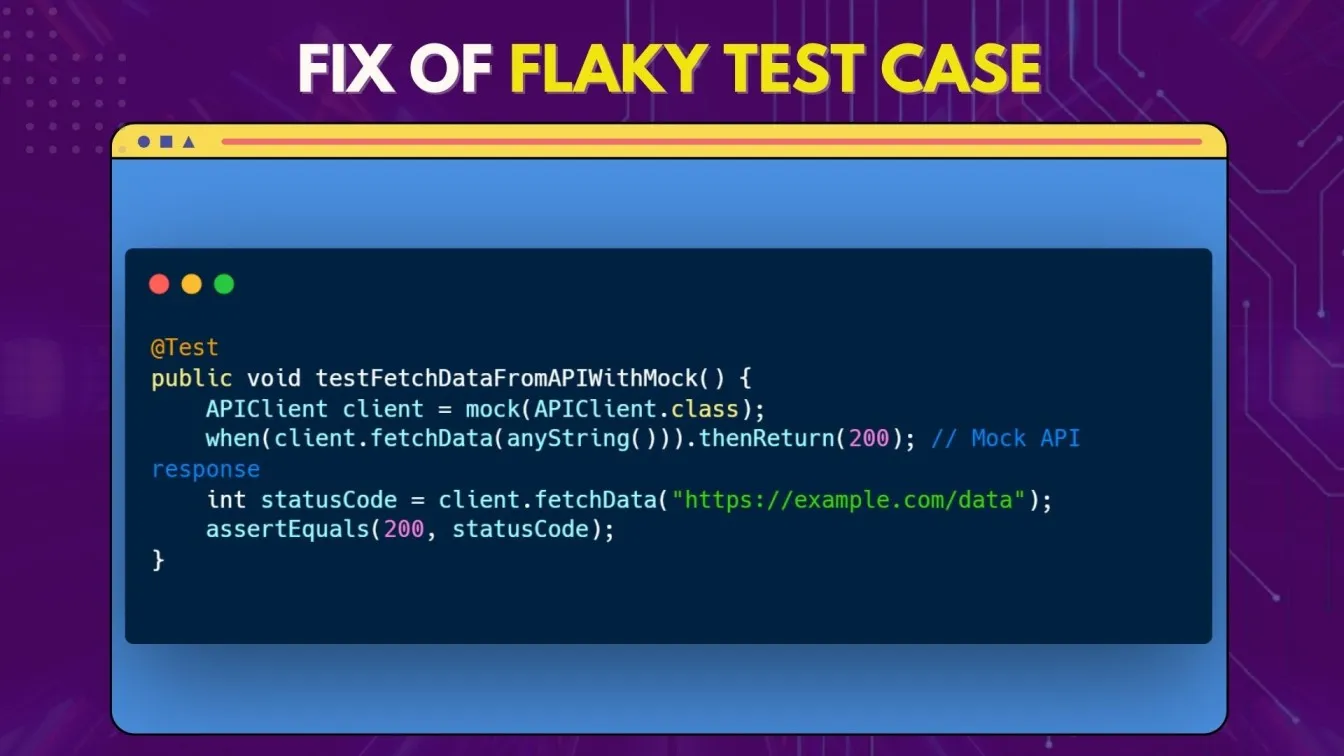

What are Flaky Test Cases?

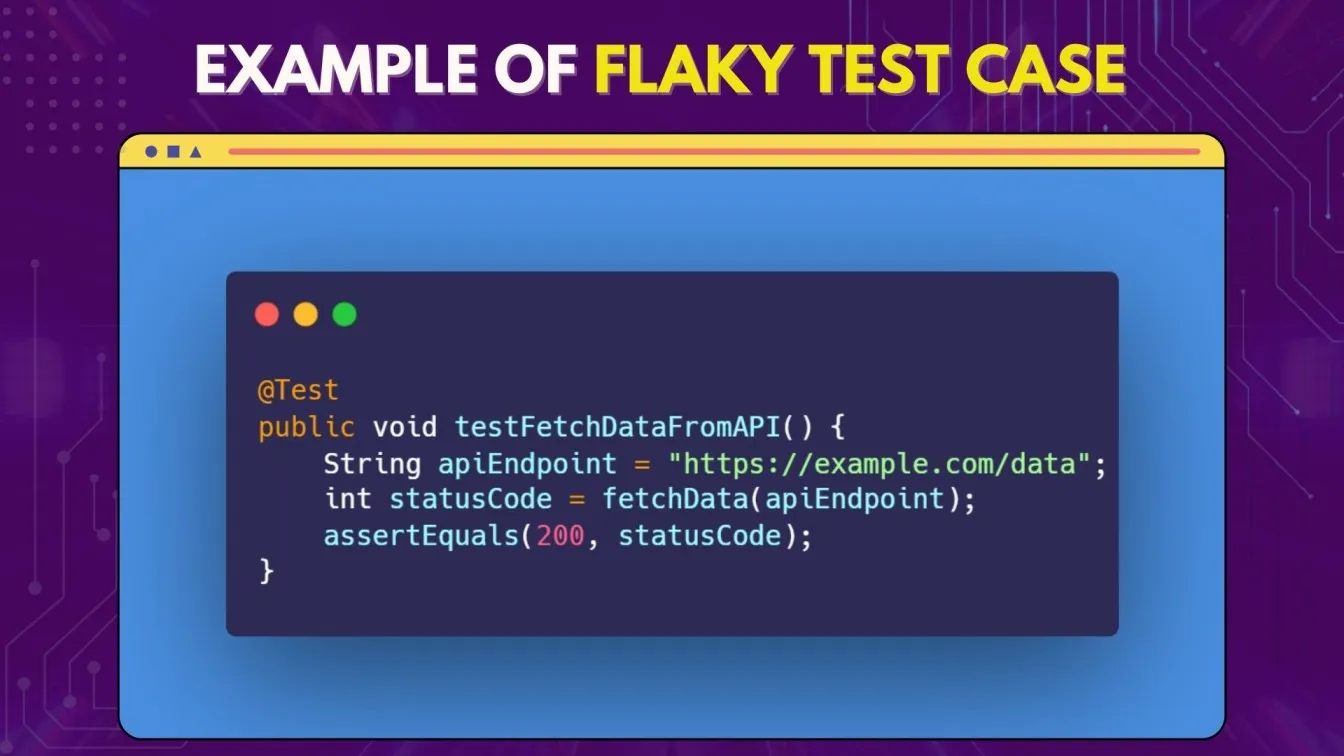

Flaky tests are tests that sometimes pass and sometimes fail across runs of the same code, hence the term flaky. There is often no obvious reason for the inconsistency: the test passes on one run and fails on the next 🤔💻.

Flakiness creates a significant challenge because it makes it difficult to tell whether a failure stems from an actual bug in the code or from an issue specific to the test itself. This ambiguity wastes valuable time and resources and complicates Quality Assurance efforts, especially in complex scenarios where accuracy is crucial. Relying on manual methods to troubleshoot these failures slows the process down and adds more strain to the CI pipeline.

- Impact of Flaky Test Cases:

- Loss of Confidence in Tests

- The unpredictable nature of flaky tests erodes trust in the test suite. Developers grow accustomed to failing tests and start dismissing failures as ordinary flakiness instead of investigating them as possible bugs 😞.

- Higher Development and Debugging Time

- Developers and quality assurance (QA) engineers waste a lot of time investigating and rerunning flaky tests to confirm whether a failure was real. This adds considerable overhead to the development cycle and slows down releases, especially in CI/CD pipelines ⏱️🚧.

- CI Pipeline Failures

- Continuous Integration pipelines rely on automated tests to safeguard code quality. Flaky tests make pipelines fail intermittently and interrupt the development flow with unnecessary debugging or reruns, making the process drag on ⚠️🔄.


Techniques to Identify Flaky Test Cases

Identifying flaky test cases is crucial to maintaining the reliability of automated test suites. Here are some effective techniques to identify flaky tests:

- Rerun Tests Multiple Times

- One of the simplest ways to detect flaky tests is by running the test suite multiple times in different environments and comparing the results. If a test passes in one run and fails in another without code changes, it is likely flaky🔁.

- Parallel Execution

- Flaky tests often surface in parallel or concurrent environments due to race conditions or improper resource management. Running the test suite in parallel across different threads or machines can expose such issues🌐.

- Analyze Test Logs

- Log analysis helps identify patterns in test behavior. Flaky tests often exhibit inconsistencies in setup, execution, or teardown phases that can be detected through log files.📜

- Randomized Input/Execution Order

- Changing the execution order of tests or using randomized input data can help catch flakiness due to dependency issues between tests or reliance on specific execution orders.🔀

- Test Splitting

- Split large tests into smaller, more focused units. Tests that cover too much ground or try to do too many things are more likely to become flaky. By focusing on small, isolated functionalities, you reduce the chance of inconsistent results.🧩
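As a rough illustration of the rerun technique above, the sketch below scans recorded test results and flags any test that both passed and failed on the same commit. The `(test_name, commit_id, passed)` record shape, the function name, and the sample data are assumptions made for this example, not the output format of any particular CI system.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return names of tests that both passed and failed on the same commit.

    `runs` is an iterable of (test_name, commit_id, passed) tuples
    (a hypothetical record shape chosen for this sketch).
    """
    outcomes = defaultdict(set)
    for test_name, commit_id, passed in runs:
        outcomes[(test_name, commit_id)].add(passed)
    # Identical code producing both outcomes is the signature of flakiness.
    flaky = {name for (name, _), seen in outcomes.items() if seen == {True, False}}
    return sorted(flaky)


history = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),    # same commit, different outcome
    ("test_checkout", "abc123", True),
    ("test_checkout", "abc123", True),
    ("test_search", "abc123", False),
    ("test_search", "def456", True),    # outcome changed, but so did the code
]
print(find_flaky_tests(history))  # ['test_login']
```

Grouping by commit matters here: `test_search` changes outcome too, but only after a code change, so it is not reported as flaky.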

What is Proactive Defect Prediction?

Proactive defect prediction is an advanced approach in software development aimed at identifying potential defects before they manifest in the software. It leverages historical data, such as past bug reports, code changes, and testing patterns, along with machine learning and statistical models to analyze and predict areas of the codebase that are more prone to errors 🔍📊.

By identifying high-risk segments early, teams can focus their testing and development efforts more effectively, ensuring higher quality and reducing the likelihood of post-release issues. This method not only enhances code reliability but also optimizes resource allocation by targeting areas most likely to need attention, improving overall efficiency in the development process 🚀✨.
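To make the idea of learning from historical data concrete, here is a minimal sketch that ranks files by the share of their past changes that were bug fixes. It is a simple heuristic standing in for a real statistical or machine learning model, and the commit record shape, file names, and scoring rule are all assumptions made for illustration.

```python
from collections import Counter


def rank_files_by_risk(commits):
    """Rank files by the share of their past changes that were bug fixes.

    `commits` is a list of (changed_files, is_bug_fix) pairs
    (a hypothetical record shape chosen for this sketch).
    """
    changes = Counter()
    fixes = Counter()
    for changed_files, is_bug_fix in commits:
        for path in changed_files:
            changes[path] += 1
            if is_bug_fix:
                fixes[path] += 1
    scored = [(fixes[path] / changes[path], changes[path], path) for path in changes]
    # Highest bug-fix share first; ties broken by churn, then by name.
    scored.sort(key=lambda item: (-item[0], -item[1], item[2]))
    return [(path, round(score, 2)) for score, _, path in scored]


commits = [
    (["cart.py", "payment.py"], True),
    (["payment.py"], True),
    (["payment.py", "search.py"], False),
    (["search.py"], False),
    (["cart.py"], False),
]
print(rank_files_by_risk(commits))
# [('payment.py', 0.67), ('cart.py', 0.5), ('search.py', 0.0)]
```

Files at the top of the ranking are candidates for extra review and testing before the next release.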

How Are Flaky Tests Related to Proactive Defect Prediction?

Flaky tests and proactive defect prediction are two crucial concepts in software testing that are interconnected in their aim to enhance software quality and development efficiency.

- Impact on Predictive Accuracy:

- Flaky tests can compromise the accuracy of defect predictions. If tests are unreliable, the data generated from them may lead to incorrect predictions about the code’s quality, making it harder for teams to prioritize their testing efforts effectively.

- Identifying Patterns:

- By analyzing the patterns of flaky tests alongside defect data, teams can gain insights into the conditions that lead to test flakiness. This understanding can help improve both the tests themselves and the proactive defect prediction models.

- Focus on High-Risk Areas:

- Proactive defect prediction can help identify code areas prone to defects, allowing teams to focus their efforts on stabilizing flaky tests in those areas. Addressing the root causes of flakiness can lead to more reliable test results, which in turn enhances the predictive models’ accuracy.

- Enhanced Testing Strategies:

- Integrating proactive defect prediction with flaky test management enables teams to refine their testing strategies. By understanding which tests are flaky, teams can decide to rerun tests under different conditions, refine the tests, or even skip them if they are deemed unreliable.

- Continuous Improvement:

- The relationship between flaky tests and proactive defect prediction fosters a cycle of continuous improvement. As teams identify and address flaky tests, the quality of their defect predictions improves, leading to more effective testing and ultimately, higher software quality.
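One way to keep flaky results from polluting defect data is to label each failure before recording it. The sketch below reruns a failing test a few times and classifies the outcome as `pass`, `flaky`, or `fail`. The helper names and the simulated tests are hypothetical, and real test runners offer their own rerun mechanisms.

```python
def classify_with_reruns(test_fn, attempts=3):
    """Run `test_fn` up to `attempts` times and classify the result.

    "pass"  : passed on the first attempt
    "flaky" : failed at least once, then passed on a rerun
    "fail"  : failed on every attempt
    """
    for attempt in range(1, attempts + 1):
        try:
            test_fn()
        except AssertionError:
            continue  # failed this time, try again
        return "pass" if attempt == 1 else "flaky"
    return "fail"


def make_test(outcomes):
    """Build a fake test that passes or fails according to `outcomes`, in order."""
    remaining = iter(outcomes)

    def test():
        assert next(remaining), "simulated failure"

    return test


print(classify_with_reruns(make_test([True])))                 # pass
print(classify_with_reruns(make_test([False, True])))          # flaky
print(classify_with_reruns(make_test([False, False, False])))  # fail
```

Only results labelled `fail` would then count as defect signals, while `flaky` results go to a separate list of tests that need stabilizing.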

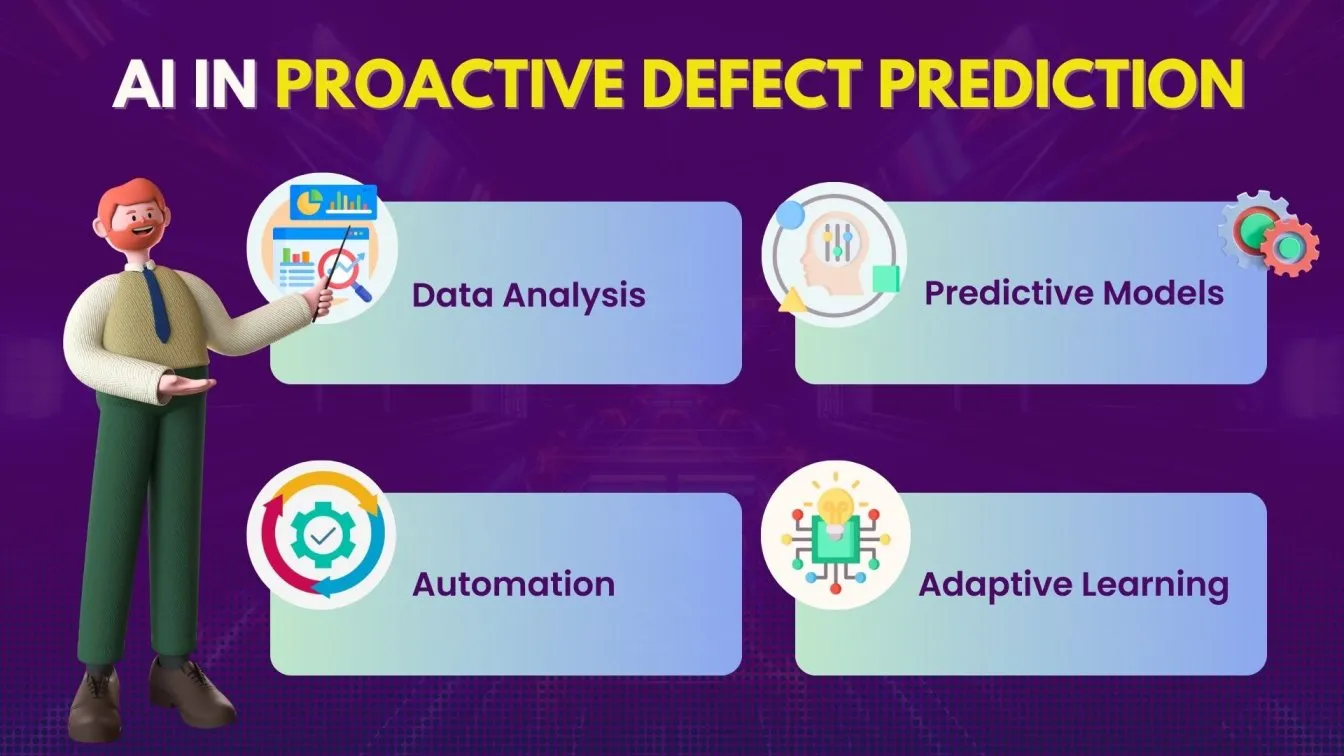

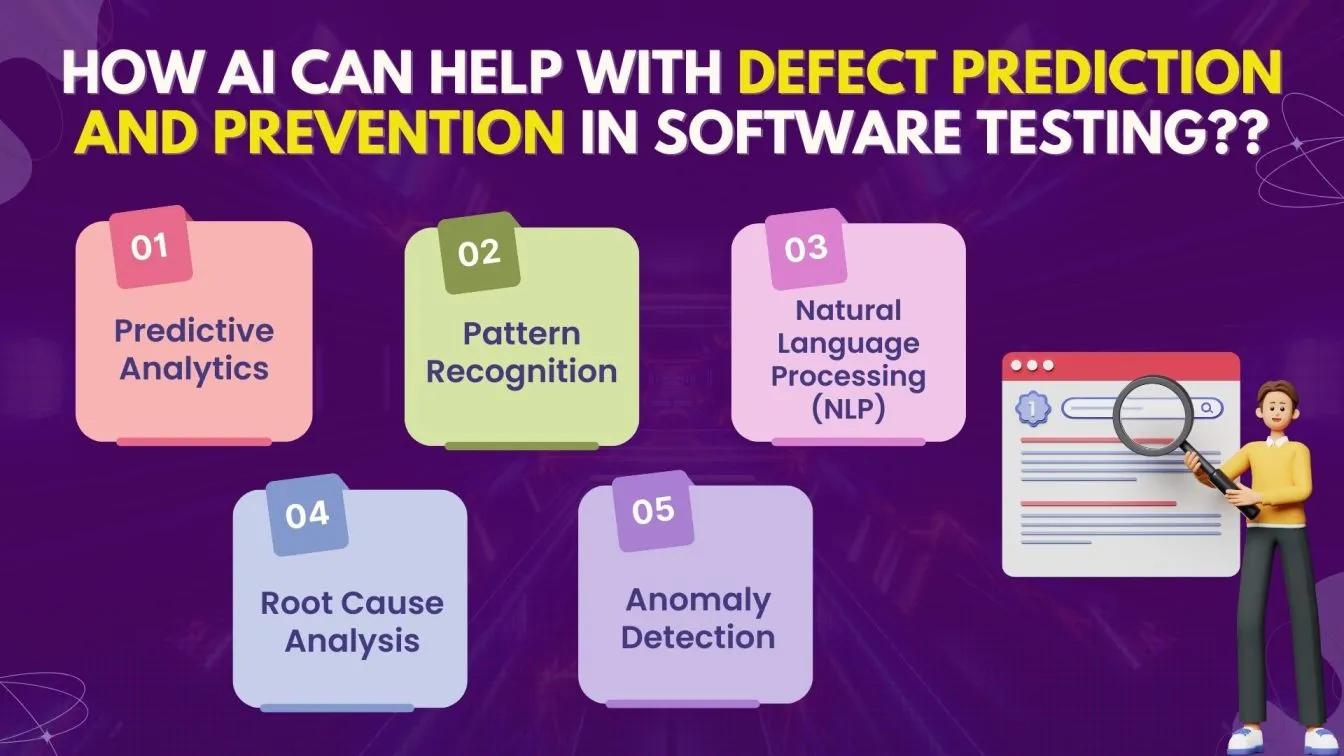

How Can AI Help With Defect Prediction and Prevention in Software Testing?

In the rapidly evolving landscape of software development, ensuring high-quality releases has become a paramount concern for organizations. Proactive defect prediction, powered by artificial intelligence (AI), emerges as a crucial strategy to enhance software testing processes.

By leveraging historical data, predictive analytics, and machine learning algorithms, AI can identify potential issues before they manifest in production. This proactive approach not only streamlines the testing phase but also significantly reduces the likelihood of costly post-release issues, thereby improving overall software quality.

AI's capabilities extend beyond mere prediction; it offers comprehensive insights that facilitate effective prevention strategies. By analyzing various factors, such as code complexity, user behavior, and historical defect patterns, AI can help teams prioritize testing efforts in high-risk areas.

Predictive Analysis

Predictive analysis plays a vital role in defect prediction and proactive defect prevention in software testing by utilizing AI-powered tools to analyze historical data and identify potential issues before they escalate. Unlike traditional testing methods, which often rely on manual testing and fixed parameters, predictive analysis employs advanced algorithms to assess a wide range of factors, including code complexity, past defect patterns, and user interactions.

This data-driven approach allows teams to prioritize their testing efforts, focusing on high-risk areas that are more likely to contain defects. By integrating predictive analysis into existing testing frameworks, organizations can enhance their testing process significantly. AI-driven testing facilitates the automation of test case generation, enabling teams to execute a more comprehensive set of tests efficiently.

The insights generated through predictive analysis empower testers to make informed decisions about where to allocate resources and how to adjust testing strategies in real time. As a result, the combination of AI-powered tools and predictive capabilities leads to improved defect detection and prevention, ultimately delivering higher-quality software products and a more streamlined development lifecycle.

Pattern Recognition

Pattern recognition plays a crucial role in defect prediction and prevention in software testing, particularly through the use of AI-powered testing tools. These AI-based software testing tools leverage advanced algorithms to analyze historical data and identify recurring patterns in code changes and defect occurrences.

AI-enhanced testing tools can analyze inconsistencies and flaky tests, helping teams address underlying issues that affect test reliability. As the AI-enabled testing market continues to grow, AI-based test automation solutions are becoming essential for proactive defect prediction, enabling teams to streamline their testing processes and ensure high-quality software throughout the development lifecycle.

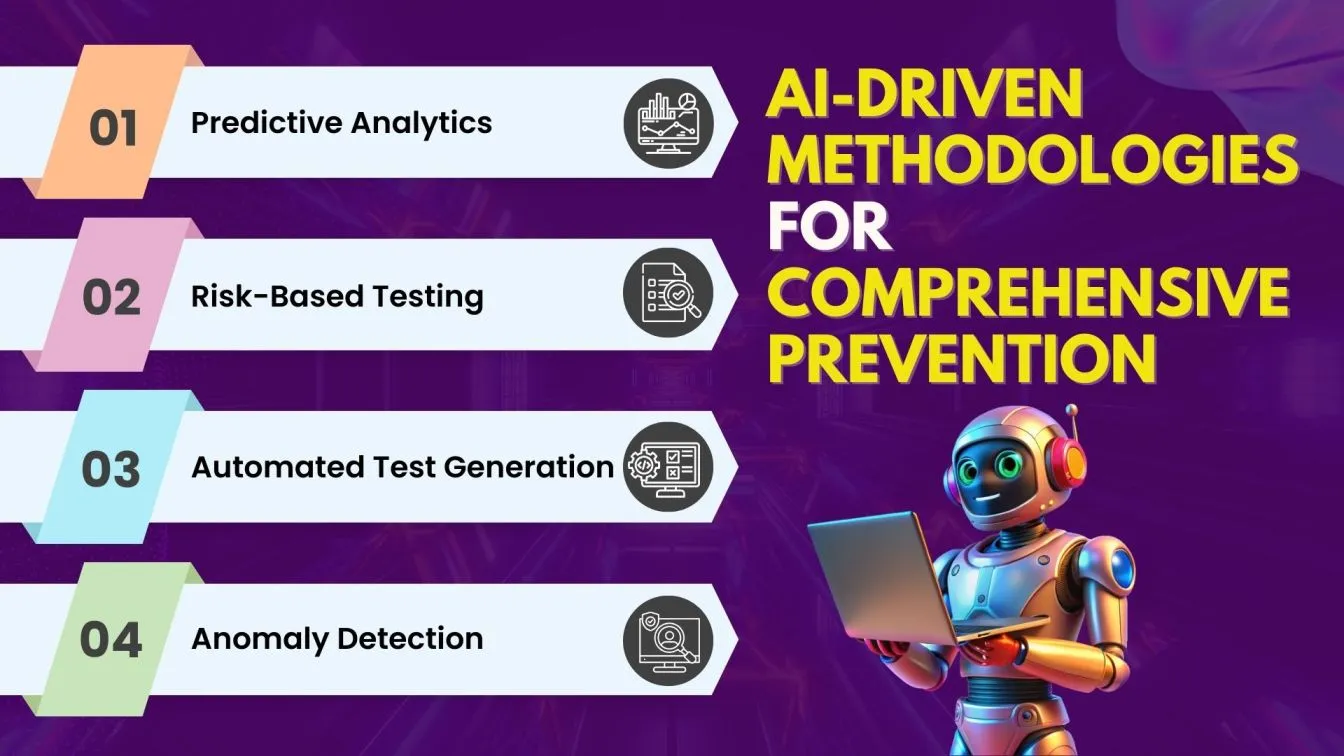

AI-Driven Methodologies for Comprehensive Prevention

In the realm of software testing, AI-driven methodologies have emerged as a transformative force for comprehensive defect prevention. By utilizing advanced algorithms and machine learning techniques, these methodologies enable teams to identify potential issues before they manifest, streamlining the software testing process and enhancing overall software quality.

The integration of AI-driven testing tools not only improves the accuracy of defect detection but also ensures comprehensive coverage across various components, ultimately leading to an improved user experience.

The analysis of high-quality data allows AI to develop proactive strategies that mitigate risks and prevent defects at various stages of the development lifecycle. This dynamic approach to quality assurance enhances the overall user experience, ensuring that the application consistently meets performance expectations and user needs.

- Predictive Analytics:

- This methodology utilizes historical data to forecast performance issues and identify high-risk areas within the codebase. By analyzing patterns and trends, teams can prioritize testing efforts on the most critical components, ensuring that potential issues are addressed before they escalate.

- Risk-Based Testing:

- AI-driven risk assessment tools evaluate the likelihood and impact of defects, allowing teams to focus their testing efforts on functionalities that are most likely to fail. This targeted approach enhances the efficiency of the testing process by maximizing coverage in high-risk areas.

- Automated Test Generation:

- AI-based test automation tools can dynamically create test cases based on code changes and user interactions. This reduces human error and ensures that the testing suite remains relevant and comprehensive as the software evolves.

- Anomaly Detection:

- Utilizing machine learning algorithms, this methodology identifies unusual patterns in system behavior that may indicate potential defects. By flagging anomalies early, teams can investigate and resolve issues proactively, reducing the chances of defects reaching production.
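As a small illustration of anomaly detection, the sketch below flags unusual test durations using the modified z-score, which is based on the median and the median absolute deviation (MAD). This is a classical statistical check rather than a trained machine learning model, and the function name, the sample durations, and the commonly used 3.5 cutoff are assumptions for this example.

```python
from statistics import median


def find_duration_anomalies(durations, threshold=3.5):
    """Return indices of durations whose modified z-score exceeds `threshold`."""
    if not durations:
        return []
    center = median(durations)
    mad = median([abs(d - center) for d in durations])
    if mad == 0:
        return []  # no spread around the median, nothing to compare against
    return [
        index
        for index, d in enumerate(durations)
        if 0.6745 * abs(d - center) / mad > threshold
    ]


# Durations in seconds for repeated runs of one test (made-up numbers).
durations = [1.1, 1.0, 1.2, 0.9, 1.1, 1.0, 6.5, 1.0]
print(find_duration_anomalies(durations))  # [6]
```

The median-based score is used instead of the mean and standard deviation because a single extreme value inflates the standard deviation and can hide itself in a small sample.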

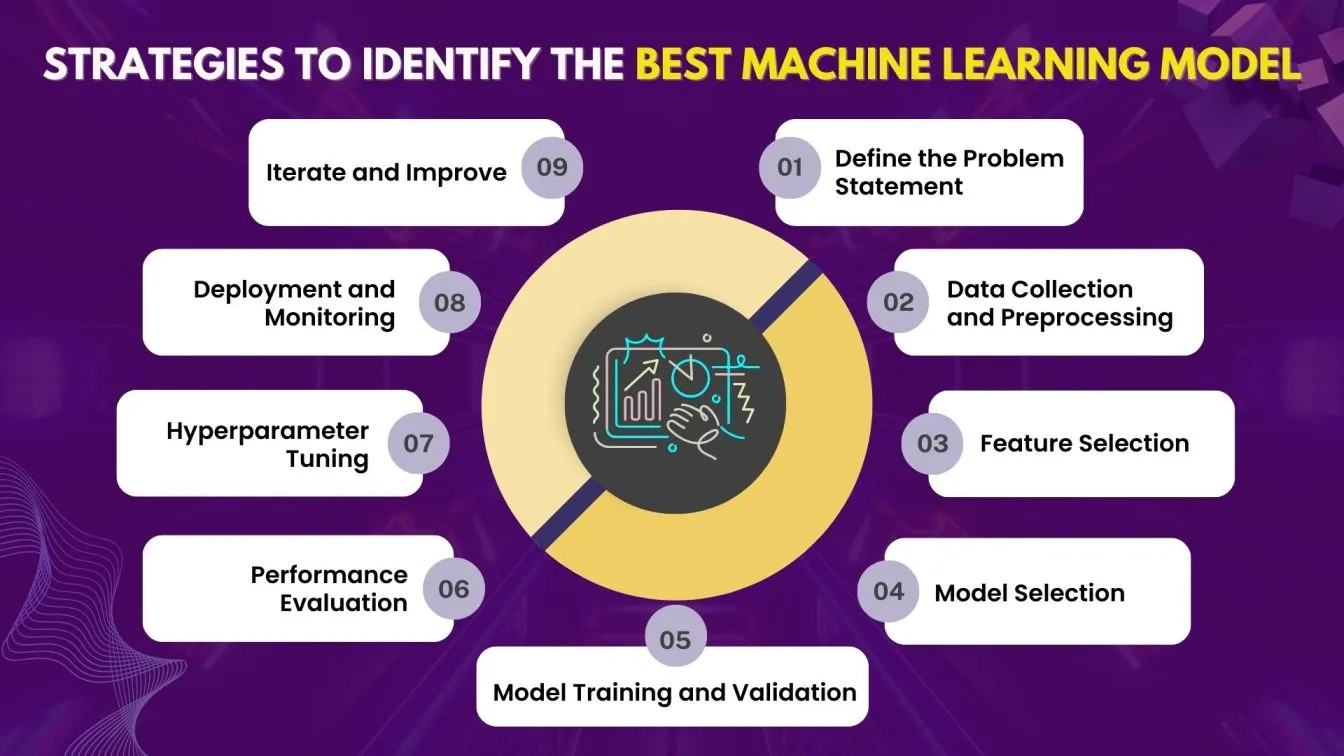

Machine Learning Models for Defect Prediction

Machine learning models have become an essential tool in software testing, used by testing teams for advanced defect prediction. AI testing tools rely on sophisticated algorithms to analyze historical data and user stories, helping ensure adequate test coverage and prevent performance bottlenecks.

Teams can also apply machine learning models to visual testing. This supports integration with automated processes, helps keep the product usable, and improves both the user interface and overall testing efficiency.

Among the machine learning models available for defect prediction, random forests are particularly effective. A random forest is an ensemble learning method that handles large datasets well and can identify complex patterns that point toward defects. Its resistance to overfitting and its ability to handle high-dimensional data make it an excellent choice for predicting software defects.
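To show the ensemble idea behind random forests, here is a deliberately tiny, standard-library-only sketch: each "tree" is a single split fitted on a bootstrap sample and one randomly chosen feature, and the forest averages the votes. A real random forest grows deeper trees and samples features at every split, and in practice you would likely reach for a library such as scikit-learn's `RandomForestClassifier`. The features (lines changed, past defects) and the data are invented for illustration.

```python
import random


def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def fit_stump(rows, labels, feature):
    """Fit a one-split tree; returns (feature, threshold, risk_below, risk_above)."""
    base_rate = sum(labels) / len(labels)
    best = (gini(labels), float("inf"), base_rate, base_rate)  # fallback: no split
    values = sorted({row[feature] for row in rows})
    for lower, upper in zip(values, values[1:]):
        threshold = (lower + upper) / 2
        left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
        right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
        impurity = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if impurity < best[0]:
            best = (impurity, threshold, sum(left) / len(left), sum(right) / len(right))
    return (feature, best[1], best[2], best[3])


def fit_forest(rows, labels, n_trees=25, seed=0):
    """Train stumps on bootstrap samples, each using one random feature."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        sample = [rng.randrange(len(rows)) for _ in rows]  # sample with replacement
        feature = rng.randrange(len(rows[0]))
        forest.append(
            fit_stump([rows[i] for i in sample], [labels[i] for i in sample], feature)
        )
    return forest


def predict_risk(forest, row):
    """Average the defect probability predicted by every stump."""
    votes = [below if row[f] <= t else above for f, t, below, above in forest]
    return sum(votes) / len(votes)


# Each row: [lines_changed, past_defects]; label 1 means a defect was found later.
modules = [[10, 0], [20, 0], [15, 1], [30, 1], [200, 4], [250, 5], [300, 6], [180, 3]]
defective = [0, 0, 0, 0, 1, 1, 1, 1]

forest = fit_forest(modules, defective)
high = predict_risk(forest, [220, 4])  # heavily changed, defect-prone module
low = predict_risk(forest, [5, 0])     # small, historically clean module
print(f"high-churn module risk: {high:.2f}, low-churn module risk: {low:.2f}")
```

Averaging many weak, differently trained trees is what gives the method its resistance to overfitting: no single sample or feature decides the prediction.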

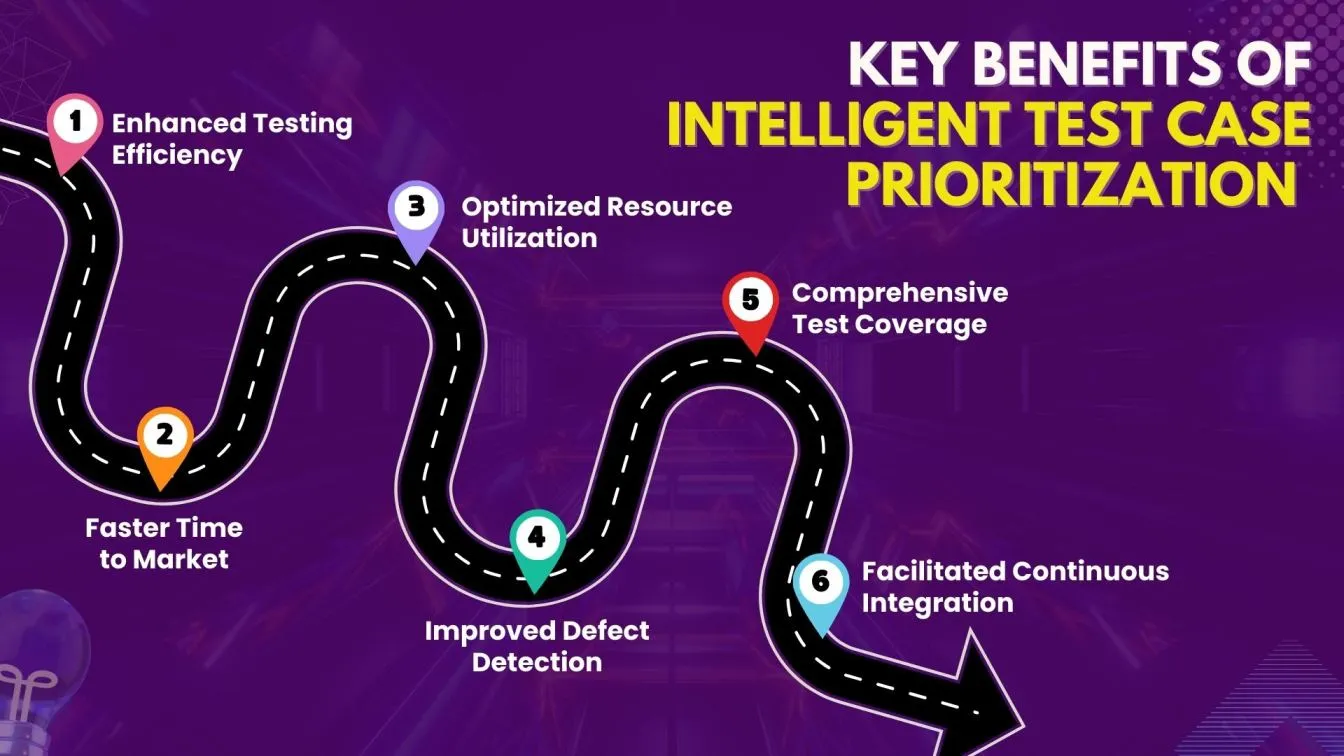

Intelligent Test Case Prioritization

Intelligent test case prioritization, backed by AI-powered automation, improves testing efficiency by running the most critical tasks and scenarios first. Effective prioritization means optimized resources, much shorter execution time, and faster time to market.

This approach not only reduces potential bottlenecks in the testing process but also ensures that the tests with the highest potential impact run earliest, leading to better quality and reliability of the software in practical use.

Intelligent automation matters in software testing because it significantly reduces execution time. Advanced algorithms identify the right test cases and the order in which to execute them, helping each software release reach the market faster.
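A simple way to picture prioritization is a score per test. The sketch below orders tests by historical failure rate per second of runtime and doubles the score of tests that cover changed files. The scoring formula, the field names, and the sample data are assumptions made for this example, and an AI-driven tool would typically learn such weights from data.

```python
def prioritize(tests, changed_files):
    """Order test names so likely, quick-to-surface failures run first."""
    changed = set(changed_files)

    def score(test):
        # Tests that exercise changed code get a boost (factor chosen arbitrarily).
        boost = 2.0 if changed & set(test["covers"]) else 1.0
        return boost * test["failure_rate"] / test["duration_s"]

    return [test["name"] for test in sorted(tests, key=score, reverse=True)]


tests = [
    {"name": "test_cart_totals", "failure_rate": 0.02, "duration_s": 4.0, "covers": ["cart.py"]},
    {"name": "test_payment_flow", "failure_rate": 0.20, "duration_s": 20.0, "covers": ["payment.py"]},
    {"name": "test_search_index", "failure_rate": 0.05, "duration_s": 5.0, "covers": ["search.py"]},
]
print(prioritize(tests, changed_files=["payment.py"]))
# ['test_payment_flow', 'test_search_index', 'test_cart_totals']
```

Dividing by duration favors cheap tests, so the suite reports its most likely failures as early in the run as possible.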

Challenges in AI Implementation

Incorporating AI-based and automated testing tools into software testing introduces a unique set of challenges for organizations. One of the biggest hurdles is integrating these tools with existing systems and workflows.

Ensuring compatibility with current processes requires careful planning, especially when introducing automated testing software tools into established systems. If the data used is incomplete, biased, or of poor quality, the efficiency of software automation testing tools is severely compromised. This makes high-quality data a critical asset for any organization utilizing AI tools for software testing.

Therefore, the deployment of Artificial Intelligence tools in testing should be strategic, not just to address technical or operational issues but also to ensure organizations are prepared to overcome cultural and procedural challenges. These organizations must be ready to embrace the benefits of testing automation tools for software testing, improving overall productivity and quality.

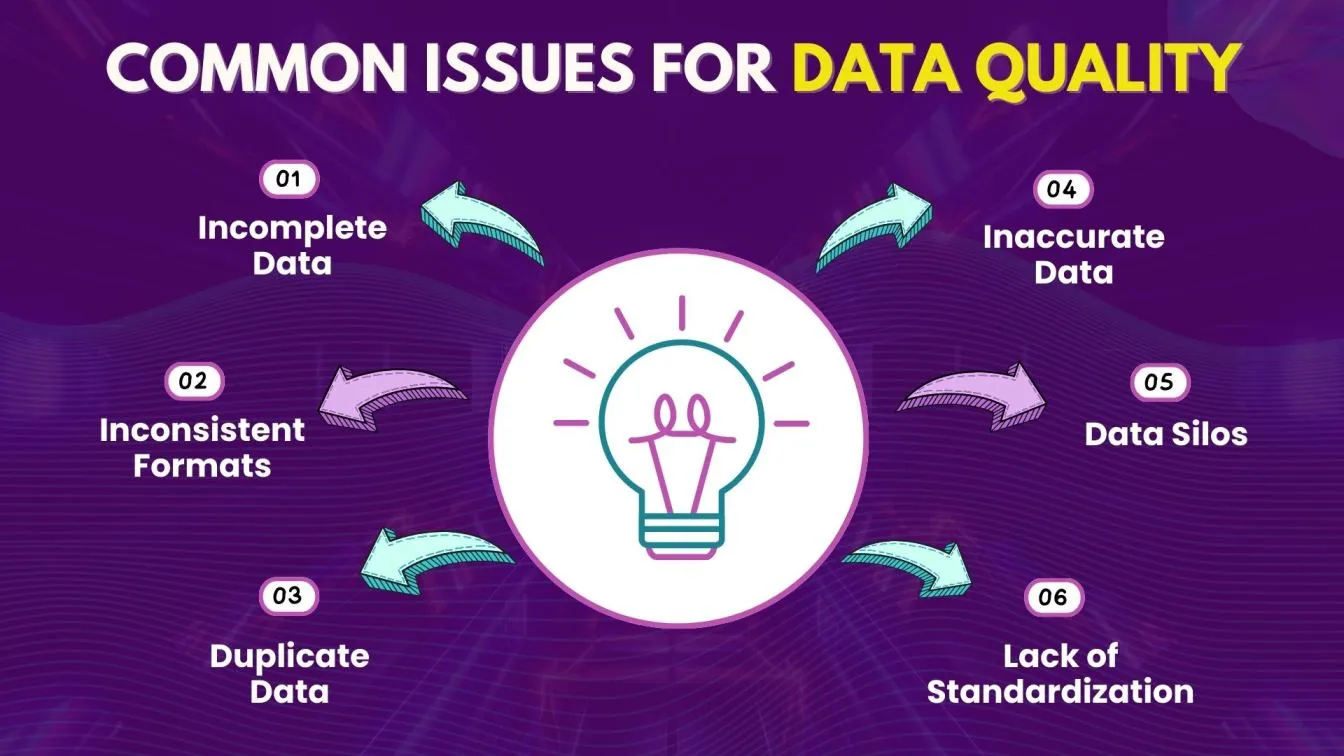

Data Quality Issues

Poor data quality is one of the main challenges in implementing AI for testing, affecting both penetration-testing software and manual testing efforts. AI-driven testing tools, whether they support A/B testing or regression testing, fundamentally rely on the quality of the data used to train and evaluate them. Poor-quality data can lead to incorrect predictions, unreliable defect detection, and ultimately faulty software releases.

Proper data governance and quality assurance are crucial in maintaining the integrity of continuous testing practices. With enhanced test coverage and faster test execution, organizations can identify performance bottlenecks early and improve overall customer satisfaction.

Organizations often struggle to keep data clean and structured when it is spread across many sources, which leads to data silos and redundancy. They must therefore put data quality management at the forefront: adopt strict validation processes for the data, institute specific data governance guidelines, and encourage data stewardship within teams.
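A strict validation step can be as simple as a gate in front of the training data. The sketch below splits defect records into clean ones and rejected ones with a reason attached. The required fields, the allowed severity values, and the record shape are assumptions for this example, so adapt them to whatever your tracker actually exports.

```python
def validate_records(records):
    """Split defect records into (clean, rejected), with a reason per reject."""
    required = ("id", "module", "severity")
    allowed_severities = {"low", "medium", "high"}
    seen_ids = set()
    clean, rejected = [], []
    for record in records:
        missing = [field for field in required if not record.get(field)]
        if missing:
            rejected.append((record, "missing: " + ", ".join(missing)))
        elif record["severity"] not in allowed_severities:
            rejected.append((record, "unknown severity"))
        elif record["id"] in seen_ids:
            rejected.append((record, "duplicate id"))
        else:
            seen_ids.add(record["id"])
            clean.append(record)
    return clean, rejected


records = [
    {"id": "BUG-1", "module": "cart", "severity": "high"},
    {"id": "BUG-1", "module": "cart", "severity": "high"},      # redundant copy
    {"id": "BUG-2", "module": "", "severity": "low"},           # incomplete
    {"id": "BUG-3", "module": "search", "severity": "urgent"},  # off-vocabulary
]
clean, rejected = validate_records(records)
print(len(clean), [reason for _, reason in rejected])
# 1 ['duplicate id', 'missing: module', 'unknown severity']
```

Keeping the rejection reasons, rather than silently dropping rows, gives data stewards something concrete to fix at the source.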

Conclusion

In this blog, we explored how AI is transforming software testing through proactive defect prediction and prevention strategies. By analyzing historical data, AI algorithms can identify potential defects early in the development process, reducing costly post-release fixes and enhancing software reliability.

We also discussed the challenges of flaky tests, which can lead to ambiguity in identifying true defects, ultimately slowing down development cycles, particularly in CI/CD pipelines.

Effective techniques to identify and manage flaky tests are essential for maintaining the integrity of automated testing. Furthermore, we examined how AI enhances the testing process by automating test case generation, improving test coverage, and accelerating delivery timelines. Despite the benefits, organizations must address issues such as data quality, system integration, and skill gaps to fully leverage AI in testing. Ultimately, AI-driven methodologies not only streamline testing efforts but also lead to better software quality through continuous learning and adaptation from previous testing cycles.

People also asked

👉 What is a proactive defect prevention technique?

This technique identifies and mitigates potential defects before they occur through practices like code reviews and static analysis. It ensures higher software quality by addressing issues early in the development cycle.

👉 What is a proactive preventive approach?

A proactive preventive approach implements strategies to mitigate risks before they result in problems. This includes early testing and continuous monitoring to prevent defects from escalating.

👉 What is the difference between proactive and reactive test strategy approaches?

Proactive test strategies focus on early detection and prevention of defects, while reactive strategies respond to defects after they arise. The former enhances quality, whereas the latter often incurs higher costs and delays.

👉 What is a proactive approach to quality improvement?

This approach involves continuously assessing and enhancing processes to prevent quality issues before they manifest. It includes regular audits and adopting best practices for ongoing improvement.

👉 What are proactive learning techniques?

Proactive learning techniques encourage individuals to anticipate knowledge needs and seek learning opportunities. This includes self-directed learning and leveraging feedback to enhance skills in advance of challenges.
